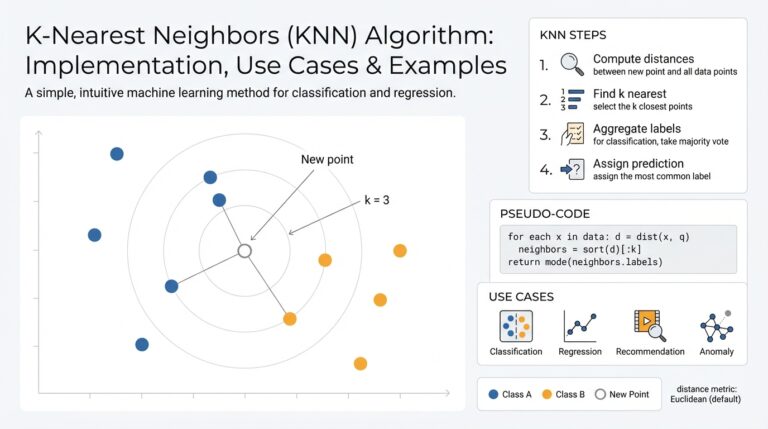

K-Nearest Neighbors (KNN) Algorithm Explained: Implementation, Use Cases & Examples

KNN Overview and Intuition

K-Nearest Neighbors (KNN) is one of the simplest yet most instructive machine learning algorithms, and understanding its intuition pays off when you design practical models. At its core, KNN is an instance-based, non-parametric method that predicts a label for a query point by looking at the