Understanding Mixture of Experts (MoE) in Large Language Models

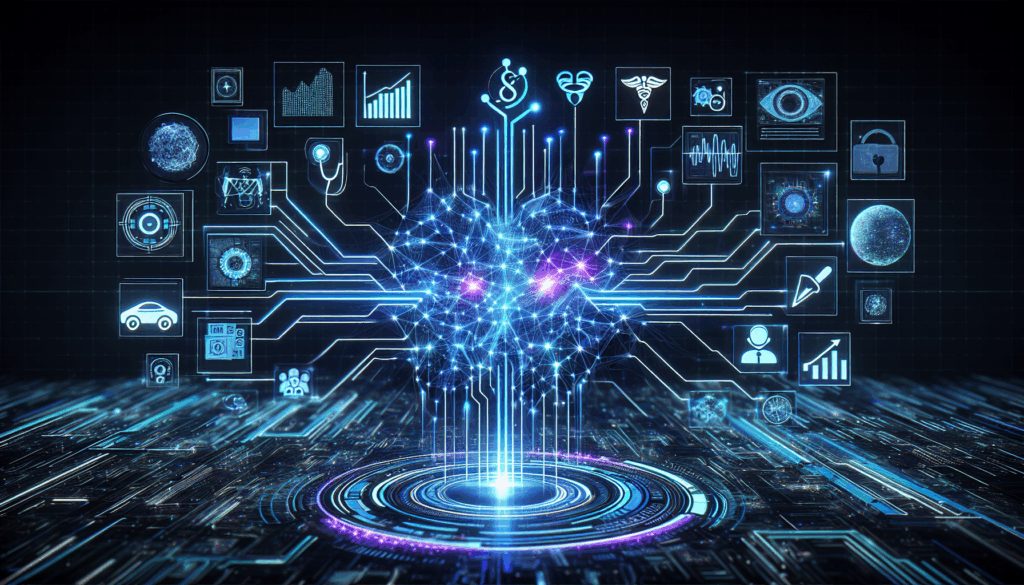

What is a Mixture of Experts (MoE) Architecture?

A Mixture of Experts (MoE) architecture is an advanced neural network design […]
