Introduction to News Source Classification

The rapid proliferation of digital news content has made it increasingly challenging to assess the credibility and origin of the information we consume. As readers demand trustworthy news, the need for reliable news source classification systems has never been greater. News source classification is a crucial task in the field of Natural Language Processing (NLP) and Machine Learning, as it aims to categorize articles based on their publication source—be it mainstream outlets, independent platforms, or even sources of misinformation.

At its core, news source classification is more complex than simple topic tagging or sentiment analysis. It involves understanding linguistic patterns, editorial styles, and the ideological slants unique to each source. The process often begins with the collection of labeled datasets, such as those available from public news source archives or platforms like NewsAPI, which provide structured news articles tagged by publication.

NLP techniques play a critical role in feature extraction, turning raw text into measurable data points. Early approaches used keyword-based methods and simple statistical models, but as challenges such as paraphrasing, bias, and noisy data emerged, more sophisticated approaches became necessary. For example, domain-specific lexicons can help identify the language habits typical of particular news organizations, as discussed in ACL research. Machine learning algorithms such as logistic regression or decision trees lay the groundwork for initial classification but often struggle to generalize across diverse, real-world data.

A deeper understanding of news source classification is vital for multiple purposes:

- Combating misinformation: By identifying the source, content platforms can more effectively flag or verify questionable stories (International Fact-Checking Network).

- Personalizing news delivery: Reader preferences for certain outlets can improve news recommendation systems (Harvard research on news personalization).

- Analyzing media bias: Automated source analysis assists researchers and the public in detecting biases or agendas embedded in news coverage (Media Bias/Fact Check).

Recent advances use deep learning and transformer models such as BERT, which capture nuanced context and semantics that traditional models struggle with. With these models, modern systems can infer an article’s likely source with greater accuracy, a direction pursued by several leading research labs (BERT by Google AI).

Ultimately, the field of news source classification serves as a foundation for ensuring media integrity in the digital age. As the landscape evolves, marrying state-of-the-art NLP tools with robust, ethical frameworks will be essential for preserving the quality of public discourse.

Traditional Baseline Approaches for News Classification

Before the surge of deep learning models like BERT in natural language processing (NLP), traditional baseline approaches played a critical role in news classification tasks. These foundational techniques, many of which are still widely used as benchmarks, have enabled researchers and practitioners to understand the unique challenges and nuances involved in categorizing news articles by topic, sentiment, or source bias.

1. Bag-of-Words (BoW) and TF-IDF

One of the earliest and most straightforward approaches is the Bag-of-Words (BoW) model. This technique treats a news article as an unordered collection of words, disregarding grammar and word order. Each article is represented as a vector, where each dimension corresponds to a word from a defined vocabulary, and the value indicates its frequency.

However, raw word count often overemphasizes common words. To address this, the Term Frequency-Inverse Document Frequency (TF-IDF) weighting scheme is used. TF-IDF reflects how important a word is to a document in relation to a corpus. This approach helps highlight keywords specific to a category or topic, making classification more accurate.

Steps to Implement:

- Tokenize articles into individual words.

- Create a vocabulary from the training data.

- Represent each article with a BoW or TF-IDF vector.

- Feed these vectors into a machine learning algorithm such as logistic regression or SVM.

Example: If a news source frequently uses the terms “parliament,” “election,” or “minister,” a classifier might learn to categorize its content as political news.
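The four steps above map closely onto a scikit-learn pipeline. The sketch below assumes scikit-learn is installed and uses a hypothetical four-article toy corpus; `TfidfVectorizer` handles tokenization, vocabulary building, and vectorization, and logistic regression is the downstream classifier.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Hypothetical toy training data: short "articles" with topic labels.
train_texts = [
    "parliament votes on the new election law",
    "the minister addressed parliament after the election",
    "the striker scored twice in the championship final",
    "the team won the league championship on penalties",
]
train_labels = ["politics", "politics", "sports", "sports"]

# TfidfVectorizer tokenizes, builds the vocabulary from the training data,
# and turns each article into a TF-IDF vector; LogisticRegression classifies it.
model = make_pipeline(TfidfVectorizer(), LogisticRegression())
model.fit(train_texts, train_labels)

# Words unseen during training ("called", "early") are simply ignored.
prediction = model.predict(["the minister called an early election"])[0]
print(prediction)
```

Swapping `LogisticRegression()` for `LinearSVC()` or `MultinomialNB()` changes only the final step, which makes this pipeline a convenient harness for trying different baselines.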

2. Naïve Bayes Classifier

A widely used probabilistic approach, Naïve Bayes assumes that features (words) are independent given the class label. Despite this simplistic assumption, it is highly effective for text classification problems, especially with well-separated classes like sports, politics, and business news.

The classifier calculates probability scores for each category based on the presence and frequency of words in the input article and then assigns the most probable label.
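Assuming the multinomial variant of Naïve Bayes (the usual choice for word counts), the decision rule can be written as follows, where $n_{w,d}$ is the count of word $w$ in article $d$, $n_{w,c}$ is its total count in training articles of class $c$, $V$ is the vocabulary, and $\alpha$ is a smoothing constant ($\alpha = 1$ gives Laplace smoothing):

```latex
\hat{c} = \arg\max_{c} \left[ \log P(c) + \sum_{w \in V} n_{w,d} \, \log P(w \mid c) \right],
\qquad
P(w \mid c) = \frac{n_{w,c} + \alpha}{\sum_{v \in V} n_{v,c} + \alpha \, |V|}
```

Working in log space avoids numerical underflow when many small word probabilities would otherwise be multiplied together.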

Steps to Implement:

- Extract BoW or TF-IDF features from articles.

- Train the Naïve Bayes classifier on labeled data, learning word distributions for each category.

- Use the learned probabilities to classify new articles.

Example: If the word “championship” appears frequently in articles labeled as sports, the model will steer future articles containing that term toward the sports category.
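A minimal sketch of these three steps with scikit-learn's `MultinomialNB`, assuming scikit-learn is installed; the corpus is a hypothetical toy example invented for illustration.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Hypothetical labeled articles (toy data for illustration only).
texts = [
    "championship final ends in dramatic win",
    "team celebrates championship title",
    "central bank raises interest rates",
    "markets fall as interest rates climb",
]
labels = ["sports", "sports", "business", "business"]

# Step 1: bag-of-words count features.
vectorizer = CountVectorizer()
X = vectorizer.fit_transform(texts)

# Step 2: learn smoothed per-class word distributions (alpha=1.0 is Laplace smoothing).
nb = MultinomialNB(alpha=1.0)
nb.fit(X, labels)

# Step 3: classify a new article; only "championship" is in the learned vocabulary.
new_doc = vectorizer.transform(["a tense championship match"])
prediction = nb.predict(new_doc)[0]
print(prediction)
```

Because “championship” occurs only in the sports articles, its smoothed probability under the sports class, (2 + 1) / (10 + 18) = 3/28, exceeds its probability under business, 1/29, which is what tips the prediction.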

3. Support Vector Machines (SVM)

Support Vector Machines are effective for high-dimensional tasks like text classification. An SVM seeks the boundary (hyperplane) that separates the feature vectors of different news categories with the widest possible margin. Because that boundary depends only on the “support vectors”, the labeled examples nearest to it, SVMs tend to generalize well with thousands of sparse, correlated text features, and the soft-margin formulation tolerates a degree of noisy or overlapping data.

Steps to Implement:

- Vectorize news articles with BoW or TF-IDF.

- Train an SVM classifier to distinguish between categories based on training data.

- Predict the class label of new articles by determining which side of the hyperplane they fall on.

Example: Given two similar-looking news stories—one about finance and one about politics—the SVM can use subtle textual cues to accurately differentiate between the two based on labeled patterns learned during training.
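To make “which side of the hyperplane” concrete, the sketch below (assuming scikit-learn and a hypothetical toy corpus) trains a linear SVM and reads the signed score w·x + b that `decision_function` returns for a two-class problem.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.svm import LinearSVC

# Hypothetical toy corpus with two categories.
texts = [
    "stocks rally as quarterly earnings beat forecasts",
    "investors weigh bond yields and earnings",
    "senate debates the budget bill",
    "lawmakers vote on the budget in the senate",
]
labels = ["finance", "finance", "politics", "politics"]

vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(texts)
svm = LinearSVC(C=1.0)  # soft-margin linear SVM; C trades margin width for training errors
svm.fit(X, labels)

# For two classes, decision_function returns w·x + b: a positive score falls on
# the classes_[1] side of the hyperplane, a negative score on the classes_[0] side.
new = vectorizer.transform(["earnings season lifts stocks"])
score = svm.decision_function(new)[0]
side = svm.classes_[1] if score > 0 else svm.classes_[0]
print(f"score={score:.3f}, side={side}, predict={svm.predict(new)[0]}")
```

`predict` applies exactly this sign rule, so `side` and the predicted label always agree; with more than two categories, scikit-learn's `LinearSVC` trains one hyperplane per class (one-vs-rest) instead.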

4. Evaluating Baselines

Baseline approaches like these are invaluable as reference points. They establish a minimum standard for performance, allowing newer models to demonstrate tangible improvements. According to Stanford’s CS124: Text Classification notes, comparing different models on the same baseline features helps highlight the benefits of more advanced NLP techniques when handling complex news data.
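One way to compare baselines on the same features is sketched below, assuming scikit-learn and a hypothetical twelve-article toy corpus: every classifier receives an identical TF-IDF step and is scored with the same 3-fold cross-validation.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC

# Hypothetical toy corpus: six articles per class.
politics = [
    "parliament passes the budget", "minister resigns after vote",
    "election results announced today", "senate debates a new law",
    "opposition criticizes the minister", "voters head to the polls",
]
sports = [
    "striker scores a late winner", "team wins the championship",
    "coach praises young players", "record crowd at the final",
    "goalkeeper saves a penalty", "league season kicks off",
]
texts = politics + sports
labels = ["politics"] * len(politics) + ["sports"] * len(sports)

models = {
    "naive_bayes": MultinomialNB(),
    "logistic_regression": LogisticRegression(),
    "linear_svm": LinearSVC(),
}

# Each model gets an identical TfidfVectorizer, refit inside every fold, so score
# differences come from the classifier rather than from the features.
scores = {
    name: cross_val_score(make_pipeline(TfidfVectorizer(), clf), texts, labels, cv=3).mean()
    for name, clf in models.items()
}
for name, score in scores.items():
    print(f"{name}: mean accuracy {score:.2f}")
```

On a corpus this small the numbers mean little; the point is the shared protocol, which carries over unchanged to a real labeled news dataset.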

In conclusion, traditional methods like BoW, TF-IDF, Naïve Bayes, and SVMs provide the backbone of early news classification systems. They are fast, interpretable, and surprisingly competitive in many contexts—making them vital stepping stones for anyone entering the world of news source classification.

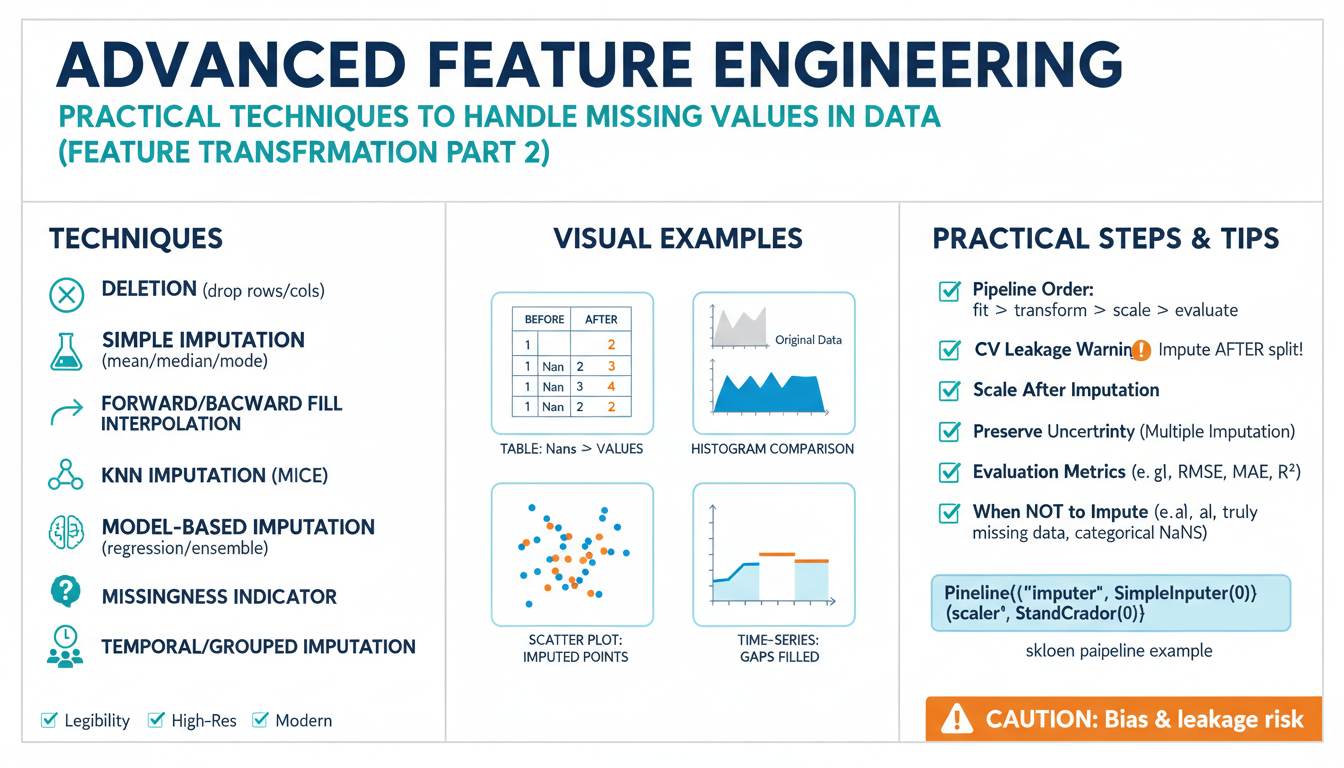

Feature Engineering: Text Preprocessing and Representation

Before applying machine learning (ML) or advanced natural language processing (NLP) models to classify news sources, it’s crucial to transform raw text into a structured format machines can interpret. This process—known as feature engineering—starts with meticulous text preprocessing and then moves to selecting effective ways to represent text numerically. Proper execution of these steps can significantly impact the predictive power of your classification models.

Why Text Preprocessing Matters

Raw news articles are noisy: they come with headlines, bylines, HTML tags, special characters, and irregular formats. Preprocessing aims to clean and normalize this data, making it both consistent and more informative for downstream models. Typical preprocessing steps include:

- Tokenization: Breaking text into sentences or words (tokens). For example, “Fake news spreads fast” becomes [“Fake”, “news”, “spreads”, “fast”]. Learn more about tokenization from the Stanford NLP Group.

- Lowercasing: Converting text to lowercase to avoid treating “News” and “news” differently.

- Stop-word Removal: Eliminating common words like “the”, “is”, and “and” that carry little meaning for classification. Find more about stop words at Scikit-learn.

- Punctuation and Special Character Removal: Cleaning text further by stripping out non-alphanumeric symbols.

- Stemming and Lemmatization: Reducing words to their base forms. For instance, “running” becomes “run”. You can explore more about this topic at GeeksforGeeks.

Proper preprocessing reduces complexity and boosts the signal-to-noise ratio, making it easier for algorithms to discover patterns specific to certain news sources.
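The cleaning steps above can be sketched with the Python standard library alone. This is a minimal illustration, not a production pipeline: the stop-word list is a tiny hypothetical sample, tokenization is plain whitespace splitting, and stemming or lemmatization is left out (libraries such as NLTK provide `PorterStemmer` and `WordNetLemmatizer` for that step).

```python
import html
import re

# Hypothetical, deliberately tiny stop-word list; real projects use a fuller one.
STOP_WORDS = {"the", "is", "and", "a", "an", "of", "to", "in"}


def preprocess(raw: str) -> list[str]:
    text = re.sub(r"<[^>]+>", " ", raw)       # strip HTML tags
    text = html.unescape(text)                # decode entities such as &amp;
    text = text.lower()                       # lowercasing
    text = re.sub(r"[^a-z0-9\s]", " ", text)  # remove punctuation and special characters
    tokens = text.split()                     # simple whitespace tokenization
    return [t for t in tokens if t not in STOP_WORDS]  # stop-word removal


print(preprocess("<p>Fake News spreads <b>fast</b> &amp; the truth is slow!</p>"))
# ['fake', 'news', 'spreads', 'fast', 'truth', 'slow']
```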

Representing Text for Machine Learning

Once the text is clean, it needs to be represented in a way that machine learning models can understand. There are several effective approaches:

1. Bag-of-Words (BoW)

BoW is a simple yet powerful technique. It creates a vocabulary of all unique words and represents each document by a vector of word counts. For instance, with the vocabulary “fake”, “news”, “spreads”, “fast”, the article “fake news spreads fast” is represented as [1, 1, 1, 1]. BoW ignores word order, focusing solely on word occurrence.
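A quick check with scikit-learn's `CountVectorizer`, assuming the four-word vocabulary “fake”, “news”, “spreads”, “fast” is fixed in that order; the second sentence is a made-up example showing how repeated and out-of-vocabulary words are handled.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Fixing the vocabulary makes the column order explicit: fake, news, spreads, fast.
vectorizer = CountVectorizer(vocabulary=["fake", "news", "spreads", "fast"])
X = vectorizer.fit_transform(["fake news spreads fast", "news about news spreads"])

# "about" is not in the vocabulary, so it is dropped; "news" is counted twice.
print(X.toarray())
# [[1 1 1 1]
#  [0 2 1 0]]
```

Without the `vocabulary` argument, `CountVectorizer` learns the vocabulary from the data and orders columns alphabetically.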

2. TF-IDF (Term Frequency-Inverse Document Frequency)

TF-IDF refines BoW by not just counting how often a word appears, but also considering its rarity across the entire dataset. This helps highlight words that are more relevant for distinguishing between sources. For an in-depth explanation, check out this guide from Towards Data Science.
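The sketch below computes inverse document frequency by hand and checks it against scikit-learn's `TfidfVectorizer`. It assumes scikit-learn's default smoothed formula, idf(t) = ln((1 + n) / (1 + df(t))) + 1; other libraries and textbooks use slightly different variants. The three documents are made up.

```python
import math

from sklearn.feature_extraction.text import TfidfVectorizer

docs = ["fake news spreads fast", "real news travels slowly", "news news news"]
n = len(docs)


def idf(term: str) -> float:
    """Smoothed IDF, matching scikit-learn's default smooth_idf=True."""
    df = sum(term in d.split() for d in docs)  # number of documents containing the term
    return math.log((1 + n) / (1 + df)) + 1


# norm=None disables L2 normalization so raw tf * idf weights are visible.
vec = TfidfVectorizer(norm=None)
X = vec.fit_transform(docs)
vocab = vec.vocabulary_

for term in ["news", "fake"]:
    print(term, round(idf(term), 3), round(vec.idf_[vocab[term]], 3))
```

“news” appears in every document, so it gets the minimum IDF of 1.0, while “fake” (one document) gets about 1.693: the rarer word carries more weight for telling documents apart.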

3. Word Embeddings

Embedding methods such as Word2Vec or GloVe map words to dense vectors (typically a few hundred dimensions, far fewer than a BoW vocabulary) that capture semantic relationships. For example, “article” and “story” tend to have similar vectors. Embeddings are foundational for modern NLP techniques, especially in deep learning applications.
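To show how embeddings are used once learned, here is a sketch with hypothetical, hand-made 4-dimensional vectors standing in for trained Word2Vec or GloVe output. It measures similarity with cosine similarity and builds a simple document representation by averaging word vectors, a common baseline for feeding embeddings to a classifier.

```python
import numpy as np

# Hypothetical toy embeddings; real ones are learned from large corpora
# and typically have 100-300 dimensions.
EMBEDDINGS = {
    "article": np.array([0.9, 0.1, 0.0, 0.2]),
    "story": np.array([0.8, 0.2, 0.1, 0.2]),
    "election": np.array([0.0, 0.9, 0.3, 0.1]),
    "banana": np.array([0.1, 0.0, 0.9, 0.7]),
}
DIM = 4


def cosine(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def doc_vector(tokens: list[str]) -> np.ndarray:
    """Average the vectors of in-vocabulary tokens; unknown words are skipped."""
    vecs = [EMBEDDINGS[t] for t in tokens if t in EMBEDDINGS]
    return np.mean(vecs, axis=0) if vecs else np.zeros(DIM)


print(round(cosine(EMBEDDINGS["article"], EMBEDDINGS["story"]), 3))   # high
print(round(cosine(EMBEDDINGS["article"], EMBEDDINGS["banana"]), 3))  # low
print(doc_vector(["election", "story", "unknownword"]))
```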

4. Contextual Embeddings

The advent of transformer models like BERT has revolutionized text representation. Unlike static embeddings, BERT generates dynamic word representations that vary based on context. For instance, “bank” in “river bank” is represented differently than in “bank account.” These models are pretrained on vast corpora and can be fine-tuned for news classification, often with strong results.
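The toy below illustrates why contextual representations differ for the same word. It is not BERT: random vectors stand in for static embeddings, and a single parameter-free self-attention step replaces the learned query, key, and value projections, multiple heads, and stacked layers that BERT actually uses. Even so, the input vector for “bank” is identical in both sentences while the output vector is not.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = ["river", "bank", "account", "the", "open"]
# Hypothetical static embeddings: exactly one fixed vector per word.
static = {w: rng.normal(size=8) for w in vocab}


def self_attend(X: np.ndarray) -> np.ndarray:
    """One parameter-free self-attention step: each output row is a weighted
    average of all input rows, with weights softmax(X X^T / sqrt(d))."""
    scores = X @ X.T / np.sqrt(X.shape[1])
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ X


def contextual_vector(sentence: str, word: str) -> np.ndarray:
    tokens = sentence.split()
    X = np.stack([static[t] for t in tokens])
    return self_attend(X)[tokens.index(word)]


v1 = contextual_vector("the river bank", "bank")
v2 = contextual_vector("open the bank account", "bank")
print(np.allclose(v1, v2))  # False: same word, different context, different vector
```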

Conclusion

Effective feature engineering—through preprocessing and thoughtful text representation—lays the foundation for any successful news source classification. As NLP and ML continue to evolve, the tools for extracting meaningful features from raw text are becoming ever-more sophisticated, driving improved accuracy and reliability in real-world applications. To further dive into these methods, consider resources by Machine Learning Mastery and Google’s machine learning guides.

Applying Classic Machine Learning Algorithms

When it comes to identifying and classifying news sources, classic machine learning algorithms offer a robust starting point before jumping into more advanced deep learning models. These algorithms are well-documented, computationally efficient, and often provide surprisingly strong results for tasks like fake news detection, source classification, or sentiment analysis.

1. Data Preprocessing and Feature Engineering

Before diving into classic algorithms, the text data must be preprocessed and transformed into features that machines can understand. Steps usually include:

- Tokenization: Breaking text into individual words or terms.

- Stop Words Removal: Filtering out common words (“the,” “and,” etc.) that add little discriminative value.

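- Stemming/Lemmatization: Reducing words to their root forms. A lemmatizer can map “running,” “runs,” and “ran” to “run,” while a rule-based stemmer handles “running” and “runs” but typically leaves irregular forms such as “ran” unchanged.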

- Vectorization: Converting text into numerical representations using methods like TF-IDF (Term Frequency-Inverse Document Frequency) or bag-of-words.

This preprocessing ensures that algorithms can examine meaningful patterns in news articles rather than being distracted by irrelevant text features.

2. Applying Classic Algorithms: An Overview

Among classic machine learning algorithms, several stand out for text classification:

- Naive Bayes Classifiers: Naive Bayes works well with word-count features. It assumes that words are conditionally independent given the class, which makes training and prediction fast and often yields strong accuracy on tasks like spam filtering or author/source classification. For a deeper exploration, review the seminal research on Naive Bayes for text classification.

- Support Vector Machines (SVM): SVMs find the optimal line (in higher dimensions, a hyperplane) to separate different news categories. They’re notable for handling high-dimensional data like word counts and offer robustness even with sparse text representations. The scikit-learn documentation provides hands-on examples and practical guidelines.

- Logistic Regression: Despite its simplicity, logistic regression is a powerful linear classifier for binary or multi-class problems. It’s prized for its interpretability, revealing which words most influence the prediction for a news source.

- Decision Trees and Random Forests: Decision trees split data based on feature thresholds. Combining many trees into an ensemble (a random forest) reduces the overfitting that single trees are prone to and often yields strong baselines on engineered text features.

3. Evaluating and Interpreting Results

Model performance should be evaluated using appropriate metrics like accuracy, F1-score, and confusion matrices. Holdout validation (splitting data into training and test sets) or k-fold cross-validation is standard for reliable performance estimates. For more on evaluation strategies, refer to the Machine Learning Mastery overview on performance measures.
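A sketch of holdout evaluation with scikit-learn, assuming a hypothetical corpus from two invented outlets with different house styles. A stratified split keeps both outlets in the test set, and the confusion matrix and macro-averaged F1 are computed on held-out articles only.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, confusion_matrix, f1_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline

# Hypothetical corpus: two invented outlets with different house styles.
topics = ["taxes", "schools", "housing", "trade", "energy", "health", "transport", "water"]
texts = [f"officials confirmed the {t} report according to sources" for t in topics]
texts += [f"you will not believe the shocking truth about {t}" for t in topics]
labels = ["outlet_a"] * len(topics) + ["outlet_b"] * len(topics)

# Holdout validation: a stratified 75/25 split (4 test articles, 2 per outlet).
X_train, X_test, y_train, y_test = train_test_split(
    texts, labels, test_size=0.25, stratify=labels, random_state=42
)
model = make_pipeline(TfidfVectorizer(), LogisticRegression()).fit(X_train, y_train)
y_pred = model.predict(X_test)

cm = confusion_matrix(y_test, y_pred, labels=["outlet_a", "outlet_b"])
macro_f1 = f1_score(y_test, y_pred, average="macro")
print(cm)  # rows: true outlet, columns: predicted outlet
print(classification_report(y_test, y_pred, zero_division=0))
print(f"macro F1: {macro_f1:.2f}")
```

For k-fold cross-validation instead of a single split, `cross_val_score(model, texts, labels, cv=5, scoring="f1_macro")` from `sklearn.model_selection` follows the same pattern.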

Feature importance analysis—especially using coefficients in logistic regression or feature importances in random forests—can help understand which terms or phrases are most indicative of certain sources. This interpretability is crucial for trust and transparency, especially in news contexts where bias and accountability matter greatly (Harvard Data Science Review).

4. Limitations and Next Steps

While classic algorithms often provide strong baselines, they usually require extensive feature engineering and may struggle with nuanced linguistic phenomena like sarcasm or context. To address these, more advanced natural language processing (NLP) approaches—such as word embeddings or transformer-based models—can be introduced after establishing solid benchmarks with classic methods.

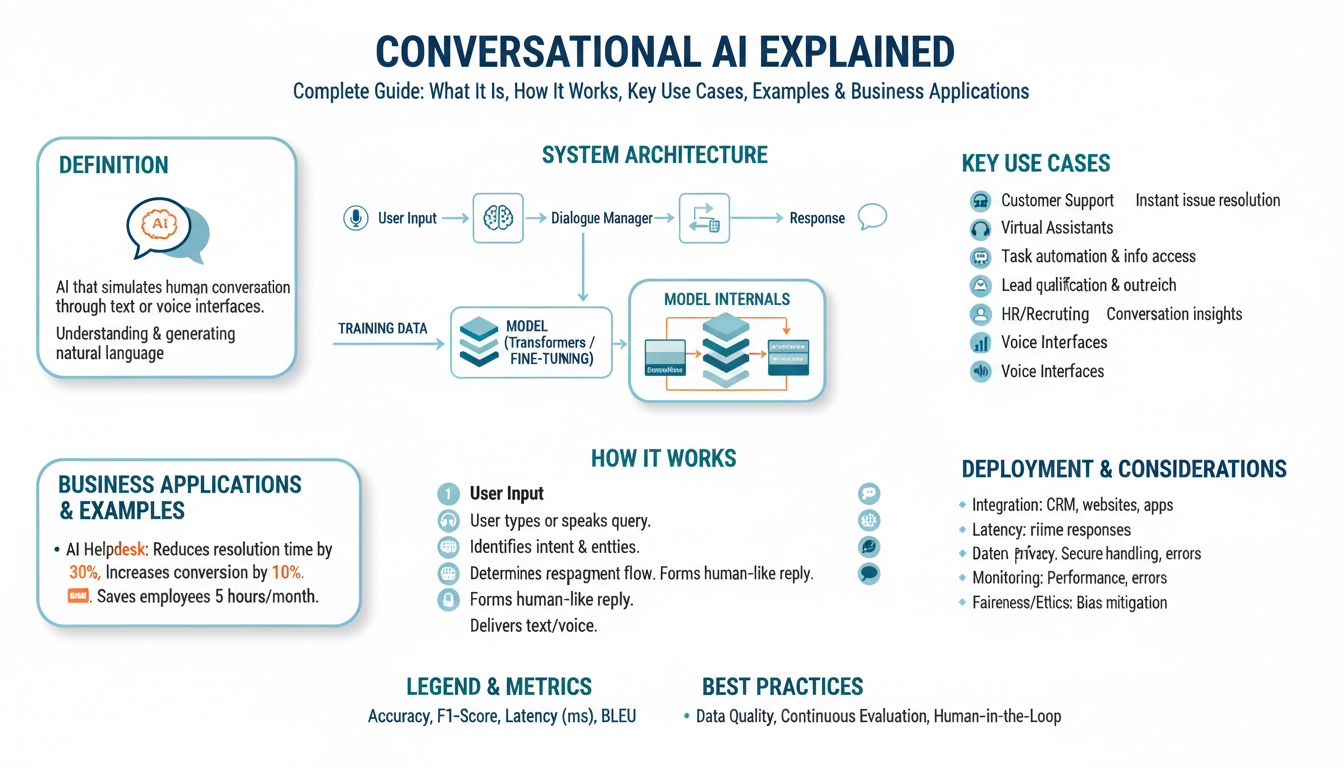

Introduction to BERT and Transformer-Based Models

Transformer-based models have dramatically changed the landscape of Natural Language Processing (NLP), offering large gains in accuracy and flexibility across a wide range of language tasks, news source classification included. At the heart of this shift is BERT (Bidirectional Encoder Representations from Transformers), developed by Google in 2018. Unlike earlier language models that read text in a single direction, BERT uses a transformer architecture that interprets each word in light of everything around it (bidirectional context). This allows BERT to capture the intricacies of language far more effectively than traditional methods.

The original BERT paper introduced a way of pre-training representations that conditions on both left and right context in all layers. It achieves this with a masked language modeling objective: some input tokens are hidden, and the model learns to predict them from the words on both sides. The transformer underneath is built on self-attention, which lets the model weigh the importance of every other word in the sequence when representing a given word. This makes it possible to capture complex dependencies, such as those needed to recognize a news article’s bias or factual reporting style.
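The self-attention computation described above is usually written as scaled dot-product attention, the standard formulation from the Transformer paper “Attention Is All You Need”. Here $X$ is the $n \times d$ matrix of token embeddings, $W_Q$, $W_K$, $W_V$ are learned projection matrices, and $d_k$ is the dimension of the key vectors:

```latex
\begin{aligned}
Q &= X W_Q, \qquad K = X W_K, \qquad V = X W_V \\
\operatorname{Attention}(Q, K, V) &= \operatorname{softmax}\!\left( \frac{Q K^{\top}}{\sqrt{d_k}} \right) V
\end{aligned}
```

Each row of the softmax matrix sums to 1, so every output vector is a weighted average of the value vectors; BERT runs several such attention heads in parallel in each layer.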

Here’s how transformer-based models fundamentally operate:

- Tokenization and Embedding: Incoming text (like a news article) is split into subword units (tokens), each mapped to a high-dimensional vector. In BERT, these token vectors are summed with position and segment embeddings to form the input embeddings.

- Self-Attention Mechanism: The model examines all tokens in a sentence simultaneously, determining which words are most important to each other for the given task. For example, in distinguishing news from an independent outlet versus a partisan blog, the model might focus on words indicating subjectivity or loaded phrasing.

- Multiple Transformer Layers: Stacked layers of attention further refine this understanding, enabling the model to learn subtle cues spanning long text passages.

- Pre-training and Fine-tuning: BERT is first pre-trained on large text corpora with self-supervised objectives (masked language modeling and next-sentence prediction), then fine-tuned on smaller, task-specific datasets (such as labeled news sources).
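The subword tokenization in the first step can be sketched as greedy longest-match-first splitting in the style of BERT's WordPiece. This is a simplified illustration under stated assumptions: the vocabulary is a hypothetical six-entry toy (real BERT vocabularies hold roughly 30,000 entries), and the real tokenizer also splits punctuation and caps word length before this step.

```python
# Hypothetical toy vocabulary; "##" marks a piece that continues a word.
VOCAB = {"mis", "##information", "spread", "##s", "fast", "news"}


def wordpiece_tokenize(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first split of a single word into subword pieces."""
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while start < end:  # try the longest remaining substring first
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # non-initial pieces carry the continuation prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece matched: the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


def encode(text: str, vocab: set[str]) -> list[str]:
    """Lowercase, split on whitespace, and wrap with BERT's special markers."""
    tokens = ["[CLS]"]
    for word in text.lower().split():
        tokens.extend(wordpiece_tokenize(word, vocab))
    tokens.append("[SEP]")
    return tokens


print(encode("Misinformation spreads fast", VOCAB))
# ['[CLS]', 'mis', '##information', 'spread', '##s', 'fast', '[SEP]']
```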

Let’s consider an example: imagine you want to classify a batch of news articles by their reliability. Traditional bag-of-words or baseline models might rely heavily on the frequency of certain keywords. However, a transformer-based model can instead learn to identify trustworthy sources by understanding context, style, and subtle signaling in the text—far beyond simple keyword spotting.

These gains have been validated across multiple benchmarks. BERT set new state-of-the-art results on benchmarks such as GLUE (Google AI Blog), and it has since been fine-tuned for specialized tasks such as fake news detection and author style identification.

Ultimately, transformer-based models have elevated our ability to classify news not just on surface features, but by engaging with deeper representational structures of language. Whether you’re building a simple classifier or deploying advanced stance detection, understanding BERT and related transformer models is now an essential step to leveraging machine learning for high-stakes NLP applications. For a deeper dive, excellent resources such as The Illustrated BERT, ELMo, and co. provide accessible walkthroughs into the inner workings of these models.

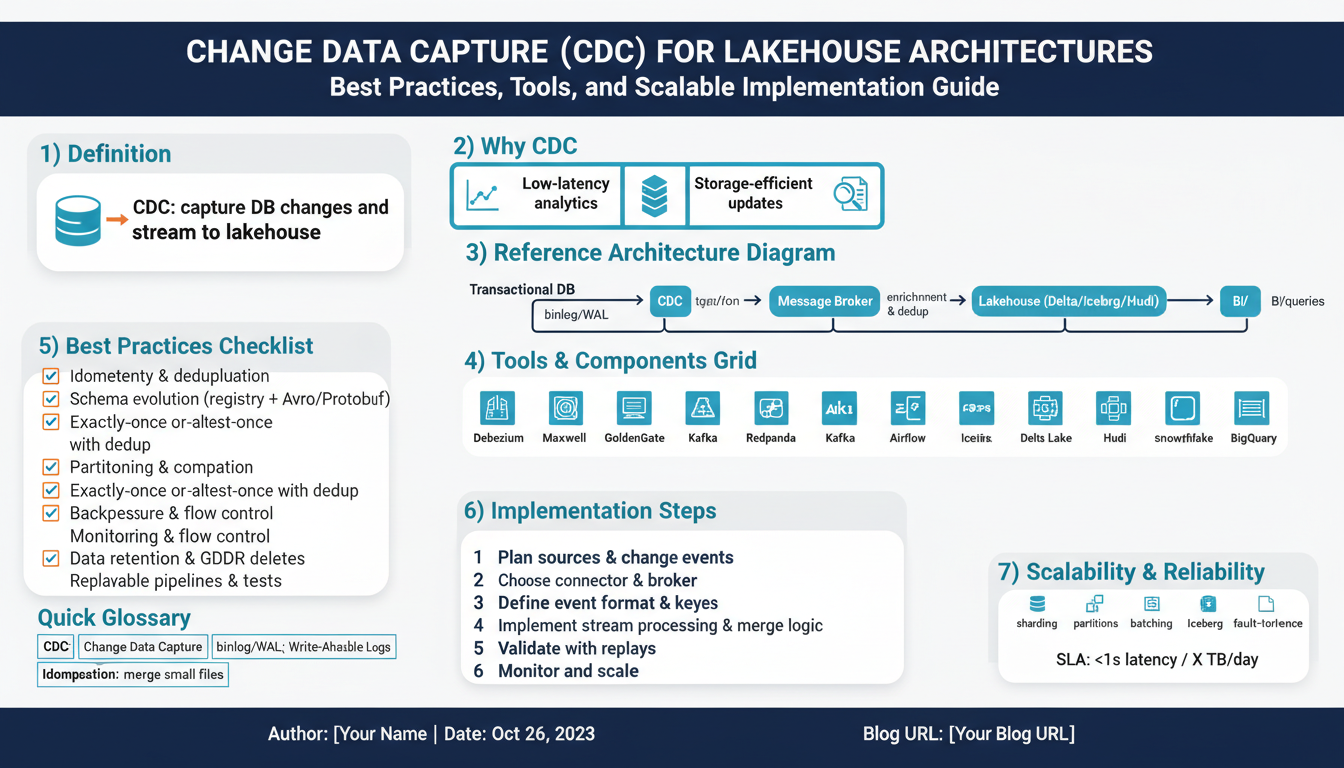

Performance Comparison: Baselines vs. BERT

Evaluating the effectiveness of news source classification models is crucial to understanding their real-world applicability and impact. Traditionally, baseline models like Naive Bayes, Support Vector Machines (SVM), and logistic regression have been employed due to their simplicity and efficiency. These models typically rely on bag-of-words representations or TF-IDF features to map text to vectors. While these approaches are computationally efficient and interpretable, they often struggle to capture deeper contextual nuances and semantic meaning within the text.

Let’s break down how performance is typically assessed and explore how BERT, a modern transformer-based model, outperforms classical baselines:

1. Baseline Models: Fast and Simple, But Limited

Baseline models process news articles by extracting features such as word frequencies or n-grams. For example, a Naive Bayes classifier may use the probability of word occurrence conditioned on the source label, making predictions based on the words’ likelihood. Similarly, SVM and logistic regression learn linear boundaries in high-dimensional feature space.

However, these models usually miss out on:

- Word order and syntax: Bag-of-words baselines treat each word (or short n-gram) as an independent feature, discarding longer-range structure and context.

- Ambiguity and semantics: Homonyms and polysemous terms can confuse models lacking deeper language understanding.

Despite these limitations, such models set a foundational “bar” of performance and remain useful for rapid prototyping or when data and resources are limited. Their reported accuracy typically ranges from 70% to 85% on well-formed datasets, depending heavily on the data and the number of sources, and it tends to degrade on nuanced or adversarial texts (reference).

2. The BERT Advantage: Contextual Understanding and Robust Accuracy

BERT (Bidirectional Encoder Representations from Transformers), introduced by Google Research (arXiv), revolutionizes text classification by employing attention mechanisms to model context bidirectionally. Unlike baselines, BERT understands each word in relation to all others in a sentence, enabling a richer grasp of meaning and intent.

Deploying BERT for news source classification involves:

- Tokenization: Converting sentences into BERT-compatible tokens that include word fragments and special markers.

- Embedding Generation: Generating deep contextualized representations for each token, capturing the influence of surrounding words.

- Fine-tuning: Training the model on a labeled dataset, optimizing for accurate source prediction based on nuanced patterns.

The result is often a substantial jump in performance. Benchmarks reported on the Google AI Blog and in subsequent peer-reviewed studies show BERT-based classifiers frequently exceeding 90% accuracy on standard news datasets and outperforming baselines by significant margins, especially on harder cases involving sarcasm, negation, and subtle bias.

3. Real-World Impacts and Considerations

The leap in performance from baseline models to BERT has immediate practical benefits:

- Higher reliability under diverse linguistic conditions.

- Improved detection of subtle misinformation and source-specific narratives.

- Greater adaptability to evolving language dialects and genres.

However, it’s important to weigh the trade-offs: BERT models require significantly more computational resources and expertise for training and deployment, which may not be feasible for all organizations. Careful consideration should be given to dataset quality, bias mitigation, and ongoing model maintenance (fast.ai analysis).

In conclusion, while baseline models still hold value for their speed and simplicity, BERT represents a powerful advance, enabling much more reliable and sophisticated classification of news sources. For most production applications, the performance advantages of BERT are hard to ignore, making it a leading choice for modern media analysis workflows.