Redefining Natural Language Understanding: Where We Are Now

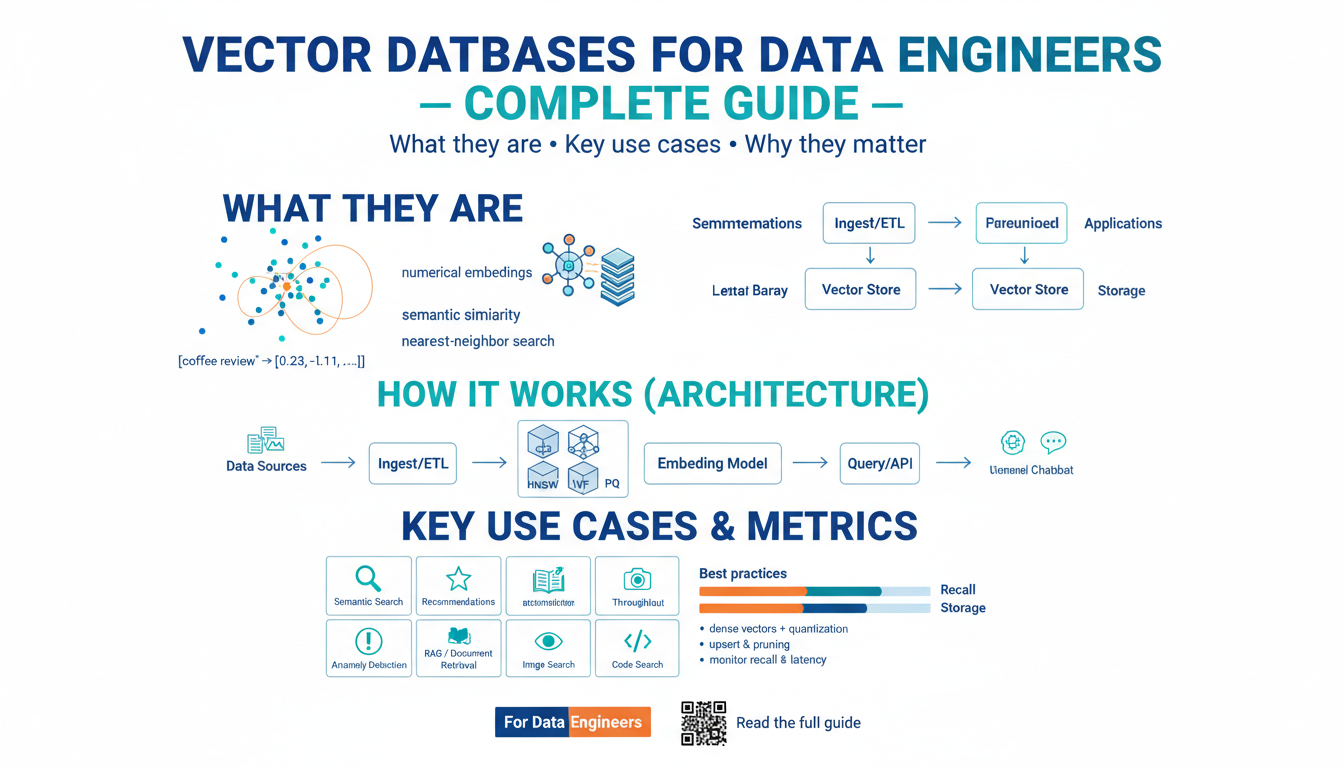

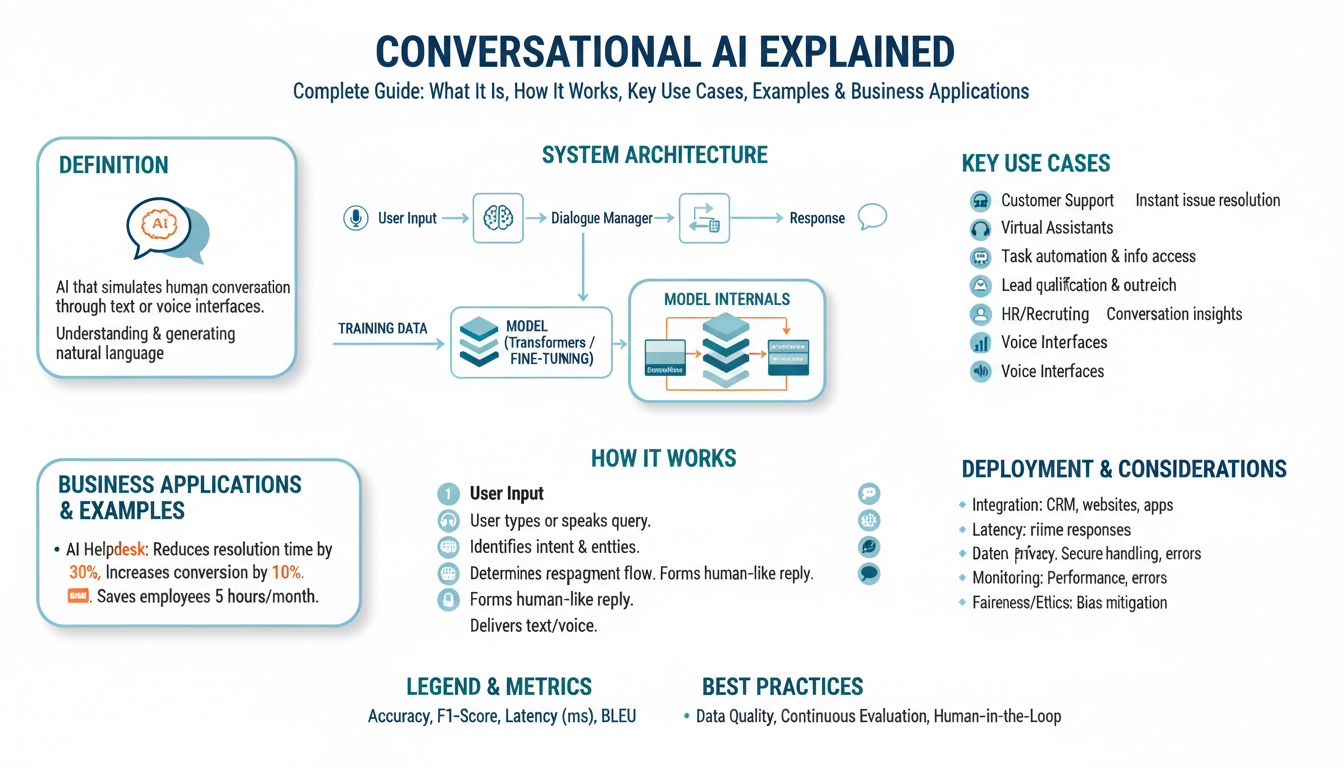

Conversational AI has progressed rapidly in recent years, evolving far beyond rule-based chatbots and simple voice assistants. At the heart of this evolution is Natural Language Understanding (NLU), the branch of artificial intelligence focused on enabling machines to comprehend and process human language in all its richness and complexity. With the emergence of transformer-based models, NLU now achieves feats that were once considered science fiction.

Modern conversational AI systems leverage NLU frameworks to parse, interpret, and even generate nuanced human responses. These systems are not limited to understanding basic commands; they can grasp context, sentiment, and subtle variations in intent. Models such as Google’s BERT and OpenAI’s GPT-4 have changed how digital assistants handle ambiguity and context. A user asking “Can you book me a table for two at 7pm?” is understood as wanting to make a reservation, and a system with access to prior conversation history can also draw on a previously mentioned location or cuisine preference and adapt its tone accordingly.

One critical step has been the move towards contextual language models. Earlier models operated on a single sentence or statement, failing to connect the dots over longer interactions. Today’s conversational AI draws on the conversation history, referencing previous questions and answers to maintain a coherent thread. This has opened doors for applications in mental health, customer support, and education, where aligning with the user’s ongoing narrative is essential.
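To make the idea of carrying conversation history concrete, here is a minimal sketch of a bounded history buffer. The `Conversation` class, its `max_turns` window, and the prompt layout are illustrative assumptions, not how any particular assistant is implemented.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps the most recent turns so each reply can see prior context."""

    max_turns: int = 6  # hypothetical window size
    turns: deque = field(default_factory=deque)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        # Drop the oldest turns once the window is full.
        while len(self.turns) > self.max_turns:
            self.turns.popleft()

    def build_prompt(self, user_message: str) -> str:
        """Join the remembered turns with the new message, oldest first."""
        lines = [f"{role}: {text}" for role, text in self.turns]
        lines.append(f"user: {user_message}")
        return "\n".join(lines)


chat = Conversation(max_turns=4)
chat.add("user", "Can you book me a table for two at 7pm?")
chat.add("assistant", "Sure. Which restaurant?")
prompt = chat.build_prompt("The Italian place we talked about.")
print(prompt)
```

Because the earlier turns travel with the new message, a downstream model has what it needs to resolve a reference like “the Italian place.” The fixed window is one simple way to cap context size; real systems may summarize or retrieve older turns instead.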

Another breakthrough in NLU is the handling of multilingual and cross-domain conversations. Advanced models can be trained on many languages at once, allowing more consistent performance across languages and dialects without extensive per-language retraining. Models like Facebook’s XLM and Google’s Multilingual BERT demonstrate the ability to engage users globally, making NLU more inclusive and accessible than ever before.

Finally, integrating external knowledge sources—such as encyclopedic data, structured databases, and real-time content—has redefined what conversational AI can achieve. By connecting to knowledge graphs and semantic networks, AI can reference facts, understand cultural nuances, and provide informed opinions. This step towards knowledge-grounded conversation turns digital agents into true virtual collaborators rather than simple query responders.

Despite these advances, the journey is ongoing. Challenges remain in fully interpreting sarcasm, managing highly domain-specific jargon, and blending ethical considerations into conversations. Yet, the progress so far points to a future where interacting with AI feels indistinguishable from conversing with another human being—a goal that is now coming into sharper focus as new breakthroughs continue to emerge.

Emerging Multimodal AI: Combining Voice, Vision, and Text

As conversational AI rapidly advances, the integration of multiple sensory inputs—namely voice, vision, and text—is forging the next generation of truly intelligent digital assistants. Unlike traditional AI chatbots, which primarily process text, these multimodal models can understand and respond to a much richer tapestry of data, dramatically expanding the potential use cases and elevating user experiences.

Understanding Multimodal AI

Multimodal AI refers to systems that process and combine different types of input, such as speech, imagery, and written language, to generate contextually appropriate responses. By allowing AI to see, hear, and read simultaneously, researchers are overcoming the constraints of single-channel intelligence. For example, Google DeepMind’s Gemini project and OpenAI’s recent advancements now enable AI to interpret complex visual scenes, engage in natural spoken dialogue, and provide nuanced answers by synthesizing multisensory information.

How Voice, Vision, and Text Combine

- Voice: By incorporating automatic speech recognition (ASR), AI can capture and interpret spoken language, adding tone, emotion, and real-time communication to its capabilities. This technology powers digital assistants like Amazon Alexa and Google Assistant but is now being supercharged with more natural, context-aware dialogue flows. For a technical overview, see Google AI’s multimodal work.

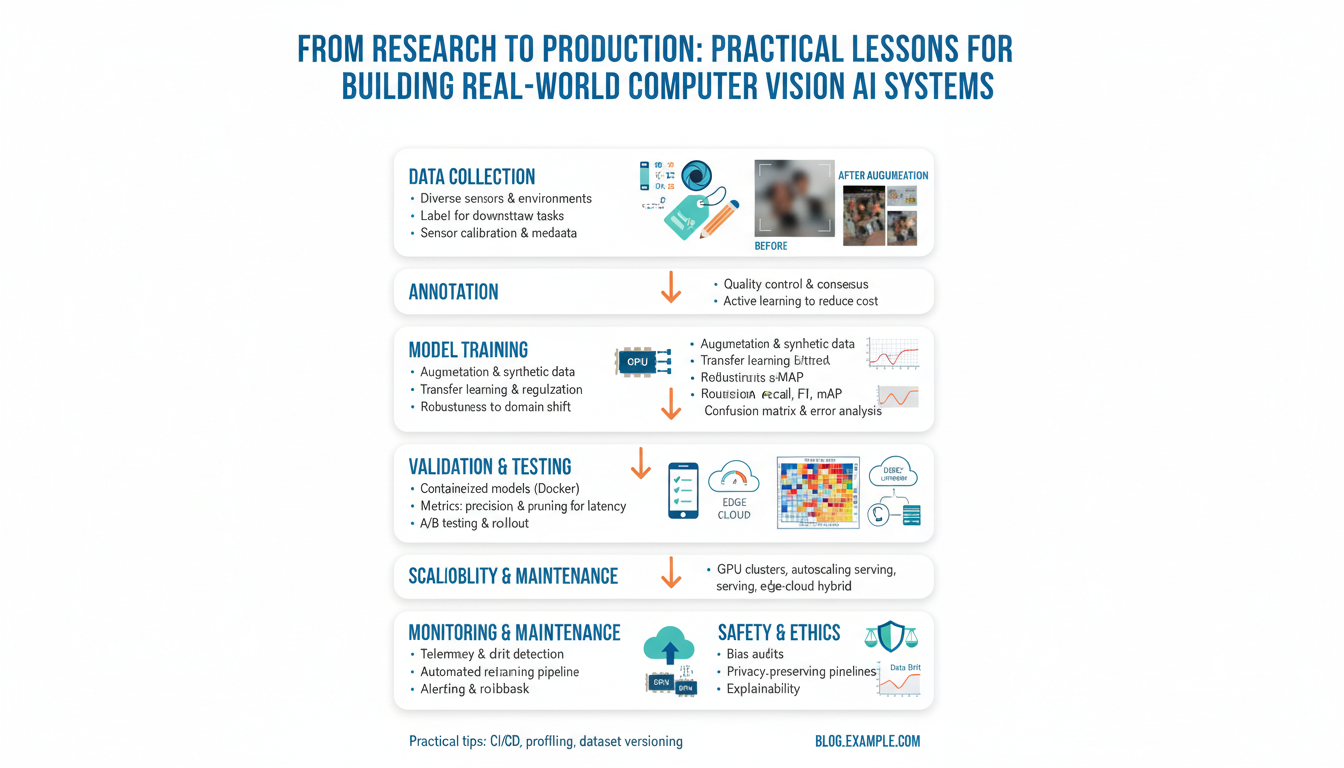

- Vision: Computer vision allows AI to “see” images and interpret visual information—whether it’s reading a menu, analyzing objects in a photo, or recognizing a face during a video chat. New models, such as those developed by Microsoft Research, deliver integrated image and language understanding, enabling richer, more intuitive user interactions.

- Text: Natural Language Processing (NLP) remains the backbone of conversation, parsing written input and constructing coherent, context-relevant responses. When fused with speech and visual cues, text-based AI becomes a more holistic companion, able to clarify ambiguous requests and maintain conversational context across different formats.
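One simple way to picture how the three channels come together is late fusion: each modality is first reduced to text by its own model, and the results are merged into one context. The sketch below assumes those upstream steps already exist; `MultimodalTurn`, its field names, and the bracketed tags are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MultimodalTurn:
    typed_text: Optional[str] = None
    speech_transcript: Optional[str] = None  # assumed output of an ASR step
    image_caption: Optional[str] = None      # assumed output of a vision step


def fuse(turn: MultimodalTurn) -> str:
    """Merge whichever modalities are present into one tagged context string."""
    parts = []
    if turn.speech_transcript:
        parts.append(f"[spoken] {turn.speech_transcript}")
    if turn.image_caption:
        parts.append(f"[image] {turn.image_caption}")
    if turn.typed_text:
        parts.append(f"[typed] {turn.typed_text}")
    return "\n".join(parts)


turn = MultimodalTurn(
    typed_text="Is this dish vegetarian?",
    image_caption="A restaurant menu listing pasta dishes",
)
context = fuse(turn)
print(context)
```

Models that are multimodal end to end learn a joint representation rather than concatenating text, so this is only a conceptual stand-in for the combination step.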

Real-World Applications and Examples

- Healthcare: A doctor could upload a patient’s scanned X-ray while discussing symptoms aloud with a digital assistant. The AI parses the speech, analyzes the image, cross-references the patient’s electronic health records, and generates diagnostic suggestions. For deeper insights, Nature Medicine discusses the transformative impact of multimodal AI in clinical diagnostics.

- Customer Support: Modern virtual agents can now “see” screenshots or documents shared by users, “hear” the customer’s inquiry via voice, and “read” chat logs—all at once, streamlining issue resolution. This leap is covered by McKinsey in their analysis of AI-driven customer experience.

- Education: Imagine a student verbally describing a math problem, uploading a photo of their worksheet, and asking follow-up questions via text. The AI’s ability to process all these modalities allows for more personalized tutoring solutions, improving engagement and outcomes. For examples of such educational applications, consult Stanford Graduate School of Education.

Challenges and the Path Forward

While the promise of multimodal AI is immense, challenges such as data privacy, interpretability, and bias remain. Integrating diverse data streams without losing nuance requires sophisticated neural architectures and access to large, high-quality datasets. As noted by leading experts in Nature, ongoing research aims to overcome these hurdles by developing more robust, transparent models and improving dataset curation.

By combining voice, vision, and text, emerging multimodal AI is poised to revolutionize how we interact with intelligent systems—unlocking new opportunities in communication, professional workflows, and personal assistance.

Personalized Conversational Agents: Tailoring Responses to Users

As conversational AI continues to evolve, one of the most exciting advancements is the development of personalized conversational agents. Unlike traditional chatbots that deliver generic, one-size-fits-all responses, these next-generation agents are designed to tailor their interactions based on a deeper understanding of individual users. This hyper-personalization is redefining user engagement and setting new standards for digital interaction.

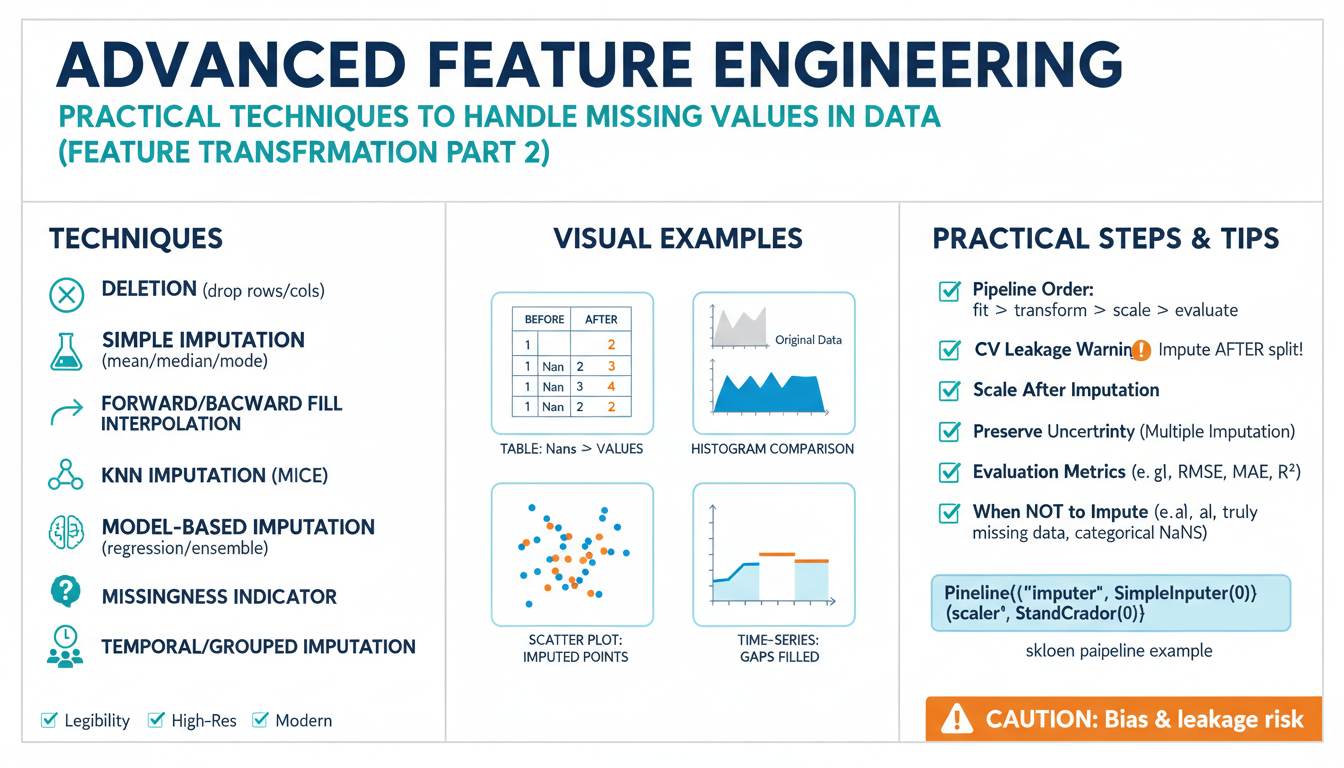

The power of personalized conversational agents comes from their ability to analyze and interpret vast amounts of user data—ranging from previous conversations to contextual cues, preferences, and behavioral patterns. By utilizing machine learning and advanced natural language processing (NLP) algorithms, these agents can remember user preferences, adapt their tone, and even predict future needs. For instance, a financial assistant can recall past transactions to offer budget-friendly tips, or a virtual healthcare provider can reference prior health data for more informed recommendations. To dive deeper into how machine learning achieves this, check out this insightful article from ScienceDirect.

One critical technology behind this tailored experience is user profiling, which involves building dynamic and secure models based on every interaction. Such profiling allows conversational agents to deliver truly bespoke responses. For example:

- Dynamic Tone Adjustment: If a user frequently responds formally, the agent adapts its replies to match that tone, creating a more comfortable and relatable conversation.

- Contextual Recall: Agents remember prior topics discussed, enabling smoother follow-on conversations without requiring the user to repeat themselves. This is especially useful in mental health support apps, where conversational continuity is crucial for effective care. Research on this can be found in the National Institutes of Health (NIH) repository.

- Anticipation of Needs: By analyzing patterns, agents can anticipate what resources or information the user might need next, creating a proactive rather than reactive experience. Retail chatbots, for instance, can suggest products based on a customer’s browsing history and seasonal trends.
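The first two behaviors above, tone adjustment and contextual recall, can be sketched with a small profile object. Everything here is an illustrative assumption: the `FORMAL_MARKERS` word list, the 50% threshold, and the `UserProfile` and `greet` names are invented for the example, and a production agent would likely use a learned classifier rather than keyword matching.

```python
from dataclasses import dataclass, field

# Illustrative cue words only; not a validated formality lexicon.
FORMAL_MARKERS = {"regards", "sincerely", "please", "kindly", "would"}


@dataclass
class UserProfile:
    formal_messages: int = 0
    total_messages: int = 0
    topics: list = field(default_factory=list)

    def observe(self, message: str, topic: str) -> None:
        """Update the profile from one user message and its (given) topic."""
        words = {w.strip(".,!?").lower() for w in message.split()}
        self.total_messages += 1
        if words & FORMAL_MARKERS:
            self.formal_messages += 1
        if topic not in self.topics:
            self.topics.append(topic)

    def prefers_formal(self) -> bool:
        # Hypothetical rule: formal if at least half the messages looked formal.
        if self.total_messages == 0:
            return False
        return self.formal_messages / self.total_messages >= 0.5


def greet(profile: UserProfile) -> str:
    """Match the user's tone and recall the most recent topic, if any."""
    opener = "Good day." if profile.prefers_formal() else "Hey!"
    if profile.topics:
        return f"{opener} Shall we continue with {profile.topics[-1]}?"
    return f"{opener} How can I help?"


profile = UserProfile()
profile.observe("Would you kindly review my budget, please?", "budgeting")
profile.observe("Thanks", "savings goals")
greeting = greet(profile)
print(greeting)
```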

However, this level of personalization comes with challenges, chiefly around data privacy and ethical considerations. Building trust requires transparent policies and robust data protection measures. Leading tech companies are proactively addressing these issues; for best practices and guidelines, refer to this comprehensive overview from The Brookings Institution. Ensuring users are aware of how their data is used, and securing their explicit consent, remains paramount. Techniques such as federated learning are also being adopted: models are trained on users’ own devices, and only model updates, not the raw personal data, are sent back for aggregation (Google AI Blog).
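As a rough illustration of the federated idea, the sketch below runs federated averaging on a toy one-parameter model. The client datasets, learning rate, and function names are all made up for the example; it is not the method of any specific product. Each client fits `y ≈ weight * x` on its own data, and the server sees only the resulting weights.

```python
def local_update(weight, data, lr=0.05, epochs=20):
    """Train on one client's private data and return only the new weight."""
    for _ in range(epochs):
        # Gradient of mean squared error for the model y = weight * x.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(global_weight, clients, rounds=5):
    """Average client weights, weighted by how many samples each client holds."""
    for _ in range(rounds):
        updates = [(local_update(global_weight, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        global_weight = sum(w * n for w, n in updates) / total
    return global_weight


# Toy private datasets; every point follows y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]
learned = federated_average(0.0, clients)
print(round(learned, 4))
```

The raw `(x, y)` pairs never leave `local_update`. Shared weights can still leak information, so deployed systems typically layer further protections such as secure aggregation or differential privacy on top.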

In practice, user-centered AI design is already making a mark. Major platforms like digital banking apps and health management systems leverage conversational agents that adjust advice and reminders based on individual behavior and feedback. Virtual learning environments, too, are using adaptive agents to provide tailored tutoring, helping students progress at their own pace. A detailed exploration of such educational technologies can be found at TecScience.

Ultimately, personalized conversational agents represent a major leap forward in AI usability. By focusing on individual needs and preferences, these agents deliver meaningful interactions that foster loyalty and satisfaction. As the field advances, expect to see even more sophisticated strategies for personalizing digital experiences, driving the next wave of innovation in conversational AI.

Real-Time Emotion Recognition and Responsiveness

As conversational AI continues to mature, real-time emotion recognition and responsiveness are quickly becoming defining features that set advanced systems apart. Unlike earlier iterations of virtual assistants, emerging AI platforms harness sophisticated algorithms capable of interpreting the full spectrum of human emotion—enabling deeper, more empathic interactions.

Modern emotion recognition relies on a combination of natural language processing (NLP), voice analysis, and even computer vision. By parsing subtle vocal cues, word choices, facial expressions, and contextual patterns, AI systems can infer whether a user is happy, frustrated, confused, or anxious. For example, Microsoft’s Azure Cognitive Services employs speech analysis to detect sentiment and mood in real time, adjusting its responses accordingly. You can read more about these capabilities from Microsoft’s Emotion Recognition Lab.

This real-time feedback loop fundamentally improves user experience. For instance, if a customer’s tone of voice signals annoyance during a chatbot conversation, the AI could proactively escalate the interaction to a human agent or employ more empathetic language to help resolve the issue. Companies like Affectiva, an MIT Media Lab spin-off, are pioneering this space with emotionally intelligent AI that analyzes facial and vocal signals—visit their technical documentation at Affectiva Emotion AI for in-depth examples.

To implement emotional responsiveness effectively, several steps are essential:

- Core Signal Detection: Identify which data streams (text, audio, video) will be analyzed. For instance, analyzing word choice with a text-based service such as IBM’s Watson Tone Analyzer (which IBM has since retired) can reveal frustration or excitement in written messages, while voice tone and pitch require a separate audio-analysis model.

- Real-time Processing Pipelines: Integrate APIs and machine learning models capable of interpreting emotional signals instantly. This might involve training neural networks on large, diverse datasets to recognize cultural and individual emotional nuances.

- Dynamic Conversational Adjustments: Build adaptive dialog flows. When negative emotions are detected, the chatbot can slow down, clarify, or offer a supportive response, much like an intuitively responsive human.

- Privacy and Ethics: Given the sensitivity of emotion recognition, it’s crucial to comply with ethical guidelines and data privacy laws. The Google Responsible AI Practices page offers insight into developing ethical AI interactions.
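The detection and adjustment steps above can be sketched as a tiny text-only pipeline. The cue-word list, the `ESCALATE_AFTER` threshold, and the action names are hypothetical placeholders; a real system would use trained models across text, audio, or video rather than keyword matching.

```python
# Illustrative cue words only; not a validated emotion lexicon.
NEGATIVE_CUES = {"angry", "annoyed", "frustrated", "ridiculous", "useless", "upset"}
ESCALATE_AFTER = 2  # hypothetical: consecutive negative turns before handoff


def detect_emotion(text: str) -> str:
    """Very rough signal detection: flag a message that contains a cue word."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return "negative" if words & NEGATIVE_CUES else "neutral"


def choose_action(history: list) -> str:
    """Pick a dialog adjustment from the user's most recent messages."""
    streak = 0
    for message in reversed(history):
        if detect_emotion(message) != "negative":
            break
        streak += 1
    if streak >= ESCALATE_AFTER:
        return "escalate_to_human"
    if streak == 1:
        return "empathetic_reply"
    return "standard_reply"


print(choose_action(["Where is my order?"]))
print(choose_action(["Hi", "I am frustrated.", "This is ridiculous!"]))
```

Counting consecutive negative turns, rather than reacting to a single message, is one way to avoid escalating on a one-off remark; the right threshold would need tuning against real conversations.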

Real-world examples highlight the transformative power of emotion-aware AI. In mental health apps, such as Woebot, real-time sentiment analysis helps identify users in distress and modulate conversations to offer immediate reassurance or recommend professional support. In customer service, a virtual agent’s awareness of rising customer frustration can trigger timely interventions, decreasing churn and improving satisfaction.

Ultimately, real-time emotion recognition isn’t just a technical milestone—it marks a fundamental step toward AI that understands not only our words, but the feelings behind them. This leap paves the way for more authentic digital experiences, fostering trust and engagement that were once the exclusive domain of human-to-human interactions. To explore further, consider reading Harvard’s summary on the future of emotionally intelligent machines at Harvard Business Review.

Integrating Conversational AI into Everyday Life and Industries

As conversational AI rapidly matures, its applications stretch far beyond digital assistants, finding their way into nearly every aspect of our lives and transforming industries. This next wave is not just about chatting with smarter bots—it’s about seamless integration, where AI becomes a reliable companion in daily routines and professional workflows.

Smart Homes, Smarter Living

Conversational AI is now woven deep into the fabric of modern homes. Beyond controlling lights or setting reminders, these systems manage energy consumption, adjust home environments based on preferences, and provide proactive suggestions. For instance, AI-powered voice assistants like Amazon Alexa, Google Assistant, and Apple’s Siri now integrate with smart home platforms to create personalized experiences. A system equipped with tone analysis could, for example, dim the lights or suggest a meditation playlist when it senses you are stressed, blending into your lifestyle and improving wellbeing.

Healthcare: AI’s Healing Touch

The healthcare sector stands among the greatest beneficiaries of conversational AI integration. Virtual health assistants are now capable of conducting symptom checks, scheduling appointments, and providing medication reminders, reducing the administrative burden on medical staff and improving patient outcomes. For example, the Mayo Clinic leverages AI chatbots to disseminate reliable health information and triage patient needs (Mayo Clinic on AI Chatbots). For patients, this means accessible support 24/7, and for practitioners, more time dedicated to complex cases.

Customer Experience Redefined

Businesses across retail, hospitality, and finance are using conversational AI to deliver faster, more personalized support. Chatbots handle everything from simple product queries to complex troubleshooting. For instance, Bank of America’s Erica virtual assistant has helped millions of customers navigate banking tasks, offering financial advice and detecting fraud patterns. This not only enhances customer satisfaction but also fosters long-term loyalty by making support accessible anywhere, anytime.

Education: Personalized Learning Journeys

Conversational AI is transforming education by fostering interactive, adaptive learning environments. Intelligent tutoring systems, such as those developed by Carnegie Mellon University, provide instant feedback, tailor content to individual student needs, and track progress over time. Through simulated conversations and scenario-based learning, students develop critical thinking and communication skills at their own pace, powered by AI’s capacity for personalization and data analysis.

Workplaces: AI-Driven Collaboration

The integration of conversational AI into daily workflows boosts productivity for teams of all sizes. AI meeting assistants automatically transcribe discussions, extract action items, and even help schedule follow-ups. Platforms like Microsoft Teams and Google Workspace have adopted these AI-driven features, making collaboration more efficient and less prone to human error. The result: streamlined workflows and smarter decision-making across organizations.

Steps for Seamless Adoption

- Identify key touchpoints: Start by mapping out where conversational AI can add the most value—customer service, internal operations, or user engagement.

- Prioritize integration: Opt for platforms that enable easy API integration with existing tools, ensuring a frictionless user experience.

- Educate users: Host training sessions or share resources, helping staff and users understand how to interact with and benefit from AI systems.

- Monitor and optimize: Continually assess AI performance using analytics, making data-driven improvements for more intuitive interactions.

By thoughtfully integrating conversational AI into homes, workplaces, and society, we move towards a world where natural, helpful, and proactive interaction with technology is not just the norm but an expectation. Those who embrace these advances will find themselves ahead in efficiency, engagement, and innovation. For a deeper dive into the role of conversational AI across industries, consider reviewing guidance from the Harvard Business Review.

Ethical Dimensions and Challenges in Next-Gen AI

The rapid advancement of conversational AI presents exciting possibilities, but it also raises crucial ethical considerations that cannot be ignored. As we move beyond today’s chatbots, such as ChatGPT, toward smarter, more autonomous systems, the stakes around responsible development and deployment grow ever higher. Here are some of the critical ethical dimensions and challenges associated with next-generation AI:

Bias and Fairness in AI Conversations

One of the most pressing ethical concerns in conversational AI is ensuring fairness and minimizing bias. AI systems learn from massive datasets, many of which inadvertently contain biased perspectives or stereotypes. When these biases go unchecked, they can be perpetuated or even amplified by AI. For instance, research from the Association for the Advancement of Artificial Intelligence (AAAI) has shown how gender and racial biases can manifest in language models, affecting everything from job recommendations to healthcare advice.

Addressing this issue requires a multi-step approach:

- Curating diverse training datasets: Diverse and representative training data can help minimize bias from the outset.

- Ongoing auditing and testing: Regularly evaluating AI outputs for problematic responses through continuous auditing, as recommended by NIST, helps manage emergent issues.

- Transparency and explainability: Developers should be transparent about data sources and the methods used to address bias, empowering external review and fostering public trust.
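One common form of the auditing step above is a counterfactual test: send the system prompts that differ only in a demographic term and compare the outputs. In this sketch `toy_score` is a deliberately biased stand-in for a real model, and the template, terms, and `0.1` tolerance are assumptions chosen for illustration.

```python
from itertools import combinations


def counterfactual_gaps(score, template, terms):
    """Score the same prompt with each term swapped in and compare every pair."""
    scores = {term: score(template.format(term=term)) for term in terms}
    return {(a, b): abs(scores[a] - scores[b]) for a, b in combinations(terms, 2)}


def toy_score(text):
    # Stand-in for a real model, deliberately biased so the audit finds something.
    return 0.8 if text.startswith("He ") else 0.6


TOLERANCE = 0.1  # hypothetical threshold for an acceptable gap

gaps = counterfactual_gaps(
    toy_score,
    "{term} is applying for the engineering role.",
    ["He", "She", "They"],
)
flagged = [pair for pair, gap in gaps.items() if gap > TOLERANCE]
print(flagged)
```

A flagged pair is a prompt for human review, not proof of harm on its own; a real audit would use many templates and a metric suited to the task.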

User Privacy and Data Protection

Conversational AIs interact with users in personal and often sensitive ways, raising significant concerns around privacy, data collection, and consent. Unlike traditional web platforms, conversational AI may gather intimate insights into a user’s emotions, habits, and private life.

Effective measures include:

- Adherence to privacy regulations: Compliance with regulations such as the EU’s GDPR and the US’s COPPA is vital, especially for applications that serve users across jurisdictions or are directed at children.

- Data minimization: Collecting only the essential information needed to provide services is a foundational principle, as highlighted by the UK Information Commissioner’s Office (ICO).

- Clear user consent: Providing transparent information about what data is collected and how it is used enables users to make informed choices.
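Data minimization can be applied before a conversation record is ever stored. The sketch below keeps only an allowlist of fields and masks email addresses and phone-like numbers in the message text. The field names and the two regular expressions are simplified assumptions and would miss many real-world formats.

```python
import re

# Simplified patterns for illustration; real PII detection needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical allowlist: the only fields this service needs to retain.
ALLOWED_FIELDS = {"user_id", "message", "language"}


def minimize(record: dict) -> dict:
    """Drop fields outside the allowlist and mask contact details in the message."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "message" in kept:
        text = EMAIL.sub("[email]", kept["message"])
        kept["message"] = PHONE.sub("[phone]", text)
    return kept


raw = {
    "user_id": "u1",
    "message": "Mail me at ana@example.com or call +1 555 010 9999.",
    "location": "Lisbon",
    "language": "en",
}
stored = minimize(raw)
print(stored)
```

An allowlist fails safe: a field added upstream later is dropped by default until someone decides it is actually needed.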

Accountability and Transparency

As conversational AIs become more autonomous, determining responsibility for their actions is increasingly complex. Who is accountable when an AI makes a harmful recommendation, or when misinformation is spread?

Answering these questions requires:

- Clear documentation: Developers and organizations must document system capabilities and limitations, following guidelines from the Google AI Principles.

- Explainable AI: Making AI decision-making processes understandable to users and stakeholders fosters accountability.

- Ethical review boards: Encouraging oversight bodies to evaluate high-impact projects can prevent or mitigate harm before widespread deployment.

Preventing Harm and Misinformation

Conversational AI has the potential to inadvertently spread misinformation, give risky medical or legal advice, or manipulate vulnerable users. As these systems move closer to human-level fluency, the risks intensify.

Strategies to combat these risks include:

- Rigorous fact-checking protocols: Integrating up-to-date, vetted information sources is essential, for example by partnering with organizations like Snopes or the International Fact-Checking Network (IFCN).

- Safeguard mechanisms: Implementing warning systems when the AI is unsure about an answer or offers only general information, especially in high-stakes domains like healthcare or finance (Nature Digital Medicine).

- Human-in-the-loop: Allowing for human review of sensitive or escalated conversations preserves both safety and quality.
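The safeguard and human-in-the-loop strategies above can be combined into a simple routing rule. This sketch assumes the system can attach a confidence score and a domain label to each draft answer; the `CONFIDENCE_FLOOR` value, the domain list, and the action names are hypothetical.

```python
HIGH_STAKES = {"healthcare", "finance", "legal"}
CONFIDENCE_FLOOR = 0.75  # hypothetical cutoff; would need calibration in practice


def route(answer: str, confidence: float, domain: str) -> dict:
    """Decide whether to answer, answer with a caveat, or hand off to a person."""
    uncertain = confidence < CONFIDENCE_FLOOR
    if uncertain and domain in HIGH_STAKES:
        # Never send a shaky answer in a high-stakes domain.
        return {"action": "human_review", "text": None}
    if uncertain:
        return {"action": "answer_with_warning",
                "text": "I may be wrong about this: " + answer}
    if domain in HIGH_STAKES:
        return {"action": "answer_with_notice",
                "text": answer + " (General information only, not professional advice.)"}
    return {"action": "answer", "text": answer}


print(route("Take 200 mg twice a day.", 0.55, "healthcare"))
print(route("The store opens at 9am.", 0.92, "retail"))
```

Raw model confidence is often poorly calibrated, so the cutoff here is only a placeholder for whatever uncertainty signal a given system can actually provide.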

Ethical stewardship of next-generation conversational AI is not just a technical challenge—it is a shared societal responsibility. Engaging with best practices, supporting ongoing research, and prioritizing transparency and accountability will help ensure that these powerful tools benefit everyone.