What Is Conversational AI?

Conversational AI refers to technologies that enable humans to interact with machines using natural language, whether it’s spoken or written. At its core, conversational AI combines multiple technologies—including Natural Language Processing (NLP), machine learning, and sometimes even voice recognition systems—to enable computers to understand, process, and respond to human language in a meaningful way.

Unlike standard chatbot systems that follow rigid decision trees or rules, conversational AI is designed to facilitate a much more flexible, human-like dialogue. This sophistication allows businesses to implement virtual assistants, customer support bots, and voice-activated devices that can handle complex inquiries and deliver personalized responses. For instance, when customers engage with a support chatbot on a retail website, conversational AI powers the experience by interpreting the intent behind their messages and generating relevant answers or actions.

The technology underpinning conversational AI includes several distinct layers:

- Speech Recognition: Converts voice input into text. This is a critical first step for systems like smart speakers and voice assistants. For more about how speech recognition works, visit ScienceDirect: Speech Recognition.

- Natural Language Understanding (NLU): Interprets the meaning and intent behind user inputs, allowing the system to comprehend context, slang, and ambiguity. Detailed insights about NLU can be found at IBM’s Natural Language Understanding Guide.

- Dialogue Management: Maintains the flow of the conversation, chooses what responses to give, and manages the “state” of the interaction so that conversations feel natural and connected.

- Natural Language Generation (NLG): Crafts human-like responses, transforming structured data or internal actions into coherent and contextually fitting replies. For a deeper dive, see DeepAI: Natural Language Generation.
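
The layers above can be pictured as a small pipeline. The Python sketch below is a toy illustration only: the keyword lists, the `DialogueState` class, and the reply templates are hypothetical stand-ins for the trained models a real system would use, and speech recognition is assumed to have already turned the user's voice into text.

```python
from dataclasses import dataclass, field

# Hypothetical keyword lists standing in for a trained NLU model.
INTENT_KEYWORDS = {
    "track_order": ["track", "where is my order", "shipping"],
    "return_item": ["return", "refund"],
}

# Hypothetical reply templates standing in for an NLG component.
REPLY_TEMPLATES = {
    "track_order": "I can help you track your order. What is the order number?",
    "return_item": "I can help you start a return. Which item is it?",
    "unknown": "Sorry, I did not catch that. Could you rephrase?",
}


@dataclass
class DialogueState:
    """Holds the conversation state so that turns stay connected."""
    history: list = field(default_factory=list)
    last_intent: str = "unknown"


def understand(text: str) -> str:
    """NLU step: map the user's text to an intent label."""
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return intent
    return "unknown"


def manage(state: DialogueState, intent: str) -> str:
    """Dialogue management step: record the turn and pick the next action."""
    state.history.append(intent)
    state.last_intent = intent
    return intent


def generate(action: str) -> str:
    """NLG step: turn the chosen action into a reply."""
    return REPLY_TEMPLATES[action]


def respond(state: DialogueState, text: str) -> str:
    """Run one user turn through NLU, dialogue management, and NLG."""
    return generate(manage(state, understand(text)))
```

Calling `respond(DialogueState(), "Where is my order?")` walks a single message through all three text-based layers. In production each function would typically be replaced by a dedicated model or service.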

Conversational AI offers immense value for businesses. For example, financial institutions deploy virtual assistants to guide customers through transactions, explain services, and even handle fraud alerts—all without human intervention. Similarly, e-commerce companies use conversational AI to recommend products, resolve sales inquiries, and streamline order processing, significantly enhancing the customer experience.

By integrating conversational AI, organizations can scale customer support operations, deliver instant and consistent responses, and free up human agents to focus on complex cases. This level of automation not only improves operational efficiency but can also boost customer satisfaction and retention. For more information on the business impact of conversational AI, check out research from McKinsey & Company.

In summary, conversational AI serves as the foundation for many of today’s smart, interactive systems. It empowers businesses to deliver seamless, 24/7 support and engagement, transforming how companies interact with their customers and creating new opportunities for growth.

Understanding Large Language Models (LLMs)

Large Language Models, commonly known as LLMs, are advanced artificial intelligence systems designed to understand, generate, and interpret human language at scale. Built on deep learning frameworks, these models are trained on vast datasets sourced from the internet, books, academic papers, and other text-rich repositories. This allows them to acquire an impressive grasp of syntax, semantics, and even the nuances in tone and context.

One of the best-known LLM families is OpenAI’s GPT (Generative Pre-trained Transformer) series, which is widely treated as a benchmark for natural language processing capabilities. LLMs are not just theoretical: real-world applications range from customer service chatbots to content generation and complex data analysis. For an in-depth exploration, check resources like Google AI Blog and DeepMind’s research on multilingual LLMs.

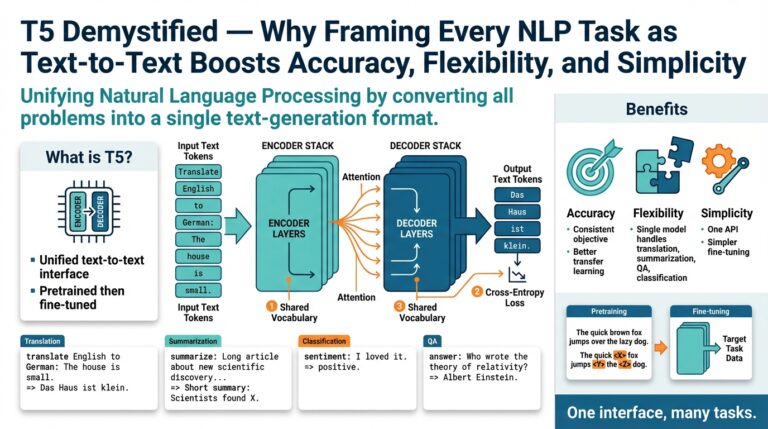

- Training Process: LLMs typically undergo a two-step training process: pre-training and fine-tuning. In pre-training, the model learns to predict the next word (more precisely, the next token) in a sequence given the previous context, which helps it develop a general-purpose understanding of language. Fine-tuning then adapts this knowledge to specific tasks or industries, such as healthcare, legal, or finance.

- Scalability: LLMs come in a range of sizes, and capability generally improves as training data and parameter counts grow. Because models of different sizes are available, often as hosted services, both startups and large enterprises can usually find a version that fits their needs and budget. Read more about scalability in AI on McKinsey’s insight on generative AI.

- Understanding and Generation: Unlike traditional rule-based systems, LLMs excel in language generation (creating text) and comprehension (understanding user input). For example, LLM-powered systems can draft emails, write product descriptions, summarize lengthy reports, or even generate code based on plain English instructions.

- Continual Learning: While current LLMs are not typically updated in real time, organizations can re-train or fine-tune these models periodically with their proprietary data, allowing the AI to stay current and reflect company-specific knowledge. Learn about this process on O’Reilly’s introduction to LLMs.
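
To make the pre-training objective described above more concrete, here is a deliberately tiny sketch of next-word prediction. It counts which word follows which in a hypothetical three-sentence corpus. Real LLMs use neural networks over tokens rather than word counts, so this only illustrates the idea of learning from "previous context", not how any actual model is built.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text; a real model sees billions of words.
corpus = [
    "your order has shipped",
    "your order has arrived",
    "your order has shipped today",
]
model = train_bigram_model(corpus)
```

With this corpus, `predict_next(model, "has")` picks "shipped" because it follows "has" more often than "arrived" does. Fine-tuning can be thought of as continuing the same kind of learning on a smaller, domain-specific corpus.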

In practice, integrating LLMs into business operations can streamline content curation, automate knowledge base updates, and enhance personalization in customer communication. For instance, businesses can deploy a customized LLM to quickly analyze feedback from thousands of customer interactions and generate actionable insights, saving time and improving decision-making efficiency.

As these models continue to evolve, they unlock new levels of automation and creativity, holding transformative potential for industries worldwide. To stay ahead, it’s crucial for business leaders to understand not just what LLMs can do, but also their limitations and ethical considerations, as discussed in Harvard Business Review.

Core Functional Differences Between Conversational AI and LLMs

At the heart of today’s AI-powered business solutions are two distinct technologies: Conversational AI and Large Language Models (LLMs). Understanding their core functional differences is crucial for organizations aiming to strategically integrate AI for success and sustainable growth.

Purpose and Primary Functionality

Conversational AI systems are purpose-built to facilitate interactive dialogues with users, often in the context of customer service or virtual assistance. Their strength lies in task-oriented conversations—they are trained and optimized to handle specific queries, route support tickets, or provide guided responses based on user intent. In contrast, LLMs, such as OpenAI’s GPT-4, are designed for broad natural language processing and generation. They support a wide variety of language tasks beyond conversation, including summarization, content creation, and complex reasoning (McKinsey).

Training Data and Adaptability

Conversational AI typically relies on domain-specific datasets and predefined dialog flows to deliver fast, accurate responses. This makes such systems highly efficient for routine tasks, such as scheduling appointments or answering FAQs. LLMs, on the other hand, are trained on massive and diverse datasets drawn from books, articles, forums, and more. This broad exposure enables them to adapt to diverse topics and generate human-like text. However, this generality makes it harder to maintain accuracy and relevance in specialized business settings unless the model is further fine-tuned (IBM).

Personalization and Context Awareness

One standout feature of Conversational AI is its ability to incorporate tightly integrated context from customer databases, previous chats, and ongoing user interactions. This allows for highly personalized experiences, as these systems can remember preferences, recognize returning users, and anticipate needs. For instance, a banking chatbot can suggest relevant products based on transaction history. LLMs, while context-aware within a single session, often lack long-term memory unless explicitly integrated with external tools or APIs. This difference significantly impacts their efficacy in delivering tailored customer experiences (Salesforce).
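
One common way to give an LLM the long-term memory it lacks is to store customer facts outside the model and prepend them to each request. The sketch below assumes a hypothetical in-memory `CustomerMemory` class and a hypothetical prompt layout; a real deployment would more likely read from a CRM or database and would pass the resulting prompt to whichever LLM service the business uses.

```python
from collections import defaultdict


class CustomerMemory:
    """Hypothetical external store that outlives a single chat session."""

    def __init__(self):
        self._facts = defaultdict(list)

    def remember(self, customer_id, fact):
        """Save a fact about a customer, skipping exact duplicates."""
        if fact not in self._facts[customer_id]:
            self._facts[customer_id].append(fact)

    def recall(self, customer_id):
        """Return a copy of everything stored for this customer."""
        return list(self._facts.get(customer_id, []))


def build_prompt(memory, customer_id, message):
    """Combine stored context with the new message into one prompt string."""
    facts = memory.recall(customer_id)
    context = "\n".join(f"- {fact}" for fact in facts) or "- (no stored history)"
    return f"Known customer context:\n{context}\n\nCustomer message: {message}"
```

The model itself stays stateless here; personalization comes entirely from what the surrounding application chooses to retrieve and include.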

Integration with Business Workflows

Conversational AI is commonly designed for seamless integration with enterprise software—CRM systems, ticketing solutions, knowledge bases, and backend processes. This enables automated execution of tasks, such as updating records or processing requests. LLMs are generally platform-agnostic and, unless extended through custom development, are less adept at directly interacting with company-specific tools. Businesses looking for end-to-end workflow automation often leverage conversational platforms or blend both technologies to maximize output (Gartner).

Real-World Example: Customer Support

Imagine a retailer aiming to enhance customer support. A conversational AI chatbot, trained on product FAQs, can handle high volumes of standard inquiries—order tracking, return policies, and payment issues—quickly and efficiently. For more intricate queries requiring nuanced understanding or handling exceptions, an LLM can provide detailed explanations or draft personalized responses by leveraging its expansive language capabilities and access to a broader knowledge base (MIT).
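
The division of labor in this example can be sketched as a simple router: scripted answers for standard inquiries, with anything else handed to an LLM. The FAQ topics and answers, the `route` function, and the `fake_llm` stand-in are all hypothetical; in practice the fallback would call a real model and the matching would use intent classification rather than substring checks.

```python
from typing import Callable, Tuple

# Hypothetical scripted answers for high-volume, predictable questions.
FAQ_ANSWERS = {
    "order tracking": "You can follow your parcel from the Orders page.",
    "return policy": "Items can be returned within the window stated on your receipt.",
    "payment": "We accept the payment methods listed at checkout.",
}


def route(message: str, llm_fallback: Callable[[str], str]) -> Tuple[str, str]:
    """Return (handler, reply): scripted when a FAQ topic matches, else LLM."""
    lowered = message.lower()
    for topic, answer in FAQ_ANSWERS.items():
        if topic in lowered:
            return ("scripted", answer)
    return ("llm", llm_fallback(message))


def fake_llm(message: str) -> str:
    """Stand-in for a real LLM call, used only to keep the sketch runnable."""
    return f"[draft reply for agent review] {message}"
```

Keeping the scripted path first means routine questions never incur LLM cost or latency, while unusual cases still get a tailored draft.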

By grasping these core functional differences, businesses can make informed decisions about which technology is better suited to their goals, whether optimizing routine processes or unlocking new avenues for intelligent automation and customer engagement.

Use Cases: Where Conversational AI and LLMs Excel

When evaluating the adoption of Conversational AI and Large Language Models (LLMs), it’s essential to understand the unique scenarios where each technology excels. Their applications in business differ markedly, and capitalizing on their strengths can significantly drive efficiency, customer satisfaction, and growth.

Customer Service and Support

Conversational AI: These platforms, such as chatbots and virtual assistants, are engineered to deliver structured, efficient customer service experiences. They excel in environments where predictable, repetitive queries dominate, such as banking FAQs, e-commerce order tracking, or flight status updates. For instance, an airline can deploy a conversational AI to handle common passenger questions about flight schedules or baggage rules, freeing up human agents for more complex interactions. This is possible through pre-defined conversational flows, natural language understanding, and integration with backend systems. For a deeper understanding, see IBM’s overview on Conversational AI.

LLMs: In contrast, LLMs like OpenAI’s GPT-4 or Google’s PaLM thrive in ambiguous or unstructured scenarios where information extraction, summarization, or creative problem-solving is needed. If a customer contacts a telecom provider with a complex technical issue, an LLM can generate detailed troubleshooting steps, adjust its responses based on the customer’s technical proficiency, and even summarize past interactions for agents. Such context-rich support is difficult for traditional rule-based agents. Learn more about LLMs and their capabilities at Harvard Data Science Review.

Marketing Automation and Personalization

Conversational AI: This technology can automate appointment bookings, product recommendations, or lead qualification in real time. A retailer, for example, can use chatbots on their website to guide customers toward products based on straightforward preferences such as size or color, and automate follow-ups via SMS or email. These systems enhance lead conversion by providing quick and accurate responses, thus shortening the sales cycle.

LLMs: LLMs push personalization further by dynamically generating unique marketing content, emails, and responses tailored to individual user profiles. Businesses can use LLMs to analyze customer data, craft compelling product descriptions, and even respond to nuanced feedback on social platforms with human-like fluency. This can improve engagement and foster brand loyalty. For reference on how generative AI is transforming marketing, visit Harvard Business Review.

Employee Productivity and Knowledge Management

Conversational AI: Companies often use conversational bots for automating internal tasks like scheduling meetings, approving expenses, or answering HR policy queries. For instance, a conversational agent can help employees onboard faster by walking them through company policies and automating help desk interactions. By simplifying these processes, businesses can reduce administrative overhead and improve employee satisfaction.

LLMs: LLMs, due to their context-aware language processing, can power advanced internal tools such as document search, summarization, or even drafting business reports. Employees can interact with these systems to get quick, accurate insights from vast troves of data, which is especially valuable in legal, research, or consulting firms. To explore these use cases in more depth, check Forrester’s research on the business impact of generative AI.

Industry-Specific Applications

Conversational AI: The healthcare industry uses conversational AI to streamline appointment bookings, send reminders, and provide initial triage, reducing clinical administrative burden. Similarly, financial institutions leverage chatbots for tasks like balance inquiries and fraud alerts. These applications demand predictable, rule-based workflows to ensure reliability and compliance.

LLMs: In contrast, LLMs are proving revolutionary in areas like legal contract analysis, medical literature review, or customized curriculum development in education. Their ability to digest and generate insights from vast, unstructured datasets unlocks new efficiencies and innovation in fields that rely heavily on data interpretation. For a more comprehensive look at industry adoption of LLMs, consult McKinsey’s analysis on generative AI’s economic potential.

Ultimately, the key to leveraging these technologies for business growth lies in matching their strengths to the right use cases, whether it means deploying conversational AI for scalable, rule-based interactions or LLMs for rich, context-driven solutions.

Integration and Implementation Considerations for Businesses

When businesses weigh the adoption of Conversational AI and Large Language Models (LLMs), the integration and implementation process becomes a defining factor for ensuring long-term value. Both technologies promise advanced capabilities, but their practical rollout, ongoing management, and alignment with business needs differ significantly. Below we delve into the crucial aspects that organizations should consider and offer detailed steps and real-world context to empower your decision-making process.

Assessing Infrastructure Needs

Before implementation, a thorough analysis of your existing IT infrastructure is vital. Conversational AI platforms, which often focus on rule-based interactions and narrow applications, may seamlessly plug into existing customer relationship management (CRM) or enterprise resource planning (ERP) systems. In contrast, LLMs, with their demand for significant computational power and data storage, might require robust cloud resources or partnership with providers like Google Cloud AI or Microsoft Azure AI Services. Ensuring compatibility and scalability from day one can save future headaches and expenditures.

- Step 1: Conduct a technology audit to identify integration gaps.

- Step 2: Estimate workload requirements for both Conversational AI and LLMs.

- Step 3: Experiment with pilot deployments in a sandbox environment.

Customizing for Industry-Specific Needs

Generic solutions seldom address the nuances of specific industries. Conversational AI tools typically come with pre-built integrations for industries like banking, retail, and healthcare, enabling rapid setup. However, LLMs can be customized for advanced tasks such as document summarization, legal research, or scientific data analysis. Customization requires a clear understanding of language data, pertinent regulations, and business workflows. For example, healthcare organizations must prioritize HIPAA compliance, while financial institutions may need to integrate KYC (Know Your Customer) features, as explained by IBM’s guide to KYC.

- Tip: Create cross-functional teams involving IT, legal, and business units during customization.

- Example: A retail company deploying LLMs might configure models to understand product catalogs, loyalty programs, and customer service queries in multiple languages.

Data Security and Privacy

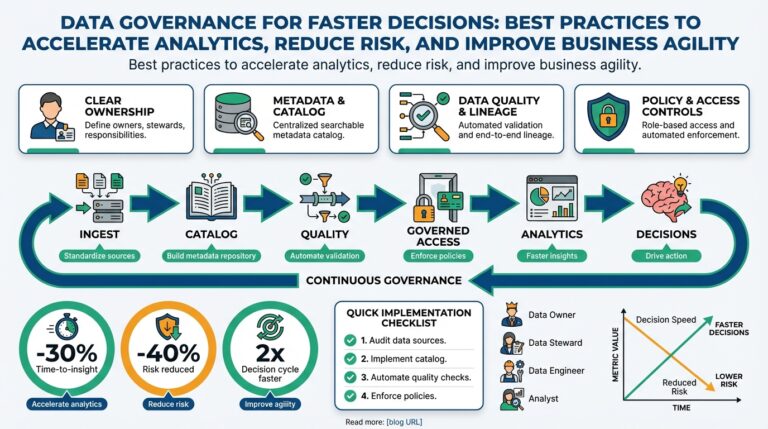

Implementation success hinges on robust data governance. Conversational AI solutions often feature data privacy controls tailored for sensitive exchanges—think customer PII in chatbots. For LLMs, the risks can be magnified due to the vast data ingested and generated. Businesses need to ensure compliance with regulations like GDPR and CCPA, employing practices outlined by organizations such as IAPP.

- Step 1: Map data flow patterns and identify sensitive data touchpoints.

- Step 2: Utilize encryption, access control, and anonymization wherever possible.

- Step 3: Conduct regular security audits after each integration phase.
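
As a rough illustration of the anonymization step above, the sketch below masks email addresses and phone-like numbers before a message is logged or sent to an external model. The two regular expressions are simplified assumptions that will miss many real-world formats, so they should be read as a starting point rather than a compliance control.

```python
import re

# Simplified, assumed patterns; real PII detection needs broader coverage.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text
```

Short numbers such as order IDs are left alone because the phone pattern requires at least nine characters. Dedicated PII-detection tooling is usually a better fit for regulated data.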

Training, Fine-Tuning, and Human in the Loop

LLMs typically require additional fine-tuning on proprietary datasets to yield domain-specific results, whereas Conversational AI tools often reach the same goal through simpler scenario-based scripting. Both, however, benefit from a “human-in-the-loop” strategy for monitoring and iteratively improving performance. Experts from McKinsey underscore the importance of ongoing model evaluation and retraining to minimize errors and bias.

- Step 1: Gather representative training datasets that reflect your customer base and operations.

- Step 2: Apply supervised fine-tuning to ensure relevance and compliance.

- Step 3: Set up feedback channels for employees and customers to report inaccuracies.

Monitoring Performance and Continuous Improvement

Real-world deployment is just the beginning. Businesses should implement monitoring systems to track key performance indicators (KPIs) such as response time, accuracy, user satisfaction, and conversion rates. For LLMs, draw on recent research published on arXiv and on established practices for continuous learning and model drift detection. Regularly updating both Conversational AI scripts and LLM configurations supports long-term adaptability and helps maximize ROI.

- Step 1: Establish baseline metrics before launch.

- Step 2: Use dashboards and analytics tools to track post-launch performance.

- Step 3: Schedule quarterly reviews for model updates based on business evolution and user feedback.
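
The baseline-then-track approach in the steps above can be sketched in a few lines. The log format (`response_seconds`, `resolved`), the two KPIs, and the 10% tolerance are assumptions chosen for illustration; a real setup would pull these figures from an analytics platform and use thresholds agreed with the business.

```python
from statistics import mean


def summarize(interactions):
    """Compute simple KPIs from a list of interaction records."""
    return {
        "avg_response_seconds": mean(i["response_seconds"] for i in interactions),
        "resolution_rate": mean(1.0 if i["resolved"] else 0.0 for i in interactions),
    }


def flag_regressions(baseline, current, tolerance=0.10):
    """List the KPIs that are worse than baseline by more than `tolerance`."""
    flags = []
    slower_limit = baseline["avg_response_seconds"] * (1 + tolerance)
    if current["avg_response_seconds"] > slower_limit:
        flags.append("avg_response_seconds")
    lower_limit = baseline["resolution_rate"] * (1 - tolerance)
    if current["resolution_rate"] < lower_limit:
        flags.append("resolution_rate")
    return flags
```

A check like this can run on a schedule, with any flagged KPI feeding into the quarterly review or triggering an earlier investigation.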

The journey from evaluation to full implementation can be complex but highly rewarding for organizations that prepare well. Businesses that follow structured, strategic steps will find themselves better positioned to harness the transformative potential of both Conversational AI and LLMs.

Cost, Scalability, and Customization: A Comparative Look

When evaluating Conversational AI and Large Language Models (LLMs) for deployment in business settings, the differences in cost, scalability, and customization must be understood thoroughly. Each solution brings unique strengths and limitations, potentially dictating their effectiveness for specific organizational needs. Here’s a closer look at each critical area, supported by real-world examples and authoritative analysis.

Cost Considerations

Cost is one of the most significant factors influencing the adoption of AI technologies. Conversational AI, which usually involves predefined rules and natural language processing tailored for specific business applications, tends to be less expensive to implement and maintain in targeted use cases. Most enterprise-grade chatbots built on Conversational AI can be deployed using existing platforms, requiring lower upfront investment and less compute power than LLMs. These solutions often use a subscription model or usage-based pricing; platforms like IBM Watson Assistant and Google Dialogflow exemplify cost-effective entry points.

LLMs like OpenAI’s GPT-4 or Google’s Gemini, in contrast, require substantial computational resources, especially during training and real-time inference. This translates to higher operational costs, both in terms of cloud infrastructure and energy consumption. According to a recent analysis by MIT Technology Review, the energy usage and cost of running large models can be prohibitive for smaller organizations. Thus, businesses must evaluate the long-term ROI not just for model development but also for ongoing deployment, including updates and scaling.
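
A back-of-the-envelope estimate can help frame the ROI question for usage-priced LLM services. The function below is a minimal sketch; the conversation volume, token count, and per-token price in the usage note are made-up placeholders, not actual vendor pricing, and should be replaced with figures from your own traffic and your provider's current rate card.

```python
def monthly_llm_cost(conversations_per_month, tokens_per_conversation,
                     price_per_1k_tokens):
    """Estimate monthly spend for a usage-priced LLM service."""
    total_tokens = conversations_per_month * tokens_per_conversation
    return total_tokens / 1000 * price_per_1k_tokens
```

For example, `monthly_llm_cost(50_000, 1_500, 0.01)` multiplies 75 million tokens by a hypothetical price of 0.01 per thousand tokens, giving an estimate of roughly 750 per month in whatever currency the price is quoted. Comparing that figure with a flat chatbot subscription makes the cost trade-off more tangible.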

Scalability

Scalability defines how well a solution can handle increased user demand without performance degradation. Conversational AI platforms are typically designed to serve tens of thousands of concurrent users reliably—making them excellent for customer support, sales, and internal help desks. Their modular architecture lets organizations scale up by integrating with existing IT infrastructure, third-party apps, and CRMs with relative ease.

LLMs, because of their complexity and compute requirements, can be more challenging to scale. While cloud-based services offered by providers like Microsoft Azure OpenAI Service and Google Cloud Vertex AI allow for robust scalability, costs can rise sharply as usage grows. LLMs are, however, capable of handling vast amounts of unstructured data and can provide more nuanced, context-aware responses—ideal for knowledge management, content generation, and sophisticated conversational applications that demand depth over sheer transaction volume.

Customization: Tailored Experiences at Scale

The need for customization varies depending on business requirements. Conversational AI generally allows for highly customized flows that are tightly coupled with business logic. These systems can be programmed to handle specific intents, comply with stringent regulations, and integrate with proprietary databases or APIs. Businesses can easily script interactions, set escalation paths, and maintain control over the output, making these solutions particularly valuable in regulated industries or where exactness is critical. Learn more about custom Conversational AI workflows in this Gartner overview of chatbots.

LLMs, on the other hand, shine in dynamic environments where businesses seek rapid adaptation to new data or user behaviors. With techniques like prompt engineering or fine-tuning, organizations can tailor LLMs for specialized use cases such as multilingual support, advanced semantic search, or creative content development. However, deeper customization, fine-tuning in particular, requires data science skills and significant computational resources, making it less accessible for non-technical teams. Industry leaders are innovating rapidly to simplify customization; see the advancements in easy-to-tune LLMs described in this blog post by Google.
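
Prompt engineering, the lighter of the two techniques mentioned above, often amounts to wrapping each request in a carefully worded template. The sketch below uses Python's standard `string.Template`; the template wording, the `build_support_prompt` helper, and its parameters are hypothetical, and the resulting string would still need to be sent to an LLM service of your choice.

```python
from string import Template

# Hypothetical template; wording should be tuned and tested per use case.
SUPPORT_PROMPT = Template(
    "You are a support assistant for $company.\n"
    "Answer in $language.\n"
    "Use only the policy text below; if the answer is not there, say so.\n\n"
    "Policy:\n$policy\n\n"
    "Customer question: $question"
)


def build_support_prompt(company, language, policy, question):
    """Fill the template with business-specific context for one request."""
    return SUPPORT_PROMPT.substitute(
        company=company,
        language=language,
        policy=policy.strip(),
        question=question.strip(),
    )
```

Changing the `language` argument is enough to request replies in another language, which is one reason prompt-level customization tends to be cheaper than fine-tuning for needs such as multilingual support.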

In summary, choosing the optimal AI solution relies on a clear understanding of business goals, available resources, and technical expertise. Conversational AI continues to be the preferred choice for tightly scoped, cost-sensitive, and highly regulated deployments. LLMs, while more expensive, offer unparalleled adaptability and intelligence for businesses aiming to differentiate through advanced AI-driven experiences. For deeper technical insights, consult this comprehensive comparison by McKinsey & Company.