Demystifying AI: From Simple Algorithms to Complex Reasoning

Understanding artificial intelligence (AI) often feels like stepping into the realm of science fiction—but at its core, AI is about teaching computers to mimic aspects of human intelligence. The journey from rudimentary algorithms to today’s advanced reasoning machines is a story of relentless innovation, grounded in mathematics, computer science, and an ever-deepening understanding of cognition.

From Rule-Based Systems to Machine Learning

Decades ago, AI began with rule-based systems, where computers were programmed with specific instructions to follow. These early AI programs operated much like a complex ‘if-this-then-that’ script, excelling in constrained environments but struggling with anything unexpected or nuanced. For example, early chess engines would consider exhaustive move trees, yet failed at generalizing their knowledge beyond the chessboard.
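As a minimal sketch of such an "if-this-then-that" script, the rule table below maps hand-written conditions to actions. The rule contents, the action names, and the `rule_based_reply` function are hypothetical and chosen only for illustration.

```python
# Hypothetical rule table: each rule is a (condition, action) pair checked in order.
RULES = [
    (lambda msg: "refund" in msg, "route_to_billing"),
    (lambda msg: "password" in msg, "send_reset_link"),
]

def rule_based_reply(message: str) -> str:
    """Return the action of the first rule whose condition matches."""
    text = message.lower()
    for condition, action in RULES:
        if condition(text):
            return action
    # No rule anticipated this input, so the system has nothing to fall back on.
    return "no_rule_matched"
```

A message about a forgotten password matches the second rule, while anything the author of the rules did not foresee, such as a damaged package, falls through to `no_rule_matched`. That brittleness is the limitation described above.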

Machine learning marked a turning point. Instead of following hard-coded rules, these systems learn patterns from data. Consider a basic spam filter: rather than listing every possible spam phrase, it analyzes hundreds of thousands of emails that have already been labeled as spam or legitimate and identifies the characteristics that distinguish unwanted messages. This process, known as supervised learning, enables the AI to classify new, unseen data, a leap akin to going from a calculator to a student who can learn from examples.
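To make the spam filter concrete, here is a small sketch of supervised learning using a naive Bayes classifier, one common technique for this task (the article does not say which algorithm a real filter uses). The four training emails and the function names are made up for illustration.

```python
import math
from collections import Counter

def train(examples):
    """Count word frequencies per label from (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Pick the label with the highest log-probability (add-one smoothing)."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total_docs)
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Tiny, made-up labeled dataset ("ham" means a legitimate email).
EXAMPLES = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]
model = train(EXAMPLES)
```

Calling `classify("claim your free prize", *model)` labels the message as spam even though that exact phrase never appeared in training, because the words "free" and "prize" were seen more often in spam examples.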

The Deep Learning Revolution

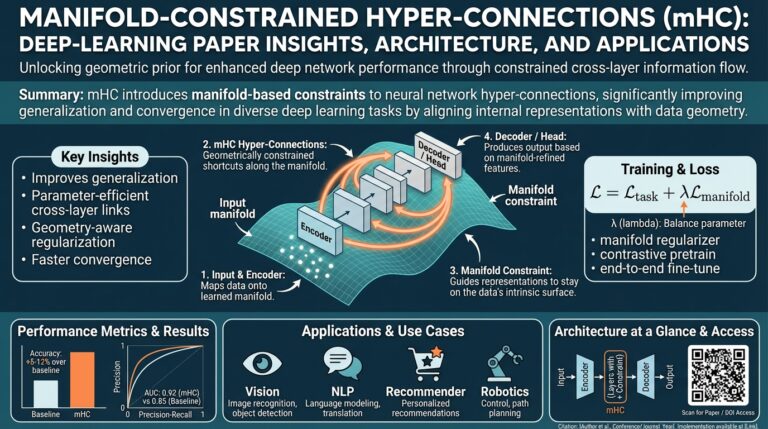

The advent of deep learning—using neural networks inspired by the human brain—catapulted AI’s capabilities. These models, trained on vast datasets, can recognize images, translate languages, and even compose music or art. A deep neural network works in layers: the first might recognize simple shapes in a photograph, the next combines shapes to recognize objects, and higher layers draw increasingly abstract connections. This layering enables systems like GPT-3 to construct entire paragraphs of human-like text from simple prompts.

Consider facial recognition. Earlier systems struggled with changes in lighting or angle, but deep learning models trained on millions of images can identify faces with remarkable accuracy. Each layer of the network parses features—from pixels to noses to entire faces—allowing robust, context-aware recognition.

The Rise and Architecture of Large Language Models

Large Language Models (LLMs) like Google’s LaMDA or OpenAI’s GPT series extend deep learning’s concepts to vast scales. These models are trained on colossal text corpora mined from books, news sites, and the web. The result is a system not just capable of grammar, but one that can craft stories, recommend products, answer questions, or even code.

The architectural shift underlying LLMs is the transformer, a design whose attention mechanism weighs the relationships between words, even across long passages. For example, when asked about the capital of France, the LLM processes not only the word “capital” but also its relationship to “France” in context, which makes “Paris” the most probable answer.
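The core of that design is scaled dot-product attention, sketched below for a single query. The two-dimensional vectors are invented for illustration; in a real transformer they are learned and far larger.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Weighted average of the value vectors, one output number per dimension.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output

# Made-up 2-d vectors for the tokens "capital", "of", "France".
keys = [[1.0, 0.0], [0.0, 0.0], [0.9, 0.8]]
values = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
query = [1.0, 1.0]  # hypothetical query vector for the position being predicted
weights, output = attention(query, keys, values)
```

With these toy numbers the largest weight lands on "France" and the smallest on "of", which is the sense in which the model "pays attention" to the words most relevant to the current prediction.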

Examples: AI in Everyday Life

- Personal Assistants: Siri and Alexa anticipate user needs using natural language understanding.

- Medical Diagnostics: AI models help spot anomalies in X-rays by applying patterns learned from massive training datasets.

- Recommendation Engines: Netflix and Spotify use patterns in your choices and preferences to suggest new content, powered by collaborative filtering and deep learning.
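Collaborative filtering, mentioned in the last item, can be sketched in a few lines. This is a simplified user-based variant with invented ratings; it is not a description of how Netflix or Spotify actually implement their systems.

```python
import math

# Hypothetical ratings (1-5); a missing title means the user has not rated it.
RATINGS = {
    "ana":  {"Dune": 5, "Alien": 4, "Amelie": 1},
    "ben":  {"Dune": 5, "Alien": 5, "Arrival": 5},
    "cleo": {"Amelie": 5, "Notting Hill": 4, "Dune": 1},
}

def cosine(a, b):
    """Cosine similarity between two sparse rating dicts."""
    shared = set(a) & set(b)
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(user, ratings):
    """Score unseen titles by similarity-weighted ratings from other users."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their_ratings)
        for title, rating in their_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)
```

Here `recommend("ana", RATINGS)` ranks "Arrival" first, because Ben, whose tastes overlap most with Ana's, rated it highly.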

These advances have steadily moved AI from abstract laboratories into everyday applications, reshaping everything from entertainment to healthcare and communication.

The next leap is agents—AI systems that combine reasoning, memory, and decision-making to interact dynamically with the world. But to understand why agents are so transformative, it’s crucial first to appreciate just how far AI has come: from mechanical, rule-based calculators to creative, contextually aware partners driven by massive language models.

What are Large Language Models (LLMs) and How Do They Learn?

Large Language Models, or LLMs, are at the heart of many breakthroughs in artificial intelligence today. Simply put, LLMs are AI systems trained to understand and generate human-like text. Unlike early chatbots that relied on simple rules or keywords, LLMs use deep learning—a subset of machine learning—powered by neural networks that mimic certain aspects of the human brain. But what makes LLMs so transformative in how machines process language?

First, LLMs are built upon vast neural network architectures with billions, sometimes trillions, of parameters. Parameters are essentially the knobs and dials that the model tunes during its training to make the best possible predictions about language use. This training process involves feeding the model an enormous volume of textual data—think Wikipedia, news articles, books, and web content—so it can detect patterns, grammar, meaning, and even style over time. Stanford University offers a comprehensive high-level explanation of this learning process in their AI Blog.

The learning phase, called “pre-training,” allows the LLM to build a statistical map of language. During pre-training, the model is repeatedly asked to predict a hidden word, most commonly the next word in a passage. For example, given the input “The sky is ___,” the LLM learns that “blue” is a likely completion. It does this billions of times, picking up nuance, context, and even some reasoning skills along the way. After pre-training, LLMs often undergo “fine-tuning,” where they are trained further on specialized datasets to improve performance on specific tasks such as legal analysis, medical information, or customer support.
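The idea of a "statistical map of language" can be illustrated with a far simpler stand-in: counting which word follows which. Real LLMs use neural networks rather than lookup tables, so treat this bigram counter and its four-sentence corpus as a toy analogy only.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for every word, which words follow it and how often."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def next_word_probs(following, word):
    """Convert raw follow-counts into probabilities."""
    counts = following[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

# Made-up training text.
CORPUS = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is grey",
    "the sea is blue",
]
model = train_bigrams(CORPUS)
probs = next_word_probs(model, "is")
```

With this corpus, `probs` assigns 0.75 to "blue" and 0.25 to "grey", so "blue" is the likely completion of "The sky is ___" simply because it followed "is" most often in the training text.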

Understanding how LLMs generate text is also crucial. When you prompt an LLM with a question or a message, it estimates how likely each possible next word is (strictly speaking, each next token, which may be a word fragment) based on everything it has learned, picks one, and then repeats the process with that word appended. By stringing together these predictions, it can generate coherent paragraphs, answer questions, or even write poetry. A deeper dive into “transformer” architecture, the key innovation behind LLMs, can be found in this Google AI Blog post. Transformers revolutionized natural language understanding by allowing models to focus on different parts of a sentence for context, which greatly improves accuracy and coherence.
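The generation loop itself is short. In the sketch below, a hand-written probability table stands in for a trained model (an assumption made purely for illustration), and the loop either takes the likeliest word each time or samples in proportion to the probabilities.

```python
import random

# Hypothetical next-word probabilities standing in for a trained model.
NEXT_WORD = {
    "<start>": {"the": 1.0},
    "the": {"sky": 0.6, "sea": 0.4},
    "sky": {"is": 1.0},
    "sea": {"is": 1.0},
    "is": {"blue": 0.7, "grey": 0.3},
    "blue": {"<end>": 1.0},
    "grey": {"<end>": 1.0},
}

def generate(max_words=10, greedy=True, seed=0):
    """Build a sentence one word at a time, feeding each choice back in."""
    rng = random.Random(seed)
    word, output = "<start>", []
    for _ in range(max_words):
        options = NEXT_WORD[word]
        if greedy:
            # Always take the single likeliest word.
            word = max(options, key=options.get)
        else:
            # Sample in proportion to the probabilities for more varied text.
            word = rng.choices(list(options), weights=list(options.values()))[0]
        if word == "<end>":
            break
        output.append(word)
    return " ".join(output)
```

Greedy decoding always yields "the sky is blue" here, while sampling can also produce sentences such as "the sea is grey". The same trade-off between predictability and variety applies when generating from a real model.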

Practical applications of LLMs are everywhere—from automatic translation in apps like Google Translate to AI writing assistants used by journalists and marketers. The models continue to improve as researchers develop new training techniques, gather more representative datasets, and use smarter evaluation metrics. The competition among tech giants to build larger and more capable LLMs is a testament to their transformative potential in fields as varied as education, healthcare, and entertainment. For a balanced overview of ethical considerations and societal impact, the Harvard Data Science Review is an excellent resource.

In summary, LLMs are not just chatbots; they are sophisticated tools that have fundamentally changed what is possible in natural language understanding and generation. Having learned from vast swaths of text and then been fine-tuned with human feedback, they are increasingly able to support, inform, and entertain users around the globe.

The Building Blocks: Data, Training, and Neural Networks

Understanding how artificial intelligence (AI) and large language models (LLMs) operate begins with grasping their foundational elements: data, training, and neural networks. Each of these components plays a unique role in shaping an AI system’s ability to learn, adapt, and interact with the world.

1. Data: The Raw Material

Everything starts with data: vast, diverse collections of information that serve as the “fuel” for machine learning. Think of data as the experiences from which AI learns, much like humans absorb knowledge from books, conversations, and daily life. LLMs rely on enormous datasets culled from sources such as Wikipedia, news articles, social media, medical journals, and technical documentation. For context, the largest share of the data used to train OpenAI’s GPT-3 came from a filtered version of Common Crawl, a public archive containing petabytes of web content, supplemented by other web text, books, and Wikipedia.

- Data must be cleaned, structured, and, in some cases, filtered to ensure the model doesn’t learn biased or inappropriate patterns. Responsible data sourcing is a hot topic in AI ethics, as data directly impacts the behavior and fairness of the resulting models.

- The diversity and relevance of data influence an AI’s ability to generalize across different topics and tasks.
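The cleaning and filtering mentioned above can be sketched as a small pipeline. The blocklist, the minimum length, and the function name are illustrative assumptions; production pipelines are far more elaborate.

```python
import re

BLOCKLIST = {"lorem", "ipsum"}  # hypothetical placeholder-text markers

def clean_corpus(documents, min_words=3):
    """Normalize whitespace, drop near-empty or blocklisted text, and de-duplicate."""
    seen, cleaned = set(), []
    for doc in documents:
        text = re.sub(r"\s+", " ", doc).strip()
        words = text.lower().split()
        if len(words) < min_words:
            continue  # too short to teach the model anything
        if BLOCKLIST & set(words):
            continue  # filtered by the (illustrative) blocklist
        key = text.lower()
        if key in seen:
            continue  # exact duplicate after normalization
        seen.add(key)
        cleaned.append(text)
    return cleaned
```

Given two copies of the same sentence that differ only in spacing and capitalization, a one-word fragment, and a line of placeholder text, the function keeps just one clean copy of the sentence.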

2. Training: Learning by Example

Once data is gathered, the model undergoes a process called training, which teaches it to recognize patterns, infer relationships, and generate coherent responses. Training involves running millions (or billions) of text samples through the AI and adjusting its internal parameters to minimize error. The adjustment is driven by an optimization method called gradient descent, which gradually tunes the model’s weights so it can predict the next word in a sentence or complete complex tasks.

- Imagine teaching a child to read by giving them a series of books and correcting them each time they make a mistake. Similarly, AIs learn through repeated exposure and feedback. The feedback can come from human-labeled examples (supervised learning) or, as in LLM pre-training, from the text itself, where the word that actually comes next serves as the correct answer (self-supervised learning).

- The scale of modern LLM training is staggering—GPT-4, for example, was reportedly trained on trillions of words over weeks or months, consuming vast amounts of computational power from specialized hardware called GPUs (graphics processing units).
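Gradient descent is easier to see on a tiny problem than on a billion-parameter network. The sketch below fits a straight line to four made-up points. The learning rate and step count are arbitrary choices for this toy case, not values used for LLM training.

```python
def train_linear(points, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(points)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1, so training should approach w = 2, b = 1.
POINTS = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear(POINTS)
```

Starting from zero, the two parameters are nudged a little on every pass until the line matches the data. Training an LLM follows the same principle, only with vastly more parameters and a next-word prediction error in place of the squared error.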

3. Neural Networks: The Architecture of Intelligence

At the heart of every LLM lies a neural network—a mathematical architecture inspired by the structure of the human brain. Neural networks consist of layers of interconnected nodes (“neurons”) that process and transform information. Each node applies mathematical operations to incoming data, with the collective output enabling the model to understand language, generate new content, or answer complex queries. The most powerful LLMs employ advanced neural network structures known as transformers that excel at handling long-range dependencies in text.

- Each layer of a transformer neural network breaks down and recombines information, detecting patterns ranging from simple (individual words) to highly complex (context, sentiment, and nuance).

- For example, when you ask an LLM to write a poem about space travel, different neurons activate to varying degrees in response to relevant words and concepts, and their combined activity ultimately produces a cohesive and creative output.
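The layered structure described above can be sketched as a forward pass through a very small network. This is a plain feed-forward network rather than a transformer, and the weights are made up. In a trained model they would be set by gradient descent.

```python
import math

def dense(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def relu(values):
    """Activation: pass positive signals through, silence negative ones."""
    return [max(0.0, v) for v in values]

def forward(inputs):
    """Two-layer network with made-up weights: 3 inputs -> 2 hidden -> 1 output."""
    hidden = relu(dense(inputs, [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]], [0.0, 0.1]))
    output = dense(hidden, [[1.0, -1.0]], [0.0])[0]
    # Sigmoid squashes the result into the range (0, 1).
    return 1 / (1 + math.exp(-output))
```

For the input `[1.0, 0.0, 0.0]`, only the first hidden neuron fires (the second is silenced by the activation function), which shows how different inputs light up different parts of the network.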

The interplay between immense datasets, sophisticated training processes, and intricate neural networks forms the backbone of today’s AI. These building blocks enable LLMs to mimic human-like understanding and creativity, setting the stage for even more advanced systems—including autonomous agents that act on behalf of users. To learn more about these core concepts, you can explore detailed guides from Stanford AI Lab or read introductory tutorials from Coursera’s Neural Networks course.

Real-World Applications: How LLMs Power Modern Tools

Large Language Models (LLMs) have rapidly evolved from fascinating research projects to foundational pillars of today’s most advanced digital tools. Their ability to understand, generate, and manipulate natural language enables real-world applications that impact countless industries. Here’s a closer look at how these powerful models drive modern innovations, along with some detailed examples and the steps behind their transformative power.

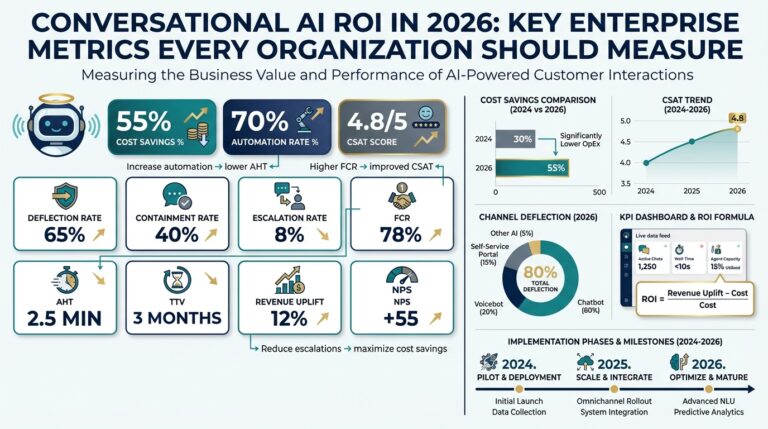

Conversational AI: Revolutionizing Customer Support

LLMs power a new era of conversational AI solutions that can engage in nuanced dialog with customers. These virtual assistants are not limited to simple queries; they resolve complex problems, understand context, and even detect sentiment. For instance, when a customer reaches out to a financial institution via chat, an LLM-driven bot can:

- Authenticate the user securely through conversation.

- Access transaction histories and provide actionable advice.

- Escalate issues to human agents by analyzing the customer’s language for urgency or frustration.

Such AI-powered systems save time, reduce operational costs, and improve customer satisfaction by delivering instant, reliable responses around the clock.

Content Generation and Curation: Enhancing Creativity

Media organizations, marketers, and educators now routinely turn to LLMs for content creation and idea generation. By understanding prompts and leveraging massive knowledge bases, LLMs:

- Draft articles, reports, ad copy, and social posts in seconds.

- Paraphrase information and suggest headlines tailored to specific audiences.

- Generate summaries for lengthy documents, making information accessible and actionable.

For example, educators can input a lesson topic and receive multiple explainer versions suited to different grade levels. Marketers use LLMs to brainstorm campaign ideas based on emerging social trends, backed by real-time data analysis.

Code Generation and Automation: Accelerating Software Development

LLMs are not only language experts—they can also write and explain code. Platforms such as OpenAI’s GPT models and Google’s Codey can:

- Translate natural language requirements into functioning code snippets.

- Debug and optimize existing code, pointing out logical flaws or inefficiencies.

- Automate repetitive programming tasks, freeing up developers to focus on design and strategy.

This transformation accelerates innovation and lowers the barrier to entry for aspiring developers, as they can learn by example and interact with these models to understand complex algorithms in simpler terms.

Real-Time Translation and Accessibility: Breaking Language Barriers

Instant translation has long been a goal of AI, and LLMs now deliver on that promise with contextually accurate, conversational translations. According to research by Brookings Institution, these models don’t just translate words; they capture idioms, tone, and local nuance, making communication smoother across borders. In practice, this involves steps such as:

- Detecting the source and target languages automatically.

- Maintaining conversational context even when meanings are ambiguous.

- Providing accessibility through audio, braille, or simplified summaries for people with disabilities.

This technology is a boon for global business, education, and inclusive design, expanding opportunities for millions worldwide.

Personalization at Scale: Customizing Digital Experiences

From personalized shopping recommendations to adaptive learning platforms, LLMs help deliver bespoke experiences in real time. Forbes Tech Council highlights that these models drive recommendation engines and adaptive interfaces by:

- Analyzing user interactions and preferences with astonishing depth.

- Predicting user needs before they are explicitly stated.

- Crafting product or content suggestions that feel uniquely relevant.

This personalization, made possible through the sophisticated capabilities of LLMs, fosters deeper engagement and higher satisfaction across e-commerce, streaming platforms, and digital learning environments.

With their ability to read, write, reason, and remember across countless domains, LLMs have become essential engines behind the digital experiences we increasingly rely on. As they continue to advance, their real-world applications will only grow, powering tools that are smarter, more adaptive, and increasingly indispensable.

The Rise of AI Agents: Beyond Chatbots and Automation

Today’s AI landscape is far richer than just chatbots scheduling appointments or automating repetitive emails. AI agents represent the next giant stride, moving beyond basic automation to become sophisticated digital collaborators capable of complex problem-solving, reasoning, and independent goal pursuit. Rather than just responding to queries, agents can perceive environments, make decisions, and take action — all while adapting to feedback in real time.

If you think about your interactions with digital assistants and chatbots, their actions are typically limited to scripted responses and very narrow tasks. However, modern AI agents are built upon advanced large language models (LLMs) and additional capabilities such as tool use, memory, and multi-step planning. For instance, an AI agent might be tasked with market analysis: instead of only responding with static facts, it can autonomously surf the web, analyze current events, synthesize financial reports, and deliver an actionable summary, similar to how a human research assistant operates. CB Insights explores various use cases showing how AI agents bring value that chatbots can’t match.

Key Features that Set AI Agents Apart

- Autonomy: Unlike most chatbots, AI agents are equipped to make decisions and take multi-step actions without continuous human prompting. For example, an agent can receive an overarching goal — such as booking a trip — and proceed to research flights, compare prices, reserve hotels, and even notify you of visa requirements, intelligently handling each phase on its own.

- Environmental Awareness: Agents are not confined only to the data they’re initially trained on. With recently added capabilities, such as API access or browsing, agents can stay updated and react to live information. Companies like OpenAI and Anthropic have published research on agents that continually learn from and adapt to evolving environments.

- Tool Use: Instead of just generating text, AI agents can use third-party tools and software to execute tasks, such as using spreadsheets for analysis or code editors for debugging. This leap mirrors how a junior analyst or developer at a company might approach day-to-day responsibilities.

- Memory and Iteration: Advanced agents don’t just perform one-off actions — they keep track of past outcomes, learn from mistakes, and improve over time. This iterative capability makes them invaluable for complex projects where context and continuity matter, such as product design or customer support workflows.
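Tool use in particular lends itself to a short sketch. Below, a registry maps tool names to ordinary functions, and a hard-coded `choose_tool` function stands in for the decision an LLM would make. The tool names, the canned facts, and the selection rule are all hypothetical.

```python
def calculator(expression: str) -> str:
    """Hypothetical tool: add a list of numbers written as 'a+b+c'."""
    return str(sum(float(part) for part in expression.split("+")))

def lookup(topic: str) -> str:
    """Hypothetical tool: a canned knowledge base standing in for web search."""
    facts = {"france": "The capital of France is Paris."}
    return facts.get(topic.lower(), "No entry found.")

# Registry the agent can choose from.
TOOLS = {"calculator": calculator, "lookup": lookup}

def choose_tool(request: str):
    """Stand-in for the LLM's decision: pick a tool name and its argument."""
    if any(ch.isdigit() for ch in request):
        return "calculator", request
    return "lookup", request

def run_agent_step(request: str) -> str:
    """Select a tool, call it, and return its result."""
    tool_name, argument = choose_tool(request)
    return TOOLS[tool_name](argument)
```

The design choice worth noting is the separation between deciding which tool to call and executing it: the registry can grow (spreadsheets, code editors, APIs) without changing the loop that dispatches to it.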

For example, consider the burgeoning trend of AI assistants in customer service. Instead of handing over a query to a human representative when faced with an unknown, today’s agents can detect when they’re stuck, research the problem, consult documentation, and even trigger escalation protocols autonomously. Harvard Business Review has investigated how these new agents are transforming workflows and expectations in industries as diverse as healthcare, retail, and legal services.

Enthusiasts and experts speculate that as agents become more widespread, their use will broaden from enterprise solutions to everyday life. Think AI household managers that optimize grocery shopping, budget scheduling, or elder care — working independently, just like trusted assistants. The underlying technology is only now reaching the tipping point where these scenarios are not just possible, but practical. For further exploration of this trend, IEEE Spectrum provides a deep dive into the evolving landscape of AI agents and their expected societal impact.

Why Agents Represent the Future of AI Innovation

Artificial intelligence (AI) has experienced astonishing progress, driven chiefly by large language models (LLMs) such as GPT-4 and Google Gemini. These tools have showcased their power in understanding and generating language, translating text, and summarizing content. However, beneath the surface lies a mounting limitation: LLMs are essentially predictive engines, generating responses one word at a time based on probability. While impressive, a bare LLM is ultimately “stateless” between requests: unless earlier exchanges are fed back in as part of the prompt, it retains no memory of previous conversations and cannot track dynamically changing goals.

The future of AI lies in the emergence of agents—systems that not only use LLMs but also blend reasoning, memory, perception, and action to autonomously achieve objectives. Here’s why agents mark the next seismic shift in AI innovation:

Continuous, Goal-Oriented Autonomy

Unlike static LLMs, agents are designed as persistent entities that can pursue complex goals over time and adjust their behavior based on feedback and environmental cues. For example, an AI agent tasked with booking your travel might interact with flight APIs, compare hotel prices, manage your calendar, and even negotiate lower rates—taking dozens of context-aware actions across many domains. This autonomy bridges the gap between static task execution and dynamic problem solving.

Researchers highlight that autonomous agents use a loop of sensing, planning, and action—akin to how humans navigate the world. Agents not only generate text or code but also take real steps: scheduling meetings, updating documents, or interfacing with real-world systems.
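The sensing, planning, and action loop can be shown with a deliberately simple environment. The thermostat below is a hypothetical stand-in for the real-world systems an agent would interact with, and the `plan` function replaces what would normally be an LLM-driven decision.

```python
class Thermostat:
    """Hypothetical environment: a room whose temperature the agent can nudge."""
    def __init__(self, temperature):
        self.temperature = temperature

    def read(self):
        return self.temperature

    def apply(self, action):
        self.temperature += {"heat": 1, "cool": -1, "idle": 0}[action]

def plan(observation, goal):
    """Decide the next action from the latest observation."""
    if observation < goal:
        return "heat"
    if observation > goal:
        return "cool"
    return "idle"

def run_agent(env, goal, max_steps=20):
    """Repeat sense -> plan -> act until the goal is met or steps run out."""
    history = []
    for _ in range(max_steps):
        observation = env.read()          # sense
        action = plan(observation, goal)  # plan
        if action == "idle":
            break
        env.apply(action)                 # act
        history.append((observation, action))
    return history
```

Starting at 18 degrees with a goal of 21, the agent takes three "heat" actions and then stops on its own. The `max_steps` cap is a common safeguard so that a loop like this cannot run indefinitely if the goal is unreachable.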

Multi-Step Reasoning and Memory

LLMs usually handle prompts in isolation, lacking the continuity needed for complex processes. Agents, however, are equipped with memory mechanisms—storing a history of actions, decisions, and relevant external information. This feature enables multi-step reasoning, where each decision is informed by what came before, providing a “thread of consciousness” for tackling sophisticated, evolving tasks.
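One simple memory mechanism is to keep a transcript and prepend it to every new prompt. In the sketch below, `stateless_model` is a hypothetical stand-in for an LLM call that can only use the text it receives, and `AgentWithMemory` supplies the continuity.

```python
def stateless_model(prompt: str) -> str:
    """Stand-in for an LLM call: it can use only the text in this one prompt."""
    text = prompt.lower()
    if "what is my name" in text:
        if "my name is " in text:
            name = text.split("my name is ")[1].split()[0].strip(".,!?")
            return f"Your name is {name.capitalize()}."
        return "I don't know your name."
    return "Noted."

class AgentWithMemory:
    """Keeps a transcript and prepends it to every new prompt."""
    def __init__(self):
        self.history = []

    def ask(self, message: str) -> str:
        prompt = "\n".join(self.history + [f"User: {message}"])
        reply = stateless_model(prompt)
        # Store both sides of the exchange so later prompts include them.
        self.history += [f"User: {message}", f"Agent: {reply}"]
        return reply
```

Asked "What is my name?" in isolation, the model cannot answer. Asked the same question through the agent after an earlier "My name is Dana.", it can, because the agent carried the earlier exchange forward. Real agents often add summarization or external storage once the transcript grows too long to resend.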

A practical illustration can be seen in Microsoft’s Copilot agents, which reference previous user interactions and continuously improve at prioritizing relevant information for next steps. This advancement is critical for real-world applications where context can’t be reset after each request.

Interaction with Diverse Data Sources and Tools

Where LLMs are confined to their training data or recent context, AI agents can connect to external databases, APIs, and even sensors, integrating real-time information to enhance their decision-making. This capability elevates them from passive responders to proactive problem-solvers.

For instance, agents in finance can monitor market data streams and execute trades based on predefined strategies. As Nature observes, these agents act as orchestrators, autonomously managing a suite of smaller AI tools to meet end-to-end business objectives.

Ecosystem Creation: Customizable and Domain-Specific Agents

Beyond general-purpose AI, agents can be tailored to specific domains, industries, or tasks. Developers can craft agents for medical research, sales outreach, engineering design, or customer support—each integrating vertical-specific tools and workflows. This modularity unlocks an ecosystem where AI agents drive innovation in fields as diverse as healthcare diagnostics and smart logistics.

In essence, agents are set to transform AI by making it contextual, action-oriented, and perpetually adaptive. Compared to standalone LLMs, agents promise a future where digital colleagues seamlessly carry out tasks, optimize outcomes in real time, and continually learn from every interaction. This evolution is already capturing the attention of leading academic researchers and the world’s biggest technology companies, setting the stage for a new era in artificial intelligence.