Introduction to Modern Data Lakehouse Architectures

The evolution of data storage solutions has led to the development of modern data lakehouse architectures, combining elements of traditional data warehouses and data lakes. These systems offer a versatile approach to handle large volumes of structured and unstructured data efficiently, integrating analytical tools to derive insights. Here, we delve into the core components and advantages of these architectures.

Key Characteristics

- Unified Storage and Processing: Data lakehouses aim to unify the storage and processing capabilities of data lakes with the structured data management capabilities of warehouses.

- Scalability: Designed to handle large-scale data with flexible infrastructure that supports vertical and horizontal scaling.

- Schema Enforcement & Governance: Provides robust mechanisms for schema enforcement and data governance, ensuring data quality and compliance.

- Real-time Analytics: Supports real-time data analytics, enhancing the ability to make timely business decisions.

Core Components

-

Storage Layer:

– Built on distributed storage systems like Amazon S3 or Azure Data Lake Storage, allowing easy scalability and cost-effectiveness.

– Utilizes open storage formats such as Apache Parquet or ORC for efficient storage and retrieval. -

Processing Engine:

– Leverages powerful engines like Apache Spark or Trino to process batch and stream data efficiently.

– Supports SQL query execution to facilitate data analysis without deep programming expertise. -

Metadata Management:

– Managed by systems like Apache Hive Metastore or AWS Glue, helping maintain a coherent and comprehensive view of metadata across datasets. -

Optimization and Performance Tools:

– Implement systems like Apache Iceberg for managing large sets of data tables and ensuring efficient querying and transactional consistency.

Benefits of a Lakehouse

- Cost Efficiency: Combines the low-cost storage solutions of data lakes with the computing efficiency of warehouses.

- Flexibility: Accommodates different data formats and computing engines, fostering a flexible data ecosystem.

- Data Consistency: Maintains consistency through ACID transactions, making it reliable for enterprise use.

- Machine Learning and Batch-processing Support: Provides infrastructure for executing complex machine learning tasks alongside classic ETL operations.

Practical Implementations

Organizations implement hybrid tools and techniques to achieve the desired capabilities of a data lakehouse. Examples include:

- Netflix: Initially moved from a monolithic data warehouse to a data lake, eventually layering a transactional data lakehouse architecture to manage its expanding data needs.

- Databricks: Offers a commercial platform that integrates various lakehouse components, bringing advanced analytics and BI access within reach of non-technical users.

Real-World Example

A typical workflow using modern data lakehouse architecture might involve:

- Data Ingestion: Extracting data from various sources, such as transactional databases or IoT devices, and storing it in an S3 bucket using a tool like Apache NiFi.

- Data Transformation: Using dbt to clean and transform data, enabling business intelligence applications to perform analytics.

- Analysis: Leveraging Trino to run SQL queries against dataset tables stored in Apache Iceberg format.

By integrating these components and practices, businesses can achieve a holistic, efficient solution tailored to their ever-growing data analytics requirements. This flexibility and robustness make data lakehouse architectures an appealing choice across various domains, from finance to healthcare.

Setting Up Trino, dbt, and Apache Iceberg

Trino Setup

Trino, formerly known as PrestoSQL, is a powerful SQL query engine designed for large-scale analytics. Follow these steps to set up Trino in your data lakehouse architecture:

Installation

-

Download Trino:

– Visit the Trino downloads page and download the latest version.

– Extract the tarball using:bash tar -zxvf trino-server-[version].tar.gz -

Configure Trino:

– Navigate to theetcdirectory inside the extracted folder.

– Create ajvm.configfile with appropriate heap size and performance settings:bash -Xmx16G -XX:+UseG1GC -XX:+UseGCOverheadLimit -

Launch Trino:

– Start the Trino server using:bash bin/launcher start

Connectors

- Setup S3 connector for accessing data stored in Amazon S3:

ini

connector.name=s3

s3.access-key=[your-access-key]

s3.secret-key=[your-secret-key]

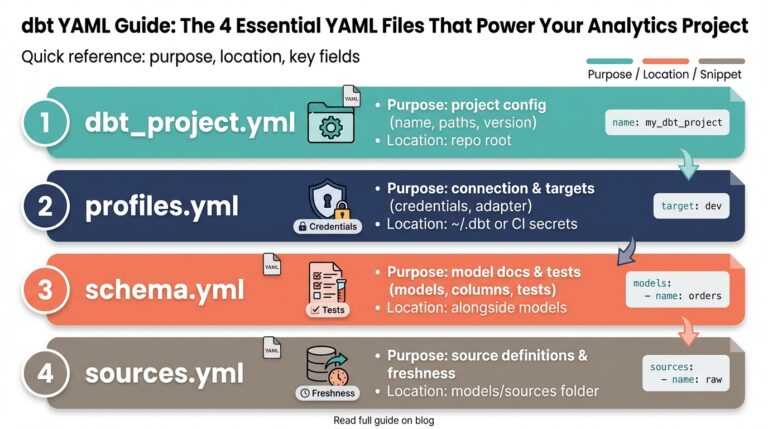

hive.metastore.uri=thrift://[metastore-host]:9083Setting Up dbt (data build tool)

dbt is a transformation tool that enables efficient SQL-based transformation workflows. Follow these steps:

Installation and Initialization

- Install dbt:

bash

pip install dbt-core dbt-trino- Initialize a dbt Project:

-

Run the following command in your working directory:

bash dbt init [project-name]

Configuration

- dbt Profile for Trino: Customize your

profiles.ymlto set up the connection.

yaml

[your-profile]:

target: dev

outputs:

dev:

type: trino

host: [hostname]

port: 8080

user: [username]

catalog: hive

schema: analyticsRunning dbt

- With your transformations defined in the

modelsdirectory, run:

bash

dbt runApache Iceberg Setup

Apache Iceberg is an open table format for huge analytic datasets. Here’s how to set it up alongside Trino and dbt:

Table Format Setup

-

Configure Hive Metastore:

– Install Hive Metastore to manage Iceberg tables.

– Update configurations to enable Iceberg support. -

Create Iceberg Tables in Trino:

– Use SQL in Trino to create and manage tables:sql CREATE TABLE hive.analytics.sample_iceberg ( id bigint, data string ) WITH ( format = 'ICEBERG' );

Integration

- Validation and Queries:

-

Use Trino’s powerful query engine to perform complex analytics on Iceberg tables:

sql SELECT * FROM hive.analytics.sample_iceberg;

By setting up Trino, dbt, and Apache Iceberg, you can build a robust, scalable, and efficient modern data lakehouse architecture, providing a seamless platform for executing queries, transformations, and managing large datasets effectively.

Implementing ELT Pipelines with Trino and dbt

Understanding ELT Pipelines

The Extract, Load, Transform (ELT) process flips the traditional Extract, Transform, Load (ETL) paradigm. By first loading data into a centralized repository before transforming it, ELT leverages the power of modern cloud-based data warehouses to execute complex transformations at scale. This approach is well-suited to environments using Trino and dbt, providing efficient data processing capabilities necessary for a lakehouse architecture.

Step-by-Step Implementation

1. Data Extraction with Trino

Trino plays a pivotal role in querying data across diverse sources. Here’s how to effectively set it up for extraction:

- Connect to Data Sources:

- Use Trino’s connectors to link with various data inputs such as relational databases, cloud storage (e.g., Amazon S3), NoSQL databases, etc.

-

Configure credentials and access parameters in Trino’s configuration files:

ini connector.name=connector-type connection-properties key1=value1 key2=value2 -

Query and Extract Data:

- Execute SQL queries to extract relevant datasets.

-

Save the queried data directly into a centralized data lake using native Trino batch export functionalities.

sql CREATE TABLE s3.output_bucket.table_name AS SELECT * FROM source_table WHERE conditions;

2. Loading Data Using Trino

With Trino, loading data into a data lake or warehouse is seamless:

- Data Lake Integration:

- Load data extracted from multiple sources into a specified bucket in your data lake, maintaining a raw data layer.

-

Set up your Trino catalog configuration to interface directly with your storage solution:

ini connector.name=hive hive.metastore.uri=thrift://[metastore-host]:9083 s3.bucket=output_bucket -

Optimization With Partitioning:

- Implement partitioning strategies to optimize query performance by enabling selective data scanning.

3. Data Transformation with dbt

After data resides in your lakehouse, dbt handles the transformation phase:

- Model Creation:

- Write SQL models in dbt that define how raw data should be transformed into an analysis-ready form.

-

Place these models in the

models/directory of your dbt project.sql SELECT id, TRIM(data) AS cleaned_data, current_timestamp AS load_time FROM {{ ref('source_table') }} WHERE condition; -

Ensure Transformation Logic Is Executable:

-

Utilize dbt’s CLI tools to run the transformations:

bash dbt run

4. Orchestration and Scheduling

Orchestration tools like Apache Airflow can schedule and manage the ETL flow:

- Define Workflow:

-

Set up a Directed Acyclic Graph (DAG) to outline the ELT tasks:

python with DAG('example_dag', schedule_interval='@daily', ...) as dag: extract_task = BashOperator( ... ) load_task = BashOperator( ... ) transform_task = BashOperator( ... ) -

Schedule Execution:

- Incrementally run tasks based on dependencies and workflows defined.

Benefits of Using Trino and dbt for ELT

- Scalability: Trino’s distributed architecture allows the handling of large volumes of data seamlessly, providing a foundation for scaling transformations through dbt.

- Flexibility: The combination enables processing a wide array of data formats, while dbt supports the modularity of transformation logic.

- Speed: Executes transformations at the database level, cutting down data movement and offering substantial performance gains.

By embracing this methodology, businesses can ensure a robust ELT setup that not only leverages the strengths of Trino for querying but also the transformation efficiencies that dbt provides, supporting a comprehensive lakehouse ecosystem.

Optimizing Data Management with Apache Iceberg

Enhancing Data Management with Apache Iceberg

Apache Iceberg is an innovative table format designed to manage data at massive scale efficiently. It offers unique capabilities that enhance data management within modern data lakehouse architectures, providing solutions to common challenges such as scalability, consistency, and schema evolution. Here’s how Apache Iceberg optimizes data management:

Key Features of Apache Iceberg

- Scalable and Efficient Processing:

-

Iceberg tables efficiently handle petabytes of data, optimizing both storage and retrieval processes. This scalability ensures high performance for both batch and streaming operations.

-

Schema Evolution and Partitioning:

- One of the standout features is its support for schema evolution without costly rewrite operations. This allows seamless modification of data schemas over time while maintaining historical data integrity.

-

It also enables advanced partitioning strategies, improving data query performance by minimizing data scanned during operations.

-

ACID Transactions:

-

Apache Iceberg provides full ACID transactional support, ensuring consistency even in high-concurrency environments. This robustness is crucial for reliable data insights and operations.

-

Time Travel and Snapshot Isolation:

- Data can be accessed using snapshots, which allows for easy rollback to previous states or querying historical data. Snapshot isolation enhances analytical capabilities by enabling consistent data views across operations.

Setting Up Apache Iceberg for Data Lakehouse Management

1. Installation and Setup

- Configure Hive Metastore:

-

Set up Apache Hive Metastore, which many Iceberg deployments use for metadata storage. Ensure compatibility by updating configuration files to support Iceberg.

ini iceberg.catalog.hive.uri=thrift://[metastore-host]:9083 iceberg.engine.hive.enabled=true -

Integration with Trino:

-

Leverage Trino’s powerful query engine to operate on Iceberg tables, configuring catalog files for seamless interaction:

ini connector.name='iceberg' catalog-type='hive'

2. Managing Tables

- Creating and Querying Tables:

-

Use SQL to create and handle Iceberg tables within your database environment. This enables straightforward management of complex data structures.

sql CREATE TABLE hive.analytics.my_table ( id VARCHAR, name STRING, timestamp TIMESTAMP ) WITH ( format = 'ICEBERG' ); -

Executing Queries:

-

Query Iceberg tables using Trino, providing robust analytics and reporting capabilities:

sql SELECT * FROM hive.analytics.my_table WHERE id = '123';

3. Optimizing Performance

- Partition Pruning:

-

Implement effective partitioning strategies to boost query performance. By defining partitions based on data access patterns, Iceberg avoids unnecessary data reads, reducing latency and resource usage.

-

Compact and Optimize:

- Regularly compact the tables to ensure optimal layout and improved read performance. Operations like compaction help maintain efficiency by organizing data and minimizing fragmentation.

4. Advanced Use-Cases

- Incremental Data Processing:

-

Iceberg supports incremental data processing by handling append-only data as well as modifications. This feature is essential in environments requiring frequent updates and data streams.

-

Collaborating with Real-Time Systems:

- Use Iceberg with streaming platforms to process real-time data, enabling up-to-the-moment insights and analyses using snapshot isolation features.

By utilizing Apache Iceberg, enterprises can significantly enhance their data management capabilities within a lakehouse environment. Its design optimizes processing, ensures data reliability through ACID transactions, and enables seamless integration with existing data tools like Trino and dbt, setting a solid foundation for scalable and dynamic data solutions.

Integrating these capabilities allows businesses to achieve effective, efficient data analysis, ultimately supporting more informed decision-making and operational efficiencies. Deploying Apache Iceberg empowers organizations to make the most of their data infrastructure, aligning with broader data strategies, and enabling cutting-edge analytical workflows.

Best Practices for Maintaining a Data Lakehouse

Data Governance and Security

To maintain a robust data lakehouse, implementing data governance and security practices is essential:

-

Define Data Policies: Establish clear data governance policies to ensure consistent data management. Outline data access controls, retention policies, and compliance guidelines tailored to industry standards (e.g., GDPR, HIPAA).

-

Role-Based Access Control (RBAC): Implement RBAC to ensure that users have appropriate access levels. Assign roles based on job functions, minimizing unnecessary access to sensitive data.

# Example of RBAC configuration in a cloud environment

roles:

- name: data_analyst

permissions:

- read: true

- write: false

- delete: false

- Data Encryption: Encrypt data at rest and in transit to protect sensitive information from unauthorized access or breaches. Use SSL/TLS for data in transit and storage encryption options provided by cloud services.

Metadata Management

Maintaining a well-organized metadata system helps in data discoverability and quality assurance:

-

Centralized Metadata Repository: Use tools like Apache Hive Metastore or AWS Glue to maintain a centralized repository of metadata, facilitating easier data cataloging and management.

-

Automated ETL Metadata Capture: Integrate tools like Apache Nifi to automatically capture metadata during ETL processes. This includes data lineage and transformation logs, enabling traceability.

-- Example of a database query to fetch metadata

SELECT * FROM metadata_catalog WHERE table_name = 'sales_data';

Performance Optimization

Enhancing performance is crucial for large-scale data processing:

-

Partitioning and Clustering: Strategically partition and cluster data to improve query performance. Use features like Apache Iceberg’s partition pruning to minimize data scans.

-

Indexing Strategies: Create appropriate indexes on frequently queried columns to speed up data retrieval. Balance index usage with storage costs and update performance.

-

Caching Solutions: Employ caching to reduce latency and increase efficiency. Implement distributed caching layers such as Redis or use cloud-based solutions offered by platforms like Databricks.

Monitoring and Maintenance

Consistently monitor the health and performance of your data lakehouse:

-

Real-Time Monitoring Tools: Use tools like Apache Superset or Grafana for real-time monitoring of data workflows. Analyze key performance metrics including query execution times and system load.

-

Scheduled Maintenance: Regularly perform maintenance tasks such as cleaning up old data, updating software, and patching security vulnerabilities. Schedule these during low-traffic periods to minimize impact.

# Example of setting up regular maintenance through cron jobs

0 2 * * 0 /path/to/maintenance-script.sh

Scalability and Evolution

Plan for future growth and adaptability by designing a scalable architecture:

-

Modular Components: Choose modular and expandable tools and frameworks (e.g., Trino, dbt). This flexibility allows swapping components with minimal disruption as needs evolve.

-

Cloud Scalability Features: Leverage auto-scaling features in cloud environments to adjust resources based on demand. This ensures that performance levels remain consistent despite fluctuating workloads.

-

Schema Evolution Support: Use formats like Apache Iceberg that support schema evolution so that changes in data structure over time do not lead to costly rewrites or data quality issues.

By adopting these practices, organizations can ensure their data lakehouses are secure, efficient, and capable of supporting long-term data strategy goals.