Introduction to Conversational AI and Its Evolution

Conversational AI has become an integral part of modern technology, fundamentally transforming the way humans interact with machines. At its core, conversational AI refers to technologies that enable machines to engage in human-like dialogues. This includes a wide array of platforms ranging from simple chatbots to more sophisticated virtual assistants.

One of the earliest attempts to create a conversational agent was ELIZA, developed in the 1960s by Joseph Weizenbaum. ELIZA simulated a psychotherapist by using basic pattern matching, which, although primitive, laid the groundwork for future AI developments.
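To make the pattern-matching idea concrete, here is a minimal sketch in Python. The rules and replies are hypothetical and far simpler than Weizenbaum’s original script; they only illustrate how a fragment of the user’s input can be captured and echoed back in a canned response.

```python
import re

# Hypothetical rules for illustration; not taken from the original ELIZA script.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]
DEFAULT_REPLY = "Please tell me more."


def eliza_reply(message: str) -> str:
    """Return the reply of the first rule whose pattern matches the message."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Reuse the captured fragment, minus trailing punctuation.
            return template.format(match.group(1).rstrip(".!?"))
    return DEFAULT_REPLY
```

With these rules, the input "I feel tired." produces "Why do you feel tired?", and any input that matches no rule falls through to the default reply.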

Over the decades, advancements in natural language processing (NLP) and machine learning (ML) have propelled conversational AI to new heights. These technologies enable machines to understand, process, and respond to human language in a more natural and effective manner. NLP allows the machine to parse human languages and extract meaningful data, which are then processed by advanced ML algorithms to generate appropriate responses. This evolution has been driven by increased computational power and the availability of large datasets, which allow AI systems to learn from vast amounts of text data.

Another significant milestone was the advent of virtual assistants like Apple’s Siri, Google Assistant, Amazon’s Alexa, and Microsoft’s Cortana. These systems utilize complex algorithms and neural networks to manage a range of tasks, including answering queries, playing music, and controlling smart home devices. This facet of AI demonstrates an evolution from static, rule-based systems to dynamic, learning-based systems that improve through interaction and data accumulation.

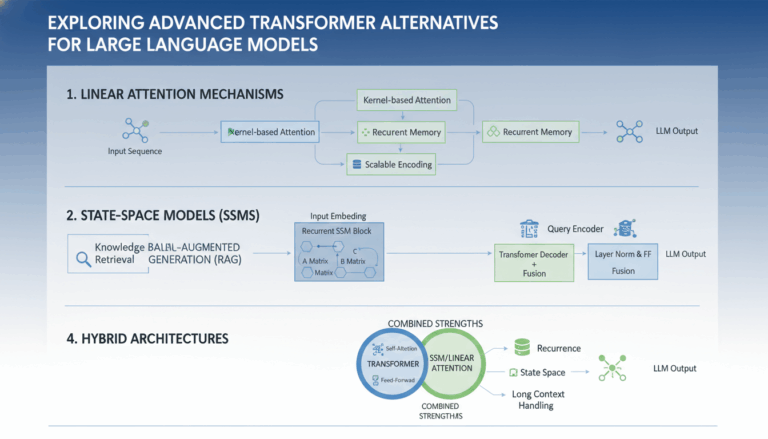

In recent years, transformer models such as OpenAI’s GPT-3 have significantly enhanced conversational capabilities. These models use attention-based deep learning architectures that are effective at capturing context and generating human-like text. Because they are trained on large, diverse text datasets, they can handle varied topics and maintain context over longer conversations.

The ongoing evolution of conversational AI is also heavily influenced by user expectations and business needs. For instance, in customer service, AI-driven chatbots are used to handle basic inquiries and route complex issues to human agents, striking a balance between automation and personalized service. Additionally, AI in healthcare is being utilized for scheduling appointments or providing basic medical advice, illustrating the versatile applications of conversational AI.

Moreover, advances in emotion recognition and sentiment analysis are beginning to imbue conversational systems with the ability to detect users’ emotions and adjust responses accordingly, making interactions more engaging and effective. This ability is pivotal in sectors like mental health, where understanding user sentiment can lead to more supportive interactions.

Overall, the trajectory of conversational AI is one of rapid growth and continual improvement, driven by technological advancements and an ever-expanding understanding of human language nuances. As AI systems evolve, they hold the potential to vastly improve efficiency and user experience across diverse domains.

Overview of the RASA Framework

The RASA Framework is an open-source platform for building engaging, contextual chatbots and voice assistants. At its core, RASA provides developers with an array of tools to implement conversational AI systems from scratch, allowing for customizability and fine-tuning that suit specific use cases. It overcomes the traditional bottlenecks faced by other frameworks, such as limited customization options and dependency on external APIs, making it particularly attractive for projects where privacy and data control are paramount.

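RASA’s functionality divides into two main components, Rasa NLU and Rasa Core. They began as separate packages and have shipped together in the single rasa package since Rasa 1.0, but each still plays a distinct role in crafting sophisticated conversational agents.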

Rasa NLU

Rasa NLU (Natural Language Understanding) is responsible for the classification of intents and extraction of entities from a user’s input. It functions by breaking down user input into comprehensible elements, thus allowing the system to infer the intention behind the users’ statements. This is achieved through various NLP techniques such as tokenization, stemming, and the application of pre-trained models like spaCy or transformers. Rasa NLU supports multiple languages, which adds flexibility for global applications.

Developers can augment Rasa NLU with custom pipeline configurations, tailored to specific datasets. This is crucial for projects requiring unique processing needs, where predefined setups may not suffice. For instance, integrating word embeddings or using specific machine learning models for language processing can significantly enhance understanding capabilities in niche domains.

Rasa Core

Rasa Core handles the dialog management aspect, dictating how the system responds based on the context and historical interactions. It employs machine learning to predict the best next action to take during a conversation. This dynamic dialog management is powered by policies that can be customized to fit various business logic requirements. Developers can employ pre-defined policies provided by RASA, such as the Memoization Policy or the Rule Policy, or configure their own policies to manipulate how the conversation flows.

A standout feature of Rasa Core is its support for stories and interactive learning. Stories are training data that illustrate conversations between users and the assistant, depicting sequences of user inputs and corresponding actions. By training Rasa Core on these stories, developers can enable their assistants to handle long-term context and manage complex conversation scenarios more effectively.
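One way to picture how stories inform next-action prediction is a lookup keyed on recent conversation history. The sketch below is a toy illustration of that memoization idea, not RASA’s implementation; the intent and action names, the event format, and the max_history default are all assumptions made for the example.

```python
from typing import Dict, List, Optional, Tuple

# Each story alternates user intents and assistant actions (hypothetical names).
STORIES: List[List[str]] = [
    ["intent:greet", "action:utter_greet",
     "intent:check_balance", "action:utter_balance"],
    ["intent:greet", "action:utter_greet",
     "intent:goodbye", "action:utter_goodbye"],
]


def build_lookup(stories: List[List[str]],
                 max_history: int = 3) -> Dict[Tuple[str, ...], str]:
    """Map the events preceding each action to that action."""
    lookup: Dict[Tuple[str, ...], str] = {}
    for story in stories:
        for i, event in enumerate(story):
            if event.startswith("action:"):
                history = tuple(story[max(0, i - max_history):i])
                lookup[history] = event
    return lookup


def predict_next_action(lookup: Dict[Tuple[str, ...], str],
                        history: List[str],
                        max_history: int = 3) -> Optional[str]:
    """Return the memorized action for this history, or None if unseen."""
    return lookup.get(tuple(history[-max_history:]))
```

A history that appears in the stories yields the memorized action, while an unseen history returns None. That gap is where a learned policy would need to generalize.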

Integration and Deployment

A notable advantage of the RASA Framework is its ease of integration with various platforms and channels, like Slack, Facebook Messenger, and custom web interfaces. Additionally, RASA allows for seamless connection with back-end services, databases, and APIs, creating a cohesive flow of data and responses tailored to meet user needs.

Deployment flexibility is another aspect where RASA shines. Since it’s open source, developers can host RASA on their own servers, enabling complete control over the deployment and data handling processes. This independence from third-party services not only improves privacy but often reduces costs, as self-hosted solutions can negate the need for costly API usage.

Community and Ecosystem

The RASA community is robust and active, offering extensive documentation, tutorials, and forums where developers can seek help or share insights. This community-driven model accelerates the growth of the framework by constantly introducing fresh ideas, plugins, and solutions to common problems encountered in building conversational agents.

RASA also offers an enterprise version with additional support and features for businesses looking to scale their conversational AI solutions, including advanced analytics, annotation tools, and integration options suited for large-scale deployments.

The robustness and adaptability of the RASA Framework render it a powerful tool for building advanced AI-driven conversational agents, suitable for a variety of contexts and industries. Its open-source nature encourages innovation and customization, making it a leader in the conversational AI framework landscape.

Setting Up Your First RASA Project

To embark on your journey with RASA, the first step is to set up your project environment and framework. This process involves several key phases to ensure everything is correctly configured to build and deploy conversational AI systems efficiently.

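Start by installing the prerequisites. RASA runs on Python, and each RASA release supports a specific range of Python versions: Python 3.6 was sufficient for older releases, while recent releases require a newer minimum, so consult the installation guide for the release you plan to use. Check your current Python version to ensure compatibility: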

python --version

If Python is not installed or needs updating, download it from the official Python website and follow the installation instructions for your operating system.

With Python ready, the next step is to set up a virtual environment, which helps manage dependencies and isolate your project:

- Install virtualenv using pip:

pip install virtualenv

- Create a new virtual environment:

virtualenv rasa-env

- Activate the virtual environment:

– On Windows:

.\rasa-env\Scripts\activate

– On macOS/Linux:

source rasa-env/bin/activate

Once the virtual environment is activated, proceed to install RASA. Use the following command:

pip install rasa

After the installation process completes, confirm that RASA is set up correctly by checking its version:

rasa --version

With your environment prepared, you can now initialize a new RASA project. This process involves creating the initial project files that RASA needs to start building your conversational AI:

rasa init

This command performs several key actions:

– Project Structure Creation: RASA initializes the project directory with essential files such as config.yml, domain.yml, and data directories.

– Sample Bot Generation: It provides a sample bot, which is a simple assistant containing example intents and a basic conversation flow. This sample serves as a template and a learning resource to build your chatbot.

– Interactive Training Prompt: You’ll be prompted to train the initial model, allowing you to interact with your sample bot and understand how RASA processes and manages dialogues.

After the initial setup, you can explore the directory structure:

– config.yml: Contains pipeline configurations and policies.

– domain.yml: Defines intents, entities, slots, responses, etc.

– data/nlu.yml and data/stories.yml: Hold training data for intents and story flows respectively.
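As a quick sanity check before editing anything, a small script can confirm that the files listed above exist. This is a minimal sketch: the helper name is hypothetical, and it checks only the files named in this section, not everything rasa init may create.

```python
from pathlib import Path
from typing import List

# Files named in the list above; other generated files are not checked.
EXPECTED_FILES = ["config.yml", "domain.yml", "data/nlu.yml", "data/stories.yml"]


def missing_project_files(project_dir: str) -> List[str]:
    """Return the expected files that are absent from project_dir."""
    root = Path(project_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]
```

Calling missing_project_files(".") from the project root should return an empty list when the initial setup is complete.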

Before you start modifying files for your specific use case, it is essential to test the initial setup:

Load the trained model and chat with your bot from the command line:

rasa shell

This launches an interactive chat session where you can communicate with the sample bot, examining how it handles user inputs.

To begin customizing your assistant, acquaint yourself with RASA’s documentation and the architecture of declarative files. Tailor the domain.yml and add your intents and responses in data/nlu.yml to align the bot with your application’s needs.

Throughout development, run rasa train after changing your training data, domain, or configuration so that the model reflects the update. Also consider using a version control system such as Git to track changes and facilitate collaboration.

In summary, setting up your first RASA project means preparing your environment and then initializing a sample project. Use the generated configuration files and training data as a foundation, and customize them to suit the requirements of your conversational AI project.

Building and Training a Chatbot with RASA

Building a chatbot using RASA involves several stages, each critical to developing an efficient and responsive conversational AI. The process starts by defining the problem you want your chatbot to solve, and setting specific user scenarios. This clarity guides the subsequent steps, ensuring the chatbot meets user expectations and interacts seamlessly.

Start by identifying and outlining user intents. Intents represent the possible purposes behind user messages, such as asking for information, placing an order, or getting support. For example, a banking chatbot might include intents like checking account balances, transferring money, or finding nearby ATMs. Carefully craft these intent categories based on the expected interactions for effective dialog management.

Next, gather and prepare training data for Natural Language Understanding (NLU). Compile examples of each intent — these example utterances train the model to recognize user queries accurately. Consider augmenting these with synonyms or slight variations to enhance recognition accuracy. Store them in a structured format within the data/nlu.yml file, clearly associating each example with its respective intent.
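To show what that structured format can look like, the sketch below renders a few example utterances into the YAML layout used for NLU training data. The intent names and utterances are hypothetical, and the version string is an assumption that should match the training data format of your installed RASA release.

```python
from typing import Dict, List


def render_nlu_yaml(intents: Dict[str, List[str]], version: str = "3.1") -> str:
    """Render intents and their example utterances as NLU training data."""
    lines = [f'version: "{version}"', "nlu:"]
    for intent, examples in intents.items():
        lines.append(f"- intent: {intent}")
        lines.append("  examples: |")
        # Each utterance becomes one list item inside the block scalar.
        lines.extend(f"    - {example}" for example in examples)
    return "\n".join(lines) + "\n"


# Hypothetical banking intents, following the example in the text.
TRAINING_EXAMPLES = {
    "check_balance": ["what is my balance", "how much money is in my account"],
    "find_atm": ["where is the nearest ATM", "find an ATM near me"],
}
```

The string returned by render_nlu_yaml(TRAINING_EXAMPLES) can be written to data/nlu.yml, although for a handful of intents editing the file by hand is usually simpler.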

Entities are the next consideration and involve extracting relevant data from user inputs, like dates for an appointment scheduler or product names in an e-commerce chatbot. Define these entities within your training data, guiding RASA in extracting necessary information during interactions. Custom entities can be crafted using regex patterns or by implementing a custom component if complex extraction logic is needed.
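The idea behind regex-based entity extraction can be sketched in plain Python. In a RASA project the patterns would be declared in the training data rather than in code, so treat this only as an illustration: the date pattern is hypothetical, and the dictionary keys mirror the entity, value, start, and end fields commonly seen in RASA’s parsed output, which is an assumption here.

```python
import re
from typing import Any, Dict, List

# Hypothetical pattern: ISO-style dates such as 2024-05-17.
ENTITY_PATTERNS = {"date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b")}


def extract_entities(text: str) -> List[Dict[str, Any]]:
    """Return every pattern match with its entity name and character span."""
    entities: List[Dict[str, Any]] = []
    for name, pattern in ENTITY_PATTERNS.items():
        for match in pattern.finditer(text):
            entities.append({
                "entity": name,
                "value": match.group(0),
                "start": match.start(),
                "end": match.end(),
            })
    return entities
```

For the message "Book me in on 2024-05-17 please", this yields a single date entity whose span covers exactly the matched characters.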

Configure the NLU pipeline in config.yml. This file dictates how RASA processes and understands language inputs. Choose components that best match your project’s requirements — options include pre-trained models like BERT for advanced understanding or simple tokenizers for lightweight applications. Tweak these settings to balance between performance and computational efficiency.

Dialog management is governed by stories and rules, which you define next. Stories are sequences representing user interactions, detailing how the system should react to certain inputs. Store these in the data/stories.yml file. Simulate potential real-life exchanges between users and the chatbot to capture a broad spectrum of interactions.

Rules, defined in the rules.yml file, handle static conversation patterns crucial for establishing deterministic responses, such as greeting users or handling fallback scenarios when user intent is unclear. Understanding when to utilize rules versus stories ensures the chatbot can manage varied conversational flows effectively.
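The fallback case can be pictured as a confidence check on the predicted intents. The following is a minimal sketch, assuming the NLU step yields a list of intents with confidence scores; the 0.3 threshold and the nlu_fallback name are illustrative assumptions to verify against the documentation for your RASA release.

```python
from typing import Any, Dict, List

FALLBACK_INTENT = "nlu_fallback"  # assumed name for the fallback intent
CONFIDENCE_THRESHOLD = 0.3        # illustrative value; tune for your data


def resolve_intent(ranking: List[Dict[str, Any]],
                   threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return the top intent, or the fallback intent if confidence is too low."""
    if not ranking:
        return FALLBACK_INTENT
    best = max(ranking, key=lambda candidate: candidate["confidence"])
    return best["name"] if best["confidence"] >= threshold else FALLBACK_INTENT
```

A rule can then map the fallback intent to a fixed response, such as asking the user to rephrase, which keeps the behavior deterministic.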

Training your chatbot involves running the command rasa train, which compiles the intent and story data to create models for Rasa NLU and Rasa Core. This process might take some time, especially with large datasets or complex pipelines, yet it is a crucial step toward optimizing chatbot performance.

Testing is an iterative part of building and training. Utilize rasa shell to engage with your chatbot in a simulated environment, essential for diagnosing unexpected behavior and validating that intents and entities are recognized correctly. Fine-tune intents and stories to better align responses with user expectations.

Deployment finalizes your RASA chatbot. Leverage RASA’s range of channel connectors to integrate with platforms like Slack or Facebook Messenger. Customize these connections in credentials.yml, ensuring authentication and data handling align with platform requirements.

Monitoring and continuous improvement are vital post-deployment stages. Use analytic tools provided by RASA Enterprise, or third-party solutions, to analyze interaction data, assessing performance and making iterative adjustments based on user feedback. Regular updates and retraining with new data enhance the chatbot’s accuracy and scope.

Building a RASA chatbot is a multi-faceted task that melds thorough preparation, imaginative design, and rigorous training. Each phase requires meticulous attention to user needs and an understanding of RASA’s configuration capabilities to develop a responsive, intuitive conversational agent.

Integrating RASA with Messaging Platforms

Integrating RASA with messaging platforms is a critical phase in deploying a chatbot to interact with users on popular messaging services. This integration enhances accessibility and allows the chatbot to reach users where they already communicate daily. The process involves configuring connectors that enable your RASA assistant to interact with these platforms effectively.

To begin the integration, it’s essential to first prepare your RASA project and ensure that your chatbot is functioning correctly locally. Once confirmed, proceed to configure the specific messaging platform you wish to integrate with, using the following steps as a general guide:

- Understand Platform Requirements: Each messaging service has its own API and permissions structure. Familiarize yourself with the requirements and limitations specific to platforms like Facebook Messenger, Slack, or WhatsApp. This often includes setting up developer accounts and creating an application or bot within the platform’s ecosystem.

- Set up Credentials: RASA uses the credentials.yml file to manage authentication with external services. In this file, you will specify the necessary access tokens, API keys, or webhooks required to connect your RASA bot to the chosen messaging platform.

For example, in the case of Facebook Messenger, you’d need to set a verify token and a page access token that you obtain from Facebook’s developer portal. Similarly, Slack integration would require a bot token that you get after setting up your bot application in Slack’s API dashboard.

- Configure Webhooks: Messaging platforms communicate with RASA through HTTP webhooks. Set up these webhooks to handle incoming messages and events. When configuring your webhook, you’ll need to specify your RASA server’s URL that should be internet-accessible.

For instance, with Facebook Messenger, you create a webhook in their developer settings, pointing it to your RASA server and subscribing to events like messages or postbacks. Ensure your RASA server can receive webhook requests from the internet, often requiring hosting on a public server or using services like ngrok temporarily during development to expose your local server.

- Modify RASA’s Endpoint Configuration: In the endpoints.yml file, configure the services your RASA assistant connects to, such as a custom action server or tracker store. If you are not using one of the pre-configured channel integrations, you can expose the assistant through RASA’s generic REST channel instead.

- Testing and Validation: Once integrated, rigorously test the interaction between RASA and the messaging platform. Check for message delivery, format correctness, and response accuracy. Tools like Postman can simulate requests to the webhook URL to ensure configurations are correct.

- Monitoring and Logging: Employ logging to monitor your bot’s interactions post-deployment. RASA supports integration with various logging tools, which can provide insights into user interactions and help identify issues.

- Iterate and Adjust: Based on user interactions and feedback, continuously adjust your integration setup and dialog management policies to improve performance.
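The testing step above can also be scripted instead of using a tool like Postman. The sketch below assumes a RASA server running locally on port 5005 with the generic REST channel enabled in credentials.yml; the URL, sender ID, and helper names are assumptions for illustration, and platform-specific channels expect their own payload formats.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Assumed default: local server, REST channel enabled in credentials.yml.
REST_WEBHOOK_URL = "http://localhost:5005/webhooks/rest/webhook"


def build_rest_request(sender_id: str, message: str,
                       url: str = REST_WEBHOOK_URL) -> urllib.request.Request:
    """Build the POST request for one user message."""
    payload = json.dumps({"sender": sender_id, "message": message}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_message(sender_id: str, message: str,
                 url: str = REST_WEBHOOK_URL,
                 timeout: float = 10.0) -> List[Dict[str, Any]]:
    """Send the message and return the bot's replies as parsed JSON."""
    request = build_rest_request(sender_id, message, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server running, send_message("test_user", "hello") should return a list of reply objects that you can compare against the responses you expect. Keeping request construction separate from sending makes the payload easy to inspect without a live server.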

While this overview provides a general framework, the detailed steps vary by platform, so consult each platform’s current documentation to stay aligned with changes to its API. Integrating RASA with messaging platforms not only extends your bot’s reach but also enriches user experience by providing seamless interaction across popular communication channels.