Introduction to Self-Evolving Machine Learning Models

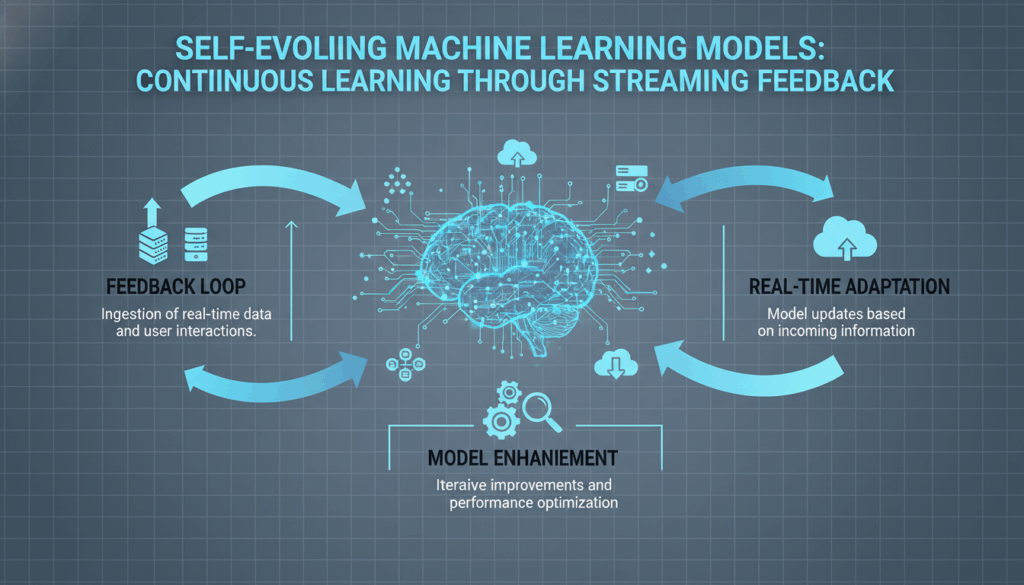

The concept of self-evolving machine learning models represents a significant step forward in the field of artificial intelligence (AI). Traditional machine learning models are often static, requiring extensive and periodic retraining with new datasets to maintain their effectiveness. This retraining can be resource-intensive and time-consuming. In contrast, self-evolving machine learning models aim to achieve continuous learning from data streams, adapting in real-time to new information without requiring significant human intervention.

At the core of these models is the ability to learn incrementally from streaming data—a process often referred to as online learning. Unlike batch learning, where models are trained on a fixed dataset, online learning techniques allow models to update themselves continuously as new data arrives. This adaptability is crucial in dynamic environments where data patterns can shift quickly.

To illustrate, consider a self-evolving model deployed in a financial trading system. Such a model would receive a constant stream of data, including asset prices, market indices, and trading volumes. As these inputs change, the model updates its predictive strategies as each new observation arrives instead of waiting for a scheduled retraining cycle. This continuous feedback loop helps the model track volatile market trends more closely, which may improve investment decisions relative to a static model trained on stale data.

The mechanisms enabling self-evolving models include optimization algorithms such as variants of stochastic gradient descent (SGD), which update the model’s parameters incrementally. These algorithms process data one instance at a time or in small mini-batches, which keeps each update cheap. Averaging gradients over a mini-batch also reduces the variance that noisy individual examples introduce.
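As a rough illustration of the incremental update described above, the following sketch trains a linear model one example at a time with plain SGD. The class name `OnlineLinearRegressor`, the `partial_fit` method, and the `synthetic_stream` generator are hypothetical names chosen for this example, and the data is simulated rather than drawn from any real system.

```python
import random


class OnlineLinearRegressor:
    """Linear model trained one example at a time with plain SGD."""

    def __init__(self, n_features, learning_rate=0.01):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return sum(w * xi for w, xi in zip(self.weights, x)) + self.bias

    def partial_fit(self, x, y):
        """Update parameters from a single (x, y) pair; return the pre-update error."""
        error = self.predict(x) - y
        # Gradient of 0.5 * error**2 with respect to each parameter.
        for i, xi in enumerate(x):
            self.weights[i] -= self.learning_rate * error * xi
        self.bias -= self.learning_rate * error
        return error


def synthetic_stream(n, seed=0):
    """Hypothetical data source: y = 2*x0 - 3*x1 + 1 plus small Gaussian noise."""
    rng = random.Random(seed)
    for _ in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        y = 2.0 * x[0] - 3.0 * x[1] + 1.0 + rng.gauss(0, 0.05)
        yield x, y


model = OnlineLinearRegressor(n_features=2, learning_rate=0.05)
for x, y in synthetic_stream(5000):
    model.partial_fit(x, y)  # each example is seen once, then discarded

print(model.weights, model.bias)
```

Because every example is used once and then discarded, memory use stays constant no matter how long the stream runs. The learned parameters should end up close to the coefficients used to generate the data.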

Moreover, self-evolving models incorporate concepts from reinforcement learning. In these scenarios, the model continuously evaluates its actions based on the rewards or penalties received, allowing it to refine its behavior. For instance, a self-driving car might use such models to adjust its driving strategies as it navigates different traffic conditions, learning from each successful maneuver or correction.

Model evaluation is another critical component of self-evolving systems. Unlike traditional models evaluated during a separate testing phase, self-evolving models must be assessed in an ongoing manner. Techniques such as rolling window evaluation or adaptive validation leverage real-time feedback mechanisms to continually measure performance. This allows developers to detect and rectify any degradation in model accuracy or effectiveness promptly.
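One common way to realize rolling window evaluation on a stream is the test-then-train (prequential) pattern: each example is first used to score the model and only afterwards used to update it. The sketch below assumes a model exposing hypothetical `predict` and `learn` methods; `MajorityClassModel` is a toy stand-in rather than a recommended learner.

```python
from collections import deque


class RollingAccuracy:
    """Accuracy over the most recent `window` predictions."""

    def __init__(self, window=100):
        self.outcomes = deque(maxlen=window)

    def update(self, y_true, y_pred):
        self.outcomes.append(1 if y_true == y_pred else 0)

    def value(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0


class MajorityClassModel:
    """Toy online learner: predicts the label seen most often so far."""

    def __init__(self):
        self.counts = {}

    def predict(self, x):
        if not self.counts:
            return None
        return max(self.counts, key=self.counts.get)

    def learn(self, x, y):
        self.counts[y] = self.counts.get(y, 0) + 1


def prequential_evaluate(model, stream, window=100):
    """Score each example before training on it; return the rolling accuracy trace."""
    metric = RollingAccuracy(window)
    history = []
    for x, y in stream:
        metric.update(y, model.predict(x))  # test first
        model.learn(x, y)                   # then train
        history.append(metric.value())
    return history


# Simulated stream whose label distribution shifts after 50 examples.
stream = [(None, "a")] * 50 + [(None, "b")] * 200
history = prequential_evaluate(MajorityClassModel(), stream, window=20)
print(history[49], history[100], history[-1])
```

The rolling accuracy drops sharply after the shift and recovers once the model has seen enough of the new label. A cumulative accuracy figure would smooth over that dip.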

The integration of self-evolving machine learning models into various sectors can significantly enhance decision-making processes. For instance, in healthcare, these models can predict medical conditions by continuously assimilating data from patient records and emerging health trends. Their agility in adapting to new data helps predictions remain accurate and relevant over time.

In conclusion, the approach to developing self-evolving machine learning models involves a combination of continuous data assimilation, online learning algorithms, and ongoing performance evaluation. As these models become integral to operations across diverse fields, they promise to revolutionize how we perceive and leverage AI in solving real-world challenges.

Understanding Continuous Learning and Streaming Feedback

Continuous learning in the context of machine learning refers to the capability of models to adapt and improve their performance dynamically as new data points are introduced over time. This process is distinct from traditional learning approaches, where models are trained with a static dataset and require scheduled retraining to update their knowledge base. Continuous learning relies heavily on the concept of incremental updates, where the model’s parameters are subtly adjusted with each new data input, allowing it to consistently evolve without reprocessing the entire dataset from scratch.

One of the main advantages of continuous learning is its ability to handle non-stationary environments gracefully. For instance, consider a news aggregation platform that personalizes content for users based on reading preferences and interactions. As users’ interests evolve, a continuously learning model can rapidly incorporate these shifts in behavior, so that content recommendations stay relevant and engaging. This adaptability comes from mechanisms designed to absorb new patterns in user activity as they emerge, without waiting for a complete retraining cycle.

In tandem with continuous learning, streaming feedback plays a crucial role. Streaming feedback pertains to the ongoing influx of data that informs the model’s learning process. It encompasses real-time information such as user interactions, market changes, or environmental data that can influence the model’s behavior. This constant stream of feedback acts as the lifeline for continuous learning models, enabling them to remain responsive and up-to-date.

Consider an example of a model in a customer support chatbot application. This model processes user queries while concurrently receiving feedback from resolved interactions. If a particular type of query starts trending—possibly due to a new product launch—the streaming feedback allows the chatbot to prioritize learning responses that address these queries. The model’s underlying algorithms, often built on variations of stochastic gradient descent (SGD) or reinforcement learning techniques, leverage this feedback by adjusting weights and strategies based on real-time input.

For seamless integration of continuous learning and streaming feedback, architectural considerations are crucial. Models need to be designed to handle asynchronous data streams effectively and ensure minimal latency in processing. This involves using techniques such as buffer mechanisms for data stream synchronization and implementing efficient data preprocessing pipelines that can act in real-time.
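One possible form of buffer mechanism for synchronizing an asynchronous stream is a small reorder buffer that holds events briefly and releases them in timestamp order. The sketch below is illustrative only: `ReorderBuffer` and its `max_delay` tolerance are hypothetical names, and a production system would more likely rely on the watermarking facilities of its stream-processing framework.

```python
import heapq


class ReorderBuffer:
    """Holds out-of-order events and releases them in timestamp order.

    An event is released once its timestamp is no later than the newest
    timestamp seen minus `max_delay` (the assumed tolerance for late arrivals).
    """

    def __init__(self, max_delay):
        self.max_delay = max_delay
        self._heap = []
        self._newest = float("-inf")
        self._counter = 0  # tie-breaker so payloads are never compared

    def push(self, timestamp, payload):
        """Add one event and return any events that are now safe to release."""
        self._newest = max(self._newest, timestamp)
        heapq.heappush(self._heap, (timestamp, self._counter, payload))
        self._counter += 1
        return self._drain(self._newest - self.max_delay)

    def flush(self):
        """Release everything still buffered, for example at shutdown."""
        return self._drain(float("inf"))

    def _drain(self, watermark):
        ready = []
        while self._heap and self._heap[0][0] <= watermark:
            timestamp, _, payload = heapq.heappop(self._heap)
            ready.append((timestamp, payload))
        return ready


buffer = ReorderBuffer(max_delay=2)
arrivals = [(1, "a"), (3, "c"), (2, "b"), (6, "f"), (5, "e"), (4, "d")]
released = []
for timestamp, payload in arrivals:
    released.extend(buffer.push(timestamp, payload))
released.extend(buffer.flush())
print(released)
```

The trade-off is latency against ordering. A larger `max_delay` tolerates later arrivals but delays every event, and an event that arrives later than the tolerance allows would still be released out of order.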

Moreover, continuous monitoring and evaluation are essential to identify any performance drifts or biases in the models. By employing techniques like rolling window evaluation, developers can maintain oversight on model accuracy and initiate corrective measures as needed. This form of evaluation ensures that feedback loops remain beneficial, continuously fine-tuning the model’s learning trajectory.

In conclusion, the interplay between continuous learning and streaming feedback is foundational in developing agile, self-evolving machine learning systems. By ensuring that models continuously assimilate new information and promptly respond to feedback, organizations can enhance decision-making processes, maintain relevance, and address challenges dynamically across various industries.

Implementing Self-Adaptive Systems in Non-Stationary Environments

Implementing self-adaptive systems in non-stationary environments requires strategic design and robust adaptation mechanisms. These systems must dynamically adjust their behavior to maintain optimal functioning despite ever-changing circumstances. The key to achieving this lies in developing models that are not only capable of continuous learning but are also engineered to react to variations in data distribution, user behavior, or environmental factors in real-time.

To begin with, it is crucial to establish a comprehensive understanding of the specific non-stationary environment in which the system will operate. This involves thorough data analysis to identify patterns and potential sources of variability. For instance, in the context of a smart city application managing traffic flow, the system must account for various unpredictable factors such as weather conditions, public events, and construction activities, all of which can affect traffic patterns significantly.

Designing a self-adaptive system involves selecting suitable algorithms that can handle the incremental updates necessitated by streaming data. Algorithms like adaptive gradient methods, online versions of support vector machines, and neural networks with recurrent architectures are particularly effective. These algorithms allow the system to adjust its parameters with each new data input, which limits the impact of drift, a situation where the model’s predictions become less aligned with reality because the underlying data patterns have changed.

Integrating reinforcement learning techniques further enhances the adaptability of these systems. In reinforcement learning, the model learns policies that maximize rewards based on direct interactions with the environment. By employing these techniques, the system can continuously refine its decisions and actions, prioritizing strategies that yield the best results under current conditions. An example could be an energy management system in a smart grid that modulates electricity distribution based on real-time consumption data and network load.
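The reward-driven refinement described above can be sketched with an epsilon-greedy bandit, one of the simplest reinforcement learning settings. This is a toy simulation under stated assumptions, not a model of a real smart grid: the two actions stand for two hypothetical control strategies, the reward values are invented, and `EpsilonGreedyAgent` and `run_simulation` are names introduced for illustration.

```python
import random


class EpsilonGreedyAgent:
    """Keeps a running value estimate per action and mostly picks the best one."""

    def __init__(self, n_actions, epsilon=0.1, step_size=0.1, seed=0):
        self.values = [0.0] * n_actions
        self.epsilon = epsilon
        self.step_size = step_size
        self.rng = random.Random(seed)

    def select_action(self):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))  # explore
        return max(range(len(self.values)), key=lambda a: self.values[a])  # exploit

    def update(self, action, reward):
        # A constant step size weights recent rewards more heavily, which lets
        # the estimates follow an environment whose rewards change over time.
        self.values[action] += self.step_size * (reward - self.values[action])


def run_simulation(steps=2000, switch_at=1000, seed=0):
    """Simulated environment in which the better action changes at `switch_at`."""
    env_rng = random.Random(seed)
    agent = EpsilonGreedyAgent(n_actions=2, seed=seed + 1)
    for t in range(steps):
        means = [0.2, 0.8] if t < switch_at else [0.8, 0.2]
        action = agent.select_action()
        reward = means[action] + env_rng.gauss(0, 0.1)
        agent.update(action, reward)
    return agent


agent = run_simulation()
print(agent.values)
```

The occasional exploratory action is what lets the agent notice that the previously worse strategy has become the better one. A purely greedy agent could stay with the outdated choice indefinitely.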

To support these algorithms, constructing an infrastructural framework that can efficiently process and store data is important. Implementing microservices architectures or leveraging cloud-based solutions can ensure scalability and flexibility. These platforms should support real-time data integration, enabling the system to ingest large volumes of data with minimal latency.

Moreover, it is essential to incorporate mechanisms for anomaly detection and error correction. Systems should be equipped with diagnostic tools to identify and address model weaknesses or failures quickly. This could involve setting up automated feedback loops where anomalous patterns trigger refinement processes. For example, in a financial forecasting application, the system may need to recognize when predictive accuracy diminishes due to unforeseen economic changes and adjust its models accordingly.
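One way to automate the trigger for such a feedback loop is a change detector that watches the model's error stream. The sketch below uses the Page-Hinkley test, chosen here as an example rather than something the text prescribes. The `delta` and `threshold` values are illustrative, and the error sequence is simulated.

```python
class PageHinkley:
    """Page-Hinkley test for detecting an upward shift in the mean of a stream."""

    def __init__(self, delta=0.005, threshold=5.0):
        self.delta = delta          # tolerated magnitude of change
        self.threshold = threshold  # alarm level for the test statistic
        self.n = 0
        self.mean = 0.0
        self.cumulative = 0.0
        self.minimum = 0.0

    def update(self, value):
        """Feed one observation; return True if a shift is signalled."""
        self.n += 1
        self.mean += (value - self.mean) / self.n
        # Accumulate deviations from the running mean, minus the tolerance.
        self.cumulative += value - self.mean - self.delta
        self.minimum = min(self.minimum, self.cumulative)
        return self.cumulative - self.minimum > self.threshold


# Simulated absolute prediction errors: stable at first, then a sudden jump.
errors = [0.1] * 200 + [1.0] * 50
detector = PageHinkley(delta=0.005, threshold=5.0)
alarms = [i for i, e in enumerate(errors) if detector.update(e)]
first_alarm = alarms[0] if alarms else None
print(first_alarm)
```

In a real pipeline, the first alarm would typically trigger a refinement step such as resetting the detector and retraining on recent data. As written, this variant only signals increases in error, which is usually the direction of interest.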

Continuous evaluation is another critical aspect, ensuring the system’s decisions and adaptations remain valid and effective. Implement techniques such as rolling window evaluation to constantly assess performance metrics and adjust parameters as needed. This involves continuously recalculating predictions using the most recent data and comparing outcomes against historical benchmarks to identify potential degradations or improvements in performance.

Finally, user engagement mechanisms that provide immediate feedback can significantly enhance system adaptability. Collecting user feedback in real-time helps the model to immediately recognize changes in user behavior or preferences. In a personalized recommendation system, for example, user interactions with suggested content can serve as valuable feedback, guiding the system to fine-tune its recommendations.

These strategies collectively enable the deployment of self-adaptive systems that thrive in non-stationary environments, maximizing operational efficiency and ensuring continuous relevance across various dynamic contexts.

Techniques for Real-Time Model Adaptation and Error Compensation

Real-time model adaptation and error compensation are crucial components in maintaining the efficacy of self-evolving machine learning systems. As these models ingest streaming data, they confront continuously shifting trends and anomalies, requiring sophisticated strategies to ensure ongoing accuracy and reliability.

A primary technique involved in real-time model adaptation is online learning. This approach allows models to adjust their parameters incrementally as new data arrives. Unlike traditional batch learning, which processes fixed datasets, online learning handles data instance by instance, which makes it well suited to adapting to real-time changes. Algorithms based on stochastic gradient descent (SGD) or adaptive variants such as AdaGrad and Adam update weights incrementally as data flows in.

Kalman filters are another essential technique for real-time adaptation, particularly in systems requiring precise time-series predictions. These recursive algorithms estimate the state of a dynamic system from a series of incomplete and noisy measurements. In applications such as autonomous vehicles or industrial automation, Kalman filters enable the smooth tracking and adjustment of system states, minimizing prediction errors by continuously correcting discrepancies.
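To make the recursive predict-and-correct cycle concrete, here is a minimal one-dimensional Kalman filter. It assumes the hidden state follows a random walk and is observed directly with Gaussian noise. The variance values and the simulated sensor readings are illustrative assumptions; real applications usually track a multi-dimensional state with matrix-valued equations.

```python
import random


class ScalarKalmanFilter:
    """Kalman filter for a scalar state assumed to follow a random walk."""

    def __init__(self, initial_estimate, initial_variance,
                 process_variance, measurement_variance):
        self.x = initial_estimate      # current state estimate
        self.p = initial_variance      # variance (uncertainty) of the estimate
        self.q = process_variance      # how much the true state may wander per step
        self.r = measurement_variance  # noise variance of each measurement

    def update(self, measurement):
        # Predict: the random-walk model keeps the estimate; uncertainty grows.
        p_pred = self.p + self.q
        # Correct: blend prediction and measurement using the Kalman gain.
        gain = p_pred / (p_pred + self.r)
        self.x = self.x + gain * (measurement - self.x)
        self.p = (1.0 - gain) * p_pred
        return self.x


rng = random.Random(42)
true_value = 10.0
kf = ScalarKalmanFilter(initial_estimate=0.0, initial_variance=100.0,
                        process_variance=1e-4, measurement_variance=1.0)
for _ in range(200):
    estimate = kf.update(true_value + rng.gauss(0, 1.0))

print(estimate, kf.p)
```

The gain starts near 1, so the filter trusts the first measurements almost completely. It then shrinks as the estimate becomes more certain, which is what smooths out measurement noise.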

Incorporating reinforcement learning further enhances the adaptability of real-time systems. By defining a reward system aligned with desired outcomes, models can self-optimize through trial and error. For example, a model managing energy distribution in a smart grid can learn to adjust its strategies based on consumption patterns, thus reducing wastage and enhancing efficiency.

To effectively manage errors and compensate for discrepancies, anomaly detection is a vital tool. Real-time monitoring of model outputs allows quick detection of deviations from expected behavior. Techniques like statistical process control and machine learning-based anomaly detection frameworks can be used to identify and rectify outlier actions before they affect the overall system performance.
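As a small example of statistical process control applied to a stream, the sketch below keeps a running mean and standard deviation (using Welford's algorithm) and flags values outside a three-sigma band. The `ControlChart` class, the warm-up length, and the simulated readings are assumptions made for illustration.

```python
import math


class ControlChart:
    """Flags observations outside mean +/- n_sigma * std of the in-control history."""

    def __init__(self, n_sigma=3.0, warmup=30):
        self.n_sigma = n_sigma
        self.warmup = warmup  # observations collected before flagging starts
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0         # running sum of squared deviations (Welford)

    def update(self, value):
        """Return True if `value` is anomalous; otherwise fold it into the baseline."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if abs(value - self.mean) > self.n_sigma * std:
                return True  # outliers are not added to the baseline statistics
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)
        return False


# Simulated readings oscillating around 10, with one injected spike.
readings = [10.0 + 0.5 * math.sin(i) for i in range(200)]
readings[100] = 20.0

chart = ControlChart(n_sigma=3.0, warmup=30)
flagged = [i for i, value in enumerate(readings) if chart.update(value)]
print(flagged)
```

Keeping flagged values out of the baseline prevents a single spike from widening the control limits. The cost is that a genuine, lasting shift in level would keep being flagged until the chart is reset.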

Error compensation involves implementing feedback loops that promptly adjust model predictions. This is particularly significant in systems where inaccuracies can lead to substantial costs, such as financial trading platforms. Here, models continuously receive feedback in the form of market performance metrics, allowing for immediate recalibration of trading strategies based on errors identified in prior predictions.

Another critical aspect is the use of ensemble methods to enhance model resilience. By combining predictions from multiple sub-models, the system can average out individual biases and errors, leading to more robust predictions. Bagging reduces variance by training sub-models on resampled versions of the data and averaging their outputs, while boosting trains sub-models sequentially, with each one concentrating on the instances its predecessors handled poorly.
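In a streaming setting, one simple way to combine sub-models is a weighted average whose weights shift toward the sub-models that have recently been most accurate. The sketch below uses exponentially weighted averaging with a squared-error loss, which differs from bagging and boosting and is offered only as an illustration. The class name, the `eta` rate, and the three simulated sub-models are assumptions.

```python
import math


class WeightedEnsemble:
    """Combines sub-model predictions; weights shrink for models with larger error."""

    def __init__(self, n_models, eta=0.5):
        self.weights = [1.0 / n_models] * n_models
        self.eta = eta  # how aggressively weights react to errors

    def predict(self, predictions):
        return sum(w * p for w, p in zip(self.weights, predictions))

    def update(self, predictions, y_true):
        # Multiply each weight by exp(-eta * squared error), then renormalize.
        scaled = [w * math.exp(-self.eta * (p - y_true) ** 2)
                  for w, p in zip(self.weights, predictions)]
        total = sum(scaled)
        self.weights = [s / total for s in scaled]


ensemble = WeightedEnsemble(n_models=3)
for step in range(50):
    y_true = float(step % 5)
    # Simulated sub-models: one nearly unbiased, two with large opposite biases.
    predictions = [y_true + 0.1, y_true + 1.0, y_true - 1.0]
    ensemble.update(predictions, y_true)

combined = ensemble.predict([2.1, 3.0, 1.0])
print(ensemble.weights, combined)
```

After a few dozen updates almost all of the weight sits on the most accurate sub-model. If that model later degrades, the same rule shifts weight back toward the others.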

Moreover, implementing sliding window approaches in conjunction with these adaptive techniques ensures models only work with the most recent and relevant data chunks. This method not only enhances model agility but also mitigates risks associated with outdated information. By continually updating the dataset window, models are better equipped to handle rapidly changing environments, enabling fine-tuned and context-aware decision-making.
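The sliding window idea can be shown with a statistic that is recomputed over only the most recent observations. In this minimal sketch, `SlidingWindowMean` is a hypothetical helper; the same pattern applies when the window feeds a model refit instead of a mean.

```python
from collections import deque


class SlidingWindowMean:
    """Mean of the most recent `window_size` values, updated in constant time."""

    def __init__(self, window_size):
        self.window = deque(maxlen=window_size)
        self.total = 0.0

    def add(self, value):
        # If the window is full, the oldest value is about to be evicted.
        if len(self.window) == self.window.maxlen:
            self.total -= self.window[0]
        self.window.append(value)
        self.total += value
        return self.total / len(self.window)


estimator = SlidingWindowMean(window_size=3)
means = [estimator.add(v) for v in [1, 2, 3, 10]]
print(means)  # [1.0, 1.5, 2.0, 5.0]
```

Window size is the main design choice. A short window reacts quickly to change but gives noisier estimates, while a long window is more stable but slower to forget outdated data.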

Lastly, it is imperative to establish a strong infrastructure capable of handling high-velocity data streams. Leveraging cloud-based platforms with real-time processing capabilities supports scalability and ensures the system can handle fluctuations in data volume and velocity effectively. These systems must also integrate real-time logging and diagnostic tools to provide visibility into model performance, allowing for swift interventions when issues arise.

By employing these techniques, self-evolving machine learning models can adapt in real-time, ensuring sustained accuracy and reliability across diverse dynamic environments. These capabilities not only support innovation in AI applications but also drive significant improvements in operational efficiency and decision-making processes.

Case Studies: Applications of Self-Evolving Models in Industry

The integration of self-evolving machine learning models across various industries highlights their transformative potential and adaptive capability, particularly in environments where real-time data processing and decision-making are crucial.

Financial Sector: One such application is in the financial trading sector, where these models significantly enhance trading strategies through continuous adaptation to market dynamics. For instance, hedge funds and trading firms utilize self-evolving models to process large quantities of real-time financial data from global markets, including stock prices, interest rates, and economic indicators. By leveraging online learning techniques and reinforcement learning algorithms, these models dynamically adjust their predictions and trading strategies in response to shifts in market conditions, thereby optimizing investment returns and minimizing risks. These adaptive systems are capable of real-time anomaly detection as well, which assists in the early identification and mitigation of potential trading inaccuracies or market anomalies.

Healthcare: In healthcare, self-evolving models play a pivotal role in developing predictive analytics applications that continuously learn from patient data streams. For example, in predictive diagnostics, these models process patient data from wearable devices, electronic health records, and genetic information, adapting to evolving health trends and patient-specific data patterns. This continuous learning capability allows for timely intervention in patient care, early disease detection, and personalized treatment plans, leading to improved patient outcomes and resource management. Hospitals use these models to predict patient admissions and optimize staffing requirements, ensuring efficient allocation of medical resources.

Retail and E-commerce: The retail sector utilizes self-evolving models for dynamic pricing and personalized marketing strategies. Models analyze customer behavior, purchasing patterns, and competitor pricing in real-time, allowing retailers to adapt pricing strategies on the fly. For example, an e-commerce platform may implement a self-evolving model to adjust product prices based on real-time demand, inventory levels, and consumer trends, optimizing revenue and enhancing customer satisfaction through personalized offers. These models not only enhance pricing strategies but also improve customer segmentation for targeted advertising campaigns, increasing conversion rates and customer loyalty.

Transportation and Logistics: In logistics, self-evolving models optimize supply chain operations by adapting to variables like demand shifts, transportation routes, and delivery times. A logistics company might employ these models to process streaming data from GPS devices and traffic information systems, allowing for real-time route adjustments to reduce delivery times and logistical costs. Similarly, transport services use self-evolving algorithms to optimize scheduling and resource allocation based on passenger demand patterns, improving efficiency and service quality. For instance, ride-sharing services rely on these models to predict demand fluctuations and optimize driver dispatch, keeping passenger wait times low while maintaining operational efficiency.

Manufacturing: In the manufacturing industry, self-evolving models contribute to predictive maintenance and quality control processes. By analyzing data from sensors and IoT devices on production lines, these models predict equipment failures and identify potential defects, allowing manufacturers to schedule maintenance activities proactively and minimize downtime. The continuous learning ability of these models adjusts quality assurance processes based on production trends and defect patterns, streamlining operations and reducing waste. This leads to enhanced productivity, cost reduction, and the prevention of costly equipment failures.

These case studies exemplify the wide-ranging applicability of self-evolving models, showcasing their capacity to revolutionize how industries operate. This continuous learning approach enables businesses to respond dynamically to new information, reduce operational inefficiencies, and enhance service offerings, ultimately leading to significant competitive advantages in rapidly evolving environments.