Introduction to Natural Language Processing (NLP)

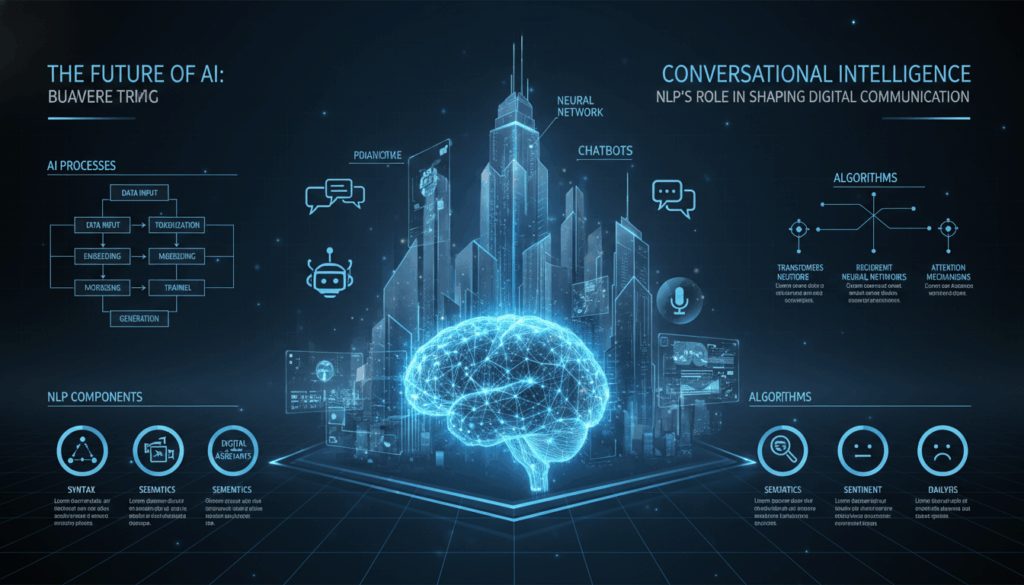

Natural Language Processing (NLP) stands as a critical pillar in the advancement of artificial intelligence, tasked with bridging the communicative gap between human language and computer understanding. This field of study and technology focuses on the interaction between computers and humans through natural language, seeking to make processes like reading, comprehension, and production of text accessible and intuitive for machines. The developments in NLP are not only pivotal for creating conversational agents but also for enhancing the overall interaction between users and machines in diverse settings.

At its core, NLP combines linguistic knowledge and machine learning techniques to equip computers with the ability to process and analyze large amounts of natural language data. Such integration allows computers to understand context, ambiguity, and even the subtle nuances present in human communication. This capability is fundamental in applications ranging from voice-activated assistants like Amazon’s Alexa and Apple’s Siri to advanced chatbots used in customer service platforms.

Key Components of NLP

Understanding NLP involves delving into various components, each contributing to the system’s ability to process language:

- Tokenization: This is the process of breaking down text into smaller units called tokens, which can be words, phrases, or other significant elements. By identifying these units, machines can parse text data more effectively.

- Part-of-Speech Tagging (POS): This involves labeling each token with its respective part of speech, such as noun, verb, adjective, etc. POS tagging helps establish the structure of sentences, which is crucial for deeper analysis.

- Named Entity Recognition (NER): NER identifies and classifies key entities within text into predefined categories like names, organizations, dates, and more. This component is particularly useful in applications like information retrieval and question answering.

- Sentiment Analysis: By analyzing the sentiments expressed in the language, this component evaluates attitudes, opinions, and emotions represented in text data, helping businesses gain insights into customer feedback and trends.

- Syntax and Semantic Analysis: Syntax refers to the arrangement of words in a sentence to convey meaning, while semantic analysis focuses on the meaning behind those words. Together, they allow machines to interpret text in a human-like manner.
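To make the first component concrete, here is a minimal tokenization sketch. It assumes a simple regular-expression rule (words, optionally with internal apostrophes, or single punctuation marks); the pattern and the function name `tokenize` are illustrative choices, and production tokenizers handle many more cases.

```python
import re

# Assumed rule: a token is a run of word characters (allowing internal
# apostrophes, as in "didn't") or a single non-space punctuation mark.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)*|[^\w\s]")

def tokenize(text):
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)

print(tokenize("I need a cab, don't I?"))
```

Later components such as POS tagging and NER operate on the token list this step produces.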

Machine Learning in NLP

Machine learning plays a pivotal role in powering NLP applications. It allows systems to learn from data patterns and improve over time, handling varying language inputs more efficiently. Supervised learning techniques, using labeled datasets, build models that recognize patterns for tasks like classification. Unsupervised learning, on the other hand, helps uncover hidden structures in text data, which is particularly advantageous for tasks without labeled data.
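As a rough illustration of supervised learning on labeled text, the sketch below trains a tiny multinomial Naive Bayes classifier with add-one smoothing. The four training sentences and the labels are invented for the example, and Naive Bayes is just one simple choice of model; real systems train on far larger datasets.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(examples):
    """Count labels and per-label word frequencies from (text, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab

def predict(model, text):
    """Return the label with the highest log-probability for the text."""
    label_counts, word_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label, count in label_counts.items():
        score = math.log(count / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Invented toy training data.
TRAIN = [
    ("great service and friendly staff", "positive"),
    ("i love this product", "positive"),
    ("terrible support and slow delivery", "negative"),
    ("i hate the long wait", "negative"),
]
model = train_naive_bayes(TRAIN)
print(predict(model, "friendly staff"))
print(predict(model, "slow delivery"))
```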

Deep learning, a subset of machine learning, has significantly accelerated advancements in NLP, especially with the rise of neural network architectures like Recurrent Neural Networks (RNNs) and Transformers. The latter has become particularly influential through models such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer). These models help capture complex relationships in text, enhancing tasks from translation to abstractive summarization and beyond.

Applications and Real-World Impact

NLP is transforming industries by streamlining operations, enabling real-time language translation, automating customer service, and even assisting in medical diagnoses by parsing vast amounts of clinical data. For instance, in legal sectors, NLP-based solutions expedite document analysis and contract management processes. In social media, sentiment analysis tools monitor public opinion and brand perception, offering real-time insights that shape marketing strategies.

Moreover, the integration of NLP in education has led to personalized learning experiences, where systems provide feedback and guidance tailored to individual needs. These applications underline the transformative power of NLP in creating more responsive and intelligent systems that understand and respond to human language more effectively.

In summary, as NLP continues to evolve, it promises deeper integration into daily life, reshaping how individuals interact with technology and each other in an AI-driven future.

Evolution of Conversational AI: From Rule-Based Systems to NLP-Driven Models

In the realm of conversational AI, a seismic shift has taken place over the past few decades. Initially, the landscape was dominated by rule-based systems, characterized by their simplicity and rigidity. Early conversational models operated based on a predefined set of rules and scripts. These rules were manually crafted and painstakingly organized in decision trees or similar hierarchical frameworks. While these systems were effective for narrow, domain-specific applications, like FAQ bots, they lacked flexibility and struggled with the complexities of natural human dialogue.
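A rule-based bot of this kind can be sketched in a few lines. The keyword rules and replies below are hypothetical; the point is that any phrasing the author did not anticipate falls through to the fallback.

```python
import re

# Hypothetical hand-written rules: every keyword in a rule must appear.
RULES = [
    (("opening", "hours"), "We are open 9am to 5pm, Monday to Friday."),
    (("reset", "password"), "Use the 'Forgot password' link on the login page."),
]
FALLBACK = "Sorry, I did not understand that."

def rule_based_reply(message):
    """Return the reply of the first rule whose keywords all occur."""
    words = set(re.findall(r"\w+", message.lower()))
    for keywords, reply in RULES:
        if all(keyword in words for keyword in keywords):
            return reply
    return FALLBACK

print(rule_based_reply("What are your opening hours?"))
print(rule_based_reply("When do you open?"))  # same intent, but no rule matches
```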

The limitations of rule-based systems became abundantly clear as users demanded more natural interactions. Rule-based models couldn’t handle variations or unexpected inputs reliably, often resulting in frustrating user experiences. This paved the way for more sophisticated approaches driven by advancements in machine learning and, more specifically, Natural Language Processing (NLP).

The evolution towards NLP-driven models marked a significant breakthrough. These models are rooted in data-driven methodologies that enable machines to comprehend, interpret, and generate human language with remarkable accuracy. Instead of relying on static rules, NLP models learn from extensive datasets, absorbing syntax, semantics, and the pragmatics of language through exposure to real-life conversations and text data.

An integral component of this evolution was the introduction of statistical methods and machine learning algorithms like Hidden Markov Models (HMM) and Support Vector Machines (SVM), which enhanced language understanding capabilities by leveraging probabilities and patterns in data. These techniques help models discern meaning from a myriad of possible interpretations.
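As one example of how such statistical methods use probabilities, the following sketch runs the Viterbi algorithm over a two-state Hidden Markov Model to tag words as nouns or verbs. All probabilities are made-up toy values, and the floor probability for unseen words is an assumption of this sketch.

```python
def viterbi(observations, states, start_p, trans_p, emit_p, floor=1e-6):
    """Return the most probable state sequence for the observations."""
    # best[t][s] = (probability of the best path ending in s at step t, previous state)
    best = [{s: (start_p[s] * emit_p[s].get(observations[0], floor), None)
             for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s].get(obs, floor), p)
                for p in states
            )
        best.append(column)
    # Backtrack from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return list(reversed(path))

# Toy model with invented probabilities.
STATES = ["NOUN", "VERB"]
START = {"NOUN": 0.7, "VERB": 0.3}
TRANS = {"NOUN": {"NOUN": 0.3, "VERB": 0.7},
         "VERB": {"NOUN": 0.6, "VERB": 0.4}}
EMIT = {"NOUN": {"dogs": 0.6, "bark": 0.1},
        "VERB": {"dogs": 0.05, "bark": 0.6}}

print(viterbi(["dogs", "bark"], STATES, START, TRANS, EMIT))
```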

However, the real revolution came with the emergence of deep learning, especially through neural network architectures. Recurrent Neural Networks (RNNs), and particularly their variant Long Short-Term Memory (LSTM) networks, process text one token at a time while carrying a hidden state forward, which lets them capture word order and longer-range contextual dependencies in conversations.

The game-changer, however, was the advent of the Transformer architecture and models like BERT and GPT. Transformers revolutionized NLP by eschewing sequential processing in favor of self-attention mechanisms that enable models to consider the broader context of words in a sentence, regardless of their position. This capacity for contextual understanding enabled the generation of more coherent and contextually aware conversations, ushering in a new era for chatbots and virtual assistants.

These models are not only adept at interpreting human language but can also engage in more human-like interactions by generating responses that are contextually relevant and semantically correct. For example, AI-driven customer service bots now understand user intents better, manage queries more efficiently, and escalate issues to human agents only when necessary.

NLP-driven conversational AI has also brought about improvements in sentiment analysis, enabling systems to detect emotional tone and adjust responses accordingly. In customer support contexts, this can translate to more empathetic interactions, enhancing user satisfaction and trust.

Overall, the transition from rule-based systems to NLP-driven models represents a monumental leap in the field of conversational AI. It reflects a broader trend towards systems that not only process language but understand and generate it in a way that feels seamless and intuitive to human users, making interactions more natural and meaningful.

Key NLP Techniques Enhancing Conversational Intelligence

Natural Language Processing (NLP) plays a pivotal role in enhancing conversational intelligence through a variety of innovative techniques. These approaches enable machines to understand, process, and respond to human language in ways that are contextually aware and deeply nuanced. Let’s delve into some of the key techniques that drive this transformation.

1. Word Embeddings

Word embeddings, one of the foundational techniques in NLP, represent words as vectors in a continuous space so that words used in similar contexts end up close together. Popular methods such as Word2Vec, GloVe (Global Vectors), and FastText learn vectors that capture semantic relationships between words. For instance, the embedding for “king” minus “man” plus “woman” results in a vector close to “queen,” showing that directions in the space can encode relationships such as gender.

This understanding enhances conversational systems by enabling them to grasp synonyms and context, facilitating more natural and intuitive interactions. For example, if a user states, “I need a cab,” a chatbot can recognize “taxi” as an equivalent request due to the closeness of these word vectors in embedding space.
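The vector arithmetic and the "cab" versus "taxi" comparison can be sketched with cosine similarity. The three-dimensional vectors below are hand-made for illustration; real embeddings are learned from large corpora and typically have hundreds of dimensions.

```python
import math

# Hand-made toy vectors (assumption): dimensions loosely mean
# royalty, maleness, and vehicle-ness.
VECTORS = {
    "king":  [0.9, 0.9, 0.0],
    "queen": [0.9, 0.1, 0.0],
    "man":   [0.1, 0.9, 0.0],
    "woman": [0.1, 0.1, 0.0],
    "cab":   [0.0, 0.5, 0.9],
    "taxi":  [0.0, 0.5, 1.0],
}

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, VECTORS[w]))

print(analogy("king", "man", "woman"))
print(round(cosine(VECTORS["cab"], VECTORS["taxi"]), 3))
```

With these toy values the analogy resolves to "queen", and "cab" and "taxi" score far higher with each other than either does with "king".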

2. Sequence-to-Sequence Models

Sequence-to-sequence (seq2seq) models allow systems to transform an input sequence into an output sequence of a different length. These models are essential for applications like language translation and dialogue systems. They typically comprise an encoder, which processes the input query into an internal representation, and a decoder, which generates the response from that representation one token at a time.

A practical application of this is in virtual assistants, which can take a spoken query, understand its intent, and produce a textual response, even if the output differs in length from the input query.

3. Attention and Self-Attention Mechanisms

Attention mechanisms, particularly self-attention, are crucial in managing dependencies in data sequences, allowing models to focus on important parts of inputs when generating outputs. The Transformer architecture, which relies heavily on self-attention, processes words in parallel instead of sequentially, which significantly improves the speed and effectiveness of managing context and relationships in language.

Through self-attention, a system can determine the importance of each word relative to others in a sentence, improving the relevance and coherence of responses. For instance, in a chatbot, understanding that “book” in “Can you book a flight for me?” refers to an action rather than an object like a novel is essential for accurate response generation.
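The core computation can be sketched as scaled dot-product self-attention. This simplified version assumes the queries, keys, and values are the input vectors themselves; a real Transformer layer first applies learned projection matrices and uses several attention heads.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Scaled dot-product self-attention with Q = K = V = X (a simplification)."""
    d = len(X[0])
    outputs, weights = [], []
    for query in X:
        # Score this token against every token, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d)
                  for key in X]
        row = softmax(scores)
        weights.append(row)
        # The output is the attention-weighted average of all token vectors.
        outputs.append([sum(w * value[i] for w, value in zip(row, X))
                        for i in range(d)])
    return outputs, weights

# Three toy token vectors (invented values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of weights shows how much one token draws on every other token, and every row can be computed independently, which is what allows the parallel processing described above.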

4. Contextual Language Models

Contextual language models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) have made strides in understanding language context. Unlike static word embeddings, which assign each word a single vector regardless of usage, these models compute a representation for each word from the words around it, allowing for a more nuanced understanding.

BERT is bidirectional: its representation of each word draws on both the words to its left and the words to its right. GPT-style models, by contrast, condition only on the preceding text, which suits them to generating responses. Bidirectional context helps chatbots understand and disambiguate sentences that may have multiple interpretations, leading to more accurate responses. For instance, “bank” in “river bank” versus “financial bank” is understood based on surrounding words.

5. Named Entity Recognition (NER) Enhanced with Deep Learning

Advanced Named Entity Recognition (NER) systems use deep learning to identify entities in text with higher accuracy. NER is critical in conversational intelligence as it allows systems to extract names, dates, locations, and other critical information from a user’s input, which can be used to personalize responses or trigger specific actions.

In customer service chatbots, NER ensures that entities such as “order number” or “product name” are accurately identified and processed, thus enhancing the efficiency and effectiveness of customer interactions.
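To show what the extracted output looks like, here is a rule-based stand-in that pulls an order number and a date out of a message. It uses regular expressions rather than the deep learning models described above, and the patterns and label names are assumptions made for this sketch.

```python
import re

# Assumed patterns; each captures the entity text in a group named "value".
PATTERNS = {
    "ORDER_NUMBER": re.compile(r"\border\s+(?:number\s+)?#?(?P<value>\d+)", re.IGNORECASE),
    "DATE": re.compile(r"\b(?P<value>\d{4}-\d{2}-\d{2})\b"),
}

def extract_entities(text):
    """Return (label, value) pairs in the order they appear in the text."""
    found = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            found.append((match.start("value"), label, match.group("value")))
    return [(label, value) for _, label, value in sorted(found)]

print(extract_entities("Where is order #48213 placed on 2024-03-05?"))
```

A learned NER model would return the same kind of labeled spans while generalizing to phrasings that fixed patterns miss.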

6. Dialog Management Systems

Dialog management orchestrates the flow of conversation, controlling how systems manage dialogue states and plan responses. This involves understanding the conversational context, tracking intents, and adopting appropriate strategies for interaction.

State-of-the-art dialog systems leverage reinforcement learning to improve interaction strategies over time, learning from each user interaction to optimize outcomes. For example, a customer service bot can learn to de-escalate frustration by using calming language when it identifies customer dissatisfaction.
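Dialogue state tracking can be illustrated with a small slot-filling manager for a flight booking. The slot names, prompts, and the fixed ask-for-the-next-missing-slot policy are assumptions of this sketch; a reinforcement-learned policy would choose the next action from experience instead of a hand-written rule.

```python
# Hypothetical slots and prompts for a flight-booking dialogue.
REQUIRED_SLOTS = ("origin", "destination", "date")
PROMPTS = {
    "origin": "Where are you flying from?",
    "destination": "Where would you like to go?",
    "date": "What date do you want to travel?",
}

def next_action(state, new_slots):
    """Merge newly extracted slots into the state and choose the next system turn."""
    state.update(new_slots)
    for slot in REQUIRED_SLOTS:
        if slot not in state:
            return PROMPTS[slot]  # ask for the first missing slot
    return "Booking a flight from {origin} to {destination} on {date}.".format(**state)

state = {}
print(next_action(state, {"destination": "Lisbon"}))
print(next_action(state, {"origin": "Oslo"}))
print(next_action(state, {"date": "2024-06-01"}))
```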

Through these advanced techniques, NLP fuels the capability of conversational systems to interact in ways that are more aligned with human communication patterns, leading to enhanced user satisfaction, efficiency, and engagement in a multitude of real-world applications.

Applications of NLP in Modern Conversational AI

Natural Language Processing (NLP) is pivotal in the design and enhancement of modern conversational AI, providing the backbone for machines to interpret, understand, and generate human language effectively. These applications are transforming how users interact with technology, making interfaces more intuitive and accessible.

One of the most significant applications of NLP in conversational AI is in developing sophisticated chatbots and virtual assistants. These systems leverage NLP to process user queries, understand intent, and generate relevant responses. For instance, virtual assistants like Google Assistant and Amazon Alexa use advanced NLP models to perform tasks ranging from setting reminders to answering complex questions. These assistants rely on language models that can parse intent, manage context, and maintain conversational flow.

In the healthcare industry, NLP-powered conversational AI plays an influential role in facilitating telemedicine and patient support. By integrating with electronic health records and utilizing advanced algorithms, chatbots can provide patients with preliminary diagnoses, offer medical advice, and even manage prescriptions. This application not only enhances access to healthcare but also reduces the burden on medical staff, allowing them to focus on more critical cases.

Additionally, NLP is transformative in the realm of customer service and support, where conversational AI systems handle a massive volume of inquiries efficiently. By employing sentiment analysis, these AI systems can detect customer emotions, adjust their tone of response, and escalate complex or sensitive issues to human agents when necessary. This application is apparent in services like LivePerson and Drift, which streamline customer interactions through intelligent dialogue management and real-time analytics.
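The detect-then-route logic can be sketched with a tiny sentiment lexicon. The word lists, the threshold, and the action names are invented for illustration, and deployed systems generally rely on trained sentiment models, not word counts.

```python
import re

# Invented mini-lexicons (assumption).
NEGATIVE = {"angry", "terrible", "useless", "worst", "frustrated"}
POSITIVE = {"thanks", "great", "perfect", "love", "helpful"}

def sentiment_score(message):
    """Count positive words minus negative words."""
    words = re.findall(r"[a-z']+", message.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def route(message, threshold=-2):
    """Pick a handling strategy from the sentiment score."""
    score = sentiment_score(message)
    if score <= threshold:
        return "escalate_to_human"
    if score < 0:
        return "reply_with_apology"
    return "reply_normally"

print(route("This is the worst, useless app and I am angry"))
print(route("I am a bit frustrated"))
print(route("Thanks, that was helpful"))
```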

The e-commerce sector benefits immensely from NLP in enhancing personalized shopping experiences. AI-driven recommendation systems analyze customer preferences and past interactions to suggest products, ensuring a tailored shopping experience. These systems use NLP to understand reviews, feedback, and search queries, helping to automate and refine product curation.

In the realm of finance, NLP applications in conversational AI are optimizing client interactions and automating financial advice. Robo-advisors like Betterment and Wealthfront utilize NLP to assess client inquiries, provide financial advice, and help manage investment portfolios. By understanding natural language inputs, these systems can offer more personalized financial guidance and support compliance and fraud detection, analyzing transaction data for inconsistencies or suspicious activity.

Educational platforms also harness the power of NLP to create interactive learning environments. AI tutors use natural language understanding to provide immediate feedback on exercises, answer student questions, and suggest additional resources. NLP enhances the adaptability of these systems to cater to individual learning styles and paces, fostering a more engaging learning experience.

Finally, media and content creation industries benefit from advances in NLP within conversational AI. Systems like OpenAI’s GPT models enable the generation of creative content and assist journalists in fielding information, summarizing articles, and even drafting copy. These NLP applications streamline content production processes and support real-time information dissemination, crucial for maintaining news updates and engaging audiences.

Through these varied applications, NLP not only augments the capabilities of conversational AI but also creates new avenues for innovation across diverse industries, truly embedding itself in the fabric of modern technological interaction.

Challenges and Ethical Considerations in NLP for Conversational AI

Natural Language Processing (NLP) for conversational AI faces a myriad of challenges, both technical and ethical. As machines strive to understand and genuinely converse with humans, several critical issues arise that require meticulous consideration and handling.

One of the foremost challenges is language ambiguity. Human language is inherently complex and filled with nuances that can lead to multiple interpretations. For instance, words like “bank” can refer to a financial institution or the side of a river, depending on the context. NLP models must grasp these subtleties to ensure accurate understanding and response generation. Techniques such as contextual vector representations and large pretrained language models like BERT and GPT address these complexities by considering the broader context in which words appear. However, these models are not infallible and often struggle with idioms or slang whose meaning depends heavily on context.

Another challenge lies in bias and fairness. Language models are only as unbiased as the data they are trained on. If the training data contains biases—whether societal, racial, gender, or otherwise—the conversational AI will reflect these biases in its interactions. For instance, if a dataset predominantly includes texts authored by a particular demographic, the resulting model may unintentionally favor perspectives and vernacular from that group, leading to skewed interactions and potential marginalization of non-represented groups. Developing mechanisms to detect, mitigate, and ideally eliminate these biases is a significant research focus, often involving the diversification of training datasets and the implementation of fairness-aware algorithms.

Moreover, privacy concerns are paramount, especially considering the sensitive nature of the data often processed by conversational AI systems. These systems might handle personal information, requiring robust privacy-preserving techniques to protect user data. Two approaches are at the forefront. Federated learning trains models across multiple devices and sends only model updates, not the raw data, back to a central server. Differential privacy adds calibrated noise to computations so that the result reveals little about any single user’s data. The two are often combined to safeguard user information.
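Both ideas can be sketched in a few lines. The first function averages model weights reported by clients, weighted by how many examples each client trained on, so raw data never leaves the device. The second adds Laplace noise to a count, which is a standard way to make a counting query differentially private. The function names and the toy numbers are assumptions of this sketch.

```python
import random

def federated_average(client_updates):
    """Average client weight vectors, weighted by each client's example count."""
    total = sum(count for _, count in client_updates)
    dim = len(client_updates[0][0])
    return [sum(weights[i] * count for weights, count in client_updates) / total
            for i in range(dim)]

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(true_count, epsilon, rng):
    """Release a count with Laplace noise; a counting query has sensitivity 1."""
    return true_count + laplace_noise(1 / epsilon, rng)

# Two hypothetical clients: (locally trained weights, number of local examples).
updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
print(federated_average(updates))

rng = random.Random(0)
print(private_count(120, epsilon=0.5, rng=rng))
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of a less accurate released value.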

From an ethical standpoint, transparency and accountability are crucial. Users interacting with AI should understand when they are conversing with a machine and what data is being collected and used. This calls for clear user interfaces and the establishment of AI transparency protocols, which ensure that the functioning and decision-making processes of the models are understandable to non-expert users. Additionally, establishing governance frameworks for AI accountability, where developers and companies bear responsibility for misuse or malfunctions, is essential for maintaining public trust in these technologies.

Security risks also pose significant challenges. Adversaries may attempt to manipulate NLP systems through adversarial attacks, triggering inappropriate or harmful responses. Developing robust adversarial defenses to fortify models against such attacks requires ongoing research into anomaly detection and model evaluation frameworks, ensuring that systems are not only robust but also reliable and safe for end-users.

Lastly, cultural sensitivity is a vital consideration, as AI systems are deployed globally. Conversational models must be adaptable to respect cultural nuances and variations in language use across different regions. This adaptability involves not only linguistic adjustments but also sensitivity to cultural references, humor, and etiquette. NLP developers often face the challenge of tailoring their models to handle multi-cultural interactions by diversifying linguistic datasets and incorporating region-specific context understanding functionalities.

Overall, addressing these challenges demands a multidisciplinary approach, combining advances in technology with continuous ethical evaluation, ensuring that NLP in conversational AI not only advances technically but also aligns with societal values and human rights.

Future Trends: The Next Frontier in NLP and Conversational Intelligence

Natural Language Processing (NLP) and conversational intelligence are poised at the brink of an innovative transformation, ushering in new potentials that could redefine human-machine interactions to an unprecedented degree. As we stand on this exciting threshold, several key trends and developments are emerging that promise to propel the field forward.

One of the most prominent trends is the rise of multimodal learning. Traditional NLP systems have predominantly focused on text, but the future lies in integrating multiple data types, including voice, images, and even video. Multimodal NLP systems can process diverse data sources in tandem, allowing for a richer and more nuanced understanding of context. For example, by combining facial expression data with text analysis in security applications, systems can better assess the sentiment and intent behind a user’s words, providing not only text-based responses but also context-aware behaviors and adaptive user interfaces.

Another area rapidly gaining traction is continuous and lifelong learning for NLP models. Unlike traditional models that undergo isolated training sessions using static datasets, future NLP systems will evolve dynamically, learning continuously from streams of data. This approach mimics human learning and adaptation, enabling conversational agents to acquire new vocabulary, slang, and idiomatic expressions as they emerge in everyday language. These models can be deployed in environments such as customer service or tech support, where agents can autonomously learn problem-solving techniques from repeated interactions.

The advent of more sophisticated contextual awareness and personalization holds particular promise for conversational AI. Future models will not only track historical interaction data but also integrate situational context, such as a user’s recent activities or emotional state, to tailor responses in real-time. For instance, a virtual assistant might adjust its tone or suggest specific actions based on detecting user stress levels through voice analysis, thus creating more empathetic and useful dialogues.

Explainable AI (XAI) is set to redefine transparency in conversational systems. As these models become more complex, the demand for understanding their decision-making processes grows. Future trends will likely see improvements in the interpretability of NLP models, making it easier for users to comprehend how and why a system arrived at certain conclusions. For businesses, this means developing AI systems that can articulate reasoning in human terms, supporting decision-making in industries such as healthcare or finance, where understanding and trust are crucial.

Ethically guided AI development is another future-oriented trend gaining momentum. The move towards creating AI that aligns with human values and ethics involves more than just addressing biases and fairness. It includes creating inclusive datasets and designing algorithms that respect privacy, promote well-being, and generate positive societal impact. Initiatives around AI ethics frameworks and inclusive AI development practices are essential in ensuring that advancements in NLP contribute positively to society.

The integration of quantum computing with NLP is a more speculative possibility. Some researchers hope that quantum computing could eventually process language data at scales and speeds beyond conventional systems. If quantum technologies mature, they might help with the complexity and ambiguity inherent in language processing, for example in real-time global language translation or in improving model precision in high-stakes scenarios, although practical benefits for NLP have yet to be demonstrated.

Finally, the convergence of robotics and NLP is an exciting frontier. By imbuing robots with advanced conversational intelligence, we create opportunities for deeply interactive machines capable of complex tasks like caregiving or collaborative creativity. These systems, armed with advanced NLP, could navigate and understand the world much as humans do, making decisions and taking actions more autonomously than ever before.

As these trends progress, we can anticipate a future where NLP and conversational intelligence are intricately woven into the fabric of everyday life, transforming not just industries but also enhancing personal and professional interactions on a global scale. The future is not only about what NLP can tell us but how it can support and enhance the way we live and work.