Understanding Voice Navigation and Its Importance

Voice navigation is a technology that lets users interact with their devices through natural-language voice commands. Because it is convenient and hands-free, it has gained traction in a wide range of applications, from GPS systems to smart home devices.

In essence, voice navigation relies on speech recognition, which converts spoken audio into text that software can process. The system then analyzes that text to infer the user's intent and responds with an appropriate answer or action. Both steps are typically powered by machine-learning models trained on large amounts of speech and language data.

Importance of Voice Navigation

-

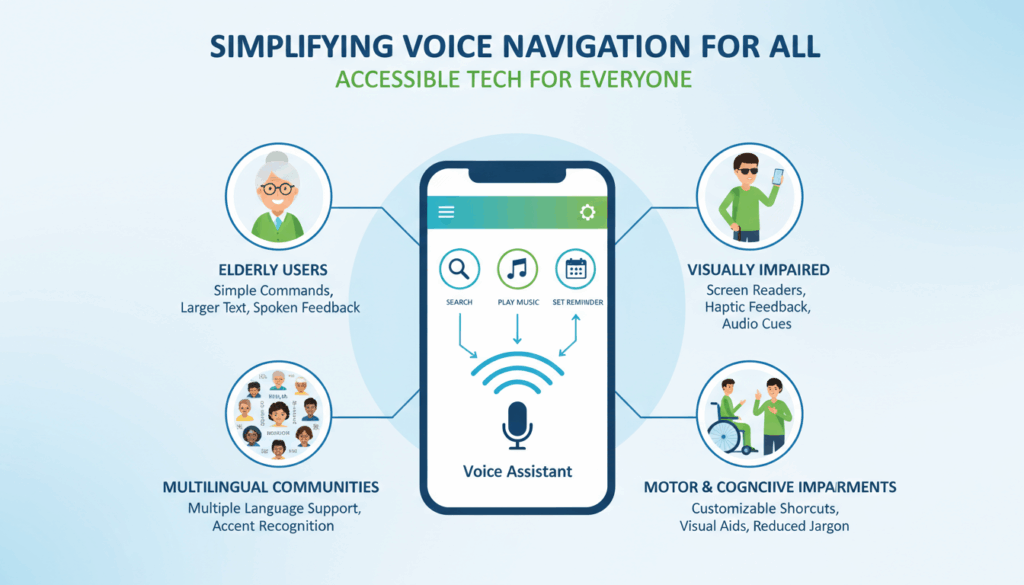

Accessibility: One of the most significant benefits of voice navigation is its ability to enhance accessibility for individuals with disabilities. People with visual impairments can use voice commands to operate devices without needing a screen, making technology more inclusive. Similarly, voice navigation aids those with mobility challenges, offering a convenient alternative to traditional point-and-click interfaces.

-

Safety: In environments where hands-free operation is vital, such as while driving, voice navigation minimizes distractions. For instance, drivers can keep their hands on the wheel and eyes on the road while using voice commands to control music, send messages, or get directions. This hands-free interaction not only improves safety but also enhances user experience by allowing seamless multitasking.

-

Efficiency: Voice navigation significantly expedites routine tasks. For example, setting reminders, sending texts, or making phone calls can be done in seconds with a simple voice command. This efficiency is particularly valuable in business settings, where time savings translate into increased productivity.

-

User Experience Enhancement: Engaging with devices through voice can make interactions more natural and intuitive. Users often find speaking easier and faster than typing, especially on small touchscreens. By providing immediate, spoken responses, voice navigation systems offer a conversational interface that improves overall user satisfaction.

Real-World Applications and Examples

- Smart Home Automation: Devices such as Amazon Echo and Google Home use voice navigation to control appliances, set timers, and even order groceries. This lets users manage their home environment with little effort.

- Navigation Systems: GPS applications such as Google Maps and Waze use voice guidance to direct users turn by turn. Voice input also lets users refine a search or change a route without taking their eyes off the road.

- Healthcare: Physicians and medical staff use voice navigation to update records verbally or move through patient files. This can reduce manual data entry time and some kinds of entry errors.

The integration of voice navigation into everyday technology continues to evolve as AI advances. Future developments aim to understand context and intent better, enabling more personalized and anticipatory interactions. These advances promise to make voice navigation more accurate and versatile, and they reinforce its role in creating accessible, efficient, and engaging technology.

Key Challenges in Voice Navigation Accessibility

Voice navigation offers numerous benefits, but it faces several accessibility challenges that must be addressed before it can reach its full potential for all users. These challenges stem from the diversity of user needs and from environmental conditions that affect how well the technology works.

One major challenge is speech recognition accuracy. For users with speech impairments, such as dysarthria or stuttering, recognition systems may struggle to transcribe voice commands accurately. Strong regional accents, dialects, and non-native speech can present additional hurdles. Addressing this variation requires training recognition models on diverse data. Datasets that include samples from people with various speech impairments and accents help systems recognize a broader range of speech patterns accurately.

Another significant issue is environmental noise. Voice navigation systems often falter in noisy places such as crowded streets or busy offices. Microphone hardware and audio processing need to filter background noise more effectively and focus on the primary speaker’s voice. Directional microphones and algorithms that separate speech from noise as conditions change are two ways to do this.
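A simple building block for handling noise is voice activity detection (VAD), which decides whether an audio frame contains speech before it reaches the recognizer. The sketch below uses an energy threshold relative to an adaptively tracked noise floor. The class name, the 6 dB margin, and the smoothing factor are illustrative assumptions. Production systems usually rely on trained VAD models rather than raw energy.

```typescript
// Root-mean-square energy of one frame of PCM samples in the range [-1, 1].
function frameRms(frame: Float32Array): number {
  let sumSquares = 0;
  for (let i = 0; i < frame.length; i++) sumSquares += frame[i] * frame[i];
  return Math.sqrt(sumSquares / Math.max(frame.length, 1));
}

// Hypothetical energy-based voice activity detector: a frame counts as speech
// when its energy exceeds the tracked noise floor by `marginDb` decibels.
class EnergyVad {
  private noiseFloor: number;
  private readonly marginDb: number;
  private readonly smoothing: number;

  constructor(initialNoiseFloor = 1e-3, marginDb = 6, smoothing = 0.95) {
    this.noiseFloor = initialNoiseFloor;
    this.marginDb = marginDb;
    this.smoothing = smoothing; // closer to 1 = slower adaptation
  }

  isSpeech(frame: Float32Array): boolean {
    const rms = frameRms(frame);
    const levelAboveFloorDb = 20 * Math.log10(Math.max(rms, 1e-9) / this.noiseFloor);
    const speech = levelAboveFloorDb > this.marginDb;
    // Only non-speech frames update the noise estimate, so sustained speech
    // does not pull the floor upward.
    if (!speech) {
      const updated = this.smoothing * this.noiseFloor + (1 - this.smoothing) * rms;
      this.noiseFloor = Math.max(updated, 1e-6); // keep the ratio well defined
    }
    return speech;
  }
}
```

Frames classified as non-speech can be dropped or used to refine the noise estimate. Only frames above the floor would be passed on to recognition.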

Privacy concerns present another substantial barrier. Users may hesitate to use voice navigation because they worry that their spoken commands will be recorded, stored, or shared without their consent. Two measures can help address these concerns: strong encryption of voice data, and clear, accessible explanations of what data is collected and how it is used.

Feedback and interaction design also pose challenges. When feedback is not accessible, users with visual or other impairments may not receive immediate, useful responses. For instance, if a command isn’t understood, the system can repeat the prompt or give feedback in another mode, such as a tactile alert or an audible confirmation. Either step can significantly improve usability. Interaction design that supports multi-modal feedback serves a wider audience.
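One common pattern for commands that aren't understood is escalating reprompts. The first miss asks the user to try again, and the next offers example phrasings. Repeated misses then switch to another input mode instead of looping. The `nextReprompt` helper, its prompt wording, and the three-step policy below are hypothetical and only make the idea concrete.

```typescript
interface Reprompt {
  speech: string;          // what the synthesizer should say
  vibrate: boolean;        // whether to also send a tactile cue
  offerTextInput: boolean; // whether to surface a non-voice fallback
}

// Hypothetical escalation policy keyed on consecutive recognition failures.
function nextReprompt(consecutiveFailures: number, examples: string[]): Reprompt {
  if (consecutiveFailures <= 1) {
    return { speech: "Sorry, I didn't catch that. Please try again.", vibrate: true, offerTextInput: false };
  }
  if (consecutiveFailures === 2) {
    // Second miss: show the user what the system can understand.
    const hint = examples.slice(0, 2).map((e) => `"${e}"`).join(" or ");
    return { speech: `You can say things like ${hint}.`, vibrate: true, offerTextInput: false };
  }
  // Repeated misses: stop looping on voice and offer another input mode.
  return { speech: "I'm still having trouble. You can type your request instead.", vibrate: true, offerTextInput: true };
}
```

The failure counter would reset whenever a command is recognized, so the escalation only applies to consecutive misses.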

Furthermore, limited language understanding can make natural, conversational input hard to handle. Systems must move beyond basic command recognition to interpret nuance and context in conversational speech. Systems that learn user preferences and anticipate needs based on past interactions can make the experience feel more intuitive.

Lastly, hardware limitations can restrict voice navigation. Devices with outdated operating systems or limited processing power may not run the latest speech recognition software efficiently, which shuts out users with older devices. Software that scales across a range of hardware configurations, falling back to simpler modes on less capable devices, helps keep the feature available to them.

Addressing these challenges requires ongoing collaboration among technologists, designers, and user feedback groups. By tackling these barriers, voice navigation technology can serve all users better and move closer to truly universal accessibility.

Designing User-Friendly Voice Navigation Interfaces

Creating a voice navigation interface that is user-friendly involves several critical considerations that blend intuitive design, robust technology, and user-centered research. The design process should emphasize clarity, simplicity, and accessibility to cater to a diverse user base.

Effective voice navigation design requires understanding the needs and behaviors of users. Conducting thorough user research is foundational. This means engaging with diverse user groups, including those with disabilities, to gather insights into how various individuals interact with voice systems. Interviews, usability testing, and surveys can help uncover specific pain points and preferences.

One key aspect is simplifying voice commands. Users benefit from a system that understands and responds to natural language rather than requiring precise phrasing. This involves designing algorithms capable of processing conversational language, nuances, and context. The use of machine learning to continually adapt to individual speech patterns and preferences can further enhance user experience by making interactions feel more personalized and efficient.
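Production assistants handle varied phrasing with trained language-understanding models. The sketch below is a much simpler stand-in. It maps different wordings to one intent by requiring at least one synonym for each key concept. The intent names and synonym lists are hypothetical.

```typescript
// Each inner array lists synonyms for one concept the utterance must contain.
interface Intent {
  name: string;
  concepts: string[][];
}

// Hypothetical intents for illustration.
const INTENTS: Intent[] = [
  { name: "set_timer", concepts: [["timer", "countdown"], ["set", "start", "create"]] },
  { name: "play_music", concepts: [["play", "put on", "start"], ["music", "song", "playlist"]] },
];

// Lowercase and strip punctuation so "Set a timer!" matches "set a timer".
function normalize(text: string): string {
  return text.toLowerCase().replace(/[^a-z0-9\s]/g, " ").replace(/\s+/g, " ").trim();
}

// Returns the first intent for which every concept has a synonym present.
function matchIntent(utterance: string): string | null {
  const padded = ` ${normalize(utterance)} `; // padding enables whole-word checks
  for (const intent of INTENTS) {
    const allPresent = intent.concepts.every((synonyms) =>
      synonyms.some((s) => padded.includes(` ${s} `)),
    );
    if (allPresent) return intent.name;
  }
  return null;
}
```

Because the matcher checks concepts rather than an exact sentence, "Could you start a countdown for ten minutes?" and "set a timer" resolve to the same intent. A keyword approach like this breaks down quickly with negation and context, which is one reason real systems use statistical models.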

Clarity in feedback is crucial. Users must receive immediate and clear responses to their voice commands. This includes providing audible confirmations when a command is understood or executed and offering solutions when input is unclear or not recognized. Multi-modal feedback, such as visual indicators on a screen or subtle vibrations, can also enhance the user experience, particularly for those with hearing challenges.
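The sketch below chooses feedback channels from a user's stated needs and the device's capabilities. For example, someone with a hearing impairment still receives a visual and tactile confirmation. The preference and capability fields are hypothetical. In a browser, `canVibrate` might come from checking `"vibrate" in navigator`, and `canSpeak` from checking `"speechSynthesis" in window`.

```typescript
type FeedbackChannel = "speech" | "visual" | "vibration";

// Hypothetical user preferences, e.g. collected in an accessibility settings screen.
interface FeedbackPrefs {
  hearingImpaired: boolean;
  visionImpaired: boolean;
}

// Hypothetical device capabilities, detected at runtime.
interface DeviceCaps {
  hasScreen: boolean;
  canVibrate: boolean;
  canSpeak: boolean;
}

// Use every channel the user can perceive and the device can produce,
// so a confirmation never depends on a single sense.
function feedbackChannels(prefs: FeedbackPrefs, caps: DeviceCaps): FeedbackChannel[] {
  const channels: FeedbackChannel[] = [];
  if (caps.canSpeak && !prefs.hearingImpaired) channels.push("speech");
  if (caps.hasScreen && !prefs.visionImpaired) channels.push("visual");
  if (caps.canVibrate) channels.push("vibration");
  return channels;
}
```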

Building in redundancy and flexibility in design allows users to achieve their objectives through multiple pathways. For example, offering both voice command and screen-based options enables users to choose their preferred interaction mode. Ensuring that the system can handle interruptions or offer correction suggestions when commands are misunderstood also contributes to smoother interactions.
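When a recognized phrase matches no known command, the system can propose the closest one instead of failing silently. This sketch ranks candidate commands by character-level Levenshtein edit distance. The command list and the `maxDistance` threshold of 3 are illustrative assumptions. A production system would more likely compare the recognizer's alternative hypotheses or intent scores.

```typescript
// Levenshtein distance (insert, delete, substitute each cost 1), one DP row at a time.
function levenshtein(a: string, b: string): number {
  const row = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    let diagonal = row[0]; // distance for (i - 1, j - 1)
    row[0] = i;
    for (let j = 1; j <= b.length; j++) {
      const above = row[j]; // distance for (i - 1, j)
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      row[j] = Math.min(above + 1, row[j - 1] + 1, diagonal + cost);
      diagonal = above;
    }
  }
  return row[b.length];
}

// Suggest the closest known command, or null if nothing is close enough.
function suggestCommand(heard: string, commands: string[], maxDistance = 3): string | null {
  let best: string | null = null;
  let bestDistance = Infinity;
  for (const command of commands) {
    const d = levenshtein(heard.toLowerCase(), command.toLowerCase());
    if (d < bestDistance) {
      best = command;
      bestDistance = d;
    }
  }
  return bestDistance <= maxDistance ? best : null;
}
```

For example, `suggestCommand("open in box", ["open inbox", "close inbox", "call home"])` returns "open inbox" (distance 1). The interface could then ask the user to confirm that suggestion.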

Another vital aspect is ensuring privacy and security. Users must trust that their voice interactions are secure and private. This requires designing systems that minimize data collection to only what is necessary for functionality and ensuring robust encryption and permission settings. Clear and transparent communication about how data is used and secured is essential to build trust.
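Data minimization can be applied where interactions are logged. Keep only what is needed to improve the feature, such as which intent fired and how confident the recognizer was. Drop the raw transcript unless the user has explicitly opted in. The record shape and the `consentToStoreTranscripts` flag below are hypothetical.

```typescript
// What the recognizer and intent matcher produced for one interaction.
interface RecognitionEvent {
  transcript: string;
  intent: string | null;
  confidence: number;
  timestamp: number; // milliseconds since the Unix epoch
}

// What actually gets stored: no raw speech content by default.
interface LogRecord {
  intent: string | null;
  confidence: number;
  day: string;         // coarse UTC date instead of an exact timestamp
  transcript?: string; // present only with explicit consent
}

function toLogRecord(e: RecognitionEvent, consentToStoreTranscripts: boolean): LogRecord {
  const record: LogRecord = {
    intent: e.intent,
    confidence: Math.round(e.confidence * 100) / 100, // two decimals is enough for analysis
    day: new Date(e.timestamp).toISOString().slice(0, 10),
  };
  if (consentToStoreTranscripts) record.transcript = e.transcript;
  return record;
}
```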

Customization options can enhance satisfaction by allowing users to adjust settings, such as voice speed, accent, and verbosity of responses. Such personalization makes the interface feel more accommodating and tailored to individual preferences and needs.

Prototype testing is essential in this design process. Iteratively testing prototypes with users can highlight unforeseen usability issues, providing opportunities to refine and enhance the system before a full rollout. Observing real-world use cases and gathering feedback ensures that the final product meets user expectations and is truly accessible.

Overall, designing user-friendly voice navigation interfaces demands a synthesis of empathetic user research, cutting-edge technology, and a commitment to inclusivity. By focusing on these areas, designers can create interfaces that not only cater to a broad audience but also elevate overall user experience through seamless, intuitive, and secure interactions.

Implementing Voice Navigation in Web Applications

Implementing voice navigation in web applications provides a seamless, intuitive interaction method that enhances accessibility and user experience across different platforms. Here are the key steps and considerations for integrating this feature into your web applications:

In web applications, voice navigation typically relies on the Web Speech API, which supports both speech recognition and speech synthesis in the browser. Its recognition interface converts user speech into text that the application can process, and its synthesis interface reads text aloud.

Start by checking browser compatibility. Support for the Web Speech API varies. Speech synthesis is widely available, but speech recognition is not supported in every browser, and some browsers, such as Chrome, expose it only under the prefixed name webkitSpeechRecognition. Test thoroughly across platforms. Use feature detection in JavaScript to check at runtime whether the API is present, and provide an alternative input mechanism, such as a text field, when it is not.
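A minimal feature-detection sketch follows. It checks for both the standard and the `webkit`-prefixed constructor and falls back to text input when neither exists. The helper names are illustrative. Reading the constructors through `globalThis` keeps the check from throwing outside a browser.

```typescript
// Structural type for a recognizer constructor; avoids depending on DOM typings.
type RecognitionConstructor = new () => unknown;

// Returns the standard or webkit-prefixed constructor, or null if neither exists.
function getRecognitionConstructor(): RecognitionConstructor | null {
  const scope = globalThis as unknown as Record<string, unknown>;
  const ctor = scope["SpeechRecognition"] ?? scope["webkitSpeechRecognition"];
  return typeof ctor === "function" ? (ctor as RecognitionConstructor) : null;
}

// Offer voice input only when recognition is available; otherwise use a text field.
function chooseInputMode(): "voice" | "text" {
  return getRecognitionConstructor() !== null ? "voice" : "text";
}
```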

Next, set up the speech recognition service. In a supported browser, create a recognition object with new SpeechRecognition() (or its prefixed equivalent), then call its start() method to begin capturing voice input. Configure the object before starting it. Set lang to the user’s language, and enable continuous if the application should keep listening beyond a single command to process ongoing input.
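The configuration step might look like the sketch below. `lang`, `continuous`, `interimResults`, and `maxAlternatives` are properties of the Web Speech API's `SpeechRecognition` interface. The `RecognizerLike` interface and the `createRecognizer` helper are hypothetical. They let the function accept either the browser's constructor or a stand-in for testing. The defaults chosen (single command, final results only, three alternatives) are assumptions to adjust per application.

```typescript
// The subset of SpeechRecognition members this sketch configures.
interface RecognizerLike {
  lang: string;
  continuous: boolean;
  interimResults: boolean;
  maxAlternatives: number;
  start(): void;
}

interface RecognizerOptions {
  lang?: string;        // BCP 47 language tag, e.g. "en-US"
  continuous?: boolean; // keep listening after the first final result
}

// Hypothetical helper: create and configure a recognizer before start() is called.
function createRecognizer(
  Ctor: new () => RecognizerLike,
  options: RecognizerOptions = {},
): RecognizerLike {
  const recognizer = new Ctor();
  recognizer.lang = options.lang ?? "en-US";
  recognizer.continuous = options.continuous ?? false; // single command by default
  recognizer.interimResults = false; // deliver only final transcripts
  recognizer.maxAlternatives = 3;    // extra hypotheses can help with accents and noise
  return recognizer;
}
```

In a browser, you might call it as `createRecognizer(Ctor, { lang: "en-GB" }).start()`, where `Ctor` is whichever constructor feature detection found.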

Event listeners form the backbone of interactivity for voice input. Attach listeners to handle essential recognition events such as:

– onstart: Indicate to users that the system is ready to receive input.

– onspeechend: Acknowledge that speech has stopped and the system is processing it.

– onresult: Read the recognized transcript from the results, determine the user’s intent, and respond accordingly.

– onerror: Manage errors promptly to assist users in understanding what went wrong, offering retry options if feasible.
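The handlers listed above can be wired up roughly as follows. The event fields read here come from the Web Speech API: `resultIndex`, `results`, `isFinal`, `transcript`, `confidence`, and `error`, with codes such as `"no-speech"` and `"not-allowed"`. The `UiHooks` interface and the message wording are hypothetical placeholders for an application's own interface code.

```typescript
// Minimal shapes of the event data this sketch reads.
interface AlternativeLike { transcript: string; confidence: number; }
interface ResultLike { isFinal: boolean; length: number; [index: number]: AlternativeLike; }
interface ResultEventLike { resultIndex: number; results: { length: number; [index: number]: ResultLike }; }
interface ErrorEventLike { error: string; }

interface ListenerTarget {
  onstart: (() => void) | null;
  onspeechend: (() => void) | null;
  onresult: ((e: ResultEventLike) => void) | null;
  onerror: ((e: ErrorEventLike) => void) | null;
}

// Hypothetical UI hooks; a real app would update the page or speak a response.
interface UiHooks {
  status(message: string): void;
  command(transcript: string, confidence: number): void;
}

function attachHandlers(rec: ListenerTarget, ui: UiHooks): void {
  rec.onstart = () => ui.status("Listening...");
  rec.onspeechend = () => ui.status("Processing...");
  rec.onresult = (e) => {
    // Visit only results added since the last event, and act only on final ones.
    for (let i = e.resultIndex; i < e.results.length; i++) {
      const result = e.results[i];
      if (result.isFinal) ui.command(result[0].transcript.trim(), result[0].confidence);
    }
  };
  rec.onerror = (e) => {
    const messages: Record<string, string> = {
      "no-speech": "I didn't hear anything. Please try again.",
      "not-allowed": "Microphone access was blocked. You can type instead.",
      "audio-capture": "No microphone was found. You can type instead.",
      network: "The speech service is unreachable. Please try again later.",
    };
    ui.status(messages[e.error] ?? `Speech recognition error: ${e.error}`);
  };
}
```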

For spoken feedback, add text-to-speech through the SpeechSynthesis interface. Create a SpeechSynthesisUtterance for each response and choose a clear, natural voice and settings such as language and speaking rate. Then pass the utterance to speechSynthesis.speak() so that responses match the context of the user’s action.
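A sketch of spoken feedback with feature detection follows. `SpeechSynthesisUtterance`, its `lang` and `rate` properties, and `speechSynthesis.speak()` and `cancel()` are part of the Web Speech API. The `speak` wrapper and its options are hypothetical. Exposing `rate` lets users adjust speaking speed. Returning `false` when synthesis is unavailable lets the caller fall back to on-screen text.

```typescript
interface SpeakOptions {
  lang?: string; // e.g. "en-US"
  rate?: number; // 1 is normal speed
}

// Keep the rate within the 0.1 to 10 range the Web Speech API defines.
function clampRate(rate: number): number {
  return Math.min(10, Math.max(0.1, rate));
}

// Hypothetical wrapper: speak `text` if synthesis is available, else return false.
function speak(text: string, options: SpeakOptions = {}): boolean {
  const scope = globalThis as unknown as Record<string, any>;
  const Utterance = scope["SpeechSynthesisUtterance"];
  const synth = scope["speechSynthesis"];
  if (typeof Utterance !== "function" || !synth) return false;
  const utterance = new Utterance(text);
  utterance.lang = options.lang ?? "en-US";
  utterance.rate = clampRate(options.rate ?? 1);
  synth.cancel(); // drop any queued prompt so feedback stays current
  synth.speak(utterance);
  return true;
}
```

Calling `cancel()` before `speak()` is a design choice. It drops any queued prompt so feedback reflects the latest command rather than piling up behind older responses.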

Security and user privacy are paramount when collecting and processing voice data. Comply with applicable data protection regulations, encrypt any voice data you store or transmit, and clearly communicate terms of use to end users. Note that some browsers, including Chrome, send audio to a server for recognition, so voice data may leave the device even if your application never stores it. Handle personal information conservatively, and consider letting users opt out of data collection.

Lastly, refine your application through iterative testing. Gather feedback from diverse user groups to fine-tune voice recognition, paying attention to dialectal and language variation in your user base. Account for environmental factors such as background noise by adjusting microphone input levels or enabling noise suppression where needed.
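An application that captures audio itself can request the browser's built-in audio processing through `getUserMedia` constraints. One example is streaming audio to a server-side recognizer. `echoCancellation`, `noiseSuppression`, and `autoGainControl` are standard audio constraints. The `buildAudioConstraints` helper is hypothetical. In most implementations, the browser's built-in `SpeechRecognition` opens the microphone on its own, so these constraints would not apply to it.

```typescript
// Audio-processing constraints defined by the Media Capture and Streams spec.
interface AudioProcessing {
  echoCancellation: boolean;
  noiseSuppression: boolean;
  autoGainControl: boolean;
}

// Request only the processing features the browser reports as supported.
// `supported` mirrors the object from navigator.mediaDevices.getSupportedConstraints().
function buildAudioConstraints(
  supported: Partial<Record<keyof AudioProcessing, boolean>>,
): { audio: Partial<AudioProcessing> | true } {
  const audio: Partial<AudioProcessing> = {};
  if (supported.echoCancellation) audio.echoCancellation = true;
  if (supported.noiseSuppression) audio.noiseSuppression = true;
  if (supported.autoGainControl) audio.autoGainControl = true;
  // With nothing supported, fall back to a plain microphone request.
  return { audio: Object.keys(audio).length > 0 ? audio : true };
}
```

In a browser, the result would be passed as `navigator.mediaDevices.getUserMedia(buildAudioConstraints(navigator.mediaDevices.getSupportedConstraints()))`. Checking support first is optional, since browsers ignore unrecognized optional constraints. It does let the application record which processing was actually requested.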

By focusing on these key components and engaging with users throughout development, you can create an inclusive, efficient voice navigation system that significantly elevates web application interactivity and accessibility.

Best Practices for Testing and Refining Voice Navigation Features

Testing and refining voice navigation features is a multifaceted process that involves careful evaluation of functionality, accuracy, and user satisfaction. To achieve optimal performance and user experience, thorough testing across diverse scenarios and iterative refinement based on feedback are essential.

One fundamental aspect of the testing process is engaging diverse user groups. This involves recruiting participants with varying accents, dialects, speech patterns, and disabilities to ensure the system accommodates a wide range of voices. By including users with speech impairments or those for whom the language is not their first, developers can assess how the navigation responds to real-world diversity and adjust the system to recognize and process inputs more accurately.

Simulating real-world environments during tests is crucial. Voice navigation systems should be assessed in the conditions users are likely to encounter, such as noisy streets, quiet rooms, and echo-prone spaces. Adding background noise and other auditory distractions during testing sessions helps evaluate the robustness of the speech recognition algorithms. The results can guide improvements such as better noise cancellation or directional microphones that isolate the speaker’s voice.
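One way to simulate noisy conditions reproducibly is to mix recorded background noise into clean speech at a controlled signal-to-noise ratio. SNR in decibels is 10·log10(P_speech / P_noise), where P is mean signal power. The sketch below scales the noise so the mixture hits a target SNR. The function names are hypothetical, and it assumes both clips are mono PCM at the same sample rate. It does not handle clipping when the mixed signal exceeds the [-1, 1] range.

```typescript
// Mean power (average squared sample value) of a signal.
function meanPower(x: Float32Array): number {
  let sum = 0;
  for (let i = 0; i < x.length; i++) sum += x[i] * x[i];
  return sum / x.length;
}

// Mix `noise` into `speech` at the requested SNR in dB.
// The noise clip is looped if it is shorter than the speech clip.
function mixAtSnr(speech: Float32Array, noise: Float32Array, snrDb: number): Float32Array {
  const speechPower = meanPower(speech);
  const noisePower = meanPower(noise);
  if (noisePower === 0) return speech.slice();
  // SNR_dB = 10*log10(Ps / (g^2 * Pn))  =>  g = sqrt(Ps / (Pn * 10^(SNR_dB / 10)))
  const gain = Math.sqrt(speechPower / (noisePower * Math.pow(10, snrDb / 10)));
  const out = new Float32Array(speech.length);
  for (let i = 0; i < speech.length; i++) {
    out[i] = speech[i] + gain * noise[i % noise.length];
  }
  return out;
}
```

Running the same utterances through recognition at, say, 20, 10, and 0 dB SNR shows how quickly accuracy degrades as conditions worsen.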

Measuring accuracy and response time is another best practice. Developers should collect and analyze data on how often voice commands are recognized correctly, using metrics such as word error rate and command success rate. They should also track how quickly the system responds. Tools that log interactions and track errors or delays show where improvement is needed. If a system consistently misinterprets certain phrases or commands, this data can guide algorithm adjustments or the addition of personalized voice training.
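Word error rate (WER) is a standard accuracy metric for speech recognition. It counts the word substitutions, deletions, and insertions needed to turn the recognized text into a reference transcript, divided by the number of reference words. The sketch below computes WER with word-level edit distance and reports latency percentiles using the nearest-rank method. The function names are hypothetical, and the simple lowercase-and-whitespace normalization is an assumption.

```typescript
// Word error rate: word-level edit distance divided by the reference word count.
function wordErrorRate(reference: string, hypothesis: string): number {
  const ref = reference.toLowerCase().split(/\s+/).filter(Boolean);
  const hyp = hypothesis.toLowerCase().split(/\s+/).filter(Boolean);
  // WER is undefined for an empty reference; report 0 or Infinity in that case.
  if (ref.length === 0) return hyp.length === 0 ? 0 : Infinity;
  // dp[j] = edit distance between the first i reference words and first j hypothesis words.
  let dp = Array.from({ length: hyp.length + 1 }, (_, j) => j);
  for (let i = 1; i <= ref.length; i++) {
    const next = [i];
    for (let j = 1; j <= hyp.length; j++) {
      const substitution = dp[j - 1] + (ref[i - 1] === hyp[j - 1] ? 0 : 1);
      next[j] = Math.min(dp[j] + 1, next[j - 1] + 1, substitution);
    }
    dp = next;
  }
  return dp[hyp.length] / ref.length;
}

// Nearest-rank percentile (p in (0, 100]) of response times in milliseconds.
function percentile(valuesMs: number[], p: number): number {
  const sorted = [...valuesMs].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}
```

For example, recognizing "turn on the kitchen lights" as "turn on kitchen light" takes one deletion and one substitution over five reference words, giving a WER of 0.4.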

Iterative user feedback loops are instrumental in refining the user experience. After initial testing phases, collecting feedback from users about their experiences with the voice navigation system can highlight unforeseen pain points or areas for enhancement. Feedback can be gathered through surveys, usability testing sessions, and in-app analytics. Prompt adaptation based on this feedback can improve satisfaction and functionality, as users’ insights directly contribute to the system’s evolution.

Benchmarking against industry standards and competitor analyses can provide a framework for assessing the effectiveness of voice navigation features. Developers should remain informed about advancements in voice technology and integrate best practices accepted within the industry. This might include adopting newer versions of speech recognition algorithms, exploring machine learning models that enhance contextual understanding, or improving cross-compatibility across different devices and platforms.

Ensuring privacy and security compliance in all testing phases is imperative. Users must feel confident that their voice data is being managed securely. Implementing rigorous data protection standards and transparently communicating how data is used and protected fosters trust. Consent forms and clear disclosures about data usage during testing promote ethical testing practices.

Finally, continuous monitoring and optimization post-launch are critical. Voice navigation systems require ongoing assessment even after deployment. Automated monitoring tools can alert developers to issues in real time, while periodic updates based on technology advancements or changing user habits ensure that systems remain relevant and functional.
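Automated monitoring can be as simple as tracking the recognition failure rate over a sliding window of recent interactions and alerting when it crosses a threshold. The `ErrorRateMonitor` class, its window size, and its 15% threshold are hypothetical. What counts as a failure (an `onerror` event, a no-match result, or a user retry) is an application-level choice.

```typescript
// Hypothetical post-launch monitor over the last `windowSize` interactions.
class ErrorRateMonitor {
  private readonly outcomes: boolean[] = []; // true = failed recognition
  private failures = 0;
  private readonly windowSize: number;
  private readonly threshold: number;

  constructor(windowSize = 200, threshold = 0.15) {
    this.windowSize = windowSize;
    this.threshold = threshold;
  }

  // Record one interaction; returns true when an alert should fire.
  record(failed: boolean): boolean {
    this.outcomes.push(failed);
    if (failed) this.failures++;
    if (this.outcomes.length > this.windowSize && this.outcomes.shift()) {
      this.failures--; // the oldest outcome leaving the window was a failure
    }
    // Wait for a full window so a few early failures don't trigger alerts.
    return this.outcomes.length === this.windowSize && this.errorRate() > this.threshold;
  }

  errorRate(): number {
    return this.outcomes.length === 0 ? 0 : this.failures / this.outcomes.length;
  }
}
```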

By following these practices, teams can rigorously test and refine voice navigation systems to provide a seamless, inclusive, and reliable user experience. Comprehensive testing, combined with the flexibility to adopt new technologies, supports long-term success and user satisfaction.