Design goals and constraints

Design decisions should prioritize correctness and predictable performance. Enforce data integrity with appropriate types and database constraints (primary keys, foreign keys, unique and check constraints) so the schema itself prevents invalid states. Define clear access patterns and SLOs early: optimize for the most frequent queries rather than theoretical normalization alone. For read-heavy workloads consider selective denormalization or materialized views to reduce costly joins; for high-concurrency write paths favor normalized layouts to minimize update contention. Choose indexes that reflect filter and join columns, but remember each index costs write throughput and storage—measure before adding broad coverage indexes. Plan for scale by considering partitioning/sharding strategies that align with natural data segments (time, tenant, region) and by keeping hot-path tables compact. Account for operational constraints such as backup and restore windows, migration complexity, schema evolution, and team expertise; prefer migrations that are backward-compatible and incremental. Include nonfunctional constraints up front: latency targets, throughput, consistency requirements, and regulatory/security needs (encryption, PII handling, audit trails). Make trade-offs explicit: state why a denormalized table, index, or partitioning scheme was chosen given the workload, and iterate based on monitoring and real traffic.

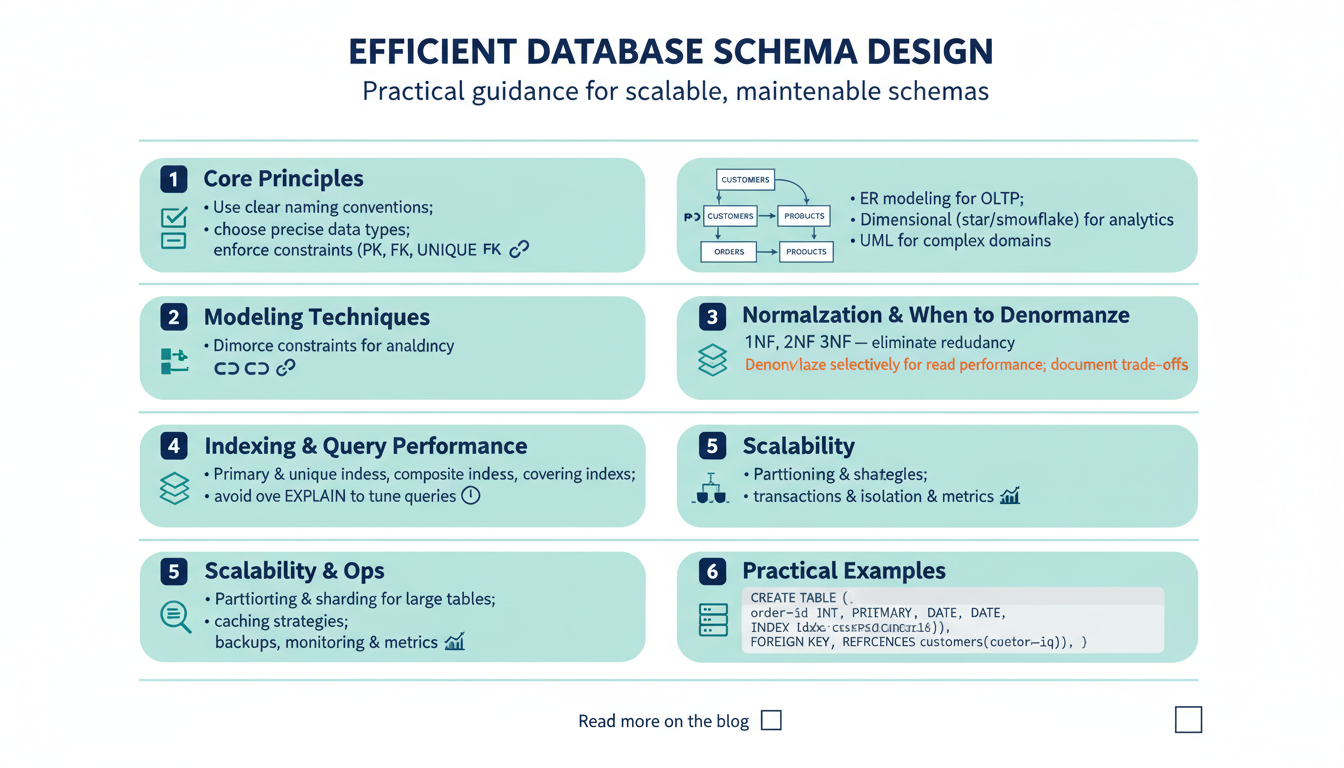

Entity-relationship modeling basics

Start by modeling real-world nouns as entities and their properties as attributes; choose a stable primary key for each entity (surrogate keys are common for performance and evolution). Express relationships explicitly: one-to-one, one-to-many, and many-to-many. Represent cardinality and optionality (nullable vs required) because these drive NOT NULL and foreign key constraints in the physical schema. Distinguish identifying relationships (where a child’s identity depends on a parent) from non-identifying ones; use composite keys only when they reflect true identity or simplify queries.

When translating the diagram to tables, map many-to-many relationships to associative (join) tables that include foreign keys and any relationship attributes. Enforce integrity with primary keys, foreign keys, unique constraints, and appropriate column types. Mark derived attributes (age, totals) as computed fields or materialized columns rather than stored state unless performance needs dictate otherwise. Model enums and small reference sets as lookup tables when they require metadata or change control; use constrained types for small, stable sets to reduce joins.

Design ER models with access patterns in mind: prioritize shapes that make the most frequent queries efficient. For read-heavy paths, plan selective denormalization or materialized views; for high-write paths, prefer normalized layouts to minimize contention. Add indexes that reflect join and filter columns, but balance write cost. Keep the ER diagram lightweight and versioned so it can evolve with monitoring-driven trade-offs between normalization, performance, and operational complexity.

Normalization: normal forms explained

Normalization organizes tables to reduce redundancy and avoid insert/update/delete anomalies while making dependencies explicit. A first step is atomicity: each column holds single (atomic) values and repeating groups are removed, which enables the relational model and further normal forms. (en.wikipedia.org)

After 1NF, eliminate partial dependencies: if a table has a composite key, no non-key attribute should depend on only part of that key. Achieving this prevents update anomalies tied to composite-key components. (en.wikipedia.org)

Remove transitive dependencies next: non-key columns must depend directly on the candidate key, not on another non-key column. This is the essence of third normal form and keeps facts about one entity in one place. (en.wikipedia.org)

Boyce‑Codd Normal Form (BCNF) tightens 3NF: every determinant (left side of a functional dependency) must be a candidate key. BCNF handles edge cases where overlapping candidate keys still allow redundancy. (en.wikipedia.org)

In practice, normalize until tables capture single facts and integrity is enforced, then measure performance. For read‑heavy workloads selectively denormalize, use materialized views or precomputed columns to avoid expensive joins—trading storage and more complex writes for faster reads and simpler queries. Make these trade‑offs explicit and reversible. (techtarget.com)

Keys, relationships, and constraints

Treat keys and constraints as the schema’s first line of defense: they enforce correctness, inform indexing, and document invariants for developers and DBAs.

Choose a primary key that’s small, stable, and immutable. Surrogate keys (serial/UUID) are usually better for evolution and joins; use composite keys only when they represent true business identity and simplify queries. Always add a UNIQUE constraint when a natural key must remain enforced even if you use a surrogate.

Model relationships explicitly. One-to-many relationships map to a foreign key on the child; many-to-manys become associative tables that contain the two FKs plus any relationship attributes and a primary key (surrogate or composite). Index foreign key columns used in joins or lookups to avoid sequential scans and to speed up cascade operations.

Use declarative constraints to capture business rules: NOT NULL for required attributes, UNIQUE for alternate keys, CHECK for domain invariants, and constrained/enumerated types for small stable sets. Prefer database-level enforcement over ad-hoc application checks because constraints are cheaper to reason about and prevent race conditions. Consider DEFERRABLE constraints for multi-step transactions where temporary violations are acceptable.

Make delete/update actions explicit (ON DELETE CASCADE / SET NULL / RESTRICT) and document the rationale—cascades simplify cleanup but can produce hidden performance spikes. Avoid redundant indexes that duplicate UNIQUE constraints unless you need special index types (partial, expression) for performance.

Example:

CREATE TABLE orders (

id uuid PRIMARY KEY,

customer_id uuid NOT NULL REFERENCES customers(id) ON DELETE RESTRICT,

status order_status NOT NULL,

amount numeric CHECK (amount >= 0),

UNIQUE (customer_id, id)

);

Indexing and query performance

Indexes are the primary lever for turning slow scans into fast lookups, but they’re a trade-off: every index speeds reads and increases write latency, storage, and maintenance. Pick index keys that match real access patterns—columns used in WHERE, JOIN, ORDER BY and GROUP BY clauses—and favor highly selective predicates. Keep statistics fresh (ANALYZE) and use EXPLAIN / EXPLAIN ANALYZE to validate that the planner actually uses an index for critical queries.

When building composite indexes, column order matters: put the most selective and commonly filtered column first so the index can serve left‑prefix lookups. Create covering indexes to satisfy queries entirely from the index and avoid heap lookups by including frequently selected non-key columns (e.g., PostgreSQL’s INCLUDE or storing columns in the index). Example:

CREATE INDEX idx_orders_customer_status ON orders(customer_id, status) INCLUDE (amount, created_at);

Use partial/filtered indexes for sparse predicates (status = ‘active’) and functional/expression indexes for computed filters (lower(email)). Choose index type by access pattern: B-tree for equality and range, GIN/GiST for full‑text, arrays, and JSON containment. Monitor and remove redundant or unused indexes; duplicate indexes waste resources and hide write-impact costs.

For high-write or very large tables, combine indexing strategy with partitioning, materialized views, or cached aggregates to keep hot paths small. Regularly measure end-to-end SLOs (latency, throughput) and iterate: add or change indexes based on real query profiles, not hypothetical coverage.

Partitioning, caching, and denormalization

Partition large tables along the natural axes of your workload to reduce scan scope and improve maintenance. Use range partitions for time-series (monthly/yearly), list partitions for discrete segments (region, tenant), and hash partitions for even distribution when no natural key exists. Design access patterns first: place frequently-queried columns and hot rows in few partitions to enable partition pruning and fast single-partition operations. Be mindful of cross-partition joins, global unique constraints, and transactions—these add complexity and often favor co-locating related data or using application-level coordination.

Cache strategically to shave latency from repeat reads while preserving consistency guarantees. Favor cache-aside for simplicity: read from cache, fall back to DB, populate cache on miss. Use short TTLs or active invalidation for frequently-updated entities; choose write-through or write-behind only when you can tolerate write-path latency or complexity. Keep caches small and keyed by query shape (e.g., user:id, order:recent) and instrument hit/miss metrics and eviction patterns so you can tune TTLs and sizes against SLOs.

Apply denormalization selectively to eliminate expensive joins: materialized views, precomputed aggregates, and duplicated lookup columns speed reads but shift complexity into writes. Update denormalized state synchronously for strong consistency or asynchronously (events, background jobs) when eventual consistency is acceptable. Automate correctness with triggers or idempotent updater jobs and include reconciliation checks. Always document the trade-offs and add tests and monitoring for stale data, partition skews, cache saturation, and write amplification so you can iterate safely based on real traffic.