Why normalization matters

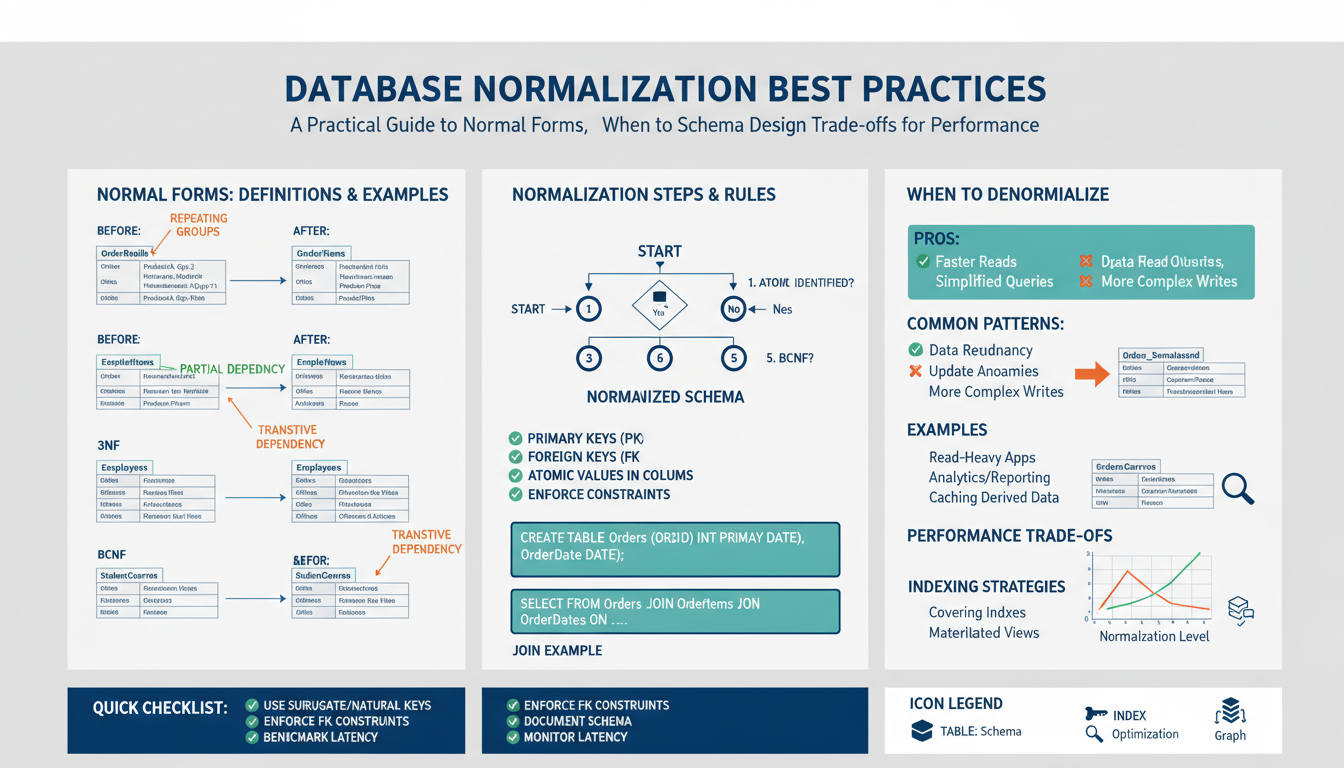

Normalization reduces redundancy and enforces consistent, unambiguous data models so updates, inserts, and deletes don’t create contradictions. By separating entities (for example, keeping customers and orders in distinct tables rather than copying address data into every order row) you avoid update anomalies, shrink storage for repeated values, and make referential integrity simple to enforce with foreign keys and constraints. Clean normalization also clarifies business rules—what is an order attribute versus a customer attribute—which simplifies queries, migrations, and testing.
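As a rough illustration of the customers/orders split described above, here is a minimal sketch using Python's built-in `sqlite3` module. The table names, column names, and sample values are assumptions made for the example, not a prescribed schema.

```python
import sqlite3

# Hypothetical schema: all table and column names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        address     TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        total_cents INTEGER NOT NULL
    );
""")
conn.execute("INSERT INTO customers VALUES (1, 'Ada', '1 Old Street')")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(10, 1, 2500), (11, 1, 4000)])

# The address is stored in exactly one row, so one UPDATE changes it for every order.
conn.execute("UPDATE customers SET address = '2 New Road' WHERE customer_id = 1")
addresses = conn.execute("""
    SELECT DISTINCT c.address
    FROM orders AS o JOIN customers AS c USING (customer_id)
""").fetchall()

# The foreign key rejects an order that points at a nonexistent customer.
try:
    conn.execute("INSERT INTO orders VALUES (12, 999, 100)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

print(addresses, orphan_rejected)
```

Because no order row carries its own copy of the address, there is nothing to fall out of sync when the customer moves.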

Normalization isn’t just theoretical: it yields predictable maintenance costs and safer analytics. That said, fully normalized schemas can increase join complexity and hurt read performance for large aggregated queries. Use profiling to find hotspots, then apply targeted denormalization (materialized views, cached summary tables, or selective duplicated columns) with automated refreshes or change-data-capture to keep consistency. As a practical baseline, design to at least 3NF for correctness, index the most-used join keys, and only denormalize where measured latency or I/O proves it necessary. Treat denormalization as a pragmatic optimization, not a substitute for a correct logical model.

Key concepts and terminology

Normalization is the process of organizing tables to eliminate redundant data and enforce clear, rule-driven relationships. A functional dependency (A → B) means B’s value is determined by A; identifying these dependencies drives decomposition.

Keys: a candidate key is a minimal set of columns that uniquely identifies rows, the primary key is the candidate key chosen as the table’s main identifier, and foreign keys express referential integrity between tables. Surrogate keys are synthetic IDs, natural keys come from the data itself, and composite keys span more than one column.

Normal forms: 1NF requires atomic (indivisible) column values and no repeating groups. 2NF removes partial dependencies on a composite key (every non-key column must depend on the whole key). 3NF removes transitive dependencies (non-key columns depending on other non-key columns) so attributes depend only on keys. BCNF tightens 3NF by requiring every determinant to be a candidate key (more precisely, a superkey). Higher forms address multivalued and join dependencies (4NF, 5NF) for specific advanced anomalies.

Common anomalies: insertion (a fact cannot be recorded until unrelated data exists), update (copies of the same fact drift apart), and deletion (removing one fact unintentionally destroys another).

Denormalization strategies (duplicated columns, summary tables, or materialized views) reduce joins for read-heavy workloads. Index the most-used join and filter columns, profile queries to find hotspots, and use automated refresh or CDC to keep denormalized copies consistent. Treat normalization as the correctness baseline and denormalization as a measured, reversible optimization.
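To make the idea of a functional dependency concrete, the following sketch checks whether A → B holds in a sample of rows. The helper name `holds` and the sample data are hypothetical. Note that a sample can only refute a dependency; whether it holds in general is a business rule, not something data alone can prove.

```python
def holds(rows, lhs, rhs):
    """Return True if the functional dependency lhs -> rhs holds in rows.

    rows is a list of dicts; lhs and rhs are tuples of column names.
    """
    seen = {}
    for row in rows:
        key = tuple(row[col] for col in lhs)
        value = tuple(row[col] for col in rhs)
        # The first value seen for a key is remembered; any later mismatch refutes the FD.
        if seen.setdefault(key, value) != value:
            return False
    return True

# Illustrative sample data (assumed, not taken from a real system).
rows = [
    {"customer_id": 1, "zip_code": "10001", "city": "New York"},
    {"customer_id": 2, "zip_code": "10001", "city": "New York"},
    {"customer_id": 3, "zip_code": "94103", "city": "San Francisco"},
]

zip_determines_city = holds(rows, ("zip_code",), ("city",))
city_determines_customer = holds(rows, ("city",), ("customer_id",))
print(zip_determines_city, city_determines_customer)
```

Running a check like this against existing data before decomposing a table is a cheap way to catch rows that already contradict an assumed dependency.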

First to third normal forms

Start by enforcing atomic, single-valued columns and eliminating repeating groups: store each phone number, order line, or tag as its own row rather than as comma-separated lists or arrays inside a field. This prevents ambiguous updates and makes indexing and constraints reliable.
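A minimal sketch of that first step, assuming a hypothetical `contacts_raw` table that stores phone numbers as a comma-separated string. The table names and the splitting rule are assumptions; real data usually needs more careful parsing.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Violates 1NF: several phone numbers packed into one column.
    CREATE TABLE contacts_raw (contact_id INTEGER PRIMARY KEY, phones TEXT);
    -- 1NF version: one phone number per row.
    CREATE TABLE contact_phones (
        contact_id INTEGER NOT NULL,
        phone      TEXT NOT NULL,
        PRIMARY KEY (contact_id, phone)
    );
""")
conn.executemany(
    "INSERT INTO contacts_raw VALUES (?, ?)",
    [(1, "555-0100, 555-0101"), (2, "555-0199")],
)

# Split each packed value and store every phone number as its own row.
raw_rows = conn.execute("SELECT contact_id, phones FROM contacts_raw").fetchall()
for contact_id, phones in raw_rows:
    for phone in phones.split(","):
        conn.execute(
            "INSERT INTO contact_phones VALUES (?, ?)", (contact_id, phone.strip())
        )

# An exact-match lookup now works, and the primary key blocks duplicates.
owners = conn.execute(
    "SELECT contact_id FROM contact_phones WHERE phone = ?", ("555-0101",)
).fetchall()
phone_count = conn.execute("SELECT COUNT(*) FROM contact_phones").fetchone()[0]
print(owners, phone_count)
```

With the packed column, the same lookup would need a substring match, which cannot use an ordinary index and can match partial numbers by accident.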

When a table uses a composite primary key, remove partial dependencies: every non-key attribute must depend on the whole key, not a subset. For example, an OrderItems table keyed by (order_id, product_id) should not carry product_name or product_description; those depend on product_id alone and belong in a Products table keyed by product_id. If every candidate key of a table is a single column, no partial dependency is possible and 2NF is satisfied automatically.
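The OrderItems and Products split could look roughly like this in `sqlite3`. Column types, the `quantity` column, and the sample rows are assumptions added for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (
        product_id   INTEGER PRIMARY KEY,
        product_name TEXT NOT NULL
    );
    -- Every non-key column here (quantity) depends on the whole composite key.
    CREATE TABLE order_items (
        order_id   INTEGER NOT NULL,
        product_id INTEGER NOT NULL REFERENCES products(product_id),
        quantity   INTEGER NOT NULL,
        PRIMARY KEY (order_id, product_id)
    );
""")
conn.execute("INSERT INTO products VALUES (7, 'Widget')")
conn.executemany("INSERT INTO order_items VALUES (?, ?, ?)", [(1, 7, 2), (2, 7, 5)])

# Renaming the product touches one row, no matter how many order lines reference it.
conn.execute("UPDATE products SET product_name = 'Widget v2' WHERE product_id = 7")
lines = conn.execute("""
    SELECT oi.order_id, p.product_name, oi.quantity
    FROM order_items AS oi
    JOIN products AS p ON p.product_id = oi.product_id
    ORDER BY oi.order_id
""").fetchall()
print(lines)
```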

Remove transitive dependencies so non-key columns depend only on the primary key. If Customer has columns customer_id, zip_code, and zip_city, zip_city depends on zip_code (a non-key), so move postal details to a PostalCodes table and reference it by zip_code. This keeps updates consistent and prevents subtle anomalies when lookup data changes.
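One way to carry out that move on existing data is sketched below. It assumes a hypothetical flat table named `customer_flat` holding the three columns mentioned above; the target table names are likewise illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Starting point: zip_city depends on zip_code, not directly on customer_id.
    CREATE TABLE customer_flat (
        customer_id INTEGER PRIMARY KEY,
        zip_code    TEXT NOT NULL,
        zip_city    TEXT NOT NULL
    );
    INSERT INTO customer_flat VALUES
        (1, '10001', 'New York'),
        (2, '10001', 'New York'),
        (3, '94103', 'San Francisco');

    -- 3NF target: postal details live once per zip_code.
    CREATE TABLE postal_codes (
        zip_code TEXT PRIMARY KEY,
        zip_city TEXT NOT NULL
    );
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        zip_code    TEXT NOT NULL REFERENCES postal_codes(zip_code)
    );

    INSERT INTO postal_codes SELECT DISTINCT zip_code, zip_city FROM customer_flat;
    INSERT INTO customers SELECT customer_id, zip_code FROM customer_flat;
""")

postal_rows = conn.execute(
    "SELECT zip_code, zip_city FROM postal_codes ORDER BY zip_code"
).fetchall()
customer_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(postal_rows, customer_count)
```

A useful side effect of this migration style: if the flat table already held two different cities for the same zip_code, the `INSERT ... SELECT DISTINCT` would fail on the primary key, surfacing the inconsistency instead of silently copying it.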

Practical guidance: design to this level as a correctness baseline—1NF for atomicity, 2NF to eliminate partial dependencies in composite-key tables, and 3NF to eliminate transitive dependencies. Use surrogate keys where natural keys are awkward, but keep functional dependencies explicit in your schema and documentation. Index foreign keys and common filters to offset join costs. When you denormalize for read performance, do so deliberately: duplicate minimal fields, record why and how they are refreshed, and prefer materialized views or cached summaries with clear invalidation strategies rather than ad-hoc copies.

BCNF and higher normal forms

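Beyond third-normal-form cleanup, BCNF requires that every determinant in a relation be a superkey: if a nontrivial dependency X → Y holds, X must uniquely identify rows. Violations create subtle update anomalies even when 3NF is satisfied, and they arise only when candidate keys overlap. For example, take OrderAssignment(order_id, product_id, supplier_id) with (order_id, product_id) → supplier_id and, assuming each supplier provides exactly one product, supplier_id → product_id. The candidate keys are (order_id, product_id) and (order_id, supplier_id), so every attribute belongs to some key and the table is in 3NF, but it is not in BCNF because supplier_id is a determinant that is not a key. Decompose into SupplierProducts(supplier_id, product_id) plus OrderSuppliers(order_id, supplier_id) to remove the anomaly. (A table that also carried supplier_region, with supplier_id → supplier_region, would already violate 3NF, since that is an ordinary transitive dependency on a non-key column.) Be aware that decomposing to satisfy this stricter rule can break dependency preservation: here (order_id, product_id) → supplier_id no longer lives in a single table, so enforcing it takes a join or a trigger. When preserving FDs matters, 3NF is sometimes the practical choice.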
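The "every determinant must be a key" rule can be checked mechanically with attribute closures. The sketch below uses a separate, hypothetical textbook-style relation Enrollment(student, course, instructor), where (student, course) → instructor and instructor → course; the function names are illustrative. It tests only the dependencies it is given, which is enough for the original relation but not for its decomposed projections.

```python
def closure(attrs, fds):
    """Return the attribute closure of attrs under fds.

    fds is a list of (lhs, rhs) pairs, each a frozenset of attribute names.
    """
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            # If we already know lhs, the dependency lets us add rhs.
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result

def bcnf_violations(relation, fds):
    """Return the nontrivial FDs whose determinant is not a superkey of relation."""
    return [
        (lhs, rhs)
        for lhs, rhs in fds
        if not rhs <= lhs and not set(relation) <= closure(lhs, fds)
    ]

# Hypothetical relation and dependencies, chosen for illustration.
enrollment = {"student", "course", "instructor"}
fds = [
    (frozenset({"student", "course"}), frozenset({"instructor"})),
    (frozenset({"instructor"}), frozenset({"course"})),
]

violations = bcnf_violations(enrollment, fds)
print(violations)
```

Here instructor determines course but does not determine student, so its closure falls short of the full relation and the dependency is reported as a BCNF violation.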

Higher normal forms address rarer dependency classes. Fourth normal form targets multivalued dependencies: when a table stores two independent one-to-many attributes (e.g., a Person with multiple phone numbers and multiple certifications), every value of one must be paired with every value of the other, which creates combinatorial row duplication. Split the independent lists into separate tables to avoid inflated Cartesian combinations. Fifth normal form (project-join normal form) deals with join dependencies: cases where a table can be losslessly decomposed into three or more projections but not into any two. It is mainly relevant in deeply factored schemas or where complex multi-way constraints exist.
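The row inflation from storing two independent lists together is easy to see with plain Python tuples. The person, phone numbers, and certification names below are made-up sample values.

```python
from itertools import product

# Two independent facts about one person (sample values are assumptions).
phones = ["555-0100", "555-0101"]
certs = ["AWS", "CKA", "PMP"]

# One table holding both lists: every phone must be paired with every certification.
combined = [("ada", phone, cert) for phone, cert in product(phones, certs)]

# 4NF decomposition: one table per independent list.
person_phones = [("ada", phone) for phone in phones]
person_certs = [("ada", cert) for cert in certs]

# Joining the two tables on the person reproduces the combined table exactly,
# so the decomposition loses no information.
rejoined = [
    (person, phone, cert)
    for person, phone in person_phones
    for other, cert in person_certs
    if person == other
]

print(len(combined), len(person_phones) + len(person_certs))
```

With 2 phones and 3 certifications the gap is only 6 rows versus 5, but the combined table grows multiplicatively while the split tables grow additively.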

In practice, aim for BCNF when clear non‑key determinants cause anomalies and you can tolerate extra joins. Reserve 4NF/5NF for cases with independent multivalued attributes or enforced join constraints. Always validate by writing representative queries and profiling: if joins harm performance, prefer targeted denormalization (materialized views or derived tables) with clear refresh semantics rather than skipping these logical normalizations.

When to denormalize for performance

Only after you’ve normalized to a sensible baseline, benchmarked queries, and exhausted indexing, caching, and query-tuning should you consider introducing redundancy as a performance optimization. (compilenrun.com)

Reach for redundancy when read-side latency or CPU is dominated by repeated joins or heavy aggregations on predictable query shapes (dashboards, list views, or API endpoints with stable filters). If p95/p99 response times or I/O on hot joins are the bottleneck, denormalization often yields the largest win. (datacamp.com)
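Before deciding that a join is the bottleneck, it helps to measure it. The following is a rough timing harness, not a substitute for a real profiler or production tracing. The function name `latency_percentiles`, the schema, and the row counts are all assumptions for the sketch.

```python
import sqlite3
import statistics
import time

def latency_percentiles(conn, sql, params=(), runs=200):
    """Run a query repeatedly and return p50/p95/p99 latency in milliseconds."""
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        samples_ms.append((time.perf_counter() - start) * 1000)
    cuts = statistics.quantiles(samples_ms, n=100)  # 99 cut points: cuts[i] is p(i+1)
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}

# Hypothetical data set, small enough to run anywhere.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT NOT NULL);
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL,
        total_cents INTEGER NOT NULL
    );
""")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(i, f"region-{i % 5}") for i in range(100)],
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(i, i % 100, 100 + i) for i in range(2000)],
)

stats = latency_percentiles(conn, """
    SELECT c.region, SUM(o.total_cents)
    FROM orders AS o JOIN customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.region
""")
print(stats)
```

Numbers from an in-memory toy database say nothing about production; the point is the shape of the measurement, which should be repeated against realistic data volumes and the actual engine.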

Choose the right denormalization pattern for the problem: precomputed aggregates or rolled-up summary tables for analytics, materialized views when the engine can maintain them efficiently, and selective duplicated columns (or small lookup snapshots) for hot-path reads in OLTP workloads. Use read replicas or a cache for very transient needs; use denormalized tables when you need query simplicity and lower compute cost per request. (learn.microsoft.com)

Avoid denormalizing frequently updated, high-cardinality, or many-to-many data unless you can afford the maintenance: denormalization shifts join work to ingestion and can multiply write costs and complexity (triggers, CDC pipelines, or full-refresh windows). If near-real-time consistency is required, measure the engineering cost of synchronization first. (clickhouse.com)

Make the change reversible and operationally visible: profile queries to prove the join/aggregation hotspot, pick a refresh strategy (periodic batch, incremental materialized view maintenance, or CDC-driven updates) that matches your staleness tolerance, document the reason and invariants, and add verification jobs to detect drift. Treat denormalization as a targeted, measurable optimization—not a schema substitute. (datacamp.com)

Denormalization patterns and trade-offs

Denormalization applies targeted redundancy to improve read performance while accepting additional write and operational costs. Common patterns include duplicating a few hot lookup columns directly into fact rows to avoid frequent joins, maintaining precomputed aggregates or rollup tables for dashboard queries, using materialized views where the database can refresh results incrementally, keeping narrow snapshot tables tailored to specific API endpoints, and storing read-optimized JSON blobs for hierarchical or rarely updated reference data.
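As one concrete instance of the rollup pattern, here is a sketch of a summary table rebuilt by a full refresh. The `daily_sales` table, the `refresh_daily_sales` function, and the sample rows are hypothetical; an engine with native materialized views would replace most of this.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Canonical, normalized source of truth.
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL,
        order_day   TEXT NOT NULL,
        total_cents INTEGER NOT NULL
    );
    -- Denormalized rollup read by dashboards.
    CREATE TABLE daily_sales (
        order_day     TEXT PRIMARY KEY,
        order_count   INTEGER NOT NULL,
        revenue_cents INTEGER NOT NULL
    );
    INSERT INTO orders VALUES
        (1, 1, '2024-01-01', 2500),
        (2, 2, '2024-01-01', 4000),
        (3, 1, '2024-01-02', 1000);
""")

def refresh_daily_sales(conn):
    """Full refresh: rebuild the rollup from the canonical orders table."""
    with conn:  # commit both statements together, or roll both back on error
        conn.execute("DELETE FROM daily_sales")
        conn.execute("""
            INSERT INTO daily_sales
            SELECT order_day, COUNT(*), SUM(total_cents)
            FROM orders
            GROUP BY order_day
        """)

refresh_daily_sales(conn)
rollup = conn.execute("SELECT * FROM daily_sales ORDER BY order_day").fetchall()
print(rollup)
```

A full rebuild is the simplest refresh to reason about and to roll back, at the cost of staleness between runs and work proportional to the whole source table.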

Each pattern trades storage and write complexity for simpler, cheaper reads. Duplicated columns reduce join CPU but create write amplification: every update to the source requires synchronized writes or an ingestion pipeline. Aggregates and rollups cut query latency but introduce staleness unless you invest in incremental refresh or low-latency CDC pipelines. Materialized views offload compute to the engine but may be limited by refresh semantics and locking behavior. Snapshots and JSON blobs simplify reads but make selective updates awkward and can break relational constraints.
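The write amplification from a duplicated column can be observed directly. This sketch keeps a copy of the customer name on each order row and synchronizes it with a trigger; the schema, trigger name, and data are assumptions, and a CDC pipeline would play the trigger's role in a larger system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        order_id      INTEGER PRIMARY KEY,
        customer_id   INTEGER NOT NULL REFERENCES customers(customer_id),
        customer_name TEXT NOT NULL   -- denormalized copy of customers.name
    );
    -- Synchronous maintenance: propagate every rename to the copies.
    CREATE TRIGGER sync_customer_name AFTER UPDATE OF name ON customers
    BEGIN
        UPDATE orders SET customer_name = NEW.name
        WHERE customer_id = NEW.customer_id;
    END;
    INSERT INTO customers VALUES (1, 'Ada');
    INSERT INTO orders VALUES (10, 1, 'Ada'), (11, 1, 'Ada'), (12, 1, 'Ada');
""")

# total_changes counts modified rows, including rows changed by triggers.
before = conn.total_changes
conn.execute("UPDATE customers SET name = 'Ada L.' WHERE customer_id = 1")
rows_written = conn.total_changes - before

copies = conn.execute(
    "SELECT customer_name FROM orders ORDER BY order_id"
).fetchall()
print(rows_written, copies)
```

One logical rename becomes one write to the source plus one write per order that carries the copy, which is why this pattern suits columns that change rarely.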

Choose a pattern only after profiling: prove that joins or aggregations dominate latency or I/O. Prefer denormalization that duplicates minimal fields and preserves the canonical normalized tables as the source of truth. Match refresh strategy to business SLAs: periodic batch for minute‑to‑hour tolerance, incremental/CDC for near‑real‑time, and synchronous updates only when strict consistency is required and write throughput is low.

Operationalize denormalization: document the invariant and why it exists, add automated verification jobs to detect drift, and provide rollback paths (the ability to drop the denormalized copy and revert queries). Treat denormalization as a reversible, measured optimization: use it to simplify hot read paths, not to mask modeling errors or replace good schema design.
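A verification job for drift can be as simple as recomputing the aggregate and comparing it with the stored copy. The sketch below assumes a hypothetical `daily_sales` rollup over an `orders` table; the function name `find_drift` and the deliberately stale sample row are illustrative.

```python
import sqlite3

def find_drift(conn):
    """Return (day, stored, expected) for rollup rows that disagree with orders."""
    return conn.execute("""
        SELECT f.order_day, d.revenue_cents AS stored, f.revenue_cents AS expected
        FROM (
            SELECT order_day, SUM(total_cents) AS revenue_cents
            FROM orders
            GROUP BY order_day
        ) AS f
        LEFT JOIN daily_sales AS d USING (order_day)
        -- IS NOT is a NULL-safe comparison in SQLite, so missing rollup rows are reported too.
        WHERE d.revenue_cents IS NOT f.revenue_cents
        ORDER BY f.order_day
    """).fetchall()

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        order_day   TEXT NOT NULL,
        total_cents INTEGER NOT NULL
    );
    CREATE TABLE daily_sales (
        order_day     TEXT PRIMARY KEY,
        revenue_cents INTEGER NOT NULL
    );
    INSERT INTO orders VALUES
        (1, '2024-01-01', 2500),
        (2, '2024-01-01', 4000),
        (3, '2024-01-02', 1000);
    -- The first rollup row is intentionally stale to simulate drift.
    INSERT INTO daily_sales VALUES ('2024-01-01', 6000), ('2024-01-02', 1000);
""")

drift = find_drift(conn)
print(drift)
```

This check does not flag rollup rows for days that no longer exist in `orders`; a production job would likely compare in both directions and alert when the result is non-empty.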