Overview: AI Voice Assistants for Call Centers

When contact volume spikes, the first thing your customers notice is the hold music; what they don’t see is the operational drag behind it. AI voice assistants address this problem at the front of the call by automating routine interactions, routing complex issues to agents, and helping you reduce wait times without hiring more staff. A successful deployment depends not only on speech accuracy but on the full orchestration of recognition, understanding, business logic, and escalation. So how do you evaluate those pieces in your environment?

Building on this foundation, let’s break down the technical stack you’ll integrate. The core components are automatic speech recognition (ASR) to transcribe audio, natural language understanding (NLU) to map utterances to intents and entities, a dialog manager to maintain conversational state, and text-to-speech (TTS) to return natural-sounding responses. We also connect these pieces to backend services—CRM, ticketing, and knowledge bases—via APIs so the voicebot can authenticate callers, fetch records, and perform transactions securely. Understanding these layers helps you optimize latency, error-handling, and data flows for enterprise-grade call centers.

Deployment choices shape performance and compliance trade-offs and should guide architecture decisions early. You can host models in the cloud for rapid iteration and elastic scaling, deploy hybrid setups where PII-sensitive processing stays on-premises, or run inference at the edge for ultra-low-latency customer interactions. Each option influences tooling (container orchestration, service meshes, observability), cost models (per-minute transcription vs. self-hosted GPUs), and upgrade cycles; choose based on throughput, compliance needs, and operational maturity.

Interaction design determines whether customers feel helped or frustrated during a call. Start conversations by confirming intent and context quickly, then use progressive disclosure—ask only for the data required at each step. For example, when handling a billing inquiry we authenticate the caller, surface the invoice line items from CRM, ask a single clarifying question if confidence < 0.7, and escalate to an agent with full context if intent confidence remains low. This pattern—authenticate, validate, act, escalate—keeps false positives down and handoffs smooth.
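
The authenticate, validate, act, escalate pattern can be sketched as a small decision function. This is a minimal illustration rather than a reference implementation: the 0.7 threshold comes from the example above, while `TurnState`, `next_step`, and the one-clarification limit are hypothetical names and values chosen for the sketch.

```python
from dataclasses import dataclass

CLARIFY_THRESHOLD = 0.7  # threshold quoted in the billing example


@dataclass
class TurnState:
    authenticated: bool
    intent_confidence: float
    clarifications_asked: int = 0


def next_step(state: TurnState, max_clarifications: int = 1) -> str:
    """Return the next dialog step: authenticate, act, clarify, or escalate."""
    if not state.authenticated:
        return "authenticate"
    if state.intent_confidence >= CLARIFY_THRESHOLD:
        return "act"
    # Low confidence: ask one clarifying question before giving up.
    if state.clarifications_asked < max_clarifications:
        return "clarify"
    # Confidence stayed low after clarifying: hand off with full context.
    return "escalate"
```

Capping clarifications at one keeps the caller from being trapped in a loop; a real flow would likely tune that limit per intent.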

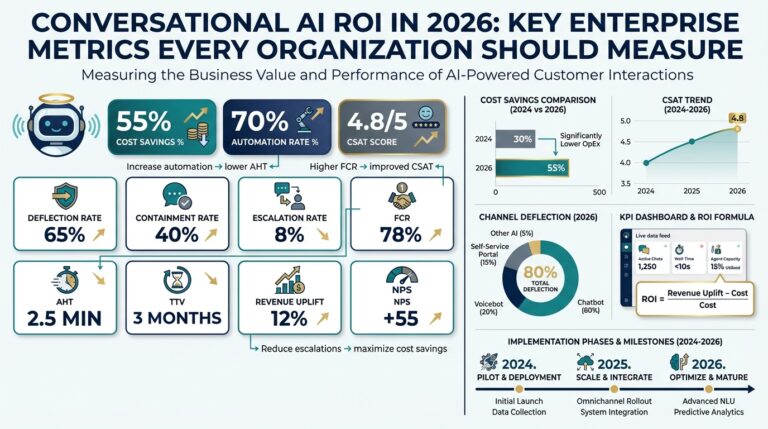

Measure what matters: containment rate, average handle time (AHT) for agent-handled calls, mean time to resolution, and customer satisfaction (CSAT). Track model-specific KPIs too: ASR word error rate, NLU intent accuracy, dialog fallbacks per 1,000 calls, and latency percentiles. These metrics let you quantify how intelligent voicebots reduce wait times and operational cost, and they provide levers for targeted improvement—retraining NLU where intent confusion is high or optimizing TTS for better comprehension in noisy environments.

Operational risks are real but manageable with design and governance. Protect PII by encrypting audio in transit and at rest, tokenize sensitive fields before sending them to third-party models, and maintain auditable consent flows for call recording. Mitigate failure modes with deterministic fallbacks: confidence thresholds that trigger menu-based recovery or immediate agent transfer, and circuit breakers to avoid cascading failures during upstream outages. Continuous monitoring, A/B testing of dialog flows, and controlled model rollouts reduce regressions and keep the system reliable.

Taking this concept further, the next step is designing a minimal viable voice flow that proves containment and measures ROI quickly. Start with high-frequency, low-complexity interactions—balance inquiries, password resets, and appointment scheduling—and instrument every transition. We’ll use those learnings to scale to transactional scenarios and to architect intelligent voicebots that truly augment your agents rather than replace them, improving throughput and customer experience in measurable ways.

Benefits and Use Cases for Voicebots

Voicebots, AI voice assistants, and call center automation can deliver measurable value early when you pick the right entry points. Start by thinking about containment and latency: voicebots that resolve routine issues remove callers from queues and lower average wait time while letting agents focus on high-skill work. We should treat the voice channel as both a throughput problem and a context problem: throughput because agent capacity is finite, context because successful handoffs require state, CRM data, and a dialog manager that preserves intent. How do you prioritize? Start where containment most predictably removes routine calls from agent queues.

The primary business benefits are cost reduction, speed, and consistent customer experience. Voicebots reduce operational cost by automating repeatable transactions—authentications, balance checks, status updates—so you need fewer agent minutes per call. They shorten resolution time because ASR and NLU can surface the correct record and validate minimal inputs before taking action or routing to an agent with full context. They also improve quality by enforcing business rules and compliance prompts deterministically, which reduces error-prone manual steps and increases CSAT.

Real-world use cases map neatly to frequency and complexity: start with high-frequency, low-complexity flows such as balance inquiries, appointment scheduling, password resets, and order status checks. These workflows provide quick wins because they require limited entity extraction and a small set of deterministic actions in the backend. Move to mixed flows—payment processing, refunds, PIN updates—only after you’ve instrumented fallbacks and confidence thresholds. For escalations, design your voicebot to collect and attach a problem summary, recent utterances, and relevant metadata to the agent session so handoffs are seamless rather than duplicative.

From a systems perspective, the technical payoff depends on tight integration between ASR, NLU, the dialog manager, and your backend systems. High containment needs low latency and accurate intent recognition; that means optimizing ASR for your acoustic environment, fine-tuning NLU on domain utterances, and using a dialog manager that supports slot-filling and context windows without excessive turn-taking. We often see failures when orchestration is treated as an afterthought: a fast ASR with a brittle NLU or slow CRM calls will negate the benefits of automation. Design for observability—trace calls across components, emit intent confidence, and capture fallbacks for retraining.

How do you measure ROI and decide what to scale? Track containment rate, average handle time for agent-assisted calls, mean time to resolution, and CSAT as primary outcomes; supplement with model KPIs like ASR word error rate and NLU intent precision/recall. Translate containment into minutes saved per period and convert that into agent labor reduction or redeployment value. Use A/B tests on dialog variations to quantify CSAT lift and regression risk before widening rollouts. These metrics give you both operational validation and governance signals for controlled model updates.
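
The conversion from containment to labor value is simple arithmetic, sketched here under stated assumptions. The function name and every input figure are illustrative placeholders, not benchmarks; substitute your own call volumes and costs.

```python
def containment_savings(calls: int, containment_rate: float,
                        agent_minutes_per_call: float,
                        cost_per_agent_minute: float) -> dict:
    """Translate a containment rate into agent minutes and labor value for one period."""
    contained_calls = calls * containment_rate
    minutes_saved = contained_calls * agent_minutes_per_call
    return {
        "contained_calls": contained_calls,
        "agent_minutes_saved": minutes_saved,
        "labor_value": minutes_saved * cost_per_agent_minute,
    }


# Hypothetical monthly inputs, for illustration only.
estimate = containment_savings(calls=50_000, containment_rate=0.30,
                               agent_minutes_per_call=6.0,
                               cost_per_agent_minute=0.75)
```

This treats every contained call as one avoided agent call of average length, which overstates savings if contained calls are shorter than average; a more careful model would use the handle time of the specific intents being automated.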

Operational constraints drive architectural choices: compliance-sensitive organizations may keep PII processing on-premises or in private clouds, while teams prioritizing rapid iteration use cloud-hosted models with tokenization for sensitive fields. Latency-sensitive scenarios—high-volume IVR replacements or edge deployments inside carrier networks—benefit from local inference or hybrid architectures. Choose your orchestration tools and container management so you can scale transcription workers independently from dialog state stores and backend connectors; this separation of concerns reduces blast radius during upgrades.

Taken together, these benefits and use cases show how voicebots become productivity multipliers rather than blunt instruments. We recommend proving value with a small set of high-frequency transactions, instrumenting every path, and iterating on NLU and orchestration before expanding into transactional domains. Next, we’ll design a minimal viable voice flow that proves containment and provides the telemetry needed to scale intelligently.

Core Architecture and System Components

Building on this foundation, the system design for AI voice assistants must be treated as a distributed, real-time pipeline rather than a single model behind one API call. We start by mapping responsibilities: streaming ASR (automatic speech recognition) converts audio to incremental transcripts, NLU (natural language understanding) converts transcripts to intents and entities, the dialog manager maintains conversational state and policy, and TTS (text-to-speech) renders responses back to the caller. How do you ensure low-latency ASR at scale? You design for parallelism and short-circuiting: accept partial transcripts from ASR, let NLU act on high-confidence partials, and only wait for final hypotheses when you must commit a transaction or authenticate a caller.

In practice, these components become separate services with well-defined contracts and backpressure controls. The ASR tier typically exposes a streaming API that pushes partial and final events; the NLU service subscribes to those events and returns an intent object with a confidence score and extracted slots. The dialog manager implements policies (slot-filling, confirmation, escalation) and orchestrates backend connectors to CRM and payment systems via idempotent APIs. We recommend treating backend calls as asynchronous when possible: enqueue the update and give the caller an immediate acknowledgement while the transaction completes, so that slow third-party APIs don’t block the voice path.
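
One way to keep a slow backend off the voice path is a queue with a background worker. The sketch below uses Python's `asyncio.Queue`; `slow_crm_update`, `handle_turn`, and the 50 ms delay are hypothetical stand-ins for a real connector and its latency.

```python
import asyncio


async def slow_crm_update(update: dict, applied: list) -> None:
    await asyncio.sleep(0.05)  # stands in for a slow third-party API
    applied.append(update)


async def worker(queue: asyncio.Queue, applied: list) -> None:
    while True:
        update = await queue.get()
        try:
            await slow_crm_update(update, applied)
        finally:
            queue.task_done()


async def handle_turn(queue: asyncio.Queue, call_id: str, new_address: str) -> str:
    # Enqueue the write and answer immediately; the worker finishes it later.
    queue.put_nowait({"callId": call_id, "address": new_address})
    return "Thanks, I've submitted that change."


async def demo():
    queue, applied = asyncio.Queue(), []
    task = asyncio.create_task(worker(queue, applied))
    prompt = await handle_turn(queue, "c1", "12 Elm St")
    applied_at_reply = len(applied)  # the caller hears the prompt before the write lands
    await queue.join()               # wait for the background write to finish
    task.cancel()
    return prompt, applied_at_reply, applied
```

Note that the prompt says "submitted", not "completed": the acknowledgement should not claim an outcome the backend has not yet reported.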

A concrete integration pattern is event-driven streaming with correlation IDs for every call leg. For example, an onPartialTranscript(callId, text, timestamp) handler can update the session window while onIntent(callId, intent, confidence) influences the dialog policy; when a confidence threshold is crossed you trigger side effects like fetchAccount(callId, accountId) and patch the session state. Use a small state store (Redis or in-memory keyed by callId) for active sessions and a durable store (Postgres, DynamoDB) for transcripts and audit logs. This hybrid store pattern keeps your hot path low-latency while preserving full traceability for audits and model training.
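
The handlers named above might look roughly like this in Python. It is a sketch under assumptions: the snake_case functions mirror `onPartialTranscript`, `onIntent`, and `fetchAccount`; a plain dict stands in for Redis; the 0.7 threshold, the five-item window, and the `account_id` parameter are illustrative choices.

```python
from collections import deque

SESSIONS: dict = {}      # in-memory stand-in for Redis, keyed by callId
ACTION_THRESHOLD = 0.7   # assumed confidence threshold
side_effects: list = []  # records triggered backend calls, for illustration


def _session(call_id: str) -> dict:
    return SESSIONS.setdefault(
        call_id,
        {"window": deque(maxlen=5), "intent": None, "account_fetched": False},
    )


def on_partial_transcript(call_id: str, text: str, timestamp: float) -> None:
    # Keep only the most recent partials in the hot-path session window.
    _session(call_id)["window"].append((timestamp, text))


def fetch_account(call_id: str, account_id: str) -> None:
    side_effects.append(("fetchAccount", call_id, account_id))
    _session(call_id)["account_fetched"] = True  # patch the session state


def on_intent(call_id: str, intent: str, confidence: float, account_id=None) -> None:
    session = _session(call_id)
    session["intent"] = (intent, confidence)
    # Trigger the side effect once, the first time the threshold is crossed.
    if confidence >= ACTION_THRESHOLD and account_id and not session["account_fetched"]:
        fetch_account(call_id, account_id)
```

Guarding on `account_fetched` keeps repeated high-confidence intent events from issuing duplicate fetches for the same call.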

Scalability and resilience depend on separating concerns and choosing the right orchestration tools. Scale ASR workers independently from NLU workers because ASR is CPU/GPU-bound and NLU is often memory-bound; use container orchestration and autoscaling policies that reflect those resource profiles. Apply a service mesh or API gateway to implement retries, timeouts, and circuit breakers so upstream outages degrade gracefully to deterministic IVR fallbacks or immediate agent transfer. When you need ultra-low latency or regulatory isolation, consider hybrid hosting: ASR or PII-sensitive components on-premises with NLU/TTS in the cloud, or run edge inference for carrier-proximate deployments.
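
In production a service mesh or gateway would usually provide circuit breaking, but the mechanism is easy to sketch in application code. The class below is a simplified illustration: the thresholds, the `clock` parameter, and the fallback callable are assumptions made for the example.

```python
import time


class CircuitBreaker:
    """Open after repeated failures, then allow a trial call after a cool-down."""

    def __init__(self, failure_threshold=3, reset_after_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                return fallback()   # open: skip the upstream entirely
            self.opened_at = None   # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()       # degrade to the deterministic path
        self.failures = 0
        return result
```

Here `fallback` would be the deterministic IVR menu or agent transfer described above, so callers get a predictable experience while the upstream recovers.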

Observability should be built into every hop: emit trace IDs, intent confidence, ASR partial-to-final latency, and dialog fallbacks per 1,000 calls. Instrument p50/p95/p99 latencies for ASR, NLU, and backend calls and monitor model-specific KPIs such as ASR word error rate and NLU precision/recall by intent. Capture transcripts and fallbacks for retraining while preserving PII via tokenization or redaction. Use distributed tracing to link a single customer experience across services so you can answer operational questions like “Which call paths cause the most handoffs?” and then iterate on the dialog or model.
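
For reference, the latency percentiles and the fallback rate can be computed as below. This sketch uses the nearest-rank percentile definition, which is one of several conventions; metrics backends often estimate percentiles from histograms instead. The sample latencies are made up for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a list of numbers; pct is in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def fallbacks_per_1000(fallbacks: int, calls: int) -> float:
    return 1000 * fallbacks / calls


# Hypothetical per-call ASR latencies in milliseconds.
latencies_ms = [120, 180, 150, 900, 200, 170, 160, 140, 130, 2500]
summary = {p: percentile(latencies_ms, p) for p in (50, 95, 99)}
```

With so few samples, p95 and p99 both land on the single slowest call, which is why tail percentiles only become meaningful at realistic traffic volumes.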

Security and operational controls close the loop: encrypt audio in transit and at rest, tokenize or redact PII before forwarding to third-party models, and maintain consent metadata alongside recordings for auditability. Implement deterministic fallbacks—menu prompts, confidence-based confirmations, or immediate escalation to an agent—so failures are predictable and measurable. Finally, instrument your deployment so rollouts are staged (canary, dark-launch) and metrics gate promotion; next, we’ll use these architectural constraints to design a minimal viable voice flow that proves containment and instruments the exact telemetry you need to scale voicebots responsibly.

ASR, NLU, and Dialogue Management

Building on this foundation, the runtime choreography between the speech-to-text layer (ASR), the language-understanding layer (NLU), and the dialog manager makes or breaks caller experience. If you tune only one component, the system will still fail at the boundaries: noisy transcription creates garbage intents, brittle intent models trigger unnecessary handoffs, and a weak dialog policy amplifies those failures into loops. We’ll unpack where teams commonly trade off latency, accuracy, and robustness so you can make concrete design and operational decisions for production voicebots.

Start with ASR because it produces the raw signal everything else consumes. ASR (automatic speech recognition) provides partial and final transcript hypotheses; use partials to reduce round-trip latency but only accept actions on partials that exceed a calibrated confidence threshold. For example, implement an event handler like onPartialTranscript(callId, text, confidence) that forwards high-confidence partials to NLU while deferring transactions until a final event or an explicit confirmation. Optimize acoustic models for your contact center audio profile and measure ASR word error rate by intent-critical words rather than global WER to focus retraining where it matters.
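
To make the difference between global WER and intent-critical errors concrete, here is a small sketch. `word_error_rate` is the standard word-level edit-distance definition; `critical_word_miss_rate` is a deliberately simple bag-of-words check introduced for this example, not an established metric.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference is empty")
    # prev[j] holds the edit distance between the ref prefix so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (r != h)))    # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def critical_word_miss_rate(reference: str, hypothesis: str, critical_words: set) -> float:
    """Fraction of intent-critical reference words missing from the hypothesis."""
    ref_words = [w for w in reference.lower().split() if w in critical_words]
    hyp_words = set(hypothesis.lower().split())
    if not ref_words:
        return 0.0
    return sum(w not in hyp_words for w in ref_words) / len(ref_words)
```

For the reference "please cancel my last payment", the hypotheses "please cancel my lost payment" and "please counsel my last payment" have the same global WER, but only the second loses a word the intent depends on.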

NLU turns transcripts into actionable intents and structured slots, and you must design it for the domain, not general chat. Define intent taxonomy narrowly for high-frequency tasks, combine intent classification with slot-filling (sequence labeling or joint models), and maintain an entity resolver that maps noisy text to canonical records in CRM. How do you calibrate model decisions? Use confidence calibration (isotonic regression or Platt scaling) and set per-intent thresholds; if intent_confidence < 0.7 ask a clarifying question or fall back to a menu. Maintain a labeled corpus of real call utterances and continuously retrain on fallbacks captured in production to reduce false negatives over time.
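
Applying a Platt-scaled calibration and per-intent thresholds could look like the following. The sketch assumes the sigmoid parameters were fitted offline on labeled call data; the parameter values, intent names, and thresholds shown are placeholders, not recommendations.

```python
import math

# Hypothetical per-intent Platt parameters (a, b), assumed to be fitted offline.
PLATT_PARAMS = {"check_balance": (-4.0, 2.0), "make_payment": (-4.0, 2.0)}
# Hypothetical per-intent thresholds; riskier intents get a stricter bar.
THRESHOLDS = {"check_balance": 0.7, "make_payment": 0.9}
DEFAULT_PARAMS, DEFAULT_THRESHOLD = (-4.0, 2.0), 0.7


def calibrate(raw_score: float, a: float, b: float) -> float:
    """Platt scaling: map a raw classifier score to a probability."""
    return 1.0 / (1.0 + math.exp(a * raw_score + b))


def decide(intent: str, raw_score: float) -> str:
    a, b = PLATT_PARAMS.get(intent, DEFAULT_PARAMS)
    confidence = calibrate(raw_score, a, b)
    threshold = THRESHOLDS.get(intent, DEFAULT_THRESHOLD)
    return "execute" if confidence >= threshold else "clarify"
```

The same raw score can therefore execute a balance check but trigger a clarifying question for a payment, which is the point of setting thresholds per intent.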

The dialog manager enforces policy: slot collection, confirmations, conditional branching, and escalation rules. Implement session state keyed by callId in a low-latency store (Redis or in-memory) and persist audit logs elsewhere; this hybrid pattern keeps the hot path snappy while preserving traceability. Choose a control model that fits your use case: a deterministic finite-state flow for payment or authentication, a frame-based slot-filling system for bookings, or a policy-driven controller (validated in simulation before rollout) for adaptive behavior. Importantly, design deterministic fallbacks: if the dialog manager observes repeated low-confidence turns or repeated NLU intent switches, trigger an immediate agent transfer with a condensed context payload.
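
The deterministic escalation rule can be sketched as a small tracker. This version counts consecutive low-confidence turns and cumulative intent switches; the limits, field names, and payload shape are assumptions for illustration.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EscalationPolicy:
    low_confidence: float = 0.7
    max_low_turns: int = 2
    max_intent_switches: int = 2
    low_turns: int = 0        # consecutive low-confidence turns
    switches: int = 0         # intent changes seen during the call
    last_intent: Optional[str] = None
    recent: deque = field(default_factory=lambda: deque(maxlen=3))

    def observe(self, utterance: str, intent: str, confidence: float):
        """Record a turn; return a handoff payload if escalation is due, else None."""
        self.recent.append(utterance)
        self.low_turns = self.low_turns + 1 if confidence < self.low_confidence else 0
        if self.last_intent is not None and intent != self.last_intent:
            self.switches += 1
        self.last_intent = intent
        if self.low_turns >= self.max_low_turns:
            reason = "low_confidence"
        elif self.switches >= self.max_intent_switches:
            reason = "intent_switching"
        else:
            return None
        # Condensed context for the agent: why, what was said, last guess.
        return {"reason": reason, "last_utterances": list(self.recent), "last_intent": intent}
```

Because the rule depends only on counters, the same call history always produces the same escalation decision, which makes handoff behavior auditable.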

Orchestration is where integration engineering pays dividends. Correlate ASR partial-to-final latencies, NLU confidence trajectories, and dialog fallbacks with a single trace ID so you can answer operational questions like “Which call path causes the most handoffs?” In practice, make backend calls asynchronous where possible: enqueue fetchAccount or submitPayment and play an acknowledgement while the request completes, confirming the outcome only once the backend reports success; reserve synchronous paths for authentication or consent. Instrument p50/p95/p99 latencies for each hop and capture the last N utterances on handoff so agents get context without replaying the call.

In production, treat these components as a feedback loop rather than static blocks. Start with high-frequency, low-complexity flows, set conservative confidence thresholds, and run A/B tests on dialog variations to measure containment lift and CSAT impact. We recommend three operational rules: tune ASR for domain vocabulary, calibrate NLU per intent with production data, and bake deterministic escalation into the dialog manager. Taking these steps ensures your voicebot reduces wait times while preserving predictable, auditable behavior—next we’ll apply these constraints to design the minimal viable voice flow that proves containment and instruments ROI.

Integrating with CRM and Telephony

Integrating CRM and telephony is where automation stops being clever and starts being useful: you get context-rich conversations, lower handle time, and handoffs that don’t force agents to ask the same questions twice. In the first 10–20 seconds of a call the voicebot should authenticate, fetch the canonical customer record from CRM, and surface actionable fields to the dialog manager so decisions are data-driven. These front-loaded steps improve containment and reduce average handle time in the contact center while keeping the conversation natural and transactional.

Design the integration as a low-latency, correlated data flow rather than a sequence of independent API calls. Start each call with a unique correlation ID that travels through ASR, NLU, dialog state, CRM fetches, and telephony events so you can trace a single customer journey end-to-end. How do you guarantee the right data at the right time? Use a short-lived session cache (Redis or in-memory store) keyed by callId to store authenticated identities, fetched CRM pointers, and recent utterances, and only persist durable audit logs asynchronously to your database.

Authentication and data minimization govern what you fetch from CRM and when. Perform multi-factor or conversational authentication early, tokenize or redact PII before storing or forwarding it, and fetch only the CRM fields required for the current intent (balance, last order, open tickets). Implement an entity resolver that maps noisy slot values to CRM keys (for example, map “last payment” to transactionId) and make CRM writes idempotent so retries don’t create duplicate records. A simple pattern is to expose a thin backend connector that translates dialog actions into canonical CRM API calls and returns standardized success/failure payloads to the dialog manager.
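
A thin connector with idempotent writes might be sketched as follows. The in-memory structures stand in for a real CRM API, and deriving the idempotency key from the call ID, action, and payload is one possible scheme assumed for this example.

```python
import hashlib
import json


class CrmConnector:
    """Thin connector sketch; in-memory structures stand in for a real CRM API."""

    def __init__(self):
        self._applied = {}   # idempotency key -> stored response
        self.records = []

    @staticmethod
    def idempotency_key(call_id: str, action: str, payload: dict) -> str:
        body = json.dumps(payload, sort_keys=True)
        return hashlib.sha256(f"{call_id}:{action}:{body}".encode()).hexdigest()

    def write(self, call_id: str, action: str, payload: dict) -> dict:
        key = self.idempotency_key(call_id, action, payload)
        if key in self._applied:
            # A retry of the same write returns the original response unchanged.
            return self._applied[key]
        self.records.append({"action": action, **payload})
        response = {"status": "success", "recordId": len(self.records), "idempotencyKey": key}
        self._applied[key] = response
        return response
```

Many real APIs accept a client-supplied idempotency key in a request header; in that case the connector would forward the key instead of tracking applied writes itself.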

Telephony integration requires attention to call legs, media, and event semantics—SIP for call control, RTP/WebRTC for media, and CTI/webhooks for screen-pop. Bridge the telephony platform and application logic with a media gateway or cloud telephony provider that exposes webhooks and a streaming API for audio. For transfers and warm handoffs, attach a context payload to the CTI session containing the last N utterances, intent confidence trajectory, and CRM references so agents get a prepopulated screen pop. Example webhook payloads should include correlationId, callerId, crmRecordId, and an array of recent intents to make agent-side rendering deterministic.
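
A handoff payload carrying the fields listed above could be assembled like this. The `correlationId`, `callerId`, and `crmRecordId` keys follow the text; `recentIntents`, `lastUtterances`, and the shape of each turn are assumptions made for the sketch.

```python
import json


def build_handoff_payload(correlation_id: str, caller_id: str, crm_record_id: str,
                          turns: list, last_n: int = 3) -> dict:
    """Build the context attached to the CTI session on transfer."""
    recent = turns[-last_n:]
    return {
        "correlationId": correlation_id,
        "callerId": caller_id,
        "crmRecordId": crm_record_id,
        "recentIntents": [
            {"intent": t["intent"], "confidence": t["confidence"]} for t in recent
        ],
        "lastUtterances": [t["utterance"] for t in recent],
    }


# Hypothetical turn history for one call.
example_turns = [
    {"utterance": "hi", "intent": "greeting", "confidence": 0.98},
    {"utterance": "my bill is wrong", "intent": "billing_dispute", "confidence": 0.81},
    {"utterance": "the march one", "intent": "billing_dispute", "confidence": 0.62},
    {"utterance": "I want a person", "intent": "agent_request", "confidence": 0.95},
]
payload_json = json.dumps(
    build_handoff_payload("corr-123", "+15550100", "crm-42", example_turns)
)
```

Keeping the payload to a fixed set of keys means the agent desktop can render the screen pop without inspecting free-form data.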

Observability, testing, and fallback policies are what keep integrations reliable at scale. Instrument latency for CRM fetches (p50/p95/p99), ticket-creation success rates, and handoff reasons; capture transcripts and fallback triggers for retraining. Simulate telephony failures and CRM slowdowns in chaos tests so your dialog manager can exercise deterministic fallbacks—menu-based recovery, confirmation prompts, or immediate agent transfer—without manual intervention. Treat these metrics as first-class: map containment rate and AHT improvements back to specific CRM endpoints or telephony flows to prioritize optimization.

Security, consent, and operational controls close the loop and prepare you to scale. Encrypt audio and API traffic end-to-end, maintain consent metadata alongside recordings, and apply tokenization before sending any sensitive fields to third-party services. When you design integrations this way—correlated tracing, session caching, idempotent CRM connectors, and deterministic telephony fallbacks—you create a reliable foundation for more complex transactions and smoother agent augmentation. Building on these patterns, we can now look at designing a minimal viable voice flow that proves containment and instruments the exact telemetry you need to justify broader rollouts.

Deploy, Monitor, and Optimize Performance

Deploying a voicebot to production is where design assumptions meet real-world variability, and the first 48 hours tell you more than weeks of lab tests. We need deployment patterns that let us observe real traffic, measure containment and latency, and reduce blast radius when something breaks. Start with a staged rollout—canary or blue/green deployments that route a small percentage of live calls to the new model or dialog flow while the remainder uses the stable release. This approach gives you a fast feedback loop on ASR and NLU behavior in production audio profiles without exposing all callers to regressions.

Build observability into the deployment pipeline so metrics and traces are first-class artifacts of every release. Instrument p50/p95/p99 latencies for ASR, NLU, dialog manager decisions, and backend CRM calls; emit model-specific KPIs like ASR word error rate (WER), per-intent precision/recall, and fallbacks per 1,000 calls. Correlate these with business metrics—containment rate, average handle time (AHT), and CSAT—using a common trace or correlationId. How do you know when a model rollout is ready to promote? Gate promotions on both statistical canary analysis and business delta thresholds (for example, no >5% containment regression and p95 latency within SLA for 1,000 canary calls).
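
The business-threshold half of that promotion gate can be sketched as a pure function. It does not cover statistical canary analysis. The 5% figure is read here as an absolute drop in containment rate, and the 1,500 ms SLA is a placeholder; both are assumptions to adjust.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    calls: int
    contained: int
    p95_latency_ms: float

    @property
    def containment_rate(self) -> float:
        return self.contained / self.calls


def can_promote(baseline: ReleaseStats, canary: ReleaseStats, *,
                min_calls: int = 1000,
                max_containment_drop: float = 0.05,
                p95_sla_ms: float = 1500.0):
    """Return (decision, reason) for promoting the canary release."""
    if canary.calls < min_calls:
        return False, "insufficient canary traffic"
    drop = baseline.containment_rate - canary.containment_rate
    if drop > max_containment_drop:
        return False, "containment regression"
    if canary.p95_latency_ms > p95_sla_ms:
        return False, "p95 latency over SLA"
    return True, "ok"
```

Returning a reason alongside the decision lets the pipeline log why a rollout was blocked without a human reconstructing it from dashboards.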

Make alerts action-oriented and tie each one to a runbook so on-call engineers and operations teams can respond consistently. Alert on sustained regressions (containment down >3% for 10 minutes), latency spikes (p99 > 3s for dialog decision), and sudden increases in fallbacks or transfers to agents. Pair alerts with automated diagnostics that collect last-N utterances (redacted), intent confidence trajectories, and correlated backend traces so responders have context immediately. An example Prometheus alert rule looks like this in spirit:

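    alert: VoicebotContainmentDrop
    # Assumes containment_rate is a gauge between 0 and 1; delta() over the
    # window goes negative when containment falls.
    expr: delta(containment_rate[10m]) < -0.03
    for: 10m
    annotations:
      runbook: /runbooks/voicebot-containment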

Scale ASR, NLU, and orchestration independently because their resource profiles differ: ASR is CPU/GPU-bound and often benefits from horizontal workers that handle streaming media, while NLU and dialog managers are memory-bound and should scale with replica counts and managed caches. Use autoscaling policies based on real signals—queued audio sessions, transcript processing lag, and service latency—rather than raw CPU to avoid mis-sizing under bursty call volumes. Implement circuit breakers and graceful degradation paths: during upstream CRM slowdowns, fall back to cached session data or present deterministic menu options and queue a background write to preserve the caller experience.

Optimization is both operational and model-level. For operations, reduce cold starts by warming NLU containers and keep a hot partition of ASR workers during known peak hours; profile and tune JVM garbage collection settings or container memory limits to prevent tail latency. For models, iterate on targeted retraining: harvest fallbacks, misclassified utterances, and noisy transcripts (with PII redaction) into a labeled dataset and retrain per-intent models instead of one monolithic retrain. Run A/B tests on dialog variants to measure lift in containment and CSAT, and promote changes only when business metrics show consistent improvement.

Privacy-aware telemetry is non-negotiable: sample transcripts, redact or tokenize PII, and store raw audio under strict retention policies. Use sampled logging for high-volume paths and full traces only on errors or canaries so you retain signal without exploding storage costs or increasing compliance risk. Keep a pipeline that can link anonymized telemetry back to labeled examples for training while preserving consent metadata for audits.
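
A rough sketch of redaction plus sampled logging follows. The regular expressions are illustrative only and will miss many PII forms, such as digits spoken as words, so a production system would more likely rely on a dedicated PII detection service. The 5% sample rate and the function names are assumptions.

```python
import hashlib
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_NUMBER_RE = re.compile(r"\d{4,}")  # card, account, or phone-like digit runs


def redact(text: str) -> str:
    """Replace email addresses and long digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return LONG_NUMBER_RE.sub("[NUMBER]", text)


def should_log_full_trace(call_id: str, *, is_error: bool = False,
                          is_canary: bool = False, sample_pct: int = 5) -> bool:
    """Always keep full traces for errors and canaries; otherwise sample by call ID."""
    if is_error or is_canary:
        return True
    # Hash-based bucketing makes the decision stable for a given call.
    bucket = int(hashlib.sha256(call_id.encode()).hexdigest(), 16) % 100
    return bucket < sample_pct
```

Sampling on a hash of the call ID rather than a random draw means every service makes the same keep-or-drop decision for a call, so sampled traces stay complete end to end.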

Taking this concept further, bake continuous validation into your CI/CD so every change runs canary traffic, collects model and business KPIs, and gates promotion with automated rollbacks on regressions. When we deploy this way—observability-first, canary-driven, and privacy-aware—we move from reactive firefighting to measured optimization, letting the voicebot reduce wait times and improve agent productivity predictably as we scale.