Understanding AI Voice Cloning Technology

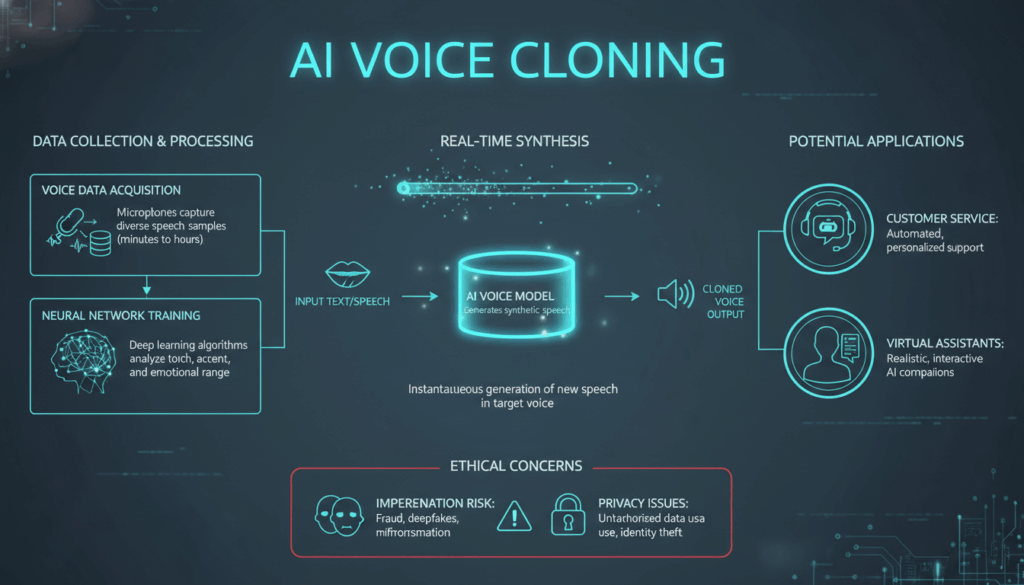

AI voice cloning technology leverages advanced machine learning techniques to replicate human voices with stunning accuracy. This technology involves several sophisticated processes that allow the creation of a digital replica of an individual’s voice, enabling the generation of synthetic speech that closely mirrors the nuances and characteristics of the original speaker.

At its core, voice cloning relies on deep learning models, particularly neural networks. These models are typically trained on large datasets of recorded speech, often from many speakers, and then adapted to the individual being cloned using recordings of that person. The process begins by analyzing the characteristics that make the speaker’s voice distinctive, such as pitch, tone, rhythm, and accent, which are crucial for capturing the essence of the speaker.

The neural network architectures most often used in voice cloning are recurrent neural networks (RNNs) and, more recently, transformers. These models excel at handling sequential data, which makes them well suited to the temporal structure of speech. Another prominent architecture is WaveNet, a generative model developed by DeepMind that uses stacks of dilated causal convolutions rather than recurrence. WaveNet models the raw audio waveform directly, generating it one sample at a time, which allows it to produce highly realistic speech.
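To make "dilated causal convolution" concrete, here is a minimal pure-Python sketch, not DeepMind’s implementation. It shows the two properties that matter: each output sample depends only on current and past inputs, and doubling the dilation from layer to layer makes the receptive field grow exponentially with depth. The kernel size of 2 and the single block of dilations from 1 to 512 are illustrative assumptions; the WaveNet paper describes repeating such blocks, and real models add gated activations and residual connections.

```python
def causal_dilated_conv(x, weights, dilation):
    """Causal 1-D convolution: output[t] uses only x[t], x[t - d], x[t - 2d], ...

    weights[k] multiplies x[t - k * dilation]; samples before t = 0 count as zero.
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilations):
    """Number of input samples (current one included) visible to a stack of such layers."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# One WaveNet-style block: kernel size 2, dilation doubling from 1 to 512.
dilations = [2 ** i for i in range(10)]
print(receptive_field(2, dilations))  # 1024

# An impulse shows causality: the response never precedes the input,
# and dilation 4 places the second tap four samples later.
impulse = [1.0] + [0.0] * 7
print(causal_dilated_conv(impulse, [0.5, 0.5], dilation=4))
# [0.5, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0]
```

Ten layers with kernel size 2 already cover 1,024 past samples; an undilated stack would need 1,023 layers to see as far.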

Another critical aspect of the technology is the generation phase, in which the trained model creates new speech. Systems such as Tacotron 2 use a two-stage process. First, a sequence-to-sequence model converts input text into a mel spectrogram, a time-frequency representation showing how the energy of the voice is distributed across frequencies at each moment. Then a second model, called a vocoder (a modified WaveNet in the original Tacotron 2 work, or alternatives such as WaveGlow), synthesizes audible speech from this spectrogram.
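The spectrogram in that pipeline can be sketched in plain Python: a short-time Fourier transform turns audio into per-frame frequency magnitudes, and the mel scale warps frequency toward human pitch perception. This is an illustrative sketch under simplifying assumptions: it uses a naive DFT instead of an FFT, it omits the mel filterbank that would pool the bins into mel bands, and the frame length, hop, and test tone are arbitrary choices rather than Tacotron 2’s settings.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n, used to reduce spectral leakage at frame edges."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def magnitude_spectrogram(signal, frame_len, hop):
    """STFT magnitudes: one list of frame_len // 2 + 1 frequency bins per frame.

    Uses a naive O(N^2) DFT for clarity; real pipelines use an FFT.
    """
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        bins = []
        for k in range(frame_len // 2 + 1):
            acc = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            bins.append(abs(acc))
        frames.append(bins)
    return frames


def hz_to_mel(f_hz):
    """One common (HTK-style) mel scale: roughly linear below 1 kHz, logarithmic above."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(1024)]
spec = magnitude_spectrogram(tone, frame_len=256, hop=128)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), peak_bin, peak_bin * sample_rate / 256)  # 7 32 1000.0
```

The 1 kHz test tone lands in bin 32 because each bin spans 8000 / 256 = 31.25 Hz.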

Advances in neural network architectures have also enabled zero-shot voice cloning, in which a new voice is reproduced from a short reference sample without retraining the model, typically by conditioning synthesis on a speaker embedding extracted from that sample. This greatly reduces the amount of data required, making it practical to clone a voice from just a few seconds of recorded speech.
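Zero-shot systems commonly condition on a speaker in a way that can be sketched under simplifying assumptions: average a speaker encoder’s frame-level outputs for the reference clip into one fixed-length, normalized embedding, then attach that embedding to every frame of the text encoder’s output so the decoder sees the speaker identity at each step. The frame features below are hypothetical hand-written numbers standing in for a trained encoder, and concatenation is only one of several conditioning schemes in use.

```python
import math


def speaker_embedding(frame_features):
    """Mean-pool per-frame features of a reference clip into one L2-normalized vector.

    In a real system the frame features come from a trained speaker encoder.
    """
    dim = len(frame_features[0])
    mean = [sum(f[i] for f in frame_features) / len(frame_features) for i in range(dim)]
    norm = math.sqrt(sum(v * v for v in mean)) or 1.0
    return [v / norm for v in mean]


def condition_on_speaker(encoder_outputs, embedding):
    """Concatenate the same speaker embedding onto every text-encoder frame."""
    return [frame + embedding for frame in encoder_outputs]


reference = [[3.0, 4.0], [3.0, 4.0], [3.0, 4.0]]  # hypothetical speaker-encoder frames
emb = speaker_embedding(reference)                 # [0.6, 0.8]
text_frames = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]   # hypothetical text-encoder outputs
conditioned = condition_on_speaker(text_frames, emb)
print(conditioned[0])  # [0.1, 0.2, 0.3, 0.6, 0.8]
```

Because the embedding is computed at inference time, swapping in a different reference clip changes the voice without touching the model’s weights, which is what makes the approach "zero-shot".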

Personal assistant technology is one potential application. Products such as Google’s Duplex and Amazon’s Alexa already rely on natural-sounding synthetic speech, and voice cloning could let such assistants respond in a personalized voice. The technology is also finding applications in the entertainment industry, for example creating voiceovers in multiple languages without the original actor having to record each line.

The sophistication of AI voice cloning opens up a plethora of opportunities but also poses significant ethical and security concerns. It highlights the need for robust guidelines and ethical considerations to prevent misuse, such as creating unauthorized clones for fraudulent activities. Voice fingerprinting and watermarking are being explored as potential solutions to these challenges, aiming to ensure that synthetic voices can be reliably distinguished from authentic ones.

Overall, AI voice cloning technology is a groundbreaking advancement, representing both a powerful tool for innovation and a challenge for responsible usage. Its development continues to push the envelope of what artificial intelligence can achieve in replicating and understanding human characteristics.

Step-by-Step Guide to Cloning Your Own Voice

To clone your own voice, you’ll need an AI-based voice synthesis tool, typically built on neural networks trained to replicate speech patterns in detail. The process generally involves the following steps:

- Collect Suitable Audio Data

Begin by gathering a dataset of your own voice recordings. For basic cloning, you might need as little as 5 to 10 minutes of clean, high-quality recordings; for more accurate and expressive results, a longer dataset (about 30 minutes to an hour) is advisable. Make sure your recordings are clear, free from background noise, and cover a range of speaking styles and intonations. The goal is to capture the nuances and tonal variations of your speech.
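Before uploading, it helps to check how much audio you actually have and whether the files are consistent. The sketch below uses only Python’s standard `wave` module, so it assumes uncompressed WAV files; the 5-minute minimum and the 22,050 Hz expected sample rate are placeholder assumptions to replace with your platform’s requirements.

```python
import wave
from pathlib import Path


def audit_dataset(folder, minimum_minutes=5.0, expected_rate=22050):
    """Total the WAV audio in `folder` and flag files that are not mono or not at `expected_rate`.

    Durations come from the WAV headers (frame count / sample rate), so no audio is decoded.
    """
    paths = sorted(Path(folder).glob("*.wav"))
    total_seconds = 0.0
    issues = []
    for path in paths:
        with wave.open(str(path), "rb") as wav:
            rate = wav.getframerate()
            channels = wav.getnchannels()
            total_seconds += wav.getnframes() / rate
        if channels != 1:
            issues.append(f"{path.name}: {channels} channels (expected mono)")
        if rate != expected_rate:
            issues.append(f"{path.name}: {rate} Hz (expected {expected_rate} Hz)")
    minutes = total_seconds / 60.0
    return {
        "files": len(paths),
        "minutes": round(minutes, 2),
        "enough": minutes >= minimum_minutes,
        "issues": issues,
    }
```

For example, `audit_dataset("my_recordings")` (a hypothetical folder name) returns the file count, total minutes, whether the target is met, and a list of format problems to fix before uploading.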

- Select a Voice Cloning Tool

Choose a reliable voice cloning tool or platform. Popular choices include iSpeech, Lyrebird (now part of Descript), and IBM Watson’s custom models. Each tool has its own requirements and strengths, so review the features to determine which best suits your project’s needs. Many of these tools provide web interfaces, software applications, or APIs that facilitate the cloning process.

- Pre-Process the Collected Audio

Before uploading your audio files, prepare them by converting them to the required format (commonly WAV) and trimming them to exclude any unnecessary silences or noises. Use audio processing software like Audacity or Adobe Audition to fine-tune your recordings. This step ensures that the input data fed into the neural network is as standardized and clean as possible.
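The trimming step described above can also be scripted, together with simple level normalization. This minimal sketch assumes the audio has already been decoded into floats between -1.0 and 1.0; it drops quiet frames at the start and end using a simple peak threshold, then scales the clip so its loudest sample reaches a target peak. The threshold, frame length, and 0.9 target are illustrative defaults, not values any particular platform requires.

```python
def trim_silence(samples, threshold=0.02, frame_len=256):
    """Drop leading and trailing frames whose peak amplitude stays below `threshold`.

    Quiet frames between loud ones are kept, so natural pauses inside speech survive.
    """
    frames = [samples[i:i + frame_len] for i in range(0, len(samples), frame_len)]
    loud = [i for i, f in enumerate(frames) if max(abs(s) for s in f) >= threshold]
    if not loud:
        return []
    return [s for f in frames[loud[0]:loud[-1] + 1] for s in f]


def peak_normalize(samples, target_peak=0.9):
    """Scale the clip so its loudest sample reaches `target_peak`, leaving headroom below clipping."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)
    return [s * target_peak / peak for s in samples]


# Synthetic clip: silence, a burst of "speech", then faint noise.
clip = [0.0] * 512 + [0.5, -0.25] * 128 + [0.001] * 512
cleaned = peak_normalize(trim_silence(clip))
print(len(cleaned), max(cleaned))  # 256 0.9
```

A peak threshold is the simplest possible silence detector; it will misjudge breaths or hum, so listen to the results or use an editor’s silence tools for difficult recordings.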

- Upload the Audio Data

Follow the platform’s specific guidelines to upload your prepared audio files. Many systems expect the recordings in a structured format, such as short clips paired with their transcripts, so you may need to split long recordings into smaller files.
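Splitting long recordings can be automated with the standard `wave` module. This sketch cuts at fixed intervals and writes a simple manifest with an empty transcript column to fill in; the `name|transcript` layout and the 10-second default are hypothetical examples, so follow whatever format and clip length your platform documents.

```python
import wave
from pathlib import Path


def split_wav(path, out_dir, max_seconds=10.0):
    """Split one WAV file into consecutive clips of at most `max_seconds` each.

    Cuts fall at fixed positions, so a clip can end mid-word. Returns the clip paths.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    clips = []
    with wave.open(str(path), "rb") as src:
        params = src.getparams()
        frames_per_clip = max(1, int(max_seconds * src.getframerate()))
        while True:
            data = src.readframes(frames_per_clip)
            if not data:
                break
            clip_path = out_dir / f"{Path(path).stem}_{len(clips):04d}.wav"
            with wave.open(str(clip_path), "wb") as dst:
                # Copy the source format; the wave module corrects the frame count in the header.
                dst.setparams(params)
                dst.writeframes(data)
            clips.append(clip_path)
    return clips


def write_manifest(clips, manifest_path):
    """Write one `clip_name|` line per clip, leaving the transcript column to be filled in.

    This pipe-separated layout is a hypothetical example, not a required format.
    """
    lines = [f"{Path(clip).stem}|" for clip in clips]
    Path(manifest_path).write_text("\n".join(lines) + "\n", encoding="utf-8")
```

Fixed-length cuts are the simplest option; cutting at detected pauses instead avoids splitting words across clips, which matters when each clip must match a transcript.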

- Train the Model

Once uploaded, the platform will process your data through its machine learning algorithms. If using a service that offers direct control over training parameters, experiment with different model settings to improve synthesis accuracy. Training times vary significantly based on the data volume and the computational resources at your disposal. Larger, more detailed datasets will result in longer training times but potentially more nuanced voice clones.
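When you do control training yourself, a common safeguard is early stopping: evaluate on held-out recordings the model never trains on, stop once validation loss has stopped improving for a few evaluations, and keep the best checkpoint. Small single-speaker datasets can overfit, so longer training is not automatically better. The sketch below is a generic illustration with made-up loss values and a hypothetical `patience` of 3; hosted platforms usually handle this internally.

```python
def should_stop(val_losses, patience=3, min_delta=0.0):
    """Early stopping rule: True once the last `patience` validation losses have not
    improved on the best earlier loss by more than `min_delta`."""
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    recent_best = min(val_losses[-patience:])
    return recent_best >= best_before - min_delta


def best_epoch(val_losses):
    """Index of the lowest validation loss, i.e. the checkpoint worth keeping."""
    return min(range(len(val_losses)), key=val_losses.__getitem__)


# Made-up validation losses, one per epoch, standing in for a real training run.
history = []
for epoch, loss in enumerate([1.20, 0.95, 0.81, 0.78, 0.79, 0.80, 0.82, 0.70]):
    history.append(loss)
    if should_stop(history, patience=3):
        print(f"stopping after epoch {epoch}; keep epoch {best_epoch(history)}")
        # stopping after epoch 6; keep epoch 3
        break
```

Note that the made-up improvement to 0.70 at epoch 7 is never reached: a larger `patience` tolerates longer plateaus at the cost of extra training time.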

- Evaluate and Refine the Generated Voice

After the initial cloning, review the synthesized voice outputs. Spot-check the samples by listening for mismatches in tone, pacing, or pronunciation. Most platforms let you adjust generation settings or retrain the model with additional data if the output does not meet your expectations. Iterative refinement can significantly improve the authenticity of the cloned voice.
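Listening remains the main test, but an objective number can help compare iterations. Mel-cepstral distortion (MCD) is a commonly reported metric: the average distance, in decibels, between the mel-cepstral coefficients of a reference recording and a synthesized rendering of the same sentence, where lower is better. This sketch assumes you have already extracted the coefficients with an audio analysis tool and time-aligned the two sequences (often done with dynamic time warping); neither step is shown.

```python
import math


def mel_cepstral_distortion(ref_frames, syn_frames):
    """Mean mel-cepstral distortion (dB) between two time-aligned lists of coefficient vectors.

    Per frame: (10 / ln 10) * sqrt(2 * sum_d (c_d - c'_d)^2), excluding the
    energy coefficient c_0, as is conventional. Lower means closer to the reference.
    """
    if not ref_frames or len(ref_frames) != len(syn_frames):
        raise ValueError("need two non-empty, time-aligned frame sequences of equal length")
    scale = 10.0 / math.log(10.0)
    total = 0.0
    for ref, syn in zip(ref_frames, syn_frames):
        squared = sum((r - s) ** 2 for r, s in zip(ref[1:], syn[1:]))
        total += scale * math.sqrt(2.0 * squared)
    return total / len(ref_frames)


# Hypothetical 3-coefficient frames: only c_1 differs (by 1.0); c_0 is ignored.
print(round(mel_cepstral_distortion([[5.0, 1.0, 0.0]], [[9.0, 0.0, 0.0]]), 2))  # 6.14
```

MCD measures spectral closeness, not naturalness or speaker likeness, so treat it as a complement to listening rather than a replacement.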

- Utilize and Implement the Cloned Voice

Once satisfied with the synthesis results, explore integration options offered by your chosen platform. You might create text-to-speech applications, personalized aural experiences, or automated content generation tools. Be sure to leverage any APIs provided to facilitate integration with your existing systems or digital products.
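Platform APIs differ, so the following shows only the general shape of an integration, not any vendor’s real interface: the endpoint URL, the JSON field names, and the bearer-token header are all hypothetical placeholders. It uses Python’s standard `urllib.request` to build a POST request carrying the text and a cloned-voice identifier, and a second helper sends it and saves the returned audio bytes.

```python
import json
import urllib.request

# Hypothetical endpoint: substitute the URL your platform documents.
API_URL = "https://api.example.com/v1/text-to-speech"


def build_tts_request(text, voice_id, api_key, audio_format="wav"):
    """Build (but do not send) a POST request asking a TTS service to speak `text`
    in the cloned voice `voice_id`. Field names are hypothetical."""
    body = json.dumps({"text": text, "voice_id": voice_id, "format": audio_format}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def synthesize(text, voice_id, api_key, out_path):
    """Send the request and write the returned audio bytes to `out_path`."""
    request = build_tts_request(text, voice_id, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        audio = response.read()
    with open(out_path, "wb") as f:
        f.write(audio)
    return out_path
```

Keeping request construction separate from sending makes the integration easy to test without network access and easy to adapt when a provider’s field names differ.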

- Legal and Ethical Considerations

As with any innovation involving personal data and identities, it’s crucial to comply with local regulations regarding data privacy and voice synthesis. Ensure that your use of the cloned voice is lawful and ethical, particularly when using it in public or commercial domains.

Following these steps allows you to effectively clone your voice, creating a digital replica that can be utilized in various creative and functional applications, from personalized digital assistants to content creation tools, pushing the boundaries of personalized human-AI interaction.

Exploring Applications of AI Voice Cloning

Advancements in AI voice cloning technology have opened up a myriad of practical applications across a diverse range of sectors, demonstrating both the innovation and potential ethical complexities associated with this technology.

In the field of personal digital assistants, voice cloning allows for a highly personalized interaction experience. Imagine a virtual assistant, like Alexa or Google Assistant, echoing your voice or that of a loved one. This customization can enhance user engagement through familiarity and personalization, offering an increased comfort level and more natural interaction.

In healthcare, voice cloning shows promise for communication tools for people who have lost the ability to speak due to conditions such as ALS or throat cancer. By recording a patient’s voice before they lose it (a practice known as voice banking) or by using a small sample of earlier recordings, AI can recreate their speech patterns, giving them a synthetic voice that resembles their own. This application can help people maintain a sense of identity and may support their mental well-being.

The entertainment industry has been quick to use AI voice cloning for localized content. Animation and video games can now be dubbed in multiple languages using the original actors’ cloned voices, preserving the emotion and intent of the original performance without requiring the actors to record the same lines in each language. This cuts production costs and helps keep character portrayal consistent across markets. Filmmakers can also use voice cloning to recreate a deceased actor’s voice convincingly, allowing projects to be completed without deviating from the original artistic vision.

Broadcasting and media have also benefited from these advances. News organizations can use cloned voices to deliver news automatically, in near real time and across multiple languages and regions. This lets media companies expand their reach to global audiences, providing access to important information without the lag typically associated with translation and localization.

In customer service, AI-generated voices offer an innovative answer to scalability challenges. Businesses can create virtual agents whose synthesized voices reflect their brand’s persona, giving customer interactions a consistent voice. This also supports 24/7 customer service, which can improve client satisfaction and loyalty.

Education is another sector ripe with AI voice cloning opportunities. By developing educational tools that feature cloned voices, learners can engage more effectively with material tailored to their learning style and preferences, such as hearing content narrated in a familiar or preferred voice. Moreover, educators can use this technology to create interactive and compelling lectures without needing to be present, thus freeing them to focus on direct student engagement.

Despite these innovative uses, voice cloning technology comes with challenges and ethical considerations. The potential for misuse, such as replicating someone’s voice without authorization for fraudulent purposes, calls for strict regulations and guidelines to ensure responsible use. Mechanisms such as digital watermarking and robust verification processes are being explored to establish whether audio is synthetic and to protect personal identity.

As these applications illustrate, AI voice cloning possesses a transformative capability that spans across industries, providing new tools for expression, accessibility, and interaction. However, as with any powerful technology, its beneficial applications must be carefully balanced with ethical considerations to safeguard personal identities and respect privacy.

Ethical Considerations and Potential Risks of Voice Cloning

AI voice cloning raises several ethical considerations and potential risks that stakeholders must carefully manage to ensure responsible use of the technology.

First, there is the concern of consent and privacy. Because voice cloning depends on recordings of an individual’s voice, it requires that person’s explicit consent. Creating a synthetic replica of someone’s voice without consent can be an invasion of privacy and a violation of personal rights, and the risk increases when voice data is misused for fraudulent activities such as impersonation.

Fraudulent and malicious use poses a significant threat. Synthetic voices can be used to impersonate individuals over the phone or in digital communications, potentially leading to identity theft or financial fraud. Imagine receiving a call that sounds convincingly like a family member asking for financial details or urgent money; such misuse can have severe personal and financial consequences.

From a legal perspective, the absence of comprehensive laws regulating voice cloning makes it harder to act against misuse. Although existing areas of law, such as intellectual property and, in some jurisdictions, right-of-publicity protections, cover some aspects of voice cloning, many legal systems have yet to catch up with technological advances, leaving individuals vulnerable. Legal frameworks need to evolve to close these gaps and protect both individuals and organizations against unauthorized voice replication.

Security measures like digital watermarking are being developed to help mitigate these risks. Watermarking embeds a hard-to-perceive, machine-detectable signal in synthetic audio so that it can later be distinguished from authentic recordings. This could prove pivotal in legal disputes and fraud detection by offering a way to check whether a voice recording is synthetic.
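The basic idea behind one classic approach, spread-spectrum watermarking, fits in a few lines: add a faint pseudo-random pattern derived from a secret key, then detect it later by correlating the audio with the same pattern. This toy sketch uses a quiet sine tone as a stand-in for speech, and its strength and 0.5 decision threshold are illustrative assumptions. Unlike production schemes, it is not perceptually shaped and would not survive compression, resampling, or editing.

```python
import math
import random


def pn_sequence(key, length):
    """Pseudo-random +1/-1 pattern that is reproducible from a secret integer key."""
    rng = random.Random(key)
    return [1.0 if rng.random() < 0.5 else -1.0 for _ in range(length)]


def embed_watermark(samples, key, strength=0.02):
    """Add the key's pattern to the audio at a low, fixed amplitude."""
    pn = pn_sequence(key, len(samples))
    return [s + strength * p for s, p in zip(samples, pn)]


def detection_score(samples, key, strength=0.02):
    """Normalized correlation with the key's pattern: near 1.0 if marked with this key, near 0.0 otherwise."""
    pn = pn_sequence(key, len(samples))
    return sum(s * p for s, p in zip(samples, pn)) / (len(samples) * strength)


rate = 48000
# One second of a quiet 220 Hz tone as a stand-in for speech.
host = [0.1 * math.sin(2 * math.pi * 220 * t / rate) for t in range(rate)]
marked = embed_watermark(host, key=1234)
print(detection_score(marked, key=1234) > 0.5, detection_score(host, key=1234) > 0.5)  # True False
```

Detection works because the pattern correlates perfectly with itself but only weakly with unrelated audio; checking with the wrong key gives a score near zero, so the key must stay secret.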

The moral implications are also profound. The technology could diminish trust in audio-based evidence, which has been a cornerstone of authenticity in both legal and personal contexts. Audio recordings can lose their credibility if it becomes commonplace to doubt their veracity, affecting everything from journalism to courtroom proceedings.

In media and entertainment, the ethical use of voice cloning is further complicated. While it opens up opportunities for content creation, it also prompts questions about ownership and the rights of actors, especially when their voices are cloned posthumously or used in unanticipated ways.

Technological firms and stakeholders must prioritize developing ethical standards that rigorously define acceptable uses. Companies need to establish stringent data protection policies and consent protocols. Ethical frameworks should be implemented to assess the potential impact and risks before deploying voice cloning technologies.

Furthermore, broader societal perceptions of identity and digital representation are evolving. Because voices convey personality and identity, unauthorized cloning could leave people feeling violated and without control over their digital personas. Consent, transparency, and control over one’s voice need to be strengthened through both technology and policy to protect personal identity.

Finally, public awareness and education play a crucial role. Informing the public about the technology’s capabilities and potential risks can build a more informed community that is better equipped to handle, question, and challenge unethical implementations of voice cloning.

Overall, the ethical considerations and risks associated with voice cloning extend beyond technical aspects, touching upon legal, social, and personal domains that require concerted efforts from individuals, businesses, and policymakers to navigate responsibly.

Future Trends in AI Voice Cloning

The landscape of AI voice cloning is on the cusp of transformative change, driven by rapid technological advances and growing demand across industries. Several trends are reshaping how voice cloning is developed and deployed, pointing to a future rich with possibilities but also with new challenges.

One of the most striking trends is the advancement of neural network architectures, particularly transformers and GPT-style (Generative Pre-trained Transformer) models that treat speech synthesis as a language-modeling problem over discrete audio tokens. These models are increasingly able to adapt voice synthesis from minimal input data, opening an era of highly personalized voice cloning. Cloning from a few seconds of audio is already possible with zero-shot systems, and improvements in data efficiency are making such cloning higher in quality and more accessible for a variety of applications.
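GPT-style speech models typically operate on discrete audio tokens produced by a neural audio codec, and codecs such as SoundStream and EnCodec use residual vector quantization (RVQ): each stage quantizes the error left over by the previous stage. The sketch below shows only that quantization step, with tiny hand-written codebooks as an assumption in place of learned ones; the resulting integer codes are the kind of tokens a language model can learn to predict.

```python
def nearest(codebook, vector):
    """Index of the codeword closest to `vector` by squared Euclidean distance."""
    return min(range(len(codebook)),
               key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vector)))


def rvq_encode(vector, codebooks):
    """Residual vector quantization: each stage quantizes what earlier stages missed."""
    residual = list(vector)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes, codebooks):
    """Reconstruct the vector by summing the chosen codeword from every stage."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Toy 2-D codebooks; in a neural codec they are learned, much larger, and applied per audio frame.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]],      # coarse stage
    [[0.0, 0.0], [0.25, 0.0], [0.0, 0.25], [-0.25, 0.0]],     # refinement stage
]
frame = [1.2, 1.05]
codes = rvq_encode(frame, codebooks)
print(codes, rvq_decode(codes, codebooks))  # [1, 1] [1.25, 1.0]
```

Adding stages refines the reconstruction, so the number of stages trades bitrate (tokens per frame) against audio quality.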

Meanwhile, expanding computational power and cloud-based processing are democratizing access to voice cloning capabilities. As cloud infrastructure becomes more robust, developers and small businesses can leverage complex voice synthesis models without needing to invest in expensive hardware. This democratization is expected to accelerate the proliferation of voice cloning applications across diverse sectors—from personalized marketing and customer service to immersive gaming experiences.

The field is also witnessing a push towards multilingual voice cloning models. These systems are designed to clone a person’s voice in multiple languages, preserving the unique vocal characteristics across different linguistic contexts. Such innovations are vital for global businesses aiming to maintain consistent brand voices, and for entertainment industries seeking seamless localization solutions without compromising emotional and performance authenticity.

In terms of adaptive user experiences, contextual voice adaptation is a burgeoning trend where cloned voices can dynamically adjust to different contexts and environments. Utilizing AI’s capability to analyze situational data, applications can tailor voice outputs to express varying emotions or tonal emphases based on user interaction patterns or environmental cues. This adaptability could revolutionize fields like education, where personalized learning modules might adjust voice features to optimize student engagement.
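Many synthesis engines accept context-dependent adjustments through SSML, the W3C Speech Synthesis Markup Language, whose `<prosody>` element sets attributes such as speaking rate and pitch. In this sketch the mapping from a context label to prosody settings is a hypothetical example of application logic; the label values themselves come from the SSML specification, but support for them varies by engine.

```python
from xml.sax.saxutils import escape

SPEAK_OPEN = '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">'

# Hypothetical application logic: which SSML prosody labels to use in each context.
PROSODY_BY_CONTEXT = {
    "calm": {"rate": "slow", "pitch": "low"},
    "excited": {"rate": "fast", "pitch": "high"},
    "neutral": {"rate": "medium", "pitch": "medium"},
}


def to_ssml(text, context="neutral"):
    """Wrap `text` in an SSML <prosody> element chosen by `context`; unknown contexts fall back to neutral."""
    prosody = PROSODY_BY_CONTEXT.get(context, PROSODY_BY_CONTEXT["neutral"])
    attrs = " ".join(f'{name}="{value}"' for name, value in prosody.items())
    # escape() turns &, < and > into entities so user text cannot break the markup.
    return f"{SPEAK_OPEN}<prosody {attrs}>{escape(text)}</prosody></speak>"


print(to_ssml("Great job & keep going!", context="excited"))
```

Keeping the context-to-prosody table as data rather than code makes it easy to tune per application, for example slowing narration for beginners in a learning module.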

Another exciting development is in synthetic media and metaverse creation. Voice cloning stands to play a fundamental role in crafting rich, interactive environments within virtual realities. As the metaverse concept gains traction, voice cloning can enable more realistic and personalized avatars, paving the way for engaging virtual interactions, whether in gaming, social networking, or virtual collaboration spaces. These integrations emphasize personalized interaction, enhancing user immersion and the overall digital experience.

On the horizon is convergence with biometric security, where future voice cloning systems may incorporate biometric authentication. Such integration could both strengthen security and personalize devices, for example by synthesizing a person’s cloned voice only after verifying that the request comes from that person, helping guard against unauthorized voice commands and impersonation attempts.
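One way such an integration could work is a verification gate, sketched here under stated assumptions: speaker embeddings come from some speaker-verification model (represented by hand-written vectors), the enrolled voiceprint is the average of several embeddings, and synthesis runs only if cosine similarity clears a threshold. The 0.75 threshold and the `fake_tts` stand-in are hypothetical; a real threshold needs calibration on real data, and because cloned voices can themselves fool voice verification, such a check should complement other authentication factors rather than replace them.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 for the same direction, 0.0 if orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def enroll(embeddings):
    """Average several embeddings of the authorized speaker into one voiceprint."""
    return [sum(column) / len(embeddings) for column in zip(*embeddings)]


def synthesize_if_verified(voiceprint, probe, text, synthesize, threshold=0.75):
    """Run `synthesize(text)` only if the requester's embedding `probe` matches the voiceprint."""
    score = cosine_similarity(voiceprint, probe)
    if score < threshold:
        raise PermissionError(f"speaker verification failed: {score:.2f} < {threshold}")
    return synthesize(text)


def fake_tts(text):
    """Hypothetical stand-in for a real synthesizer call."""
    return f"<audio for {text!r}>"


# Hypothetical 3-dimensional embeddings; real speaker embeddings have many more dimensions.
voiceprint = enroll([[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]])
print(synthesize_if_verified(voiceprint, [0.85, 0.15, 0.05], "Hello", fake_tts))  # <audio for 'Hello'>
```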

As these trends unfold, ethical considerations and legal frameworks will be crucial to ensuring the integrity and societal acceptance of voice cloning technologies. Proactive policy development, combined with regulation that still leaves room for innovation, can help ensure that the convenience and efficiency gained do not come at the expense of individuals’ rights and privacy.

The trajectory of AI voice cloning is thus marked by innovation, opportunity, and the need for vigilant stewardship. Embracing these trends responsibly will define not only the technology’s potential but also its acceptance in an increasingly digital society.