Understanding Artificial Intelligence: The Big Picture

Artificial Intelligence (AI) is often described as the grand vision of building machines that can perform tasks requiring human-like intelligence. At its core, AI is the broader concept that encompasses everything from computer algorithms that can play chess to voice assistants that understand natural language. The field of AI has evolved dramatically since its inception in the 1950s, sparking both fascination and debate about the potential for machines to mimic or even surpass human cognition.

AI seeks to solve problems that traditionally require human reasoning, learning, perception, and decision-making. Some of the earliest examples involved programming computers to solve logical puzzles or play checkers. Over time, AI systems advanced into areas such as speech recognition, robotic process automation, and even artistic creation—generating music, paintings, and stories. The scope of AI is vast, covering areas like expert systems, natural language processing (NLP), computer vision, robotics, and more.

To better understand AI, it’s helpful to think of it as a collection of technologies, methodologies, and approaches. For example, techniques like rule-based systems, which encode human expertise into explicit logic and rules, have been foundational to AI development. More recent advancements are powered by learning-based methods, where machines adapt and improve from experience without being explicitly programmed for every case.
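To make the contrast between rule-based and learning-based methods concrete, here is a minimal Python sketch. The toy data, the "number of links" feature, and the hand-picked cutoff of 3 are all assumptions made up for illustration, not taken from any real system.

```python
# Hypothetical toy data: (number of links in an email, is_spam label).
EXAMPLES = [(0, False), (1, False), (2, False), (5, True), (7, True), (9, True)]


def rule_based_is_spam(num_links: int) -> bool:
    """Rule-based: a human expert picks the cutoff by hand."""
    return num_links > 3  # hand-chosen threshold (an assumption)


def learn_threshold(examples):
    """Learning-based: pick the cutoff that makes the fewest mistakes on the data."""
    candidates = sorted({links for links, _ in examples})

    def errors(threshold):
        return sum((links > threshold) != label for links, label in examples)

    return min(candidates, key=errors)


learned = learn_threshold(EXAMPLES)


def learned_is_spam(num_links: int) -> bool:
    """Same decision shape as the rule, but the cutoff came from the data."""
    return num_links > learned
```

Both functions make the same kind of decision. The difference is where the threshold comes from: a person wrote it in the first case, and the examples determined it in the second.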

One of the most intriguing aspects of AI is its potential impact across sectors, from healthcare and finance to transportation and entertainment. AI-driven diagnostic tools are helping doctors interpret medical images with greater accuracy (Nature Medicine study), while AI-powered portfolio management is transforming the investment landscape (New York Times on AI in finance).

AI can be categorized into two main types: Narrow AI and General AI. Narrow AI refers to systems designed for specific tasks, such as facial recognition or language translation, that can match or even exceed human performance within their limited domains. General AI, which remains largely theoretical, would equal or surpass human intelligence across a wide range of activities. Most AI applications today fall under the narrow AI umbrella, as truly general intelligence remains a subject of research and philosophical debate (MIT Technology Review).

Embracing the “big picture” means understanding AI as both a technical pursuit and a social force. Its influence spans not only what tasks computers can tackle but how they reshape our world, from automating routine jobs to revolutionizing creative industries. By grasping AI’s foundational goals and current capabilities, readers are empowered to navigate more specialized terms like machine learning and deep learning—which are subsets of AI—and appreciate how each fits into this transformative field.

What is Machine Learning? Key Concepts Explained

Machine learning (ML) is a pivotal branch of artificial intelligence that empowers computers to learn from data and make decisions with minimal human intervention. Rather than being explicitly programmed with step-by-step instructions, a machine learning system identifies patterns or correlations within large datasets, and then applies that knowledge to make predictions or classifications. This shift from rule-based programming to data-driven algorithms is revolutionizing countless industries—from healthcare to finance, transportation to entertainment.

At its core, machine learning consists of a few key concepts:

- Data: The fuel for all machine learning models. The process begins by collecting, cleaning, and organizing large volumes of relevant data. For example, to create a model that detects spam emails, you’d need a substantial set of both spam and non-spam messages for analysis.

- Features: Specific variables or characteristics in the data that a model uses to make its predictions. For instance, in the spam detection example, features could include the sender’s email address, the presence of certain keywords, or the frequency of links.

- Algorithms: The mathematical engines that learn from data. Popular algorithms include decision trees, support vector machines, and neural networks. Each algorithm has unique strengths depending on the problem at hand. For a closer look at popular types of algorithms, The Elements of Statistical Learning, written by Stanford University statisticians, offers substantial insights.

- Model Training: The heart of ML, this phase involves using an algorithm to analyze training data and “learn” the mapping from input features to the desired output. The quality and representativeness of your data have a direct impact on your model’s accuracy.

- Testing and Evaluation: After training, the model is evaluated against new, unseen data to measure how well it generalizes beyond the original set. Common metrics include accuracy, precision, recall, and F1 score—a topic discussed in greater detail by Machine Learning Mastery.

- Prediction and Deployment: Once a model demonstrates reliable performance, it can be deployed to make predictions or automate decisions in real-world applications—like recommending movies on Netflix or identifying fraudulent banking transactions.
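The key concepts above can be sketched end to end for the spam example. This is a minimal illustration, assuming a tiny made-up dataset and a simple word-count (naive Bayes style) model written in plain Python. The messages, function names, and model choice are all hypothetical, and a real project would use far more data and an established library.

```python
import math
from collections import Counter

# Data: hypothetical (message, is_spam) pairs, split into training and test sets.
TRAIN = [
    ("win free prize now", True),
    ("free money click link", True),
    ("claim free prize", True),
    ("meeting agenda for monday", False),
    ("lunch with the team", False),
    ("project status report", False),
]
TEST = [
    ("free prize inside", True),
    ("click to claim money", True),
    ("monday team meeting", False),
    ("status of the report", False),
    ("free prize for monday", False),  # deliberately tricky non-spam message
]


def extract_features(message):
    """Features: here, simply the lowercase words in the message."""
    return message.lower().split()


def train(examples):
    """Model training: count how often each word appears in spam and non-spam."""
    counts = {True: Counter(), False: Counter()}
    labels = Counter()
    for message, is_spam in examples:
        counts[is_spam].update(extract_features(message))
        labels[is_spam] += 1
    vocab = set(counts[True]) | set(counts[False])
    return {"counts": counts, "labels": labels, "vocab": vocab}


def predict(model, message):
    """Prediction: compare smoothed log-probabilities of spam vs. non-spam."""
    score = math.log(model["labels"][True] / model["labels"][False])
    for word in extract_features(message):
        for label, sign in ((True, 1), (False, -1)):
            total = sum(model["counts"][label].values())
            # Add-one smoothing so an unseen word does not zero out the score.
            prob = (model["counts"][label][word] + 1) / (total + len(model["vocab"]))
            score += sign * math.log(prob)
    return score > 0


def evaluate(model, examples):
    """Testing and evaluation: score the model on messages it has not seen."""
    tp = fp = fn = tn = 0
    for message, is_spam in examples:
        guess = predict(model, message)
        if guess and is_spam:
            tp += 1
        elif guess and not is_spam:
            fp += 1
        elif not guess and is_spam:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(examples),
            "precision": precision, "recall": recall, "f1": f1}


model = train(TRAIN)
metrics = evaluate(model, TEST)
```

On this toy test set the model catches both spam messages but also flags the tricky non-spam one. That is why precision comes out lower than recall, and why it helps to report more than accuracy alone.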

One way to visualize how machine learning works in practice is to consider an application like image recognition. Engineers use a large set of labeled images—some of cats, others of dogs—so the model can learn to spot distinctive features associated with each animal. Over time and with sufficient data, the model gets remarkably good at identifying new photos, learning details that human-programmed rules might easily miss.

For those interested in the broader theoretical foundation, the Machine Learning course by Andrew Ng on Coursera is widely considered one of the best introductory resources, covering foundational concepts and real-world applications.

In essence, machine learning is about building systems that improve through experience. As technology progresses and the volume of available data grows, the significance of ML in everyday life—from voice assistants to autonomous vehicles—continues to deepen.

Deep Learning Demystified: How It Works

Deep learning takes the principles of machine learning and turbocharges them with complex structures called artificial neural networks. Loosely inspired by the human brain, these networks let computers learn patterns that would be impractical to spell out by hand. But what exactly does this process look like under the hood?

At its core, deep learning uses multiple layers of interconnected nodes or “neurons” that allow a computer to recognize patterns and relationships in vast amounts of data. The more layers a neural network has, the “deeper” it is. Each layer in the network performs specific transformations on the input data, gradually moving from raw information to complex features.

How does this work step by step?

- Input Layer: Everything begins with the input layer, which receives raw data—be it images, text, or sound. For example, in image recognition, each pixel’s value becomes input data.

- Hidden Layers: The magic happens in the hidden layers, where mathematical operations are performed. Each neuron computes a weighted sum of its incoming signals and determines how much of that information should be passed to the next layer. This step repeats layer by layer as data moves deeper into the network.

- Activation Functions: Neurons apply mathematical functions called activation functions to introduce non-linearity, allowing the network to capture more complex patterns. Popular activation functions include ReLU and sigmoid. IBM’s Deep Learning Guide provides a quick overview of these concepts.

- Output Layer: After passing through multiple hidden layers, the network produces an output, such as identifying that an image contains a dog or that an email is spam.

- Learning Process: The network learns by comparing its output to the correct answer and adjusting its internal weights to reduce the error. Backpropagation works out how much each weight contributed to that error, and an optimizer such as gradient descent then nudges the weights accordingly. After thousands, sometimes millions, of these update cycles, deep learning models can become remarkably accurate.
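The steps above can be sketched with a very small network in plain Python: two inputs, two hidden neurons with ReLU, and one sigmoid output. The starting weights, the single training example, the squared-error loss, and the learning rate are all assumptions chosen to keep the example readable. Real networks have many more neurons, start from random weights, and train on large datasets.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical starting weights; real networks initialize these randomly.
w_hidden = [[0.5, -0.3], [0.8, 0.2]]  # one row of input weights per hidden neuron
b_hidden = [0.2, -0.1]
w_out = [0.7, -0.4]
b_out = 0.0


def forward(x):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    z_hidden = [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(w_hidden, b_hidden)]
    hidden = [relu(z) for z in z_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return z_hidden, hidden, output


def train_step(x, target, learning_rate=0.5):
    """One learning cycle: forward pass, backpropagation, weight update."""
    global b_out
    z_hidden, hidden, output = forward(x)
    loss = 0.5 * (output - target) ** 2  # how far the output is from the answer

    # Backpropagation: apply the chain rule from the output back toward the input.
    delta_out = (output - target) * output * (1.0 - output)
    delta_hidden = [delta_out * w_out[j] * (1.0 if z_hidden[j] > 0 else 0.0)
                    for j in range(len(hidden))]

    # Gradient descent: nudge each weight against its gradient.
    for j in range(len(hidden)):
        w_out[j] -= learning_rate * delta_out * hidden[j]
        b_hidden[j] -= learning_rate * delta_hidden[j]
        for i in range(len(x)):
            w_hidden[j][i] -= learning_rate * delta_hidden[j] * x[i]
    b_out -= learning_rate * delta_out
    return loss


x, target = [1.0, 2.0], 1.0  # a single made-up training example
initial_output = forward(x)[2]
losses = [train_step(x, target) for _ in range(50)]
final_output = forward(x)[2]
```

Each call to `train_step` is one of the update cycles described in the list. Over repeated calls the loss shrinks and the output moves toward the target.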

For a deeper dive, MIT Press’s Deep Learning Book is considered a foundational resource in the field.

Deep learning has driven famous breakthroughs, from recognizing faces in photos to mastering the game of Go. For example, Google DeepMind’s AlphaGo, a program that defeated world champions, used deep neural networks to evaluate potential moves (Nature). Similarly, language models like GPT use multi-layered architectures to understand and generate human language (Google AI Blog).

One notable advantage of deep learning is its ability to automatically identify features from raw data, reducing the need for manual intervention. This autonomy is especially valuable in fields such as medical imaging, autonomous driving, and natural language processing.

Ultimately, deep learning advances artificial intelligence by mimicking some aspects of human learning and perception, continually pushing the boundaries of what machines can do. Ongoing research at leading institutions keeps the field one of the most exciting frontiers in technology (DeepMind Research).

How AI, Machine Learning, and Deep Learning Relate

Understanding the relationship between artificial intelligence (AI), machine learning (ML), and deep learning (DL) is crucial for demystifying the terms and grasping their practical implications. While these buzzwords are often used interchangeably, they occupy distinct positions in a hierarchical structure, each with its own set of characteristics, applications, and limitations.

AI as the Foundation

AI is the broadest concept of the three. It refers to any technique that enables machines to mimic human behavior and intelligence, from simple rule-based systems to the still-hypothetical self-aware machines of science fiction. For instance, AI applications include everything from classic chess engines that analyze moves based on pre-programmed rules to sophisticated virtual assistants that anticipate user needs. Importantly, AI is not limited to learning; rather, it encompasses methods that operate by rules and logic as well as learning from data.

Machine Learning: An AI Subset

Machine learning is a specialized branch of AI that focuses on enabling systems to learn from and make decisions based on data, rather than requiring explicit programming. ML algorithms adjust themselves as they are exposed to more information. For example, an email spam filter learns to detect unwanted messages by analyzing large volumes of previously categorized emails. Want to dive deeper? The IBM guide to machine learning provides a comprehensive overview of different ML approaches, including supervised, unsupervised, and reinforcement learning.

Deep Learning: A Specialized ML Technique

Deep learning is a further subset within machine learning, characterized by algorithms often inspired by the structure and function of the human brain—namely, artificial neural networks. These networks contain many layers (‘deep’), allowing them to model complex patterns in massive datasets. For instance, deep learning powers image recognition, enabling services like Google Photos to recognize faces, locations, and objects within photographs. Deep learning’s capacity for feature extraction and abstraction is what distinguishes it from traditional ML techniques.

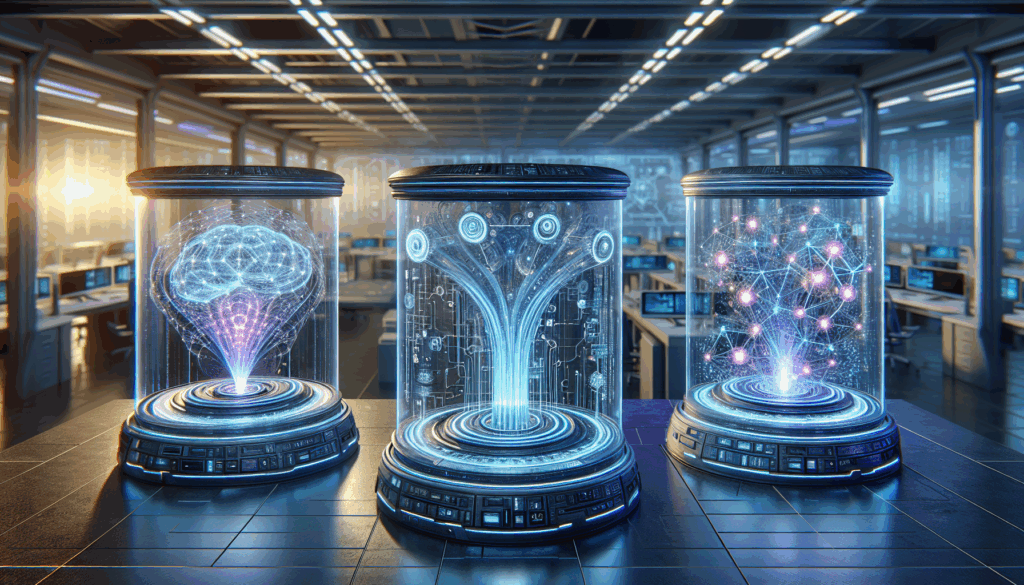

Visualizing the Hierarchy

Visualize the relationship as concentric circles: AI is the largest circle, encompassing machine learning, which, in turn, contains deep learning. Every deep learning solution is also machine learning and AI, but not all AI solutions are based on machine learning—and not all machine learning uses deep learning.
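The concentric-circle picture maps naturally onto nested sets. The short Python sketch below uses a handful of example techniques named elsewhere in this article. The groupings and the `categories` helper are illustrative assumptions, not an official taxonomy.

```python
# Hypothetical example techniques, grouped the way this article describes them.
DEEP_LEARNING = {"image-recognition neural network", "GPT-style language model"}
MACHINE_LEARNING = DEEP_LEARNING | {"decision tree", "support vector machine"}
AI = MACHINE_LEARNING | {"rule-based expert system", "hand-coded chess engine"}


def categories(technique):
    """Return every circle a technique falls inside, from outermost to innermost."""
    labels = []
    if technique in AI:
        labels.append("AI")
    if technique in MACHINE_LEARNING:
        labels.append("ML")
    if technique in DEEP_LEARNING:
        labels.append("DL")
    return labels
```

For instance, `categories("decision tree")` returns `["AI", "ML"]`, while `categories("rule-based expert system")` returns only `["AI"]`.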

This layered relationship reflects the field’s evolution. Decades ago, AI relied heavily on hand-crafted logic and explicit programming. However, as computing power and access to data increased, machine learning took center stage, automating many cognitive tasks. The rise of big data and more advanced hardware ushered in the era of deep learning, making breakthroughs in speech recognition, natural language processing, and computer vision possible. To explore how these fields interconnect and differ with real-world case studies, check out this landmark article from Nature on deep learning.

In summary, AI sets the ambition of creating intelligent machines; machine learning provides these machines with the ability to learn; and deep learning gives them the tools to perceive and act autonomously at scale. Understanding these relationships equips you to better evaluate headlines, industry claims, and the exciting innovations shaping our future.

Real-World Applications: Comparing Technologies

Understanding the practical distinctions between AI, machine learning, and deep learning becomes easier when we examine how each is put to use in the real world. While these technologies overlap, their unique traits make them better suited for different scenarios, industries, and challenges. Let’s explore how each plays a role and see how their applications stack up.

AI in Everyday Business Processes

Artificial intelligence, in its broadest sense, refers to any technique enabling machines to mimic human intelligence. This can mean everything from rule-based systems—like basic chatbots or automated scheduling assistants—to sophisticated, context-aware virtual agents. Many companies use AI for task automation in healthcare, banking, and customer service. For example, hospitals employ AI for scheduling appointments, triaging patients, and supporting diagnostic workflows. Businesses automate repetitive financial tasks, such as invoice management or fraud detection, reducing errors and freeing up human resources for more complex problem solving. Simple algorithms, rule engines, and statistical analysis are often sufficient, emphasizing the broad versatility of AI beyond the buzz around learning-based systems.

Machine Learning: Powering Data-Driven Decisions

Machine learning (ML) takes AI a step further by enabling systems to “learn” from data rather than following strictly hardcoded rules. ML algorithms are widely used in personalization engines—think Netflix recommending shows or Amazon suggesting products—as they analyze user behavior and predict preferences. Retailers use ML to predict inventory needs and optimize supply chains. Financial institutions employ machine learning for dynamic credit scoring, where models continuously update risk profiles based on new customer data. Similarly, email clients filter spam using models trained on millions of labeled messages. These applications typically require “shallow” learning—algorithms such as decision trees, support vector machines, or basic neural networks that excel at structured data tasks.

Deep Learning: Advanced Perception and Automation

Deep learning, a specialized subset of ML, excels at processing and deriving meaning from vast amounts of unstructured data, such as images, audio, and text. This is where breakthroughs like self-driving cars, language translation, and facial recognition occur. Self-driving vehicle systems, for instance, rely on deep neural networks to process continuous streams of camera images, identify objects, predict traffic scenarios, and make split-second navigation choices (see Nature’s feature on deep learning in autonomous vehicles). In healthcare, deep learning powers diagnostic tools that analyze medical scans for early signs of diseases like cancer or diabetic retinopathy (Mayo Clinic on AI in diagnosis). These models, with dozens or even hundreds of layers, require vast amounts of labelled data and significant computational resources, but their predictive accuracy in complex, sensing-dependent environments makes them invaluable.

Choosing the Right Approach in Practice

The choice between AI, machine learning, and deep learning depends on the application’s complexity, data types, and required accuracy. For handling structured routine tasks, classic AI and rule-based systems might be best. Tasks involving lots of historical data, like loan approvals or disease prediction, often call for machine learning. If you’re working with raw images, voice, or natural language—think personal voice assistants or medical imaging—deep learning’s layered approach yields better results.
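As a rough illustration, the rule of thumb above can be written as a small Python function. The parameter names and category strings are hypothetical. Real decisions also weigh accuracy requirements, cost, and interpretability, so treat this as a sketch of the reasoning rather than a selection tool.

```python
def suggest_approach(data_kind: str, has_historical_data: bool) -> str:
    """Mirror the rule of thumb from the paragraph above.

    data_kind: "structured", "image", "audio", or "text" (hypothetical labels).
    has_historical_data: whether plenty of past examples are available.
    """
    if data_kind in {"image", "audio", "text"}:
        return "deep learning"  # raw, unstructured inputs
    if has_historical_data:
        return "machine learning"  # e.g. loan approvals, disease prediction
    return "rule-based system"  # structured, routine tasks
```

For example, `suggest_approach("structured", True)` returns `"machine learning"`, matching the loan-approval case in the text.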

In summary, these technologies occupy different niches and bring unique strengths to the table. As data grows and computational capability advances, we’re likely to see even more synergy and overlap among these approaches. To dive deeper into the distinctions and real-world use cases, check out these in-depth resources from IBM and Stanford University’s AI lab.

Common Misconceptions and Myths

One of the most persistent myths in the tech landscape is that artificial intelligence (AI), machine learning (ML), and deep learning (DL) are interchangeable terms. Many assume they’re simply different names for the same technology. However, these are distinct concepts that build upon one another in significant ways.

Misconception 1: “All AI Is Machine Learning”

A common misconception is that all AI involves machine learning. In reality, AI is a broad field that encompasses anything enabling computers to simulate human intelligence. This includes rule-based expert systems, symbolic reasoning, and yes, machine learning, but not exclusively. For instance, early AI applications like chess programs and expert systems used hand-coded rules rather than algorithms that learn from data. For more clarification on what AI truly is, check out this primer by IBM.

Misconception 2: “Machine Learning and Deep Learning Mean the Same Thing”

Some believe machine learning and deep learning are synonyms. In fact, deep learning is a subset of machine learning, and a machine learning model doesn’t necessarily have to be a deep neural network. Traditional machine learning uses algorithms like decision trees and support vector machines, which don’t rely on the many-layered structure deep learning does. For example, a spam filter built on a decision tree or a naive Bayes classifier is ML but not DL, whereas image recognition in self-driving cars often leverages deep learning networks. This overview by Machine Learning Mastery shows the diversity of machine learning approaches beyond deep learning.

Misconception 3: “AI Can Think and Reason Like Humans”

Portrayals in media fuel the belief that AI possesses human-like cognition, emotion, and creativity. In truth, contemporary AI is specialized, excelling only at tasks it’s specifically designed and trained for, something referred to as “narrow AI.” Systems such as OpenAI’s GPT-4 or Google DeepMind’s AlphaGo demonstrate impressive skill within their domain but are not adaptable in the way humans are. This Nature article distinguishes between narrow and general AI, highlighting the current limitations.

Misconception 4: “If It’s Not Deep Learning, It’s Outdated”

Deep learning’s popularity has led many to dismiss older or simpler methods as obsolete. Yet, traditional machine learning often remains preferable for many tasks, especially when data is limited or interpretability is crucial. Algorithms such as logistic regression or random forests are still widely used and can outperform deep learning on structured/tabular data, where clear relationships exist between variables. For evidence, see this Harvard Data Science Review article on choosing the right algorithm for your data.
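To show how compact and interpretable a traditional method can be, here is a minimal logistic regression trained with gradient descent in plain Python. The six loan-style rows, the assumption that the features are already standardized, and the hyperparameters are all invented for illustration. In practice you would normally reach for an established library rather than hand-rolling the training loop.

```python
import math

# Hypothetical, already-standardized tabular data: (income_z, debt_ratio_z).
ROWS = [(-1.5, 1.0), (-1.0, 0.5), (-0.5, 0.8), (0.5, -0.6), (1.0, -1.0), (1.5, -0.7)]
LABELS = [0, 0, 0, 1, 1, 1]  # 1 = loan approved (made-up labels)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(rows, labels, learning_rate=0.5, epochs=500):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi / n
            grad_b += error / n
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias


def predict(weights, bias, x):
    """Return 1 when the modeled probability is at least 0.5, else 0."""
    return int(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5)


weights, bias = fit_logistic_regression(ROWS, LABELS)
predictions = [predict(weights, bias, x) for x in ROWS]
```

The fitted model is just two weights and a bias. A positive weight on income and a negative weight on debt ratio can be read directly, which is the kind of interpretability the paragraph above refers to.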

Misconception 5: “More Data Means Better AI”

While data is critical for building effective ML and DL models, more isn’t always better. Low-quality, biased, or irrelevant data can lead to inaccurate or unfair outcomes. Further, business problems often call for data quality and domain-specific understanding more than sheer quantity. Leading AI organizations like Google urge teams to prioritize data cleaning and quality over simply gathering bigger datasets.

Understanding these distinctions is crucial for making informed decisions about adopting AI, ML, or DL in your business or research. Being aware of the true capabilities—and limitations—of these technologies helps avoid unrealistic expectations and enables you to leverage them effectively and ethically.