The Origins of Artificial Neurons: A Brief History

The journey of artificial neurons can be traced back to the mid-20th century when scientists began to wonder how the intricate workings of the human brain could be mimicked by machines. This curiosity led to the creation of the first mathematical models attempting to simulate the basic units of neural processing—the neuron.

It all began in 1943 when neurophysiologist Warren McCulloch and mathematician Walter Pitts published a seminal paper proposing a simple neural network model. Their work, “A Logical Calculus of Ideas Immanent in Nervous Activity,” described how groups of interconnected artificial neurons could, in principle, compute any conceivable logical function. This model laid the theoretical groundwork for the development of artificial neural networks, demonstrating that simple units, each performing basic operations, could collectively solve complex problems.

As interest in artificial intelligence grew, scientists sought to turn theory into practice. In 1958, Frank Rosenblatt introduced the “perceptron,” one of the first artificial neural networks that could learn from experience. The perceptron consisted of a single layer of computational nodes, or artificial neurons, that adjusted their weights based on input data, learning to distinguish between different classes such as shapes or letters. This marked a critical leap forward, bringing the dream of machine learning closer to reality.

However, early models faced significant limitations. Marvin Minsky and Seymour Papert’s influential 1969 book, “Perceptrons,” highlighted the serious shortcomings of single-layer networks, notably their inability to compute the XOR (exclusive OR) logical function. This critique contributed to a sharp decline in interest and funding for neural network research, but it also pointed researchers toward more sophisticated architectures. The popularization of the backpropagation algorithm for training multi-layer networks in the 1980s finally overcame these obstacles, heralding a new era in which artificial neurons could be assembled into powerful, deep networks.

Each step in this evolution was driven by a fundamental question: can we replicate human learning and decision-making in a silicon-based system? The pursuit of answers has not only deepened our understanding of both artificial intelligence and the human brain but continues to inspire groundbreaking research today. The artificial neuron, simple yet remarkably powerful, remains at the heart of this quest, bridging the mysteries of biology and the possibilities of technology.

What Is an Artificial Neuron?

An artificial neuron is a fundamental building block of artificial neural networks, which are the backbone of modern artificial intelligence systems. Inspired by the structure and functioning of the biological neurons in the human brain, artificial neurons aim to replicate the process by which the brain receives, processes, and transmits information. But what exactly constitutes an artificial neuron, and how does it function?

At its most basic, an artificial neuron receives one or more input values. These inputs can be anything from raw pixel intensities in an image to numerical features like age or income in a dataset. Each input is multiplied by a certain weight — a number that signifies its importance — and the results are summed up. Additionally, a bias term is added to this sum, allowing the artificial neuron to shift its output independently of the specific input values. This weighted sum is then passed through an activation function, which determines the neuron’s output value. Popular activation functions include the sigmoid, tanh, and the widely used rectified linear unit (ReLU) function.

This entire process can be understood as a mathematical function. Given a set of inputs and a combination of weights and bias, the artificial neuron computes an output:

output = ActivationFunction(weight1 * input1 + weight2 * input2 + ... + bias)

This simplicity is deceptive. By connecting many artificial neurons into layers and networks, you build powerful models capable of recognizing images, translating languages, or even generating new content. Training a neural network means adjusting the weights and biases across potentially millions of artificial neurons so that the model’s accuracy gradually improves; this ability to learn from data is what makes neural networks a form of machine learning.
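
The formula above translates almost line for line into code. The following is a minimal Python sketch rather than any library’s API: `neuron`, `sigmoid`, and `relu` are illustrative helper names, and the weights and bias are arbitrary numbers chosen for the example (in a real network they would be learned during training).

```python
import math

def sigmoid(z: float) -> float:
    """Squash z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z: float) -> float:
    """Rectified linear unit: keep positive values, clip negatives to 0."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation=sigmoid):
    """One artificial neuron: activation(w1*x1 + w2*x2 + ... + bias)."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # weighted sum plus bias
    return activation(z)

# Made-up example values: two inputs, two weights, one bias.
x = [0.5, -1.0]
w = [0.8, 0.2]
b = 0.1
# Weighted sum: 0.8*0.5 + 0.2*(-1.0) + 0.1 = 0.3 (up to floating-point rounding).
print(neuron(x, w, b, activation=relu))
print(neuron(x, w, b, activation=sigmoid))
print(neuron(x, w, b, activation=math.tanh))
```

Swapping the `activation` argument is the only change needed to move between sigmoid, tanh, and ReLU, which is why the activation is usually treated as a separate, pluggable choice.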

To visualize how an artificial neuron works, consider the analogy of a group decision. Imagine several friends voting on where to eat. Each friend has a different influence (weight), and combining their inputs (votes) yields a final decision (output). The bias can represent a fixed preference in the group, no matter what the votes are. Through numerous examples and feedback, the group might learn to make better collective decisions, similar to how artificial neurons refine their predictions.

The concept of the artificial neuron was first formalized by Warren McCulloch and Walter Pitts in 1943 (Stanford Encyclopedia of Philosophy), which set the stage for decades of advancement in the field. Modern implementations often layer millions of these units together, resulting in the sophisticated neural networks now used for image recognition, autonomous vehicles, and even creative tools.

For a deep technical dive into artificial neurons and their mathematical foundations, the book Neural Networks and Deep Learning provides a beginner-friendly introduction with practical examples and exercises.

How Do Artificial Neurons Mimic the Brain?

Artificial neurons, the fundamental units of artificial neural networks, are inspired by the structure and function of biological neurons in the human brain. But how closely do these digital creations actually mimic the real thing?

At the core, biological neurons receive signals from neighboring cells through dendrites, process this input in the cell body, and generate an output if a threshold is met, transmitting the signal via the axon to other neurons. Artificial neurons emulate this by taking numeric inputs, applying mathematical transformations, and producing an output—mimicking the decision-making capabilities found in nature.

Steps in Mimicking Brain Neurons:

- Input Gathering: Each artificial neuron receives inputs, similar to how a biological neuron gets signals from other neurons. In a neural network, these inputs are often numerical values—pixel values in an image, for instance.

- Weighted Summation: Every input to an artificial neuron has an associated weight, signifying its importance. This is akin to how some synaptic connections in the brain are stronger than others due to learning and adaptation. The neuron sums these weighted inputs. This mechanism is discussed in depth in educational resources such as Stanford University’s introduction to neural networks (Stanford CS221 Handout).

- Activation Function: Once the weighted sum is determined, the artificial neuron processes this value through an activation function. This non-linear function decides whether the neuron should “fire” and to what extent, echoing the threshold activation of biological neurons. Common activation functions include sigmoid, tanh, and the rectified linear unit (ReLU). For more, see the activation function guide by Machine Learning Mastery.

- Output Transmission: The final output can be passed as an input to subsequent neurons, constructing complex, layered architectures just like neural networks in the brain form intricate webs of connections.
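
The four steps in the list above can be chained in a few lines of Python. This is a sketch under simple assumptions: ReLU is used as the activation, and the layer sizes, weights, and biases are hypothetical values made up for illustration rather than learned from data.

```python
def neuron(inputs, weights, bias):
    """Weighted summation plus bias, followed by a ReLU activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, z)

def layer(inputs, weight_rows, biases):
    """Run every neuron in a layer on the same inputs; one output per neuron."""
    return [neuron(inputs, row, b) for row, b in zip(weight_rows, biases)]

# Hypothetical 3-input network: a hidden layer of 2 neurons feeding 1 output neuron.
hidden_w = [[0.2, -0.5, 1.0],
            [0.7, 0.1, -0.3]]
hidden_b = [0.0, 0.1]
output_w = [[1.5, -2.0]]
output_b = [0.05]

x = [1.0, 0.5, 2.0]                # input gathering
h = layer(x, hidden_w, hidden_b)   # weighted summation + activation in the hidden layer
y = layer(h, output_w, output_b)   # output transmission: h becomes the next layer's input
print("hidden:", h, "output:", y)
```

Stacking more calls to `layer` is all it takes to make the network deeper; each layer only needs the previous layer’s outputs.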

These digital analogs of biological neurons shine when layered in large numbers, forming networks with the capability to learn from data, recognize speech, translate text, and even drive vehicles. As researchers continue to refine artificial neurons, they draw on principles from neuroscience, machine learning, and cognitive science, ensuring that our digital minds remain closely inspired by their biological counterparts. For readers interested in a deep dive into the comparison of biological and artificial neurons, this research article in Nature provides detailed scientific insights.

The fascinating journey from biological inspiration to artificial implementation is ongoing, with each step driven by advances in both technology and our evolving understanding of how the brain works. These parallels not only highlight creative engineering but also spark further exploration into what it truly means for machines to “think.”

The Pioneering Question: Can Machines Think Like Us?

It all began with a simple yet profound question: Can machines think like us? This query, posed by researchers and thinkers in the early days of computing, laid the foundation for what would later become the field of artificial intelligence. The concept of machines exhibiting human-like intelligence wasn’t just a curiosity; it was a pursuit that challenged our understanding of both machines and the very essence of human cognition.

In 1950, mathematician and logician Alan Turing famously explored this question. His paper “Computing Machinery and Intelligence” opens with the question “Can machines think?” and proposes what is now known as the Turing Test (Turing himself called it the “imitation game”). Turing’s approach was pragmatic: rather than getting tangled in the semantics of ‘thinking’, he proposed an experiment in which a machine’s ability to exhibit intelligent behavior was measured by its capacity to imitate human responses. If a human interrogator could not reliably distinguish between the machine and a human, the machine could be said to “think.”

The same question also led researchers to probe the structure of the brain itself. How do neurons in our brains allow us to learn, reason, and adapt? Even before Turing’s paper, pioneers like Warren McCulloch and Walter Pitts had taken inspiration from biology to recreate this process in machines: their 1943 paper introduced a mathematical model of the artificial neuron, laying the groundwork for artificial neural networks. By capturing the essence of how biological neurons process signals, they set up the next critical question: if we build networks of these artificial neurons, can machines learn as we do?

Consider this analogy: imagine teaching a child to recognize the difference between a cat and a dog. The child observes, makes mistakes, gets corrected, and refines their understanding over time. Likewise, artificial neurons are constructed to process inputs, generate outputs, and, crucially, learn from feedback—a process made possible by algorithms like backpropagation that adjust network parameters based on errors.

Thus, the quest to make machines think like us became a multidisciplinary endeavor, connecting biology, mathematics, engineering, and philosophy. Each advancement sparked new questions: Are we simply simulating intelligence, or creating it? Can a machine ever become self-aware? These questions continue to drive innovation and debate in modern AI research, as explored in resources like the Association for the Advancement of Artificial Intelligence.

In summary, the journey began with a single, audacious question. The legacy of that curiosity is evident in every smart device, language model, and autonomous system we encounter today—an ongoing attempt to teach machines not just to act, but to think as we do.

Key Breakthroughs in Neural Network Design

The journey toward modern artificial neurons began with the work of neurophysiologist Warren McCulloch and logician Walter Pitts in 1943. Their model, a simple binary threshold logic unit, laid the foundation for later developments in neural networks. However, it took decades of iterative breakthroughs to reach the powerful architectures we know today.
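
A binary threshold unit is simple enough to write in a few lines. The sketch below follows the common textbook reading of the 1943 model (binary inputs, a fixed threshold, and inhibitory inputs that veto firing outright); the gate function names are illustrative, and nothing here is learned, since every threshold is set by hand.

```python
def mp_unit(excitatory, inhibitory, threshold):
    """Fire (return 1) if no inhibitory input is active and enough excitatory inputs are on."""
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0

def and_gate(a, b):
    return mp_unit([a, b], [], threshold=2)   # both inputs must be on

def or_gate(a, b):
    return mp_unit([a, b], [], threshold=1)   # at least one input must be on

def not_gate(a):
    # Threshold 0: the unit fires by default unless its inhibitory input is on.
    return mp_unit([], [a], threshold=0)

def nand_gate(a, b):
    # Units can be wired into networks: the output of one feeds the next.
    return not_gate(and_gate(a, b))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b), "NAND:", nand_gate(a, b))
```

Because NAND alone is enough to build any Boolean function, small networks of such units illustrate why the model could, in principle, compute any logical function.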

One of the first major leaps was the perceptron algorithm, introduced by Frank Rosenblatt in 1958. The perceptron was inspired by the idea of mimicking the brain’s process of learning through weighted connections. Despite its simplicity, this concept radically shifted how researchers approached pattern recognition and machine learning. A perceptron could learn to solve simple classification problems, but it was limited in scope: as Marvin Minsky and Seymour Papert highlighted in their book “Perceptrons,” a single-layer perceptron cannot solve problems that are not linearly separable. That limitation motivated further innovation.

The rise of multi-layered networks addressed the shortcomings of single-layer perceptrons. However, training such networks remained impractical until the backpropagation algorithm was popularized in 1986 by David Rumelhart, Geoffrey Hinton, and Ronald Williams, although earlier formulations existed, notably in Paul Werbos’s 1974 doctoral thesis. Backpropagation provided an efficient method to adjust the weights in a multi-layered network by propagating the error backward from the output layer toward the input layer. This breakthrough made it possible to train networks that capture complex, non-linear patterns in data, and it remains the core training method behind contemporary deep learning systems.
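
To make “propagating the error backward” concrete, here is a minimal pure-Python sketch that trains a tiny network on XOR, a function no single-layer perceptron can represent. The architecture (eight tanh hidden units and one sigmoid output), the cross-entropy loss, the learning rate, the random seed, and the epoch count are all assumptions chosen for this example, not details of the 1986 work.

```python
import math
import random

def train_xor(hidden=8, lr=0.5, epochs=5000, seed=0):
    """Train a 2-input, 1-output network on XOR with backpropagation (full-batch gradient descent)."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    # Assumed architecture: tanh hidden units, one sigmoid output unit.
    w1 = [[rng.gauss(0, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.gauss(0, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
        y = 1.0 / (1.0 + math.exp(-(sum(w * hi for w, hi in zip(w2, h)) + b2)))
        return h, y

    for _ in range(epochs):
        # Accumulate gradients over all four examples before updating.
        gw1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        for x, target in data:
            h, y = forward(x)
            # Sigmoid output with cross-entropy loss: dLoss/d(pre-activation) = y - target.
            delta_out = y - target
            gb2 += delta_out
            for j in range(hidden):
                gw2[j] += delta_out * h[j]
                # Send the error backward through w2 and the tanh derivative (1 - h^2).
                delta_h = delta_out * w2[j] * (1.0 - h[j] ** 2)
                gb1[j] += delta_h
                for i in range(2):
                    gw1[j][i] += delta_h * x[i]
        # Gradient-descent step using the average gradient.
        n = len(data)
        b2 -= lr * gb2 / n
        for j in range(hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            for i in range(2):
                w1[j][i] -= lr * gw1[j][i] / n

    return [forward(x)[1] for x, _ in data]

predictions = train_xor()
# After training, each value should be close to the XOR targets 0, 1, 1, 0.
print([round(p, 3) for p in predictions])
```

Real frameworks compute these gradients automatically, but the structure is the same: a forward pass, an output error, and the chain rule applied layer by layer in reverse.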

Throughout the 1990s and 2000s, neural network design advanced further through specialized architectures tailored to particular problems. Convolutional Neural Networks (CNNs), pioneered by Yann LeCun and colleagues, enabled computers to “see” by learning hierarchical features from image data, laying the groundwork for computer vision applications such as facial recognition and autonomous vehicles. Recurrent Neural Networks (RNNs), another crucial advancement, equipped models with a form of memory, making them invaluable for sequential data like language, speech, and time series.
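
The “memory” of a recurrent network is a hidden state that is fed back in at every time step. The sketch below shows one common formulation, a simple Elman-style cell with a tanh activation; the function names and weights are illustrative assumptions, and practical RNNs usually use gated variants such as LSTMs and learn their weights with backpropagation through time.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a simple recurrent cell: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(wx * xi for wx, xi in zip(row_x, x))          # contribution of the current input
            + sum(wh * hi for wh, hi in zip(row_h, h_prev))   # contribution of the previous state
            + bias
        )
        for row_x, row_h, bias in zip(w_x, w_h, b)
    ]

def run_rnn(sequence, w_x, w_h, b):
    """Feed a sequence one element at a time; the hidden state carries information forward."""
    h = [0.0] * len(b)
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Hypothetical weights: 1 input feature, 2 hidden units.
w_x = [[0.5], [-0.3]]
w_h = [[0.9, 0.0], [0.2, 0.8]]
b = [0.0, 0.0]

# The same input (1.0) yields a different hidden state at each step,
# because each state also depends on what came before it.
states = run_rnn([[1.0], [1.0], [1.0]], w_x, w_h, b)
for t, h in enumerate(states):
    print(t, [round(v, 3) for v in h])
```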

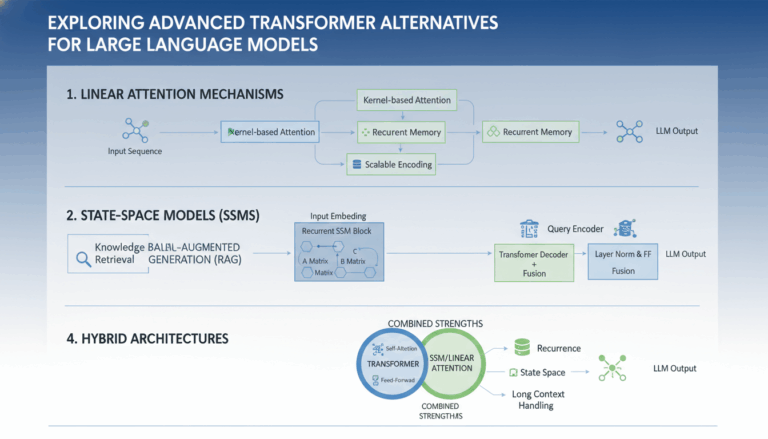

Finally, transformer architectures have revolutionized natural language processing and generative AI. First described by Vaswani et al. in their influential 2017 paper, “Attention Is All You Need”, transformers rely on self-attention mechanisms, which let the model weigh the importance of every word in a sentence relative to every other word, however far apart they are. This leap powered the creation of models such as BERT, GPT, and their successors, leading to systems that can summarize, translate, converse, and even compose creative content with astonishing fluency.
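
At its core, self-attention computes softmax(QKᵀ / √d_k)·V: each position scores every other position, turns the scores into weights, and takes a weighted average. The pure-Python sketch below shows that scaled dot-product step on a toy input. As a simplifying assumption, the learned query, key, and value projections of a real transformer are omitted (the input vectors are used directly), and so are multiple heads. Attention on its own also ignores word order; transformers add positional encodings to supply it.

```python
import math

def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: each position takes a weighted average of all values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how strongly this position attends to each position
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy "sentence" of three tokens, each a made-up 2-d vector. A real transformer would
# compute queries, keys, and values with learned matrices; here they are the inputs themselves.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```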

Each breakthrough—from the earliest perceptrons to the latest transformers—has brought artificial neural networks closer to echoing the complexities of the human brain. These advances not only improved performance but also unlocked entirely new possibilities for artificial intelligence. For a comprehensive historical overview, the DeepAI History of Neural Networks offers a deep dive into these milestone developments.

From Perceptrons to Deep Learning: An Evolution

The journey from the earliest artificial neurons to the rise of deep learning is one of continual evolution, driven by both technological limitations and the growing desire to model human cognition. In the late 1950s, Frank Rosenblatt introduced the Perceptron, widely recognized as one of the first implementations of an artificial neural network. The perceptron was inspired by biological neurons and designed to process information in a similar way—taking several binary inputs, combining them, and returning a single binary output. With a simple rule, it could learn linear decision boundaries, a breakthrough for its time.
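
The “simple rule” is the perceptron learning rule: whenever the unit misclassifies an example, nudge its weights and bias toward the correct answer by adding or subtracting that example’s inputs. A minimal Python sketch, assuming 0/1 targets, a learning rate of 1, and weights that start at zero; the function names are illustrative.

```python
def predict(weights, bias, x):
    """Binary output: 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(data, lr=1.0, max_epochs=100):
    """Perceptron learning rule; returns (weights, bias, converged)."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # a full pass with no mistakes: the boundary separates the classes
            return weights, bias, True
    return weights, bias, False

# Logical AND is linearly separable, so the rule is guaranteed to converge.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b, converged = train_perceptron(and_data)
print(converged, [predict(w, b, x) for x, _ in and_data])
```

The `converged` flag matters: on data that no straight line can separate, the rule keeps cycling and never reaches an error-free pass, so the sketch simply gives up after `max_epochs`.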

However, researchers soon uncovered the perceptron’s steep limitations. As detailed in the landmark 1969 book “Perceptrons” by Marvin Minsky and Seymour Papert, single-layer perceptrons cannot solve problems that are not linearly separable. The classic example is the XOR (exclusive OR) logical function, which outputs 1 only when exactly one of its two inputs is 1: a seemingly simple task that no single-layer perceptron can represent. This limitation contributed to a period of reduced interest and funding for neural network research through the 1970s, part of what is often called the first “AI winter.” Yet this was only the beginning, not the end.
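
Why a single threshold unit fails on XOR takes one line of algebra: outputs of 1 for (0,1) and (1,0) require w₂ + b > 0 and w₁ + b > 0, so w₁ + w₂ + 2b > 0; outputs of 0 for (0,0) and (1,1) require b ≤ 0 and w₁ + w₂ + b ≤ 0, so w₁ + w₂ + 2b ≤ 0, a contradiction. Two layers of the same units escape this. In the sketch below the weights are set by hand (one of many valid choices, assumed for illustration rather than learned); finding such weights automatically is what backpropagation later made possible.

```python
def step(z):
    """Threshold activation: fire (1) when the weighted sum is positive."""
    return 1 if z > 0 else 0

def unit(x, weights, bias):
    """A single threshold unit."""
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)

def xor_two_layer(a, b):
    """XOR = (a OR b) AND NOT (a AND b), built from three threshold units in two layers."""
    h_or = unit([a, b], [1, 1], -0.5)          # hidden unit: fires if at least one input is on
    h_nand = unit([a, b], [-1, -1], 1.5)       # hidden unit: fires unless both inputs are on
    return unit([h_or, h_nand], [1, 1], -1.5)  # output unit: fires only if both hidden units fire

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_two_layer(a, b))
```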

The tide turned in the 1980s with the rebirth of neural networks through the backpropagation algorithm, popularized by Geoffrey Hinton and others. Backpropagation enabled the training of multi-layer networks—often called Multi-Layer Perceptrons (MLPs)—to learn increasingly complex patterns. Now, neural networks could model intricate, non-linear relationships between input and output, paving the way for advances in computer vision, language modeling, and beyond.

Decades later, this line of work matured into deep learning, which involves stacking many layers of artificial neurons (sometimes hundreds or even thousands) to form deep neural networks. Each layer learns progressively more abstract features: in an image classifier, the early layers might detect simple edges, while deeper layers recognize textures, shapes, or even entire objects. These architectures, fueled by greater computational power (most notably GPUs), open-source frameworks, and vast datasets, have led to state-of-the-art results in image recognition, language translation, and game playing.
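
To make “early layers detect simple edges” concrete, here is the core operation of a convolutional layer applied to a tiny made-up image. The kernel values are hand-picked for illustration (a trained network learns its own), and the sketch omits channels, padding, strides other than 1, and the activation function that real layers add.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and record the weighted sum at each position.

    Like most deep-learning libraries, this computes cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A tiny 4x5 "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hand-written vertical-edge detector: responds where brightness increases left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]
for row in conv2d(image, kernel):
    print(row)
```

The output is nonzero only in the column where dark meets bright, so the feature map marks the edge; deeper layers apply the same operation to such maps to build up more abstract features.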

Today, deep learning plays a central role in technologies like self-driving cars, speech assistants, and even medical diagnosis, illustrating the incredible capability that began with the humble perceptron. This evolution has not only advanced AI but also posed important questions about learning, generalization, and the very nature of intelligence—a journey chronicled by experts and skeptics alike.

The trajectory from perceptrons to deep learning is a testament to the power of persistent inquiry, creative problem-solving, and the enduring allure of mimicking human thought. Each step in this evolution has contributed a crucial insight, leading to the remarkable capabilities of today’s artificial intelligence systems.

Why Artificial Neurons Matter in Modern AI

Artificial neurons serve as the fundamental building blocks of neural networks, powering most of today’s groundbreaking advancements in artificial intelligence (AI). To understand their significance, it’s important to grasp both their design inspiration and the pivotal role they play in modern technology.

At their core, artificial neurons are mathematical functions inspired by biological neurons found in the human brain. Just as neurons transmit signals via electrical impulses, artificial neurons process input data and determine how and whether to “fire” a response. This functional similarity has paved the way for algorithms that can learn and adapt, features that are essential to AI.

Modern AI relies on large-scale artificial neural networks, often referred to as deep learning models, to perform complex tasks including image recognition, language processing, and even medical diagnosis. Artificial neurons, arranged in layers, receive input, scale it by “weights” (numeric parameters learned during training), and pass their outputs to subsequent layers. This layered approach enables the extraction of complex features and patterns from raw data, a capability that’s central to deep learning.

The significance of artificial neurons can be further illustrated through real-world examples:

- Healthcare: AI models built on artificial neurons have demonstrated remarkable accuracy in detecting diseases from medical scans, as detailed in this Nature article. Their effectiveness is rooted in the ability of artificial neurons to identify subtle patterns and anomalies in complex datasets that might elude human practitioners.

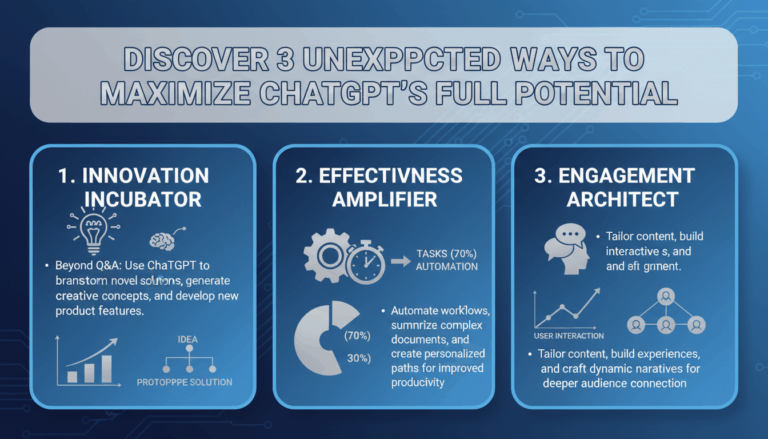

- Natural Language Processing: Tools like chatbots, translation services, and virtual assistants use artificial neurons to learn the intricacies of human language. For example, Google’s BERT model uses networks with hundreds of millions of learned parameters (about 110 million in BERT-Base and 340 million in BERT-Large) to interpret each word in the context of the words around it, enabling more natural and effective human-machine communication.

- Autonomous Vehicles: Self-driving cars analyze thousands of data points in real time using artificial neural networks. Every sensor input — from cameras to lidar — is processed by artificial neurons, allowing vehicles to detect obstacles, predict movement, and make split-second decisions. Read more on SAE International.

The versatility and scalability of artificial neurons have led to significant advancements in AI, creating systems that can outperform humans on specific, well-defined tasks such as playing the board game Go. Their influence extends beyond just technology, impacting society, business, and even our understanding of intelligence itself. To dive deeper, review the comprehensive resources offered by The Alan Turing Institute on AI research and development.

Challenges in Modeling Brain-Like Intelligence

Creating artificial systems that can match the intelligence and versatility of the human brain presents a daunting set of challenges. One of the most profound difficulties lies in accurately modeling the complexity and subtlety of biological neurons — the foundational units of the human nervous system. While artificial neurons used in deep learning are inspired by their biological counterparts, the resemblance is largely superficial. Let’s examine some of the critical hurdles researchers face in this intricate endeavor.

1. Biological Complexity vs. Simplified Abstractions

Biological neurons are remarkably complex, each possessing thousands of synaptic connections, intricate chemical signaling mechanisms, and the ability to adapt and reorganize via neuroplasticity. In contrast, artificial neurons typically perform a basic weighted summation and apply an activation function. This radical simplification omits crucial dynamics such as neurotransmitter variability, feedback loops, and temporal dependencies. Addressing these gaps would require breakthroughs in computational neuroscience and hardware design, as highlighted in cutting-edge neuroscience research.

2. Scalability and Energy Efficiency

The human brain is both massively parallel and astonishingly energy efficient, operating on approximately 20 watts of power. Modern neural networks, by contrast, often require power-hungry GPUs and cloud infrastructure while remaining far less versatile than the brain. Efforts to bridge this divide, such as neuromorphic engineering (hardware inspired by brain architecture), are ongoing. Large research initiatives like the Human Brain Project have explored this intersection of neuroscience, computing, and energy efficiency for next-generation technologies.

3. Learning and Adaptation

The brain’s capacity to learn continuously and generalize from sparse data is still largely mysterious and remains an open challenge for artificial intelligence research. Many machine learning models require extensive labeled data and are notoriously brittle when faced with unfamiliar scenarios. Humans, meanwhile, can learn complex concepts from just a handful of examples, adapt to novel situations, and forget irrelevant information gracefully. Research from groups such as the Stanford AI Lab is shedding light on how lifelong learning and transfer learning may help bridge this gap, but significant hurdles remain.

4. Interpretability and Trust

Humans can at least describe the reasoning behind many of their decisions, even if those accounts are incomplete; artificial neural networks, by contrast, are often described as “black boxes.” Understanding how these systems make decisions, especially in critical areas like healthcare or autonomous vehicles, is an area of intense investigation. Techniques such as model interpretability and explainable AI are evolving, as discussed in depth by the Nature Machine Intelligence journal, but true transparency is still a work in progress.

Every step toward replicating brain-like intelligence reveals additional subtleties and depths within the original question that sparked the field: How does intelligence emerge from networks of simple processing units? By exploring these challenges, researchers not only edge closer to engineering remarkable machines but also deepen our understanding of the most complex system known to humankind — the brain itself.

Real-World Applications of Artificial Neurons

Artificial neurons, the fundamental units of artificial neural networks, are reshaping industries and solving real-world problems with surprising effectiveness. Their ability to process vast amounts of data, recognize patterns, and make predictions is fueling advancements in numerous sectors. Here’s a closer look at some of the most transformative applications:

Healthcare: Revolutionizing Diagnostics and Personalized Medicine

Artificial neurons are at the heart of neural networks driving significant change in healthcare. Medical imaging systems built on them can now detect anomalies such as tumors or fractures in X-rays, MRIs, and CT scans with accuracy that, in some studies, rivals or even surpasses that of human experts. For example, deep learning models developed at Stanford have been applied to identify skin cancer from images, bringing high-accuracy diagnostics closer to the front lines of patient care.

Beyond imaging, artificial neurons enable personalized treatment planning by analyzing genetic data to predict an individual’s response to therapies. This technology is fostering better outcomes and more efficient care, with AI poised to play a critical role in everything from drug discovery to remote patient monitoring.

Finance: Enhancing Fraud Detection and Algorithmic Trading

In the complex world of finance, artificial neurons analyze millions of transactions in real time, recognizing suspicious patterns that might indicate fraudulent activity. Banks and credit card companies integrate AI-powered fraud detection systems that adapt to new methods of deception, protecting user accounts and financial data. For instance, machine learning systems quickly flag fraud attempts based on subtle changes in user behavior, device information, or transaction location.

In addition, artificial neurons underpin algorithmic trading strategies. These networks identify market trends and price patterns, and the trading systems built on them can execute trades in fractions of a second, far faster than human traders. By learning from vast datasets of historical market data, AI-driven trading platforms aim to react to market shifts and optimize portfolio allocations for better returns.

Autonomous Vehicles: Powering Perception and Decision-Making

Autonomous vehicles rely on neural networks to interpret and respond to the surrounding environment. Artificial neurons process data from cameras, radar, and LIDAR to recognize pedestrians, traffic signals, lane markings, and other vehicles. Tesla’s self-driving technology and similar systems use neural networks to continuously improve their perception capabilities based on real-world driving data.

These networks also help decision-making algorithms choose safe maneuvers, such as when to change lanes, merge into traffic, or stop for pedestrians. By learning from millions of scenarios, artificial neurons allow self-driving cars to adapt to new environments and unpredictable road conditions, bringing us closer to safer roads and fewer accidents.

Natural Language Processing: Powering Virtual Assistants and Translation Services

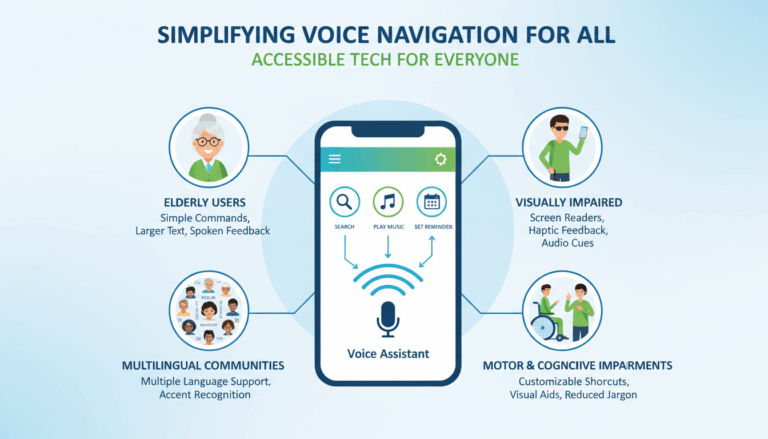

Every time you interact with a virtual assistant (like Google Assistant or Siri), artificial neurons are working behind the scenes to interpret spoken commands, answer questions, and carry out tasks. These advanced models can comprehend context, manage conversations, and even detect emotion based on tone, making technology more accessible and responsive.

They also drive translation services such as Google Translate, instantly converting text and speech across languages by identifying patterns in massive datasets. This global connectivity reduces language barriers, helping businesses, researchers, and travelers communicate more effectively around the world.

Manufacturing: Predictive Maintenance and Smart Automation

Manufacturers employ artificial neurons in neural networks to predict equipment failures before they happen, a practice known as predictive maintenance. By analyzing sensor data from machinery, these systems can detect unusual patterns and issue alerts long before a breakdown—maximizing uptime and reducing operational costs. For instance, IBM’s predictive maintenance solutions leverage neural networks to optimize maintenance schedules, resulting in significant savings for industries from automotive to aerospace.

Furthermore, artificial neurons enable smart automation, where robotic systems adjust their actions based on real-time data from the production line. This adaptability leads to higher efficiency and quality, laying the foundation for truly intelligent factories.

Artificial neurons are neither confined to labs nor limited to experimental research. As the real-world examples above illustrate, they are powering innovations in nearly every facet of modern life. For more technical details on how artificial neurons work, Neuroscience News offers an accessible explanation.