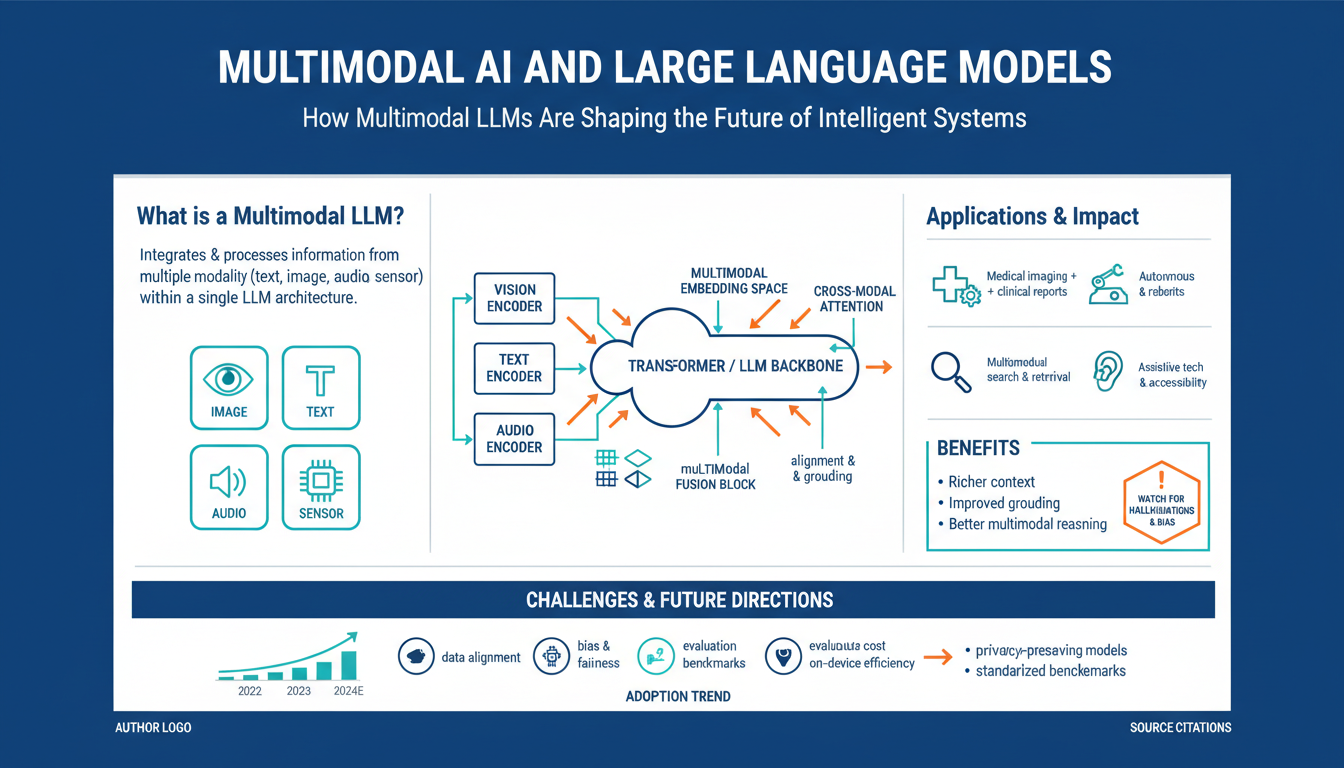

Multimodal AI and Large Language Models: How Multimodal LLMs Are Shaping the Future of Intelligent Systems

Multimodal LLMs Overview

Multimodal LLMs combine language understanding with non-text inputs (images, audio, video, and structured data) so a single model can […]