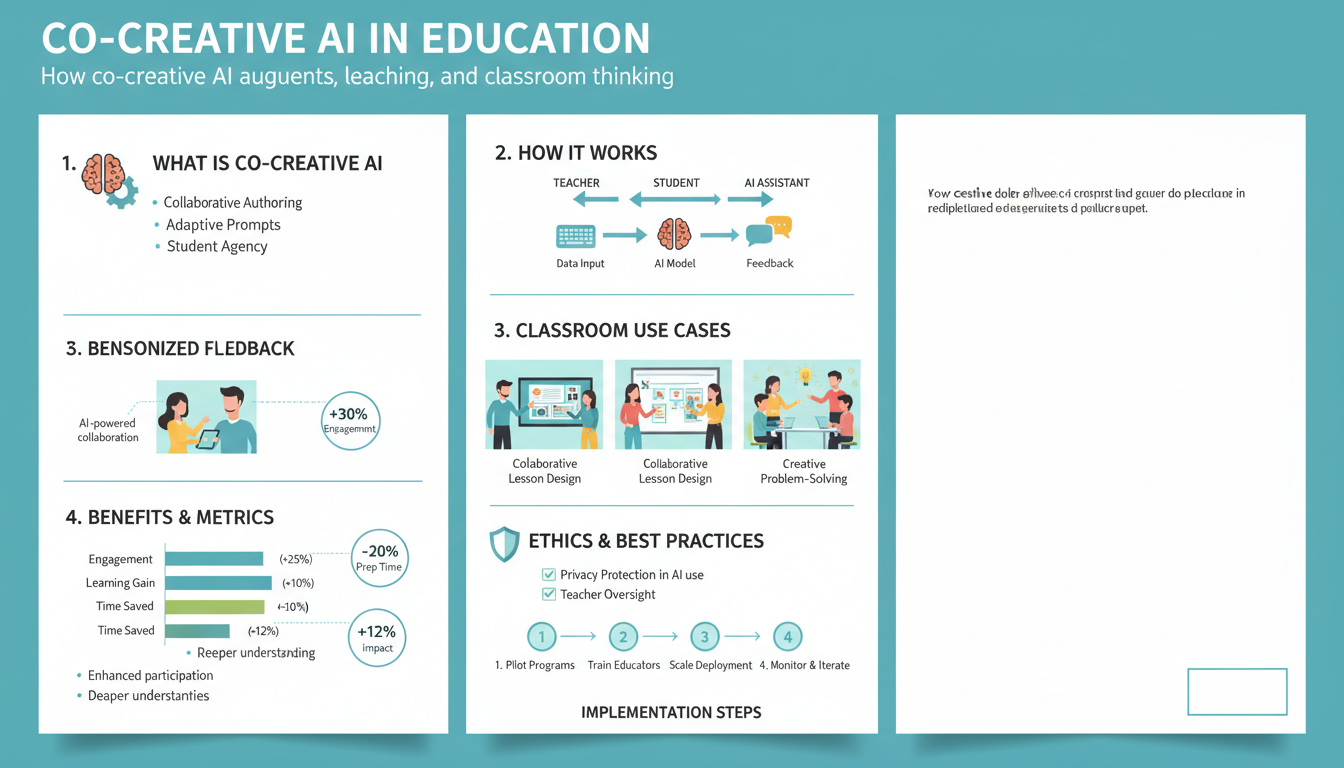

Define Co‑Creative AI

Co-creative AI refers to systems designed to collaborate with students and teachers as generative partners rather than as passive tools. Instead of fully automating tasks, these systems propose ideas, offer scaffolds, surface alternatives, and respond to human direction in iterative cycles that support ideation, revision, assessment, and reflection. In a classroom, this might be an AI that suggests thesis structures and counterarguments during essay drafting, proposes experiment variations in a lab simulation, or generates question prompts that deepen student inquiry, while the teacher sets learning goals and evaluates outcomes. Its key features are real-time, context-aware suggestions; controllable levels of autonomy; and explicit affordances for human judgment (editing, accepting or rejecting, and re-prompting). The value lies in extending what learners can attempt in the time available (faster iteration, exposure to diverse perspectives, and personalized scaffolding) while preserving learner agency and the teacher’s pedagogical control. Practical classroom use requires clear roles (who owns the work), transparent provenance of AI contributions, and guardrails against bias and privacy harms. When thoughtfully integrated, co-creative AI becomes a scaffold for higher-order thinking: it challenges assumptions, surfaces new directions, and helps learners practice metacognitive strategies while teachers guide the learning trajectory.

Learning Benefits and Outcomes

Students can gain deeper, more transferable learning when AI acts as a generative partner: personalized scaffolds surface just-in-time hints and alternative perspectives that keep tasks in the learner’s zone of proximal development, supporting skill growth without replacing human judgment. Real-time suggestions, such as counterarguments during essay drafting or variable tweaks in a virtual lab, shorten revision cycles and allow more iterative practice within the same class time. Co-creative workflows encourage metacognition by making reasoning explicit: when students accept, modify, or reject AI proposals, they practice evaluating evidence, attributing sources, and reflecting on strategy. Teachers benefit from richer formative data and a lighter routine workload, which frees time for targeted instruction and mentorship; AI-generated analytics can highlight misconceptions and growth patterns that inform differentiated lessons. Engagement and creativity can increase because learners explore a wider range of ideas through low-stakes experimentation, which supports risk-taking and novel problem framing. Importantly, outcomes include not only improved product quality (better arguments, clearer explanations, more robust models) but also stronger process skills: critical thinking, collaboration with intelligent tools, digital literacy, and self-regulated learning. When integrated with clear roles and assessment rubrics, co-creative AI can enhance both immediate classroom performance and longer-term readiness for complex, AI-augmented workplaces.

Designing Collaborative Activities

Start by specifying a clear learning goal and the human-AI division of labor: what should students produce, what decisions remain human, and when the AI should propose versus execute. Design tasks that require both idea generation and critical evaluation so the AI’s generative strengths and the students’ judgment complement one another.

Structure work around short iterative cycles: prompt → generate → critique → revise. Make each cycle explicit in the activity rubric (e.g., two AI proposals, one student revision, one peer critique). Short cycles increase practice opportunities, make provenance visible, and keep the classroom pace manageable.
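One way to make the cycle requirement checkable is to log each step as a simple event and compare the log against the rubric. The sketch below is illustrative only: the event names and the `REQUIRED_PER_CYCLE` counts are assumptions taken from the example rubric above (two AI proposals, one student revision, one peer critique), not part of any particular tool.

```python
from collections import Counter

# Hypothetical rubric: minimum number of each event type per cycle.
REQUIRED_PER_CYCLE = {
    "ai_proposal": 2,
    "student_revision": 1,
    "peer_critique": 1,
}


def missing_steps(events, required=REQUIRED_PER_CYCLE):
    """Return how many events of each required type are still missing."""
    seen = Counter(events)
    return {
        step: needed - seen[step]
        for step, needed in required.items()
        if seen[step] < needed
    }
```

An empty result means the cycle met the rubric; anything else tells the student which step to complete next.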

Control AI affordances deliberately. Limit autonomy with prompts or tool settings (suggestions only, explain reasoning, cite sources) and provide templates that scaffold productive interactions (hypothesis frames, counterargument starters, experiment-variant checklists). Require students to annotate what they accepted, modified, or rejected so assessment captures both final products and decision-making.
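The annotation requirement could be captured in a small structured record rather than free-form notes. The following is a minimal sketch under stated assumptions: the `SuggestionAnnotation` schema and its field names are hypothetical, and the only rule it enforces is that every decision carries a written rationale.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACCEPTED = "accepted"
    MODIFIED = "modified"
    REJECTED = "rejected"


@dataclass(frozen=True)
class SuggestionAnnotation:
    """One student's recorded response to a single AI suggestion."""
    student_id: str   # a pseudonymous ID, not a real name
    suggestion: str   # the AI's proposal, quoted or summarized
    decision: Decision
    rationale: str    # why the student made this decision

    def __post_init__(self):
        if not self.rationale.strip():
            raise ValueError("every decision needs a written rationale")


def count_decisions(annotations):
    """Tally decisions by type so a rubric can score the decision-making."""
    counts = {decision: 0 for decision in Decision}
    for annotation in annotations:
        counts[annotation.decision] += 1
    return counts
```

Rejecting empty rationales at creation time keeps the log usable for assessment, since the rubric scores the reasoning as well as the choice.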

Foster distributed responsibility in group work by assigning complementary roles: prompt engineer, quality reviewer, integrator, and presenter. Rotate roles across tasks so students practice prompt craft, source appraisal, and synthesis. Use joint artifacts (shared docs, versioned drafts) so AI contributions and human edits remain traceable.
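Role rotation is easy to get wrong by hand over several tasks. As a rough sketch, the function below shifts each student one role forward per task; it assumes groups of exactly four (one student per role), and the function name is illustrative.

```python
ROLES = ["prompt engineer", "quality reviewer", "integrator", "presenter"]


def assign_roles(students, task_index, roles=ROLES):
    """Map each student to a role, shifting by one position per task."""
    if len(students) != len(roles):
        raise ValueError("this sketch assumes exactly one student per role")
    return {
        student: roles[(position + task_index) % len(roles)]
        for position, student in enumerate(students)
    }
```

Under this scheme, every student holds each role once across any four consecutive tasks.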

Embed metacognitive prompts into the workflow: ask students to explain why they accepted an AI suggestion, what assumptions it introduced, and what evidence would change their choice. Pair formative rubrics with quick analytics (revision counts, suggestion acceptance rates) to guide teacher interventions.
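A suggestion acceptance rate can be computed from a plain list of decisions per student. The sketch below is an assumption-laden illustration: the decision labels, the function names, and the `low` and `high` thresholds are all hypothetical and would need tuning for a real class. It flags students who keep nearly every suggestion (possible over-reliance) or nearly none (possible disengagement from the tool) so the teacher can check in.

```python
def acceptance_rate(decisions):
    """Fraction of AI suggestions a student kept, as-is or modified."""
    if not decisions:
        return None  # no suggestions seen yet, so there is no rate to report
    kept = sum(d in ("accepted", "modified") for d in decisions)
    return kept / len(decisions)


def students_to_check_in(logs, low=0.2, high=0.9):
    """Return students whose rate falls outside [low, high], sorted by ID."""
    flagged = []
    for student, decisions in logs.items():
        rate = acceptance_rate(decisions)
        if rate is not None and (rate < low or rate > high):
            flagged.append(student)
    return sorted(flagged)
```

A flag here is only a prompt for a conversation, not a judgment; the rationale a student wrote for each decision matters more than the rate itself.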

Finally, account for equity and privacy: provide alternative low-bandwidth options, predefine content filters, and require citation of AI-origin ideas. These design moves keep co-creative activities productive, transparent, and centered on learning rather than automation.

Selecting Classroom AI Tools

Choose tools that map directly to your learning goals and keep teachers firmly in the loop: prefer systems that default to “suggestions only,” provide explanations for their outputs, expose provenance, and let teachers control autonomy levels so student judgment remains primary. (commonsense.org)

Make privacy and compliance non‑negotiable. Verify vendor commitments on FERPA/COPPA compliance, insist on data‑minimization and clear retention/deletion clauses in contracts, and adopt a strict rule against entering identifiable student information into general-purpose models. Require written assurances about how student data will (and will not) be used. (ai.nuviewusd.org)

Assess instructional fit and equity: the tool should support your rubric, integrate with existing workflows (LMS, shared docs), offer accessible interfaces and low‑bandwidth or offline options for students with limited connectivity, and have licensing terms that match classroom scale and budgets. Pilot with representative classes to surface gaps in accessibility and usability before wider rollout. (events.educause.edu)

Vet vendors and formalize governance. Use a short evaluation rubric during procurement that covers pedagogical alignment, privacy, explainability, accessibility, cost, and support for teacher professional learning. Require pilot evidence, a plan for teacher PD, and contractual language that prohibits using classroom data to further model training unless explicitly approved. Centralize approval through an IT/ethics review to ensure consistent safeguards. (impact.acbsp.org)
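A short evaluation rubric of this kind can be reduced to a weighted score. The sketch below is one possible arrangement, not a recommended standard: the weights, the 1 to 5 rating scale, and the `privacy_floor` cutoff are assumptions chosen for illustration. It treats a low privacy rating as disqualifying, in line with the earlier point that privacy is non-negotiable.

```python
# Hypothetical weights over the six criteria named above; they sum to 1.0.
CRITERIA_WEIGHTS = {
    "pedagogical_alignment": 0.25,
    "privacy": 0.25,
    "explainability": 0.15,
    "accessibility": 0.15,
    "cost": 0.10,
    "teacher_pd_support": 0.10,
}


def score_tool(ratings, weights=CRITERIA_WEIGHTS, privacy_floor=3):
    """Return a weighted score on a 1-5 scale, or None if disqualified."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    if ratings["privacy"] < privacy_floor:
        return None  # a strong score elsewhere cannot offset weak privacy
    return sum(weights[name] * ratings[name] for name in weights)
```

Making privacy a hard gate rather than just another weighted term prevents a polished but data-hungry tool from scoring its way through procurement.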

Finally, evaluate tools in practice: run a brief classroom trial, collect student and teacher feedback on usefulness and trust, and refuse tools that produce unexplainable outputs or undermine learner agency.

Classroom Implementation Steps

Begin by defining a clear learning objective and the specific student product you’ll assess; decide which decisions remain human (evaluation, synthesis) and which the AI will propose (ideas, drafts, experiment variants). Select and configure tools that default to “suggestions only,” expose provenance, and meet privacy requirements; create low‑bandwidth alternatives for students with limited access. Pilot the activity with a small class to surface usability, equity, and accessibility gaps, and schedule a brief teacher PD session that covers prompt design, reading AI explanations, and common failure modes.

Design the lesson as short iterative cycles: prompt → generate → critique → revise. Provide explicit rubrics that score both final artifacts and decision-making (students must annotate what they accepted, modified, or rejected, and why). Assign rotating group roles (prompt engineer, quality reviewer, integrator, presenter) so that every student practices prompt craft, source appraisal, and synthesis. Use shared, versioned documents so AI contributions and human edits remain traceable.

During class, model effective prompting and critique, scaffold students with starter prompts and frames (hypothesis, counterargument, test variations), and require the AI to explain its reasoning when possible. Monitor formative analytics (revision counts, suggestion acceptance) to target interventions and keep teachers in control of assessment judgments.

Include explicit equity and privacy steps: prohibit entering identifiable student data into open models, predefine content filters, and offer offline or teacher‑mediated options. After the pilot, collect student and teacher feedback, review artifacts for bias or misinformation, and iterate on prompts, rubrics, and tool settings before scaling.
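As a partial safeguard for the first of these steps, a prompt can be scrubbed before it leaves the classroom device. The sketch below is deliberately simple and is not a compliance tool: the patterns catch only obvious email addresses and US-style phone numbers, the `redact` function and placeholder tokens are hypothetical, and roster matching will miss nicknames and misspellings. Policy and teacher review still have to do most of the work.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(prompt, roster_names=()):
    """Replace obvious identifiers with placeholders before sending a prompt."""
    text = EMAIL.sub("[EMAIL]", prompt)
    text = PHONE.sub("[PHONE]", text)
    for name in roster_names:
        # Escape the name so characters like "." are matched literally.
        pattern = rf"\b{re.escape(name)}\b"
        text = re.sub(pattern, "[STUDENT]", text, flags=re.IGNORECASE)
    return text
```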

Ethics, Privacy, and Bias

Protect student autonomy and safety by designing clear boundaries: require informed consent from families, avoid entering identifiable student data into general-purpose models, and minimize collected data to what’s essential for instruction. Insist that tools label AI-origin content and expose provenance so teachers and students can trace suggestions and assess reliability. Treat teacher oversight as non‑negotiable—AI should propose, humans should decide—by embedding human checkpoints in every workflow and logging decisions for later review.

Actively guard against bias by testing tools with diverse, representative student samples and routine audits that surface disparate impacts on groups defined by language, race, disability, or socioeconomic status. Require vendors to document training data sources, known failure modes, and mitigation steps; where transparency is limited, run controlled classroom pilots to detect systematic errors before scaling. Teach students to interrogate AI outputs: ask for evidence, identify assumptions, and annotate accepted, modified, or rejected suggestions as part of assessment.
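A routine audit can start with something as basic as comparing error rates across groups. The sketch below assumes a teacher or reviewer has already labeled each AI output as erroneous or not, and that group labels are collected and stored in line with local privacy policy; the function names and the record format are illustrative. It reports a rate per group and the largest gap between any two groups.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """records: iterable of (group_label, is_error) pairs from reviewed outputs."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, is_error in records:
        totals[group] += 1
        errors[group] += int(is_error)
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the highest and lowest group error rates."""
    return max(rates.values()) - min(rates.values())
```

A large gap does not prove bias on its own, especially with small samples, but it indicates where a closer review of the underlying outputs should begin.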

Operationalize privacy and equity: include contractual clauses on data retention and non‑use for model training, provide low‑bandwidth or teacher‑mediated alternatives, and ensure accessible interfaces. Equip teachers with short PD on common failure modes, ethical red flags, and how to escalate concerns. Finally, institutionalize periodic reviews—privacy audits, bias impact assessments, and stakeholder feedback loops—so co‑creative AI supports learning without compromising rights or fairness.