Introduction to LLM Backends

Large Language Model (LLM) backends encompass the underlying infrastructure, frameworks, and methodologies that enable efficient deployment, management, and execution of sophisticated language models. These backends serve as the technological backbone, ensuring that language models operate seamlessly while managing the complexity and scale inherent in modern applications.

At the core of LLM backends is the integration with machine learning frameworks and scalable infrastructure platforms. This setup allows the models to function effectively, leveraging APIs and microservices architectures to support multiple end-user applications simultaneously. As the importance of natural language processing (NLP) continues to rise, the demand for robust backends becomes increasingly critical.

A crucial aspect of these backends is the environment they provide for training and inference. Training large language models requires substantial computational resources, often necessitating distributed computing environments and GPU or TPU acceleration to handle the vast datasets and complex computations involved. Integrating frameworks like TensorFlow or PyTorch within the backend architecture helps streamline the training process by offering optimized computations and flexible model customization.

Moreover, efficient data pipelines are integral to LLM backends. They carry data from collection through processing to model training and evaluation. Effective data management strategies within these pipelines can significantly impact the performance and accuracy of language models. Frameworks like Apache Kafka (event streaming) and Apache Airflow (scheduled workflow orchestration) can automate these workflows, supporting near-real-time data ingestion and regular model updates.
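The collection, processing, and hand-off stages described above can be sketched as chained Python generators. This is a minimal illustration using only the standard library, not a Kafka or Airflow pipeline; the stage names (collect, clean, split) and the word-based chunk size are assumptions made for the example.

```python
import re
from typing import Iterable, Iterator


def collect(sources: Iterable[str]) -> Iterator[str]:
    """Collection stage: yield raw documents one at a time."""
    for raw in sources:
        yield raw


def clean(docs: Iterable[str]) -> Iterator[str]:
    """Processing stage: collapse whitespace and drop empty documents."""
    for doc in docs:
        text = re.sub(r"\s+", " ", doc).strip()
        if text:
            yield text


def split(docs: Iterable[str], max_words: int = 5) -> Iterator[str]:
    """Chunking stage: cut each document into pieces of at most max_words words."""
    for doc in docs:
        words = doc.split()
        for start in range(0, len(words), max_words):
            yield " ".join(words[start:start + max_words])


def run_pipeline(sources: Iterable[str], max_words: int = 5) -> list[str]:
    """Compose the stages; each document streams through without intermediate lists."""
    return list(split(clean(collect(sources)), max_words))


# Hypothetical sample input: one messy document, one blank one, one short one.
chunks = run_pipeline(["  Hello   world, this is\n a raw   document. ", "   ", "Second doc"])
```

Because each stage is a generator, a stage can be replaced (for instance, reading from a message queue instead of a list) without touching the others.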

Another component of LLM backends is the deployment strategy, which determines how models are made accessible to end-users. Containerized deployments built with Docker and orchestrated with Kubernetes help models scale and allow updates to roll out with minimal downtime. Load balancers and RESTful APIs further support efficient scaling and easy integration with existing systems.

Security and compliance are also pivotal in designing LLM backends. As these models handle vast amounts of sensitive data, ensuring data privacy and adhering to regulatory requirements (such as GDPR) is paramount. Techniques like data anonymization and encryption, alongside regular audits and compliance checks, help in safeguarding user information.
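As a rough illustration of the anonymization step mentioned above, the sketch below redacts email addresses from text and replaces user identifiers with a keyed hash before data reaches a model or a log. The regular expression, the placeholder string, and the function names are assumptions for this example; real deployments usually need broader detection of personal data, and keyed hashing is pseudonymization rather than full anonymization.

```python
import hashlib
import hmac
import re

# Deliberately simple pattern (an assumption of this sketch); it will not
# catch every valid address format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[EMAIL]", text)


def pseudonymize(user_id: str, key: bytes) -> str:
    """Map a user ID to a stable token using HMAC-SHA256 with a secret key."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


redacted = redact_emails("Contact alice@example.com or bob@test.org.")
```

The same user ID always maps to the same token under a given key, so records can still be joined without storing the raw identifier.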

Lastly, monitoring and logging frameworks enable continuous oversight of model behavior in production. Tools like Prometheus or the ELK Stack facilitate real-time monitoring and analytics, allowing for rapid identification and resolution of performance bottlenecks or failures.

Overall, LLM backends epitomize the convergence of software development, data engineering, and AI research, each aspect demanding careful consideration to enable the full potential of language models in diverse applications.

Overview of LangChain Framework

LangChain is a comprehensive framework designed to simplify the development of applications that harness the power of large language models (LLMs). The framework aims to bridge the gap between the technical intricacies of LLMs and practical application needs, offering an intuitive, scalable solution that supports a wide variety of use cases.

LangChain provides a high-level API that allows developers to easily integrate language models into their applications. This API abstracts many of the complexities involved in managing language models, such as handling diverse data formats, managing long contexts for language processing, and dealing with input/output operations efficiently.

One of the standout features of LangChain is its capability to manage contextual information. Large language models have a fixed context window, which limits how much text they can process in a single request. LangChain addresses this limitation with memory and retrieval components that select what goes into each prompt, for example by keeping recent messages, summarizing older ones, or retrieving only the passages relevant to the current query. Keeping the window filled with relevant material helps the model stay coherent across interactions.
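One simple policy for staying inside a fixed context window is to keep only the most recent messages that fit a token budget. The sketch below is not LangChain's implementation; trim_history is a hypothetical helper, and count_tokens is a stand-in that counts whitespace-separated words where a real system would use the model's tokenizer.

```python
def count_tokens(text: str) -> int:
    """Hypothetical stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the newest messages whose combined token count fits within budget."""
    kept: list[str] = []
    used = 0
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["a b c", "d e", "f g h i", "j"]
window = trim_history(history, budget=6)
```

Recency is only one possible relevance signal; summarizing older turns or retrieving by similarity are alternatives with different trade-offs.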

LangChain also excels in supporting multi-turn conversations by enabling the retention and tracking of conversational state—a feature especially useful for chatbot applications. Through intelligent context management, LangChain allows language models to keep track of previous interactions and user inputs, facilitating more coherent and contextually aware responses.
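Conversational state tracking of this kind can be sketched as a store keyed by session ID that remembers the last few turns and prepends them to each new prompt. The class name ConversationStore, the max_turns limit, and the prompt layout are assumptions for illustration and do not reflect LangChain's own classes.

```python
from collections import defaultdict, deque


class ConversationStore:
    """Remembers the last max_turns (user, assistant) exchanges per session."""

    def __init__(self, max_turns: int = 3) -> None:
        # A bounded deque discards the oldest turn automatically when full.
        self._turns = defaultdict(lambda: deque(maxlen=max_turns))

    def add_turn(self, session_id: str, user: str, assistant: str) -> None:
        self._turns[session_id].append((user, assistant))

    def build_prompt(self, session_id: str, new_input: str) -> str:
        """Render the remembered turns followed by the new user input."""
        lines = []
        for user, assistant in self._turns[session_id]:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        lines.append(f"User: {new_input}")
        lines.append("Assistant:")
        return "\n".join(lines)


store = ConversationStore(max_turns=2)
store.add_turn("s1", "Hi", "Hello!")
store.add_turn("s1", "What is an LLM?", "A large language model.")
store.add_turn("s1", "Name one.", "GPT-2.")
prompt = store.build_prompt("s1", "Who made it?")
```

Keeping sessions separate means one user's history never leaks into another user's prompt.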

Additionally, LangChain incorporates a library of tools and utilities for data pipelining and preprocessing. These include document loaders for common file and data formats and text splitters that chunk long documents to fit a model’s context window, enabling developers to prepare text data for model input efficiently. Tokenization and encoding themselves are typically handled by the model provider or its tokenizer library rather than by LangChain.

In terms of deployment, a LangChain application is an ordinary Python or JavaScript service, so it can be packaged with Docker and run on Kubernetes or a cloud platform like any other service, allowing for scalable and flexible deployment architectures. This matters for applications that expect high traffic or need to serve multiple model instances simultaneously. Chains can be exposed over RESTful APIs (for example, through the companion LangServe project), and streaming responses support real-time interaction with front-end platforms.

One of the critical aspects of LangChain is its focus on customization and extensibility. The framework is designed to be adaptable, enabling developers to implement custom strategies and plugins that cater to specific application needs. This extensibility is supported through a modular architecture, where components can be easily swapped or extended without disrupting the entire system.

LangChain also places emphasis on security, acknowledging the sensitive nature of data processed by language models. Its documentation includes guidance on measures such as limiting the permissions granted to tools and credentials, while encryption, user authentication, and access control policies are implemented in the surrounding application. A framework cannot guarantee regulatory compliance by itself, so meeting industry standards and regulatory requirements remains the responsibility of the team deploying the application.

Finally, LangChain incorporates monitoring and logging capabilities, vital for maintaining and optimizing language model performance. Its callback system and tracing integrations (such as LangSmith) record the inputs, outputs, latency, and token usage of individual calls, and the hosting service can export system metrics to tools like Prometheus and Grafana. This level of visibility helps developers swiftly identify and resolve issues, enhancing the reliability and robustness of applications built on the framework.

In essence, LangChain stands out as a powerful tool for developers seeking to leverage the potential of language models in their applications. It streamlines the complexities of LLM management and deployment, offering a rich set of features designed to facilitate efficient, scalable, and innovative language model applications.

Developing Custom LLM Backends

Developing a custom LLM backend involves designing an infrastructure tailored to specific business needs, focusing on flexibility, scalability, and performance optimization. This approach requires a deep understanding of both software development and machine learning principles, enabling the creation of a robust environment for deploying and managing language models.

To begin crafting a custom backend, one must decide on the foundational technologies and frameworks that will support the workflow. Consider using well-established machine learning frameworks such as TensorFlow or PyTorch. These platforms offer extensive libraries and tools for handling tasks like tensor operations, model building, and training. They also provide compatibility with various processing units (CPU, GPU, TPU), thus facilitating efficient resource utilization.

Once you choose an appropriate machine learning framework, focus on setting up the necessary infrastructural components. A crucial decision is the selection of a computing environment that can accommodate the intense computational demands. Cloud platforms such as AWS, Google Cloud, or Azure provide scalable resources, allowing dynamic resource allocation based on workload requirements. For on-premises solutions, leveraging containers through Docker paired with orchestration tools like Kubernetes ensures the flexible and efficient deployment of LLMs.

In custom LLM backends, creating tailored data pipelines is paramount for preprocessing, managing, and streaming data efficiently. Apache Kafka (for event streaming) and Apache Airflow (for scheduling and orchestrating batch workflows) can automate these pipelines, ensuring that data flows from its sources through cleaning and transformation to the training and inference stages. Streaming tools like Kafka support near-real-time processing, while scheduled workflows let models be retrained or updated with recent data on a regular cadence.

Moreover, designing an effective API layer is essential to enable interaction between the LLM backend and front-end applications or external systems. Building RESTful APIs allows for standardized HTTP communication, making language models accessible across various platforms and devices. Incorporating GraphQL can further enhance this API system by enabling clients to request exactly the data they need, reducing bandwidth usage and improving performance.
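A minimal version of such a REST layer can be sketched with Python's standard library alone. The /generate path, the JSON field names, and the generate stub are assumptions for this example; a production service would more likely use a web framework and call a real model. The request logic lives in a plain function so it can be tested without opening a socket.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str) -> str:
    """Hypothetical model stub; a real backend would run inference here."""
    return prompt.upper()


def handle_generate(body: bytes) -> tuple[int, dict]:
    """Validate a JSON request body and return (HTTP status, response payload)."""
    try:
        payload = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "body must be valid JSON"}
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt:
        return 400, {"error": "'prompt' must be a non-empty string"}
    return 200, {"completion": generate(prompt)}


class GenerateHandler(BaseHTTPRequestHandler):
    """Maps POST /generate onto handle_generate."""

    def do_POST(self) -> None:
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, result = handle_generate(self.rfile.read(length))
        data = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Start a blocking development server on localhost (not called here)."""
    HTTPServer(("127.0.0.1", port), GenerateHandler).serve_forever()
```

Calling serve() and sending a POST to /generate with a body such as {"prompt": "hello"} would return a JSON object with a completion field.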

Incorporating security measures is another vital component of developing a custom LLM backend. Data protection strategies, such as encryption and token-based authentication, are critical in safeguarding user information. Privacy-preserving techniques like differential privacy can reduce the risk that individual records are exposed and can support compliance with regulations like GDPR, though no single technique guarantees compliance.
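Token-based authentication can be illustrated with an HMAC-signed token that carries a user ID and an expiry time. This is a simplified sketch under stated assumptions: the token layout and the function names issue_token and verify_token are invented for the example, and real systems typically rely on an established standard such as JWT through a vetted library.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional


def issue_token(user_id: str, secret: bytes, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Create a token of the form base64(user_id:expiry).base64(signature)."""
    expires = int((time.time() if now is None else now) + ttl_seconds)
    payload = f"{user_id}:{expires}".encode("utf-8")
    signature = hmac.new(secret, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode("ascii") + "."
            + base64.urlsafe_b64encode(signature).decode("ascii"))


def verify_token(token: str, secret: bytes,
                 now: Optional[float] = None) -> Optional[str]:
    """Return the user ID if the signature is valid and the token is unexpired."""
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # wrong number of parts or invalid base64
        return None
    expected = hmac.new(secret, payload, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking information through timing.
    if not hmac.compare_digest(signature, expected):
        return None
    user_id, expires = payload.decode("utf-8").rsplit(":", 1)
    current = time.time() if now is None else now
    return user_id if current < int(expires) else None


token = issue_token("alice", b"demo-secret", ttl_seconds=60, now=1000.0)
```

The payload is parsed only after the signature check succeeds, so untrusted input never reaches the parsing step.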

Monitoring and logging are indispensable for the continuous optimization of LLM performance. Tools like ELK Stack or Prometheus integrate easily into LLM backends, providing real-time analytics and insights into system operations. These tools help track model performance metrics, system utilization, and error rates, enabling timely troubleshooting and system enhancements.
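The metrics named above (call counts, error rates, latency) can be collected in-process with a small decorator before being exported to a monitoring system. The Metrics class and its method names are hypothetical, and this sketch does not use the Prometheus client library; it only shows what such a library would be asked to record.

```python
import time
from collections import defaultdict
from functools import wraps


class Metrics:
    """In-memory counters for calls, errors, and cumulative latency per name."""

    def __init__(self) -> None:
        self.calls = defaultdict(int)
        self.errors = defaultdict(int)
        self.total_seconds = defaultdict(float)

    def track(self, name: str):
        """Decorator that records one call, its duration, and any raised error."""
        def decorator(func):
            @wraps(func)
            def wrapper(*args, **kwargs):
                start = time.perf_counter()
                try:
                    return func(*args, **kwargs)
                except Exception:
                    self.errors[name] += 1
                    raise
                finally:
                    # Runs on both success and failure.
                    self.calls[name] += 1
                    self.total_seconds[name] += time.perf_counter() - start
            return wrapper
        return decorator

    def error_rate(self, name: str) -> float:
        calls = self.calls[name]
        return self.errors[name] / calls if calls else 0.0


metrics = Metrics()


@metrics.track("generate")
def generate(prompt: str) -> str:
    """Hypothetical model call that rejects empty prompts."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt[::-1]
```

Dividing total_seconds by calls gives a mean latency; a real deployment would usually record histograms so that tail latency is visible as well.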

Finally, a custom LLM backend must prioritize flexibility and scalability. By using a modular architecture, different components of the system can be independently developed and maintained, allowing for quick adaptation and integration of new features. This modular design not only supports ongoing innovation but also aids in maintaining high performance and reliability as application demands evolve.
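One way to get this kind of modularity in Python is to define each component by the interface it exposes and compose the components in a thin backend object. The Preprocessor and Model protocols and the toy implementations below are assumptions chosen for illustration, not a prescribed architecture.

```python
from dataclasses import dataclass
from typing import Protocol


class Preprocessor(Protocol):
    def transform(self, text: str) -> str: ...


class Model(Protocol):
    def generate(self, prompt: str) -> str: ...


class NormalizingPreprocessor:
    """Trims surrounding whitespace and lowercases the input."""

    def transform(self, text: str) -> str:
        return text.strip().lower()


class EchoModel:
    """Toy model that echoes its prompt."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ReverseModel:
    """Toy model that reverses its prompt."""

    def generate(self, prompt: str) -> str:
        return prompt[::-1]


@dataclass
class Backend:
    """Composes components; depends only on their interfaces, not their classes."""
    preprocessor: Preprocessor
    model: Model

    def handle(self, text: str) -> str:
        return self.model.generate(self.preprocessor.transform(text))


backend = Backend(NormalizingPreprocessor(), EchoModel())
first = backend.handle("  Hello ")
backend.model = ReverseModel()  # swap one component; nothing else changes
second = backend.handle("  Hello ")
```

Because Backend knows only the two protocols, a new model or preprocessing step can be introduced and tested in isolation.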

Comparative Analysis: LangChain vs. Custom Approaches

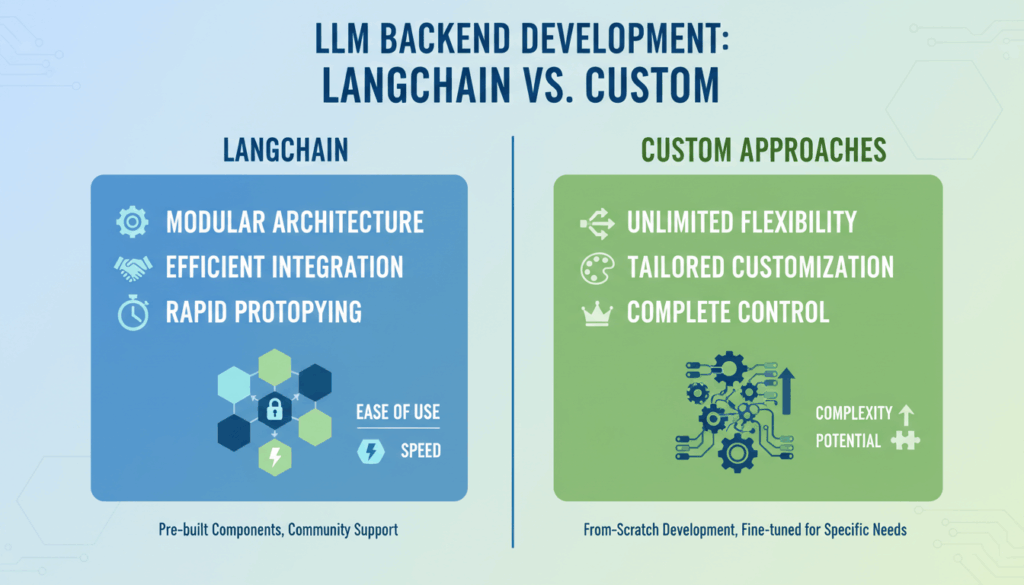

When examining the effectiveness of LangChain versus custom approaches in developing LLM backends, several critical aspects emerge that warrant detailed exploration. At the core of this comparison is how each method handles the intricacies of managing large language models and aligns with varying application requirements.

LangChain provides developers with a ready-made framework that significantly streamlines the development process. Its high-level API and modular design are tailored to simplify complex tasks such as context management and conversational state retention. This makes LangChain an attractive option for developers who prioritize ease of integration and rapid deployment.

Ease of Use and Learning Curve

One of LangChain’s primary strengths is its user-friendliness. The framework abstracts many of the complex backend operations, allowing developers to focus on building applications without delving deeply into the lower-level details of LLM handling. This results in a gentler learning curve compared to custom approaches, which often require more intimate knowledge of machine learning frameworks like TensorFlow or PyTorch.

In contrast, developing a custom backend necessitates a thorough understanding and manual configuration of all components. This includes setting up data pipelines, managing computing resources, and creating APIs. While this approach offers unparalleled flexibility, it requires a substantial investment of time and expertise, making it more suitable for teams with specialized skills or unique requirements that pre-packaged solutions might not satisfy.

Flexibility and Customization

LangChain is designed to be extensible, offering a variety of plugins and modules that can be adjusted to suit specific needs. However, there’s a limit to how much you can customize within its given framework. This might be a constraint for applications with very specialized requirements.

Conversely, custom approaches unlock virtually unlimited flexibility. Developers have the freedom to tailor every aspect of the backend to meet precise specifications, whether it’s designing unique data processing flows or integrating specific machine learning optimizations. However, this flexibility comes at the cost of increased complexity and resource demand, as each component must be built, tested, and maintained independently.

Performance Optimization

Performance is another key differentiator. LangChain provides a set of optimized, out-of-the-box solutions that leverage best practices in language model management and deployment. Its integration capabilities with platforms like Docker and Kubernetes facilitate straightforward scaling and performance management in typical usage scenarios.

On the other hand, custom backends allow developers to implement highly specialized performance optimizations. By directly manipulating the underlying ML frameworks and computing environments, teams can fine-tune resource utilization and model efficiency to align perfectly with desired performance goals. This can be particularly advantageous for high-demand applications requiring maximum throughput and minimal latency.
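Request batching is one example of an optimization a custom backend can apply directly: grouping prompts so the model is invoked once per batch rather than once per prompt, which tends to improve throughput on accelerators. The helper names and the CountingModel stub below are assumptions for the sketch; the right batch size depends on the model and hardware.

```python
from typing import Callable, Iterator, Sequence


def batched(items: Sequence[str], batch_size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of items with at most batch_size elements each."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def run_batched(prompts: Sequence[str],
                model_fn: Callable[[Sequence[str]], list[str]],
                batch_size: int) -> list[str]:
    """Call model_fn once per batch and return outputs in the original order."""
    outputs: list[str] = []
    for batch in batched(prompts, batch_size):
        outputs.extend(model_fn(batch))
    return outputs


class CountingModel:
    """Hypothetical model stub that records how many batch calls it receives."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, batch: Sequence[str]) -> list[str]:
        self.calls += 1
        return [prompt.upper() for prompt in batch]


model = CountingModel()
results = run_batched(["a", "b", "c", "d", "e"], model, batch_size=2)
```

Five prompts with a batch size of two result in three model calls instead of five, at the cost of some added latency for requests that wait for a batch to fill.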

Scalability and Deployment

Both LangChain and custom approaches support scalable deployments, but they differ in the level of control offered. LangChain’s pre-designed integrations simplify scaling processes using cloud environments and container orchestration systems. This is sufficient for many standard applications where scalability needs are predictable and in line with default configurations.

Custom solutions can be systematically engineered from the ground up to accommodate specific scalability requirements. This allows for nuanced control over every deployment aspect, ensuring applications can grow seamlessly alongside increasing user demands. The trade-off is the complexity of managing these deployments, which requires robust infrastructure expertise.
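As one concrete example of a scaling rule, the Kubernetes Horizontal Pod Autoscaler computes the desired replica count as the current count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below reproduces only that core formula with minimum and maximum bounds; the function name and default bounds are assumptions, and the real autoscaler also applies tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """ceil(current_replicas * current_metric / target_metric), clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Four replicas at 90% average utilization with a 60% target scale out to six.
scaled_out = desired_replicas(4, current_metric=90, target_metric=60)
```

A custom backend could substitute a different signal for utilization, such as queue depth or tokens generated per second, while keeping the same proportional rule.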

Conclusion

Ultimately, the choice between LangChain and a custom LLM backend approach hinges on specific project requirements, expertise, and resource availability. LangChain’s ease of use and predefined structures suit applications requiring rapid development and deployment within standard frameworks. In contrast, custom approaches are ideal when project demands surpass the capabilities or constraints posed by pre-existing solutions, rewarding teams with the flexibility to innovate and customize extensively.

Key Considerations for Choosing Between LangChain and Custom Solutions

Understanding how to choose between LangChain and developing a custom solution for large language model (LLM) backends requires analyzing several key factors. The decision largely hinges on how these solutions align with your specific project requirements, organizational resource availability, and long-term strategic goals. Below are several considerations to guide this evaluation process.

First, assess your project timeline and available expertise. LangChain offers a streamlined solution that allows developers to quickly integrate and deploy LLMs using predefined templates and APIs. This can be particularly advantageous if your team lacks extensive machine learning expertise and needs to deliver a project within a tight schedule. On the flip side, building a custom backend involves configuring and managing all components—from data pipelines and computing environments to API services—which can be time-consuming and require specialized skills in machine learning and data engineering.

Next, consider the complexity and specificity of your application needs. LangChain is designed with modularity in mind, facilitating certain levels of customization through plugins and configuration options. This makes it suitable for applications with standard language model requirements. However, if your application demands highly specialized features—such as proprietary data processing workflows or unique model interpretations—a custom solution may offer the flexibility needed to innovate and integrate these bespoke elements effectively.

Resource management and cost efficiency are equally important. Utilizing LangChain could reduce initial development and operational overhead, thanks to its built-in integrations and optimizations. It can result in cost savings by decreasing the need for extensive infrastructure investments and ongoing maintenance efforts. Conversely, a custom backend might incur higher initial costs due to the need for tailored development, but it could offer longer-term savings through optimized resource utilization and potentially lower dependency on third-party licenses or services.

When deliberating on scalability and performance requirements, it’s crucial to evaluate your current and future user load. LangChain provides scalable options through integration with cloud services and container orchestration, making it adequate for many applications. However, custom solutions allow precise control over all facets of scaling, enabling performance optimizations that match specific operational and growth needs, which can be crucial for applications with unpredictable or highly variable traffic patterns.

Security is another critical domain. Data privacy and regulatory compliance are always at the forefront when managing LLMs. LangChain documents recommended security practices, which may be sufficient for common cases, but compliance ultimately depends on how the application is built and deployed. For organizations with stringent security policies or unique data privacy concerns, developing a custom solution might be necessary. This allows direct oversight and customization of security measures, which can include advanced encryption techniques or proprietary compliance strategies designed to fit specific regulations.

Lastly, contemplate the importance of vendor lock-in and future-proofing your solution. LangChain offers rapid deployment and integration efficiencies; however, it ties you to its ecosystem and strategic direction. Building a custom backend provides the freedom to pivot strategically without relying on third-party updates or support, potentially safeguarding your investment against future changes in external vendor strategies.

Each of these considerations carries different weight depending on your organizational context and project demands. Understanding your core priorities (minimizing time-to-market, maximizing customization, controlling costs, or ensuring adaptable growth) will inform the decision on whether to leverage LangChain’s structured offerings or invest in a tailored custom solution that aligns with broader long-term objectives.
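One way to make this weighing explicit is a simple weighted decision matrix. Every number below is a placeholder invented for illustration: the weights stand for one hypothetical team's priorities and the 1-to-5 scores for that team's own assessment, so the output is not a general verdict on either option.

```python
def weighted_score(weights: dict[str, int], scores: dict[str, int]) -> float:
    """Weighted average of per-criterion scores; higher weights count for more."""
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


# Hypothetical priorities: this team cares most about time-to-market.
weights = {"time_to_market": 3, "customization": 2, "cost": 2,
           "scalability": 1, "security": 1, "lock_in": 1}

# Hypothetical 1-5 assessments for each option (5 = best fit).
langchain_scores = {"time_to_market": 5, "customization": 3, "cost": 4,
                    "scalability": 3, "security": 3, "lock_in": 2}
custom_scores = {"time_to_market": 2, "customization": 5, "cost": 2,
                 "scalability": 4, "security": 4, "lock_in": 5}

langchain_total = weighted_score(weights, langchain_scores)
custom_total = weighted_score(weights, custom_scores)
```

Changing the weights, for example raising customization and lock-in for a team with specialized requirements, can reverse the ranking, which is the point of writing the priorities down.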