Understanding Context in Agentic AI Systems

At its core, context in agentic AI refers to an agent’s awareness of its environment, task history, user preferences, and current objectives. Unlike traditional AI systems, which typically respond to one input at a time, agentic systems are designed to act proactively, adjust to dynamic inputs, and pursue goals autonomously. Understanding context is therefore essential for building agents that interact meaningfully with users and their environments.

Context encompasses both explicit data, such as user commands or environmental conditions, and implicit information, including previous interactions, inferred motivations, or broader situational awareness. Effectively managing and leveraging this multifaceted context is what enables agentic AI systems to make informed decisions, adapt to evolving scenarios, and deliver personalized experiences.

For example, consider a virtual assistant that schedules meetings. If it understands context, it won’t just look for free slots on a calendar; it will factor in the user’s typical work patterns, travel schedule, and preferred times of day for different meeting types. This goes beyond rote instruction-following; it demonstrates situational intelligence. For a deeper dive into the role of context in AI, AI Magazine discusses foundational concepts in context-aware computing.
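To make the scheduling example concrete, here is a minimal sketch of ranking free slots with context rather than taking the first open one. The preference table, the `Slot` type, and the scoring rule are all hypothetical; a real assistant would learn preferences from history instead of hard-coding them.

```python
from dataclasses import dataclass

# Hypothetical preference profile: preferred start hours per meeting type.
PREFERRED_HOURS = {
    "focus": range(9, 12),        # deep-work blocks in the morning
    "one_on_one": range(13, 17),  # afternoons for 1:1s
    "external": range(10, 16),
}

@dataclass
class Slot:
    day: str
    hour: int  # start hour on a 24h clock

def score_slot(slot: Slot, meeting_type: str, travel_days: set[str]) -> int:
    """Higher is better; being free on the calendar is only the starting point."""
    if slot.day in travel_days:
        return -1  # the user is traveling, so skip the slot even though it is free
    preferred = PREFERRED_HOURS.get(meeting_type, range(9, 17))
    return 2 if slot.hour in preferred else 1

def rank_slots(free: list[Slot], meeting_type: str, travel_days: set[str]) -> list[Slot]:
    # Sort by score (stable, so ties keep calendar order) and drop excluded slots.
    scored = [(score_slot(s, meeting_type, travel_days), s) for s in free]
    return [s for score, s in sorted(scored, key=lambda p: -p[0]) if score >= 0]

free = [Slot("Mon", 9), Slot("Tue", 14), Slot("Wed", 14)]
print(rank_slots(free, "one_on_one", travel_days={"Wed"}))
```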

Capturing and using context effectively in agentic systems involves several key steps:

- Context Acquisition: Gather data from diverse sources such as sensors, user interactions, historical logs, and external APIs. Sophisticated agents can even use natural language processing to interpret user intent from conversations, as discussed in a Nature research article on dialogue agents.

- Context Representation: Structure contextual data in a way that the agent can reason about. This could involve knowledge graphs, vector embeddings, or hierarchical memory systems. Representing context accurately helps the agent recall relevant details and draw connections across tasks.

- Context Reasoning: Analyze the current situation in light of past experiences and predicted future states. Reasoning engines can weigh contextual information, prioritize actions, and resolve ambiguities, enabling more nuanced behavior.
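The three steps above can be sketched as a small pipeline. This is a toy illustration under stated assumptions: observations arrive as key/value pairs, the representation is a flat fact dictionary plus a history list, and the reasoning step is a single hand-written rule (`ask_for_location` is a hypothetical action name). Production agents would swap in knowledge graphs, embeddings, or a language model at each stage.

```python
from dataclasses import dataclass, field

@dataclass
class Context:
    """Hypothetical structured representation built from raw signals."""
    facts: dict[str, str] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)

def acquire(sources: list[dict[str, str]]) -> list[tuple[str, str]]:
    # Acquisition: flatten key/value observations from several sources
    # (e.g. a sensor reading, a parsed user message, an API response).
    return [(k, v) for src in sources for k, v in src.items()]

def represent(observations: list[tuple[str, str]], ctx: Context) -> Context:
    # Representation: later observations overwrite earlier facts,
    # while every observation is kept in the history for auditing.
    for key, value in observations:
        ctx.facts[key] = value
        ctx.history.append(f"{key}={value}")
    return ctx

def reason(ctx: Context) -> str:
    # Reasoning: a toy rule that resolves an ambiguity using stored facts.
    if ctx.facts.get("intent") == "book_meeting" and "location" not in ctx.facts:
        return "ask_for_location"
    return "proceed"

ctx = represent(acquire([{"intent": "book_meeting"}, {"user": "ana"}]), Context())
print(reason(ctx))
```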

By recognizing and dynamically integrating context, agentic AI systems transform from static tools into intelligent collaborators. For those wanting to learn more about technical approaches, the Stanford HAI guide on contextual AI provides accessible explanations and further reading.

Key Challenges in Context Management for AI Agents

Effectively managing context is a multifaceted challenge, and it is central to making AI agents behave coherently and responsively. Developers face several persistent hurdles, each requiring careful strategy as the field evolves. Below, we explore the key pain points and offer best-practice insights, with examples and pointers to authoritative resources for further reading.

Maintaining Contextual Coherence Across Interactions

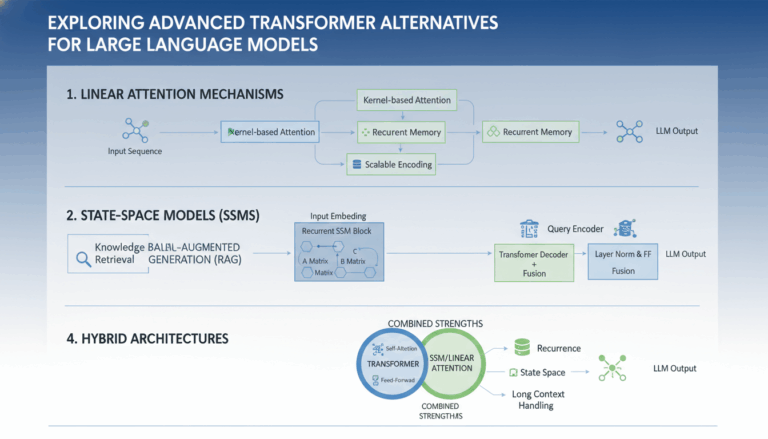

AI agents must keep track of conversational history and situational specifics to deliver meaningful interactions. Without robust context management, agents can lose track of who said what, leading to irrelevant or repetitive answers. This is particularly hard in multi-turn dialogues and long-running tasks, such as those handled by personal assistants or customer support bots. Techniques such as context windowing, memory embedding, and retrieval-augmented generation (RAG) help, but each trades memory limits against computational cost. Work from Microsoft Research identifies dynamic context updating and selective memory retention as critical to improving user experience.
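Context windowing, the simplest of these techniques, keeps only the most recent turns that fit a fixed budget. The sketch below assumes tokens can be approximated by whitespace splitting; a real system would count tokens with the model's own tokenizer, and the budget value is illustrative.

```python
def window_context(turns: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent turns whose combined size fits the budget.

    Token counts are approximated by whitespace splitting (an assumption);
    swap in the model's tokenizer for real budgets.
    """
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):      # walk from newest to oldest
        cost = len(turn.split())
        if used + cost > max_tokens:
            break                     # older turns fall out of the window
        kept.append(turn)
        used += cost
    return list(reversed(kept))       # restore chronological order

history = ["user: hi there", "bot: hello how can I help", "user: book a room"]
print(window_context(history, max_tokens=10))
```

The trade-off mentioned above is visible here: anything older than the window is simply forgotten, which is why windowing is often paired with summarization or retrieval.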

Balancing Memory Scope and Resource Constraints

AI agents need to balance how much information they remember with the limitations of available memory and processing power. Storing too much context can slow down the agent or introduce irrelevant noise, while too little context leads to misunderstandings. Smart context pruning, where the agent learns to retain only the most salient details, is an emerging solution. For example, in enterprise applications, using vector databases and recurrent neural techniques allows agents to summarize and recall relevant parts of a long interaction efficiently. A detailed discussion on such strategies is provided by Databricks’ guide to Retrieval-Augmented Generation.
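Salience-based pruning can be sketched as "keep the top-k items by score, in their original order". The salience scores here are assumed to come from elsewhere (a relevance model, or heuristics such as recency and explicit user emphasis); the function only shows the selection step.

```python
import heapq

def prune_by_salience(items: list[tuple[str, float]], budget: int) -> list[str]:
    """Keep the `budget` most salient items, preserving their original order.

    Each item is (text, salience); how salience is computed is an assumption
    left to the caller.
    """
    top = heapq.nlargest(budget, enumerate(items), key=lambda p: p[1][1])
    keep_idx = sorted(i for i, _ in top)  # restore conversational order
    return [items[i][0] for i in keep_idx]

items = [("greeting", 0.1), ("budget is $500", 0.9),
         ("small talk", 0.2), ("prefers trains", 0.8)]
print(prune_by_salience(items, budget=2))
```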

Privacy and Security of Persistent Contexts

Agents that store information between sessions must be designed with privacy and data protection in mind, especially when handling sensitive personal or enterprise data. Developers must enforce rigorous data retention and access policies to comply with regulations such as GDPR or HIPAA; anonymization, encryption, and user consent mechanisms are baseline requirements. A lack of oversight can result in inadvertent leaks or misuse of user data, as documented in studies published in Nature.
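One common building block is replacing direct identifiers with keyed hashes before context is persisted. The sketch below uses HMAC-SHA256 from the Python standard library; the hard-coded key and the choice of which fields count as identifiers are assumptions for illustration. Note that under GDPR pseudonymized data still counts as personal data, so this complements, rather than replaces, encryption and retention policies.

```python
import hashlib
import hmac

# Assumption: in production this key lives in a secrets manager, not in source code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

def pseudonymize(identifier: str) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so records can still be
    linked across sessions, but the token cannot be recomputed without the key.
    """
    return hmac.new(PSEUDONYM_KEY, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def scrub_record(record: dict[str, str], identifier_fields: set[str]) -> dict[str, str]:
    # Pseudonymize identifying fields before the record is persisted.
    return {k: pseudonymize(v) if k in identifier_fields else v for k, v in record.items()}

stored = scrub_record({"email": "a@example.com", "seat_pref": "window"}, {"email"})
print(stored)
```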

Adjusting to Evolving Contexts and User Preferences

Context in real-world applications is rarely static. Preferences, goals, and situations change over time, and AI agents should adapt accordingly. For example, a travel-planning bot needs to notice when a user’s preferred travel method or budget changes. This dynamic adaptation requires active context monitoring and inference. Incorporating real-time feedback loops and maintaining transparent user profiles helps the agent stay relevant as circumstances shift. The DeepMind blog offers a thorough exploration of adaptive context mechanisms.
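A simple way to let preferences drift with the user is an exponentially weighted update, where each new observation pulls the scores toward the latest choice and old habits fade. The decay rate `alpha` is a hypothetical tuning value, not a recommended setting.

```python
def update_preference(scores: dict[str, float], observed: str,
                      alpha: float = 0.3) -> dict[str, float]:
    """Exponentially weighted update: recent choices outweigh old ones.

    alpha (hypothetical default) controls how quickly old preferences fade.
    """
    options = set(scores) | {observed}
    return {
        opt: (1 - alpha) * scores.get(opt, 0.0) + alpha * (1.0 if opt == observed else 0.0)
        for opt in options
    }

def preferred(scores: dict[str, float]) -> str:
    return max(scores, key=scores.get)

travel = {"flight": 1.0}          # the user always flew in the past
for _ in range(2):
    travel = update_preference(travel, "train")
print(preferred(travel))          # the profile has shifted toward trains
```

With `alpha = 0.3`, a single train booking is not enough to overturn a strong flying habit, but two consecutive ones are; raising `alpha` makes the agent react faster at the cost of overreacting to one-off choices.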

Disambiguation and Noise Filtering

Natural conversations are rife with ambiguity and off-topic noise, so agents must distinguish relevant context from irrelevant information. This often requires natural language understanding (NLU) models trained on diverse dialogue data. Refining intent detection and entity recognition pipelines, and periodically retraining them on real usage data, can help. Techniques for improving NLU capabilities are discussed in depth on the Google AI Blog.
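As a rough illustration of noise filtering, the sketch below drops utterances whose word overlap (Jaccard similarity) with the active topic falls under a threshold. This is a deliberately crude stand-in for the NLU-based relevance models described above, and the threshold is a hypothetical value that would need tuning on real data.

```python
import re

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def filter_relevant(utterances: list[str], topic: str, threshold: float = 0.1) -> list[str]:
    """Keep utterances whose word overlap with the active topic is high enough.

    Jaccard overlap stands in for a trained relevance model (assumption).
    """
    topic_tokens = tokens(topic)
    return [u for u in utterances if jaccard(tokens(u), topic_tokens) >= threshold]

topic = "book a flight to Paris"
chat = ["Actually make the flight to Paris on Friday",
        "lol my cat just knocked over a plant"]
print(filter_relevant(chat, topic))
```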

Addressing these challenges will empower developers to craft more intelligent and adaptable AI agents, capable of supporting users with greater context awareness and reliability. As research and tooling progress, keeping abreast of emerging best practices and solutions, supported by trusted sources, is essential for anyone committed to advancing agentic AI.

Techniques for Effective Context Representation and Tracking

Effectively representing and tracking context is crucial for building agentic AI systems that can understand, remember, and adapt to dynamic environments. Context management goes far beyond simple memory or state-handling—it involves designing mechanisms that allow agents to grasp the who, what, when, where, and why of a situation, and continuously update their knowledge as new information is encountered. Let’s explore some key techniques for robust context representation and tracking, complete with examples and actionable steps.

Structured Context Modeling

At the foundation of context management lies the concept of structured context modeling. This involves defining explicit schemas or ontologies that map out the variables and relationships relevant to an AI agent’s environment. For instance, representing user interactions in a customer support chatbot may involve entities such as user profile, interaction history, and intent.

- Step 1: Identify critical entities and relations—Start by listing the core components the agent needs to keep track of. Read more on the importance of structured context from Microsoft Research: Adaptive Systems and Interaction.

- Step 2: Define a flexible schema—Use formats like JSON, GraphQL, or specialized knowledge graphs to codify relationships and allow future expansion.

- Example: In a virtual assistant, the context schema could include fields for the current task, location, user preferences, and session history.
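The virtual-assistant schema from the example can be sketched as a Python dataclass that serializes to JSON. The class and field names are hypothetical and simply mirror the fields listed above; optional fields with defaults are one way to leave room for future expansion without breaking stored records.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional

@dataclass
class VirtualAssistantContext:
    """Hypothetical context schema mirroring the fields in the example.

    New optional fields can be added later without invalidating old records.
    """
    current_task: Optional[str] = None
    location: Optional[str] = None
    user_preferences: dict[str, str] = field(default_factory=dict)
    session_history: list[str] = field(default_factory=list)

ctx = VirtualAssistantContext(current_task="plan_trip", location="Lisbon")
ctx.user_preferences["seat"] = "aisle"
ctx.session_history.append("user asked for flights to Rome")

serialized = json.dumps(asdict(ctx))                        # persist as JSON
restored = VirtualAssistantContext(**json.loads(serialized))  # load it back
print(serialized)
```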

Dynamic State Tracking and Updates

Real-world contexts evolve, which means agentic systems must not only store but also update contextual information as interactions progress. Dynamic state tracking is typically implemented via memory modules or blackboard architectures, where new data can overwrite or append existing context records.

- Step 1: Decide on mutable vs. immutable state—Choose which parts of the context can change during a session and which should remain static.

- Step 2: Use checkpoints or snapshots—Store periodic snapshots of the context, especially in long-running systems, to facilitate rollback or auditing. Learn more about this by exploring research on memory architectures in AI.

- Example: A conversational agent tracking a multi-turn dialog updates intents and slot-filling variables at each exchange, while keeping track of previously resolved queries in an immutable log.
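The dialog-tracking example can be sketched with a mutable slot dictionary for the active request and an append-only tuple for resolved queries. The class, intent, and slot names are hypothetical; the point is the split between state that changes every turn and history that is only ever extended.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class DialogState:
    """Mutable slots for the active request plus an append-only log of resolved ones."""
    intent: Optional[str] = None
    slots: dict[str, str] = field(default_factory=dict)  # mutable within the exchange
    resolved: tuple = ()                                 # immutable log of finished requests

    def update(self, intent: Optional[str], new_slots: dict[str, str]) -> None:
        # Each turn may change the intent and fill or overwrite slot values.
        if intent is not None:
            self.intent = intent
        self.slots.update(new_slots)

    def resolve(self) -> None:
        # Freeze the finished request into the log, then clear the mutable part.
        if self.intent is None:
            raise ValueError("nothing to resolve")
        frozen = (self.intent, tuple(sorted(self.slots.items())))
        self.resolved = self.resolved + (frozen,)  # build a new tuple, never mutate in place
        self.intent, self.slots = None, {}

state = DialogState()
state.update("book_flight", {"dest": "Rome"})
state.update(None, {"date": "Fri"})
state.update(None, {"dest": "Milan"})  # user corrects the destination
state.resolve()
print(state.resolved)
```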

Contextual Embedding Techniques

Many modern agentic systems leverage machine learning, especially transformer-based models, to create embeddings that capture context in dense vector representations. These embeddings encapsulate not only the explicit state but also inferred semantics, enabling nuanced responses and decision-making.

- Step 1: Generate embeddings from raw input—Use pretrained language models (like BERT or GPT) to encode full conversational histories or environmental data.

- Step 2: Fine-tune with domain-specific data—Improve contextual understanding by training embeddings on industry- or task-specific datasets. The effectiveness of this approach is highlighted in an ACL paper on context-aware dialog systems.

- Example: In a healthcare application, embeddings can include both structured medical records and conversational context to recommend personalized interventions.
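The healthcare example combines structured records with conversational context. One simple way to do that is to concatenate scaled structured features with a text embedding. In this sketch the "embedding" is a hashed bag-of-words vector standing in for a pretrained encoder such as BERT, and the field names and ranges are hypothetical.

```python
import hashlib
import math

def text_vector(text: str, dim: int = 16) -> list[float]:
    """Stand-in for a pretrained encoder: an L2-normalized hashed bag of words.

    A real system would call a language model here; hashing keeps the sketch
    dependency-free.
    """
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def patient_context_vector(record: dict[str, float],
                           ranges: dict[str, tuple[float, float]],
                           conversation: str) -> list[float]:
    # Min-max scale structured fields to [0, 1] so they are comparable in
    # magnitude to the normalized text vector, then concatenate the two.
    structured = [(record[name] - lo) / (hi - lo) for name, (lo, hi) in sorted(ranges.items())]
    return structured + text_vector(conversation)

vec = patient_context_vector({"age": 50, "bmi": 25},
                             {"age": (0, 100), "bmi": (10, 40)},
                             "mild headache since Monday")
print(len(vec), vec[:2])
```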

Temporal and Spatial Context Management

Agentic systems often operate in environments where timing and location are vital. Managing temporal (time-based) and spatial (location-based) context allows agents to make decisions grounded in the sequence of events or spatial relationships.

- Step 1: Timestamp actions and events—Log every user action or environmental change with a precise timestamp to construct event timelines.

- Step 2: Map spatial entities—Use coordinates, semantic mapping (rooms, objects), or even graph structures to represent space. For an in-depth look, see the literature on spatial context in AI.

- Example: Autonomous agents in smart homes track not only current tasks but the timing and location of all interactions to optimize routines or anticipate user needs.
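Here is a minimal sketch of the smart-home case: every event carries a timestamp and a semantic location, which allows both time-window queries and simple routine detection. The `Event` type, room names, and action labels are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

@dataclass(frozen=True)
class Event:
    timestamp: datetime
    room: str      # semantic location, e.g. "kitchen"
    action: str

def events_in(log: list[Event], room: str, since: datetime, until: datetime) -> list[Event]:
    """Events for one room inside a half-open time window, oldest first."""
    return sorted((e for e in log if e.room == room and since <= e.timestamp < until),
                  key=lambda e: e.timestamp)

def usual_hour(log: list[Event], room: str, action: str) -> Optional[int]:
    # Most frequent hour of day for an action: a simple signal for anticipating routines.
    hours = Counter(e.timestamp.hour for e in log if e.room == room and e.action == action)
    return hours.most_common(1)[0][0] if hours else None

base = datetime(2024, 5, 6, 7, 0)
log = [
    Event(base, "kitchen", "coffee_on"),
    Event(base + timedelta(days=1, minutes=5), "kitchen", "coffee_on"),
    Event(base + timedelta(hours=12), "living_room", "lights_on"),
    Event(base + timedelta(days=2, hours=1), "kitchen", "coffee_on"),
]
print(usual_hour(log, "kitchen", "coffee_on"))
```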

Privacy and Data Minimization

Last but not least, effective context management must align with privacy best practices, especially when handling sensitive user data. Apply data minimization by tracking only the context the agent needs to operate, and anonymize data where possible. For standards guidance, consult the International Organization for Standardization, in particular ISO/IEC 27001 (information security management) and its privacy extension, ISO/IEC 27701.

- Step 1: Review context schema regularly to strip out non-essential or high-risk data fields.

- Step 2: Implement access controls, context expiration policies, and encryption for stored context information.
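Two of these steps, an allowlist that keeps non-essential fields out of storage and an expiration policy, can be sketched in a few lines. The allowlisted field names and the TTL are hypothetical; encryption at rest and access control are outside the scope of this sketch. The optional `now` parameter exists only to make expiry testable.

```python
import time
from typing import Any, Optional

# Hypothetical allowlist: only fields the agent actually needs are stored.
ALLOWED_FIELDS = {"language", "timezone", "open_task"}

class MinimalContextStore:
    def __init__(self, ttl_seconds: float) -> None:
        self.ttl = ttl_seconds
        self._data: dict[str, tuple[Any, float]] = {}

    def put(self, key: str, value: Any, now: Optional[float] = None) -> bool:
        if key not in ALLOWED_FIELDS:
            return False  # non-essential field: never stored
        self._data[key] = (value, time.time() if now is None else now)
        return True

    def get(self, key: str, now: Optional[float] = None) -> Any:
        now = time.time() if now is None else now
        item = self._data.get(key)
        if item is None:
            return None
        value, stored_at = item
        if now - stored_at > self.ttl:
            del self._data[key]  # expired: delete rather than serve stale data
            return None
        return value

store = MinimalContextStore(ttl_seconds=3600)
store.put("timezone", "Europe/Berlin")
store.put("home_address", "...")  # rejected by the allowlist
```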

By thoughtfully applying these techniques, developers can create agentic AI systems that are not only smarter and more responsive but also ethical and secure.

Best Practices for Maintaining Context Consistency

Maintaining context consistency is essential for building robust agentic AI systems that are truly intelligent and responsive. When context management is overlooked, AI agents risk making illogical decisions, repeating tasks unnecessarily, or failing to adapt to user needs. Here’s how developers can ensure their agentic AI maintains a reliable understanding of its environment and objectives:

Establish Clear Context Boundaries

Defining the scope of context is the first step toward consistent management. Developers should determine which information is relevant to the agent’s tasks, such as user preferences, session states, or environmental cues, and separate it from irrelevant data. Clear boundaries help agents avoid “context drift” and keep their working context small. As a practical approach, researchers at MIT suggest a modular architecture in which individual modules manage their own contextual information and share only the data other modules need.
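A minimal sketch of context boundaries: each module receives a read-only view that contains only the keys in its declared scope. The module names and scopes are hypothetical; `types.MappingProxyType` is the standard-library way to expose a dictionary read-only.

```python
from collections.abc import Mapping
from types import MappingProxyType

# Hypothetical mapping from each module to the context keys it may read.
MODULE_SCOPES = {
    "scheduler": {"calendar", "timezone", "meeting_prefs"},
    "billing": {"plan", "payment_status"},
}

def scoped_view(full_context: dict[str, object], module: str) -> Mapping[str, object]:
    """Give a module a read-only view containing only the keys in its scope."""
    allowed = MODULE_SCOPES.get(module, set())
    return MappingProxyType({k: v for k, v in full_context.items() if k in allowed})

context = {"calendar": [], "timezone": "CET", "plan": "pro", "payment_status": "ok"}
scheduler_ctx = scoped_view(context, "scheduler")
print(dict(scheduler_ctx))  # billing data never reaches the scheduler
```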

Implement Context Versioning

Context can change rapidly, especially in dynamic environments. Applying version control to context data lets agents track and revert changes, minimizing information loss and inconsistencies. Developers might use timestamped context snapshots or assign version numbers to context states. For example, Microsoft Research discusses how context versioning is critical for collaborative and persistent AI agents, since it provides a mechanism to audit and roll back context errors.
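Version numbers and rollback can be sketched with a list of full snapshots, where the list index is the version number. This is a simple design assumption that trades memory for clarity; storing diffs or events is the usual alternative for large contexts. Here a rollback is recorded as a new version, so the audit trail is never rewritten.

```python
import copy
from typing import Any

class VersionedContext:
    """Each commit stores a full, numbered snapshot so earlier states can be restored."""

    def __init__(self, initial: dict[str, Any]) -> None:
        self._versions: list[dict[str, Any]] = [copy.deepcopy(initial)]

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    @property
    def current(self) -> dict[str, Any]:
        return copy.deepcopy(self._versions[-1])  # callers cannot mutate history

    def commit(self, changes: dict[str, Any]) -> int:
        new_state = copy.deepcopy(self._versions[-1])
        new_state.update(copy.deepcopy(changes))
        self._versions.append(new_state)
        return self.version

    def rollback(self, to_version: int) -> int:
        # Rolling back appends a copy of the old state instead of deleting newer ones.
        if not 0 <= to_version <= self.version:
            raise ValueError(f"unknown version {to_version}")
        self._versions.append(copy.deepcopy(self._versions[to_version]))
        return self.version

ctx = VersionedContext({"budget": 500})
ctx.commit({"budget": 5000})  # e.g. a misheard value
ctx.rollback(0)
print(ctx.version, ctx.current)
```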

Leverage Memory Augmentation Techniques

Many agentic AI systems benefit from augmented or persistent memory frameworks that let them recall past interactions or events with high fidelity. Vector databases, semantic embeddings, and knowledge graphs help store and retrieve historical context efficiently. Knowledge graphs in particular let agents resolve ambiguities and maintain consistent reference points throughout long user sessions, as demonstrated in Google’s AI systems.
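To show how a knowledge graph supplies consistent reference points, here is a tiny in-memory triple store. The entity and predicate names are hypothetical, and a production agent would use a graph database; the idea is that "my manager" resolves to the same node every time it comes up in a session.

```python
from collections import defaultdict

class TripleStore:
    """Tiny in-memory knowledge graph of (subject, predicate, object) triples."""

    def __init__(self) -> None:
        self._by_subject: dict[str, set[tuple[str, str]]] = defaultdict(set)

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self._by_subject[subject].add((predicate, obj))

    def objects(self, subject: str, predicate: str) -> set[str]:
        return {o for p, o in self._by_subject.get(subject, set()) if p == predicate}

    def subjects(self, predicate: str, obj: str) -> set[str]:
        return {s for s, edges in self._by_subject.items() if (predicate, obj) in edges}

kg = TripleStore()
kg.add("user", "manager", "dana")
kg.add("dana", "works_in", "berlin_office")
kg.add("project_x", "owner", "dana")

# "my manager" and later "her projects" resolve against the same node.
manager = next(iter(kg.objects("user", "manager")))
print(manager, kg.subjects("owner", manager))
```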

Enforce Context Validation and Normalization

Regular validation checks can flag when context information diverges from expected patterns, helping to maintain integrity. This might include validation rules for input data, cross-referencing against known states, and normalizing values into canonical forms. Academic guidance from the Stanford AI Lab underscores the importance of automated context checks, especially in high-stakes domains like healthcare or finance.
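Normalization and validation work well as two separate passes: first map free-form values to canonical forms, then check them against rules. The alias table, supported currencies, and rules below are hypothetical examples; `Decimal` is used for amounts because binary floats can misrepresent money, which matters in finance settings.

```python
from datetime import date
from decimal import Decimal

# Hypothetical alias table for free-form currency mentions.
CURRENCY_ALIASES = {"usd": "USD", "$": "USD", "eur": "EUR", "€": "EUR"}

def normalize(ctx: dict[str, str]) -> dict[str, object]:
    """Map free-form values onto canonical forms before they are stored."""
    out: dict[str, object] = dict(ctx)
    if "currency" in ctx:
        raw = ctx["currency"].strip()
        out["currency"] = CURRENCY_ALIASES.get(raw.lower(), raw.upper())
    if "amount" in ctx:
        out["amount"] = Decimal(ctx["amount"].replace(",", ""))
    if "due_date" in ctx:
        out["due_date"] = date.fromisoformat(ctx["due_date"])
    return out

def validate(ctx: dict[str, object]) -> list[str]:
    """Return rule violations; an empty list means the context passed."""
    errors = []
    if ctx.get("currency") not in {"USD", "EUR"}:
        errors.append("unsupported currency")
    amount = ctx.get("amount")
    if not isinstance(amount, Decimal) or amount <= 0:
        errors.append("amount must be a positive number")
    return errors

clean = normalize({"currency": " $ ", "amount": "1,250.00", "due_date": "2024-07-01"})
print(clean, validate(clean))
```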

Facilitate Transparent Context Updates

When context changes, whether due to user input, system events, or environmental cues, every relevant component must be updated and stakeholders notified. Good design practices include event-driven architectures, broadcasting context changes, and logging updates for transparency. OpenAI, for example, highlights the importance of transparent context handling for keeping agentic AI accountable and explainable.
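The event-driven pattern can be sketched as a small publish/subscribe "context bus" that applies a change, notifies subscribers of that key, and appends an audit log entry. The class name and log format are hypothetical; in a distributed system the same role is usually played by a message broker.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Any, Callable

Listener = Callable[[str, Any, Any], None]  # (key, old_value, new_value)

class ContextBus:
    """Applies context changes, notifies subscribers, and keeps an audit log."""

    def __init__(self) -> None:
        self.state: dict[str, Any] = {}
        self.log: list[tuple[str, str, Any, Any, str]] = []
        self._listeners: dict[str, list[Listener]] = defaultdict(list)

    def subscribe(self, key: str, listener: Listener) -> None:
        self._listeners[key].append(listener)

    def set(self, key: str, value: Any, source: str) -> None:
        old = self.state.get(key)
        if old == value:
            return  # no change, so no event and no log entry
        self.state[key] = value
        # Log when, what, before/after, and who caused the change.
        self.log.append((datetime.now(timezone.utc).isoformat(), key, old, value, source))
        for listener in self._listeners[key]:
            listener(key, old, value)

bus = ContextBus()
bus.subscribe("timezone", lambda k, old, new: print(f"{k}: {old} -> {new}"))
bus.set("timezone", "CET", source="user")
bus.set("timezone", "EST", source="travel_api")
```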

By integrating these best practices, developers can build agentic AI systems that better understand their environment, adapt quickly to new information, and deliver more intelligent, context-aware solutions.

Leveraging Memory Architectures for Long-Term Contextual Awareness

In today’s rapidly evolving AI landscape, one of the most critical advancements lies in how agentic AI systems manage and utilize memory architectures to achieve long-term contextual awareness. Effective context management allows agents to make nuanced decisions, learn from accumulated experience, and carry out complex tasks with continuity, much like a human would.

To attain long-term contextual awareness, developers must carefully select and implement memory architectures that empower agentic AI to retain, recall, and reason over extensive histories of interactions and information. Below, we’ll dive into best practices and step-by-step strategies for leveraging these memory systems for smarter development.

Understanding Memory Architectures in Agentic AI

Memory in artificial agents broadly divides into short-term (working) memory and long-term memory, with episodic memory, the record of specific past events and interactions, usually treated as a kind of long-term memory. Each type serves a distinct function, much like its biological counterpart. Short-term memory enables agents to process current input and produce immediate responses, while long-term memory allows them to store knowledge, rules, and historical interactions. Cognitive science research underlines the importance of these distinctions for developing intelligent, context-aware systems.

Best Practices for Implementing Long-Term Memory

- Structured Data Stores: Utilize databases or advanced knowledge graphs (like Google’s Knowledge Graph) that allow agents to record and organize facts, conversations, and relationships over time. This structure ensures reliable retrieval and updating as the agent encounters new information.

- Episodic Memory Integration: Incorporate episodic memory modules, enabling agents to recall past sequences or events in context. For example, autonomous customer service bots that reference previous customer interactions can provide more personalized and helpful solutions. Studies from Google AI show how episodic memory boosts adaptability and personalization in AI agents.

- Hierarchical Memory Management: Organize memory components into tiers according to access frequency and relevance. This is loosely analogous to how human memory prioritizes recent and salient information, and it lets agents focus resources on recent or significant context while keeping deep historical knowledge accessible when needed.
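A two-tier version of hierarchical memory management can be sketched with an LRU "hot" tier and an unbounded "cold" archive: least-recently-used items are demoted instead of deleted, and a cold hit promotes the item back. The tier sizes and string-only values are simplifying assumptions; real systems might back the cold tier with a database or vector store.

```python
from collections import OrderedDict
from typing import Optional

class TieredMemory:
    """A small LRU 'hot' tier in front of an unbounded 'cold' archive."""

    def __init__(self, hot_capacity: int) -> None:
        self.hot_capacity = hot_capacity
        self.hot: OrderedDict[str, str] = OrderedDict()
        self.cold: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self.cold.pop(key, None)
        self.hot[key] = value
        self.hot.move_to_end(key)
        while len(self.hot) > self.hot_capacity:
            old_key, old_value = self.hot.popitem(last=False)  # least recently used
            self.cold[old_key] = old_value                    # demote, don't delete

    def get(self, key: str) -> Optional[str]:
        if key in self.hot:
            self.hot.move_to_end(key)  # refresh recency
            return self.hot[key]
        if key in self.cold:
            value = self.cold.pop(key)
            self.put(key, value)       # promote on access
            return value
        return None

mem = TieredMemory(hot_capacity=2)
for k, v in [("a", "1"), ("b", "2"), ("c", "3")]:
    mem.put(k, v)
print(list(mem.hot), list(mem.cold))
```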

Mechanisms for Enhancing Contextual Awareness

Achieving true context-awareness means agents don’t just store data; they must understand it in relation to ongoing tasks and environments.

- Relevance-based Retrieval: Design retrieval algorithms that surface the most pertinent memories based on current goals or conversational threads. Semantic search and embedding-based memory recall (see Neural Turing Machines, which read from memory by content-based addressing) typically match contextual cues to stored knowledge more accurately than exact keyword lookup.

- Temporal Linking: Ensure agents can follow chains of related events over long periods. For instance, a virtual health assistant might remember a user’s medication history and adjust its advice as new symptoms appear, following recommendations from medical informatics research.

- Contextual Tagging: Use metadata and tags to annotate entries with context indicators such as time, location, user intent, or emotional state. This enables rapid filtering and nuanced response generation, a practice encouraged in IBM’s guidelines on conversational AI.
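The three mechanisms above fit together naturally: tags narrow the candidate set, cosine similarity ranks what remains, and timestamps let the agent replay one thread in order. In this sketch the vectors are toy two-dimensional values standing in for real embeddings, and the `Memory` type and tag names are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Memory:
    text: str
    vector: list[float]                                 # embedding from any encoder (assumed)
    tags: dict[str, str] = field(default_factory=dict)  # e.g. {"intent": "refund"}
    timestamp: float = 0.0

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(memories: list[Memory], query: list[float], k: int = 3,
             required_tags: Optional[dict[str, str]] = None) -> list[Memory]:
    # Tag filter first (cheap), then rank the survivors by semantic similarity.
    required_tags = required_tags or {}
    candidates = [m for m in memories
                  if all(m.tags.get(t) == v for t, v in required_tags.items())]
    return sorted(candidates, key=lambda m: cosine(m.vector, query), reverse=True)[:k]

def timeline(memories: list[Memory], tag: str, value: str) -> list[Memory]:
    # Temporal linking: every memory in one thread, oldest first.
    return sorted((m for m in memories if m.tags.get(tag) == value), key=lambda m: m.timestamp)

mems = [
    Memory("refund requested", [1.0, 0.0], {"intent": "refund", "case": "42"}, 1.0),
    Memory("asked about shipping", [0.0, 1.0], {"intent": "shipping"}, 2.0),
    Memory("refund approved", [0.9, 0.1], {"intent": "refund", "case": "42"}, 3.0),
]
print([m.text for m in retrieve(mems, [1.0, 0.0], k=2)])
```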

Practical Steps to Build Context-Rich Agents

- Identify Key Contextual Needs: Map out the types of long-term information your agent must handle (e.g., user preferences, ongoing projects, external events) and create a schema for each type.

- Select or Build a Scalable Memory Backend: Choose technologies such as Redis, MongoDB, or custom vector stores tailored for both structured and unstructured data.

- Integrate with Contextual Triggers: Establish event triggers or intent recognition layers that prompt memory retrieval (like keyword spotting or semantic referencing).

- Test with Extended Simulations: Continuously evaluate your agent’s performance across simulated, long-running scenarios, ensuring the agent maintains coherence and relevance over time. Refer to Microsoft’s episodic memory explorations for benchmarking approaches.
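For the contextual-triggers step, a keyword-spotting layer can be as small as a table of regular expressions mapped to memory categories. The patterns and category names are hypothetical; in practice this layer would likely be an intent classifier, with regex triggers serving as a cheap fallback.

```python
import re

# Hypothetical trigger table: phrase pattern -> memory category to fetch.
TRIGGERS = [
    (re.compile(r"\b(last time|again|as before)\b", re.IGNORECASE), "episodes"),
    (re.compile(r"\b(prefer|usual|always)\b", re.IGNORECASE), "preferences"),
]

def memories_to_fetch(utterance: str) -> list[str]:
    """Return the memory categories whose trigger patterns appear in the utterance."""
    return [category for pattern, category in TRIGGERS if pattern.search(utterance)]

print(memories_to_fetch("Book the usual hotel, same as last time"))
```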

By prioritizing robust memory architectures and context-oriented retrieval techniques, developers can dramatically improve agentic AI’s ability to sustain meaningful, knowledgeable conversations and decisions. This not only elevates user experiences but also propels the field towards truly intelligent, autonomous systems capable of lifelong learning.

Ethical Considerations in Contextual Decision-Making

In the rapidly evolving landscape of Agentic AI, context management is not solely a technical concern; it is equally an ethical endeavor. The ability of AI agents to make nuanced, context-sensitive decisions introduces a spectrum of moral challenges that developers must address to safeguard both users and broader society.

One core ethical consideration is the handling of sensitive or personal data within context management systems. When AI agents personalize interactions using context, even seemingly benign data can become sensitive if combined or used improperly. Developers must adhere to strict data privacy standards such as those outlined by the General Data Protection Regulation (GDPR) in Europe and similar frameworks worldwide. This means implementing mechanisms for anonymization, clear consent protocols, and robust data security at every stage of the context lifecycle.

Another critical aspect lies in bias mitigation. Contextual decision-making algorithms trained on biased data, or exposed to biased environments, can inadvertently perpetuate or even amplify societal inequalities. It is essential to perform regular audits for bias and to integrate guidelines such as the recommendations from the NIST AI Risk Management Framework to ensure fairness and transparency. For example, regular retraining using diverse datasets and implementing explainability features can help developers and stakeholders understand how context is shaping decisions.

Transparency in decision-making is also key for both ethical and practical reasons. Users must be able to understand—at least at a high level—how their data and context are influencing the actions of an AI agent. This builds trust and helps users make informed choices about their interactions with AI systems. Incorporating explainable AI (XAI) techniques, such as context attribution maps or decision logs, reflects best practice as recommended by academic leaders like the Stanford Artificial Intelligence Laboratory.

A step-by-step approach to ethical AI context management might include:

- Assess and map potential ethical risks in context gathering and use.

- Apply privacy-by-design principles, limiting context collection to what is strictly necessary and providing users with granular controls.

- Monitor and evaluate the impact of context management on different user groups to detect and mitigate bias continuously.

- Foster ongoing communication with stakeholders to ensure transparency and adaptability as real-world implications emerge.
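The monitoring step can start with something as simple as comparing favorable-outcome rates across user groups. The sketch below computes per-group rates and the ratio of the lowest to the highest rate; the group labels and data are hypothetical. One commonly cited heuristic, the "four-fifths rule" from US employment guidance, flags ratios below 0.8 for review, though the right metric and threshold depend on the application.

```python
from collections import defaultdict

def outcome_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per user group, from (group, favorable) pairs."""
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        favorable[group] += int(ok)
    return {g: favorable[g] / totals[g] for g in totals}

def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

decisions = ([("group_a", True)] * 8 + [("group_a", False)] * 2
             + [("group_b", True)] * 5 + [("group_b", False)] * 5)
rates = outcome_rates(decisions)
print(rates, disparity_ratio(rates))
```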

Ultimately, the road to ethical context management in Agentic AI is ongoing. Continuous updates, proactive risk mitigation, and a strong commitment to accountability provide a foundation for smarter, more trustworthy AI systems. For more insights on current best practices, consider resources like the Brookings Institution’s analysis of algorithms and bias, which offers expert perspectives on ethical AI development.