Define ROI objectives

Too many projects start by buying a conversational AI platform and hoping value will follow; the real failure point is not the model but vague objectives that can’t be measured. If you want to prove conversational AI ROI, start by translating business goals into crisp, measurable outcomes within the first 30–90 days of a pilot. Building on this foundation, we’ll align expected benefits with enterprise metrics so you can prioritize use cases that deliver quantifiable impact rather than vanity wins.

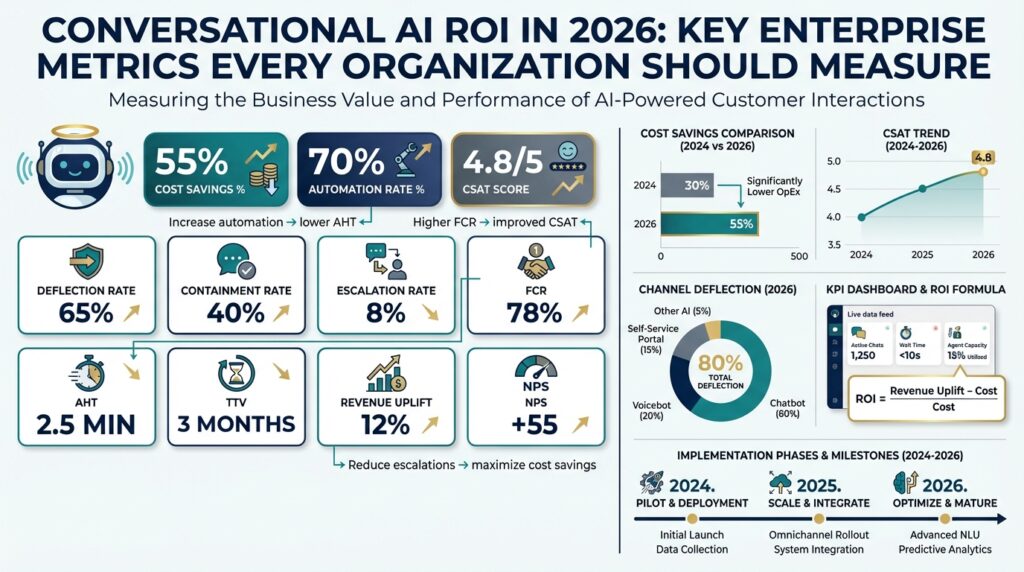

Choose outcome categories first, then specific targets. Anchor objectives to one of four business buckets: cost reduction (lower handling cost per contact), revenue generation (conversion uplift, up-sell attach), customer experience (CSAT, Net Promoter Score), and operational risk/compliance (reduced SLA breaches, auditability). For each bucket, pick a primary metric and one or two supporting metrics—for example, when your primary goal is cost reduction, use cost-per-contact as the lead KPI and containment rate plus average handle time (AHT) as supporting indicators.

Establishing a baseline and timeframe prevents ambiguous claims about lift. Measure current-state metrics for a statistically meaningful period (typically 4–8 weeks for contact centers) and lock the instrumentation before deployment to avoid retrofitting data. How do you set credible targets for revenue uplift from a virtual assistant? Use historical conversion funnels to model expected incremental lift and require at least one controlled experiment (A/B test or randomized rollout) to validate the estimate before you commit capex.

Be explicit about attribution and the math behind ROI. Don’t report gross improvements without separating organic trend from incremental effect; use control cohorts or difference-in-differences to isolate impact. Translate observed changes into dollars with a unit-economics model: incremental revenue = lift% × baseline conversions × average order value; cost savings = (baseline cost-per-contact − post-deploy cost-per-contact) × expected contact volume. Then compute ROI = (benefits − total cost) / total cost and run a sensitivity analysis on traffic, lift, and model maintenance costs.
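The unit-economics formulas above can be sketched in a few lines of Python. This is a minimal illustration, not a finance-grade model: the function names and every input value (lift, conversions, order value, contact volume, pilot cost) are hypothetical placeholders you would replace with your own baseline data.

```python
def incremental_revenue(lift_pct: float, baseline_conversions: float,
                        avg_order_value: float) -> float:
    """lift% x baseline conversions x average order value.

    lift_pct is a relative lift expressed as a fraction (0.20 means +20%).
    """
    return lift_pct * baseline_conversions * avg_order_value


def cost_savings(baseline_cpc: float, post_cpc: float,
                 contact_volume: float) -> float:
    """(baseline cost-per-contact - post-deploy cost-per-contact) x volume."""
    return (baseline_cpc - post_cpc) * contact_volume


def roi(benefits: float, total_cost: float) -> float:
    """(benefits - total cost) / total cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (benefits - total_cost) / total_cost


# Hypothetical pilot inputs, for illustration only.
revenue = incremental_revenue(0.20, 2_000, 120.0)
savings = cost_savings(5.00, 3.75, 100_000)
pilot_cost = 90_000.0
print(f"benefits=${revenue + savings:,.0f}  "
      f"ROI={roi(revenue + savings, pilot_cost):.2f}")

# One-dimensional sensitivity: vary lift while holding everything else fixed.
for lift in (0.05, 0.10, 0.20):
    benefits = incremental_revenue(lift, 2_000, 120.0) + savings
    print(f"lift={lift:.0%}  ROI={roi(benefits, pilot_cost):.2f}")
```

Keeping each formula in its own function makes it easy to rerun the same math with control-adjusted (incremental) inputs rather than gross improvements.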

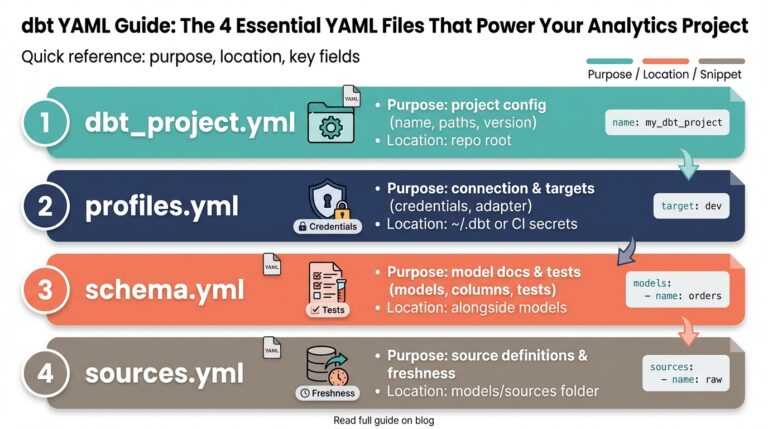

Instrument for measurement from day one; analytics quality is the difference between a convincing business case and noisy anecdotes. Define an event schema that captures session start, intent classification, handoff, resolution flag, time stamps, and revenue-touch identifiers; emit these events to your data warehouse and retain raw transcripts for sampling and QA. Roll out with an experimentation framework—canary the assistant to 10% of traffic, collect lift metrics over a minimum sample size, and only expand when results meet your pre-defined significance thresholds. Include logging, alerting, and a plan for drift detection so your metrics remain valid as models and user behavior change.
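One way to canary the assistant to a fixed share of traffic is deterministic hashing of the session identifier, so the same session always lands in the same cohort and the assignment can be reproduced later from raw events. The sketch below assumes a string `session_id`; the salt value and cohort labels are hypothetical, and hashing is one common approach rather than something this guide prescribes.

```python
import hashlib


def assign_cohort(session_id: str, canary_share: float = 0.10,
                  salt: str = "assistant-pilot-v1") -> str:
    """Deterministically map a session to 'assistant' or 'control'.

    The salt (a hypothetical experiment name) keeps assignments independent
    across experiments that reuse the same session identifiers.
    """
    digest = hashlib.sha256(f"{salt}:{session_id}".encode("utf-8")).hexdigest()
    # Take the first 32 bits of the hash and scale to a bucket in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "assistant" if bucket < canary_share else "control"


# The same session always receives the same cohort.
print(assign_cohort("session-123"), assign_cohort("session-123"))
```

Logging the returned cohort on every event gives you the experiment tag needed to compare treatment and control later.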

Set targets that are SMART and govern them rigorously. Create quarterly milestones tied to enterprise metrics, specify owners for measurement (analytics, product, finance), and require a reconciliation cadence: weekly health checks during rollout, monthly financial reconciliations, and a formal post-implementation review at 90 days. Model the break-even point and present best-case, likely, and worst-case scenarios to stakeholders so funding decisions are data-driven rather than aspirational. Taking this approach ensures your conversational AI investments are accountable, auditable, and directly tied to the enterprise metrics that matter most.

Next, we’ll map specific KPIs and implementation patterns to common enterprise use cases—sales enablement, post-sale support, and internal IT service desks—so you can pick measurement recipes tailored to the outcomes you just defined.

Track operational KPIs

Building on this foundation, the first step is to treat operational KPIs as product features: define them, instrument them, and iterate on them with the same rigor you apply to model improvements. Operational KPIs should be front-loaded into your rollout plan so dashboards, alerts, and experiment hooks exist before traffic is routed to the assistant. This prevents post-hoc measurement gaps and lets you prove incremental impact rather than relying on anecdotes.

Focus on a small set of signal-rich metrics that map directly to the business buckets we set earlier: cost-per-contact and containment rate for cost reduction, average handle time (AHT) and time-to-resolution for operational efficiency, CSAT and task success for experience, and SLA breach rate and auditability for risk. Choose one leading KPI (for example, cost-per-contact) and two supporting KPIs (containment rate and AHT) so you avoid noisy multidimensional reporting. These operational KPIs are the ones you’ll monitor daily; everything else is diagnostic.

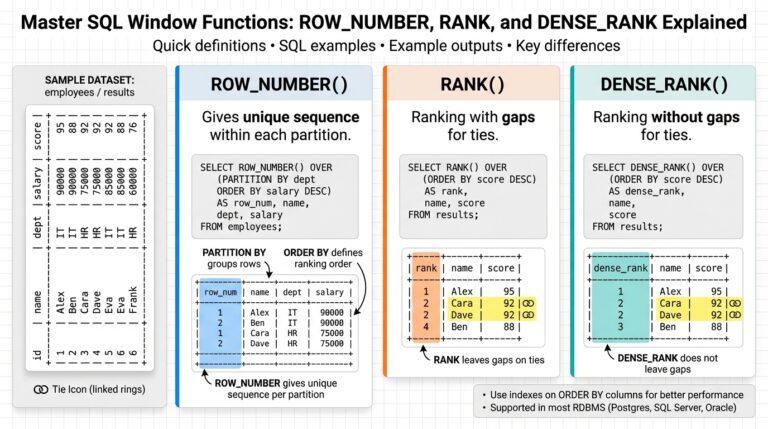

Measurement precision matters: define events and deterministic calculations. For instance, mark a session as “contained” if resolved by the assistant without human handoff and a resolution flag is set before session end; compute AHT using timestamps aggregated over resolved sessions only. Segment metrics by channel, intent, customer tier, and traffic source so you can identify where the assistant helps versus where it degrades performance. How do you ensure statistical validity? Pre-specify minimum sample sizes for A/B tests and require confidence thresholds (commonly 95%) before changing traffic splits.
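To pre-specify a minimum sample size, a common starting point is the normal-approximation formula for comparing two proportions. The sketch below assumes a two-sided test, equal-sized arms, and 80% power; those defaults and the example containment rates are assumptions, and your analytics team may prefer a different method.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control: float, p_treatment: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sessions needed per arm to detect p_control -> p_treatment.

    Uses the normal-approximation formula for a two-sided test of two
    independent proportions with equal arm sizes.
    """
    if p_control == p_treatment:
        raise ValueError("rates must differ to size the test")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_treatment - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical example: detect a containment lift from 60% to 65%.
print(sample_size_per_arm(0.60, 0.65))
```

Smaller expected effects require disproportionately more traffic, which is worth checking before promising segment-level results.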

Tie dashboards to operational workflows, not just data tables. Create a live operations dashboard with rolling windows (1h, 24h, 7d) that surfaces containment rate, AHT, escalation/handoff rate, fallback rate, and error rate. Attach playbooks to alert thresholds: if containment rate drops >10% week-over-week or escalation rate exceeds an SLO, trigger a triage workflow that collects representative transcripts, recent model versions, and deployment metadata. Give ownership for each KPI—analytics owns the metric definition, product owns targets, and ops owns on-call remediation—so accountability is explicit.
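The alert rules in this paragraph can be expressed as a small, testable function. This sketch interprets the 10% containment drop as a relative week-over-week change, which is an assumption (you may prefer percentage points), and the rule names and the escalation SLO value are hypothetical.

```python
from typing import List


def breached_rules(prev_week_containment: float,
                   this_week_containment: float,
                   escalation_rate: float,
                   escalation_slo: float,
                   max_relative_drop: float = 0.10) -> List[str]:
    """Return the names of alert rules that should trigger triage."""
    breaches = []
    if prev_week_containment > 0:
        drop = (prev_week_containment - this_week_containment) / prev_week_containment
        if drop > max_relative_drop:
            breaches.append("containment_drop")
    if escalation_rate > escalation_slo:
        breaches.append("escalation_slo")
    return breaches


# Containment fell from 60% to 52% (a 13% relative drop); escalation is within SLO.
print(breached_rules(0.60, 0.52, escalation_rate=0.18, escalation_slo=0.20))
```

A non-empty result would be the signal to start the triage workflow and attach transcripts, model versions, and deployment metadata.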

Make the math reproducible and auditable. Store simple canonical queries in your analytics repo so anyone can reproduce cost-per-contact or incremental savings. For example, containment rate can be computed with a single SQL query that aggregates session flags; incremental savings are then the measured cost-per-contact delta multiplied by post-deploy contact volume. Example SQL (simplified):

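    -- Assumes one row per session in events for the reporting window.
    SELECT
      SUM(CASE WHEN resolved_by_bot THEN 1 ELSE 0 END) AS bot_resolves,
      COUNT(session_id) AS total_sessions,
      -- 1.0 * forces decimal division; integer division would truncate the rate to 0.
      1.0 * SUM(CASE WHEN resolved_by_bot THEN 1 ELSE 0 END) / COUNT(session_id) AS containment_rate
    FROM events
    WHERE event_date BETWEEN '2026-01-01' AND '2026-01-31';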

Translate KPI changes into dollars with unit economics and sensitivity analysis. If cost-per-contact falls from $5.00 to $3.75 after deployment, compute monthly savings = (baseline_cost − new_cost) × monthly_contact_volume and then compare that to model maintenance and infrastructure spend. Run scenario analyses across traffic, lift%, and model decay to show break-even windows and to prioritize model retraining or rules adjustments.
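A break-even window follows directly from the savings formula. In the sketch below, the $5.00 and $3.75 figures come from the example above; the contact volumes, monthly run cost, and one-time cost are hypothetical values chosen only to show the scenario loop.

```python
import math
from typing import Optional


def monthly_savings(baseline_cost: float, new_cost: float,
                    monthly_contact_volume: float) -> float:
    """(baseline_cost - new_cost) x monthly_contact_volume."""
    return (baseline_cost - new_cost) * monthly_contact_volume


def break_even_months(one_time_cost: float,
                      monthly_net_benefit: float) -> Optional[int]:
    """Whole months needed to recover one_time_cost; None if never recovered."""
    if monthly_net_benefit <= 0:
        return None
    return math.ceil(one_time_cost / monthly_net_benefit)


MONTHLY_RUN_COST = 40_000.0   # hypothetical maintenance + infrastructure spend
ONE_TIME_COST = 255_000.0     # hypothetical integration cost

for volume in (20_000, 50_000, 100_000, 200_000):
    net = monthly_savings(5.00, 3.75, volume) - MONTHLY_RUN_COST
    print(f"volume={volume:>7,}  net=${net:>10,.0f}  "
          f"break_even_months={break_even_months(ONE_TIME_COST, net)}")
```

Returning `None` when net benefit is not positive makes the "never breaks even" case explicit instead of producing a misleading negative number.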

Finally, operational KPIs are living artifacts—review them on a cadence aligned with change velocity. Daily health checks catch regressions quickly; weekly trend reviews with product and finance validate business assumptions; quarterly audits reassess definitions and SLOs as usage evolves. Taking this disciplined, production-grade approach to operational KPIs ensures your conversational AI program scales with verifiable impact and makes the next step—mapping KPIs to specific sales, support, and IT workflows—straightforward and repeatable.

Measure cost and savings

Building on this foundation, start with a single, auditable cost model so your conversational AI ROI and cost savings are measurable from day one. Define cost-per-contact as a canonical unit in that model and record it across channels and segments before you deploy. Capture baseline containment rate, average handle time (AHT), and per-agent fully loaded hourly cost so every downstream calculation maps to the same inputs. Front-loading these definitions prevents post-hoc adjustments that inflate claimed savings and undermine credibility with finance.

Break costs into explicit categories so you can attribute savings correctly and avoid double-counting. Separate one-time integration and implementation expenses (data migration, labeling, connector work) from recurring operating costs (inference, hosting, licensing, monitoring, human-in-the-loop review). Include indirect headcount effects such as reduced training, lower shrinkage, and redirected FTEs when they are material, but report them as supporting benefits with clear assumptions. For example, note whether a projected FTE reduction is a redeployment or headcount avoidance—this determines how you present cash flow to stakeholders.

Convert operational improvements into dollars with simple, reproducible math. When containment improves, translate that into avoided human-handled contacts: incremental_resolves = delta_containment × monthly_contact_volume. Then incremental_savings = incremental_resolves × baseline_cost_per_contact. For example, a 10 percentage-point containment lift on 100,000 monthly contacts at a $5 baseline cost-per-contact yields 10,000 avoided human contacts and $50,000 monthly savings. Run this calculation at the channel and intent level so you can prioritize use cases that return cash fastest.
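Running the same calculation per intent makes the prioritization concrete. The sketch below reproduces the 10-point, 100,000-contact, $5 example and then ranks a few intents; the intent names and their inputs are hypothetical.

```python
def containment_savings(delta_containment: float,
                        monthly_contact_volume: float,
                        baseline_cost_per_contact: float) -> float:
    """incremental_resolves x baseline_cost_per_contact."""
    incremental_resolves = delta_containment * monthly_contact_volume
    return incremental_resolves * baseline_cost_per_contact


# The worked example from the text: 10 points on 100,000 contacts at $5.
print(containment_savings(0.10, 100_000, 5.00))

# Hypothetical per-intent inputs, ranked by monthly savings.
intents = [
    {"intent": "order_status", "delta": 0.15, "volume": 40_000, "cost": 4.00},
    {"intent": "billing", "delta": 0.05, "volume": 25_000, "cost": 7.50},
    {"intent": "returns", "delta": 0.10, "volume": 35_000, "cost": 5.00},
]
ranked = sorted(
    intents,
    key=lambda r: containment_savings(r["delta"], r["volume"], r["cost"]),
    reverse=True,
)
for row in ranked:
    saved = containment_savings(row["delta"], row["volume"], row["cost"])
    print(f'{row["intent"]:<14} ${saved:,.0f}/month')
```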

Don’t ignore time-based savings: small reductions in AHT compound into sizable FTE equivalence. Quantify AHT savings by converting seconds saved per resolved interaction into agent minutes per month, then into full-time equivalent (FTE) capacity using your organization’s minutes-per-FTE assumption. For instance, cutting AHT by 30 seconds on 200,000 monthly human-resolved contacts frees roughly 1,667 agent hours per month, or about 20,000 hours per year, which is about 9.6 FTE at 2,080 hours/year. Present both the dollar equivalent and the operational impact (e.g., reduced hiring, faster service) so decisions reflect both cash and capability.
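The AHT-to-FTE conversion is easy to get wrong by mixing monthly hours with an annual FTE denominator, so it helps to make the units explicit. This sketch assumes 2,080 working hours per FTE per year and uses the 30-second, 200,000-contact inputs from the example; the function name is hypothetical.

```python
from typing import Tuple


def aht_fte_equivalent(seconds_saved_per_contact: float,
                       monthly_contacts: float,
                       hours_per_fte_year: float = 2080.0) -> Tuple[float, float]:
    """Return (agent hours freed per month, FTE equivalent).

    Monthly hours are annualized before dividing by annual hours per FTE,
    so both sides of the ratio use the same time base.
    """
    monthly_hours = seconds_saved_per_contact * monthly_contacts / 3600.0
    annual_hours = monthly_hours * 12
    return monthly_hours, annual_hours / hours_per_fte_year


hours, fte = aht_fte_equivalent(30, 200_000)
print(f"{hours:,.0f} agent hours/month, about {fte:.1f} FTE")
```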

Account for model and infra costs transparently and run sensitivity analyses across key drivers. Aggregate total_cost = integration_costs + annualized_model_ops + annual_hosting + human_review_costs + vendor_fees, then compute ROI = (annualized_benefits − total_cost) / total_cost. Sensitivity scenarios (pessimistic/likely/optimistic) should vary traffic, lift %, and model decay to show break-even windows. When should you capitalize integration versus expense it? Follow your finance policy, but always show both treatments in your board materials so stakeholders see conservative and aggressive cases.
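The pessimistic/likely/optimistic scenarios can be generated from one function so every case uses identical math. In this sketch the cost components mirror the `total_cost` formula above, but all dollar amounts, traffic levels, lifts, and the simple "decay haircut" on lift are hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    monthly_contacts: int
    containment_lift: float  # percentage points as a fraction (0.10 = 10 pts)
    decay: float             # share of lift assumed lost to model decay


def total_cost(integration_costs: float, annualized_model_ops: float,
               annual_hosting: float, human_review_costs: float,
               vendor_fees: float) -> float:
    return (integration_costs + annualized_model_ops + annual_hosting
            + human_review_costs + vendor_fees)


def annualized_benefits(s: Scenario, baseline_cost_per_contact: float) -> float:
    effective_lift = s.containment_lift * (1 - s.decay)
    return effective_lift * s.monthly_contacts * baseline_cost_per_contact * 12


def roi(benefits: float, cost: float) -> float:
    return (benefits - cost) / cost


COST = total_cost(150_000, 120_000, 60_000, 40_000, 80_000)  # hypothetical
SCENARIOS = [
    Scenario("pessimistic", 80_000, 0.05, 0.30),
    Scenario("likely", 100_000, 0.10, 0.15),
    Scenario("optimistic", 120_000, 0.15, 0.05),
]
results = {s.name: roi(annualized_benefits(s, 5.00), COST) for s in SCENARIOS}
for name, value in results.items():
    print(f"{name:<12} ROI={value:+.2f}")
```

Showing a negative pessimistic case alongside the likely case is usually more persuasive to finance than a single point estimate.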

Instrument attribution at the event level to reconcile projected versus realized savings during rollout. Tag sessions with source, intent, experiment cohort, and resolution flag; use canonical SQL queries or analytics views to reproduce key numbers. Allocate shared costs (platform, SRE, data pipelines) proportionally by traffic or weighted by intent severity so each use case carries its fair share of burden. Reconcile monthly during rollout and publish a 90-day post-implementation financial review that compares predicted savings, realized cash flow, and variance drivers.
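Proportional allocation of shared costs is a one-line formula, but writing it down avoids disputes about the weights. The sketch below allocates by raw traffic and then by traffic multiplied by a severity weight; the use-case names, traffic counts, severity weights, and shared cost are all hypothetical.

```python
from typing import Dict


def allocate_shared_cost(shared_cost: float,
                         weights: Dict[str, float]) -> Dict[str, float]:
    """Split shared_cost across use cases in proportion to their weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: shared_cost * w / total for name, w in weights.items()}


traffic = {"sales": 20_000, "support": 70_000, "it_desk": 10_000}
severity = {"sales": 2.0, "support": 1.0, "it_desk": 1.5}

by_traffic = allocate_shared_cost(30_000.0, traffic)
by_severity = allocate_shared_cost(
    30_000.0, {name: traffic[name] * severity[name] for name in traffic}
)
print(by_traffic)
print(by_severity)
```

Whichever weighting you choose, the allocations should always sum back to the shared cost, which is an easy invariant to check in reconciliation.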

With disciplined costing, attribution, and sensitivity analysis we turn operational KPIs into trustworthy financial outcomes you can act on. Next we’ll map these measurement recipes to concrete use cases—sales enablement, post-sale support, and IT service desks—so you can apply the same cost model to prioritize high-impact deployments.

Monitor CX and satisfaction

If your conversational AI program is going to deliver measurable ROI, you must treat experience metrics as first-class financial signals rather than optional niceties. Customer experience (CX) and customer satisfaction are the interface between operational improvements and real business outcomes—improvements in containment or AHT mean little if they erode sentiment and retention. Front-load CX measurement into your rollout plan so dashboards, experiments, and sampling exist before traffic is routed; that makes the difference between defensible lift and anecdotal claims.

Building on this foundation, pick the right CX metrics and define them precisely. CSAT (Customer Satisfaction Score) is a short survey-based measure of immediate interaction satisfaction; Net Promoter Score (NPS) captures broader loyalty and referral intent. Use CSAT as a near-term health metric tied to individual sessions and NPS as a slower-moving loyalty signal you monitor at cohort level. Define survey timing, sampling rate, and weighting by customer tier so your CSAT baseline is stable and comparable across channels.

How do you attribute CX changes to the assistant versus other factors? Design attribution into experiments: holdout cohorts, randomized rollout, or difference-in-differences are non-negotiable. Control cohorts should mirror traffic sources, intent mixes, and customer segments; then measure CSAT delta, escalation rates, and downstream behaviors (repeat contacts, churn) for both groups. Instrument event-level flags—session_start, resolved_by_bot, survey_shown, survey_response—and join them to your customer table so you can run regression models that adjust for confounders like time-of-day, channel, and recent marketing touches.
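A difference-in-differences estimate subtracts the control group's change from the treated group's change, which removes trends that affected both groups. The sketch below uses plain cohort means with no regression adjustment for confounders, and the CSAT values are hypothetical.

```python
from statistics import mean
from typing import Sequence


def diff_in_diff(treat_pre: Sequence[float], treat_post: Sequence[float],
                 control_pre: Sequence[float],
                 control_post: Sequence[float]) -> float:
    """(treated change) - (control change), using simple cohort means."""
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical CSAT scores on a 5-point scale.
effect = diff_in_diff(
    treat_pre=[4.0, 4.2], treat_post=[4.5, 4.7],
    control_pre=[4.0, 4.0], control_post=[4.1, 4.3],
)
print(f"estimated CSAT effect of the assistant: {effect:+.2f}")
```

Here the treated cohort improved by 0.5 but the control cohort also improved by 0.2, so only about 0.3 is attributable to the assistant under the parallel-trends assumption.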

Translate CX movement into dollars and prioritization signals with unit economics that tie CSAT to retention. For example, if a 5-point CSAT increase on a high-value intent reduces annual churn by 1 percentage point for a cohort of 10,000 users with $1,000 annual ARPU, incremental annual revenue ≈ 0.01 × 10,000 × $1,000 = $100,000. Use conservative lift-to-revenue assumptions and run sensitivity analysis by intent and channel; this surfaces the highest-return intents to optimize first. Instrument per-intent CSAT and compare containment-adjusted CSAT to avoid optimizing for scripted wins that produce low long-term satisfaction.

Operationalize monitoring so CX signals trigger actionable workflows rather than passive reports. Build rolling windows (1h, 24h, 7d) for CSAT, task-success rate, sentiment trend, and escalation frequency; attach playbooks to threshold breaches—if CSAT drops >0.5 points on a critical intent, automatically collect representative transcripts, recent model versions, and user properties for root-cause triage. Complement quantitative alerts with regular qualitative sampling: review 5–10 transcripts per alert to confirm whether the failure is model misclassification, prompt design, external system latency, or survey bias.

Finally, close the loop between CX measurement and model/product ops with a clear cadence and ownership. Assign analytics to maintain reproducible queries, product to own CSAT targets by intent, and ops to run the triage playbooks; schedule weekly trend reviews and a formal 90-day reconciliation that compares predicted CX lift to realized revenue and retention. This continuous feedback loop—survey data, event instrumentation, controlled experiments, and qualitative review—lets you optimize conversational AI for both efficient operation and sustained customer satisfaction, preparing us to map these CX-driven KPIs to specific sales, support, and IT use cases next.

Assess revenue impact

Building on this foundation, start by treating revenue as a measurable product metric rather than an afterthought; conversational AI ROI and revenue uplift must be anchored to event-level attribution from day one. You should instrument revenue-touch identifiers (order_id, cart_value, AOV) into every session so the assistant’s influence flows through your data warehouse. This upfront instrumentation enables us to separate gross trend from incremental effect and to run the controlled experiments that finance will trust.

How do you confidently attribute a dollar of revenue to the assistant? Design experiments and analysis that directly estimate incremental revenue: randomized rollouts, holdout cohorts, or uplift models that compare treatment and control conversion funnels. Define a primary revenue metric up-front—incremental_revenue_per_session or incremental_conversions—and pre-register the calculation, sample size, and significance thresholds. For example, if baseline conversion is 2% on 100,000 sessions and the assistant increases conversion to 2.4% with $120 AOV, incremental_revenue = (0.024 − 0.02) × 100,000 × $120 = $48,000 for the test window; present this alongside confidence intervals and sensitivity checks.
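To present the point estimate alongside a confidence interval, one simple option is a normal-approximation interval on the difference in conversion rates, scaled to revenue. The sketch below reuses the 2.0% versus 2.4% and $120 AOV figures from the example and assumes 100,000 sessions in each arm, which the text does not state; the function name is hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def incremental_revenue_ci(control_rate: float, treatment_rate: float,
                           control_n: int, treatment_n: int, aov: float,
                           confidence: float = 0.95) -> Tuple[float, float, float]:
    """Return (point, low, high) incremental revenue for the treated sessions.

    Uses an unpooled normal-approximation interval for the difference of two
    independent proportions, then scales by treated sessions x AOV.
    """
    diff = treatment_rate - control_rate
    se = math.sqrt(control_rate * (1 - control_rate) / control_n
                   + treatment_rate * (1 - treatment_rate) / treatment_n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    scale = treatment_n * aov
    return diff * scale, (diff - z * se) * scale, (diff + z * se) * scale


point, low, high = incremental_revenue_ci(0.020, 0.024, 100_000, 100_000, 120.0)
print(f"incremental revenue ${point:,.0f} (95% CI ${low:,.0f} to ${high:,.0f})")
```

An interval that excludes zero supports the claim of incremental revenue; a wide interval is a signal to extend the test before scaling.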

Map intents and funnels to unit economics so revenue impact becomes actionable at the use-case level. Not all intents have equal revenue potential: a “checkout help” intent influences AOV and conversion directly, while “shipping status” impacts retention and repeat purchases. Join event-level intent tags to order tables to compute revenue-per-intent and revenue-per-session; here’s a compact analytic pattern you can adapt:

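    -- Assumes one intent per session. Events are deduplicated first so that
    -- multiple event rows per session do not double-count order_value in the join.
    WITH session_intents AS (
      SELECT DISTINCT session_id, intent
      FROM events
      WHERE event_date BETWEEN '2026-01-01' AND '2026-03-31'
    )
    SELECT intent,
      COUNT(DISTINCT session_id) AS sessions,
      COALESCE(SUM(order_value), 0) AS revenue,
      COALESCE(SUM(order_value), 0) / COUNT(DISTINCT session_id) AS revenue_per_session
    FROM session_intents
    LEFT JOIN orders USING (session_id)
    GROUP BY intent;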

Use this breakdown to prioritize development: focus on intents where small lift yields large incremental revenue. Also account for cannibalization—if the assistant shifts purchases from paid channels to organic ones, total revenue may not grow even if containment and AHT improve; build channel-aware cohorts to detect this.

Extend short-term lift to lifetime value when revenue timing matters. Some assistant interactions increase immediate conversion, others improve retention or average order frequency; convert measured CSAT and repeat-contact improvements into incremental LTV using cohort analysis. For example, estimate incremental LTV by measuring retention delta across randomized cohorts and multiply by cohort ARPU; then compare annualized incremental LTV to model ops and integration amortization to judge payback period.

Practical experiment and analysis rules matter more than clever models. Pre-specify the primary revenue metric, block by seasonality and traffic source, and require minimum sample sizes for sub-segment inference. Run heterogeneity checks—does uplift concentrate in premium customers, mobile users, or a particular channel?—and surface those segments in your roadmap so product and sales can focus optimization where dollar returns are highest.

Finally, operationalize reconciliation so finance and product share a single source of truth. Emit canonical events (session_start, intent, resolved_by_bot, order_id, order_value), maintain simple reproducible queries in an analytics repo, and automate a weekly reconciliation that compares predicted versus realized incremental revenue and calls out variance drivers. Taking this disciplined approach turns conversational AI experiments into audited financial outcomes we can scale, and prepares us to map these revenue measurements to concrete use cases in the next section.

Attribution, data, and governance

If you want stakeholders to trust the numbers you present, start by treating attribution as a first-class engineering problem rather than a post-hoc analytics exercise. Attribution must be explicit, reproducible, and tied to an auditable event stream so finance, product, and compliance can all reconcile the same facts. Building on this foundation, we’ll show how tight instrumentation plus rigorous data governance turns operational KPIs into credible financial outcomes you can act on. How do you prove a lift was truly incremental and not just seasonality or channel shift?

Building on the measurement patterns we described earlier, embed attribution into every session: session_start, intent, cohort_tag, resolved_by_bot, order_id, and revenue_touch should be canonical fields emitted by the assistant and downstream systems. Pre-register your attribution math (primary metric, lookback window, handling of multi-touch) in an experimentation plan so A/B tests or difference-in-differences analyses map directly to the event model. Avoid retrofitting instrumentation after the fact; instead, route events to a data warehouse and a streaming analytics layer so queries are deterministic and reproducible across teams. This reduces debates about “what the numbers mean” and lets us compute ROI with clear unit economics.

Design a minimal, enforceable event schema and couple it to a data governance policy that defines ownership, lineage, and retention. For example, publish a lightweight JSON schema for session events and require schema validation at ingestion so missing fields fail fast:

    {
      "session_id": "uuid",
      "timestamp": "iso8601",
      "intent": "string",
      "resolved_by_bot": true,
      "experiment_cohort": "A|B|holdout",
      "order_id": "nullable",
      "order_value": "nullable"
    }

Then tie that schema to data governance controls: enforce cataloged schemas, automated lineage metadata, and column-level access controls. Those controls let you prove which data contributed to a given ROI calculation, who changed a query, and when a model or connector was updated, which is critical for audits and regulatory needs. Keep retention rules and PII handling explicit: redact or tokenize customer identifiers at ingestion and store link tables with strict role-based access for reconciliation tasks.
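Fail-fast validation and tokenization at ingestion can be sketched with the standard library alone. The field list below follows the session-event schema shown earlier; the type choices (for example, treating `order_value` as numeric), the function names, and the keyed-hash tokenization scheme are assumptions, and a production pipeline would more likely use a schema registry or a dedicated validation library.

```python
import hashlib
import hmac
from typing import Any, Dict

REQUIRED_FIELDS = {
    "session_id": str,
    "timestamp": str,
    "intent": str,
    "resolved_by_bot": bool,
    "experiment_cohort": str,
}
NULLABLE_FIELDS = {"order_id": str, "order_value": (int, float)}
ALLOWED_COHORTS = {"A", "B", "holdout"}


def validate_event(event: Dict[str, Any]) -> Dict[str, Any]:
    """Raise ValueError on the first schema violation; return the event if valid."""
    for name, expected in REQUIRED_FIELDS.items():
        if name not in event:
            raise ValueError(f"missing required field: {name}")
        if not isinstance(event[name], expected):
            raise ValueError(f"wrong type for field: {name}")
    if event["experiment_cohort"] not in ALLOWED_COHORTS:
        raise ValueError("unknown experiment_cohort")
    for name, expected in NULLABLE_FIELDS.items():
        value = event.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if value is not None and (isinstance(value, bool)
                                  or not isinstance(value, expected)):
            raise ValueError(f"wrong type for field: {name}")
    return event


def tokenize_customer_id(customer_id: str, secret_key: bytes) -> str:
    """Replace a raw identifier with a keyed hash (HMAC-SHA256 hex digest)."""
    return hmac.new(secret_key, customer_id.encode("utf-8"),
                    hashlib.sha256).hexdigest()


event = validate_event({
    "session_id": "3f1c9a7e-0000-4000-8000-000000000001",
    "timestamp": "2026-01-15T10:30:00Z",
    "intent": "checkout_help",
    "resolved_by_bot": True,
    "experiment_cohort": "A",
    "order_id": None,
    "order_value": None,
})
token = tokenize_customer_id("customer-42", secret_key=b"example-key-only")
```

Because the token is keyed, the same customer maps to the same token for joins, while the raw identifier stays out of the analytics tables.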

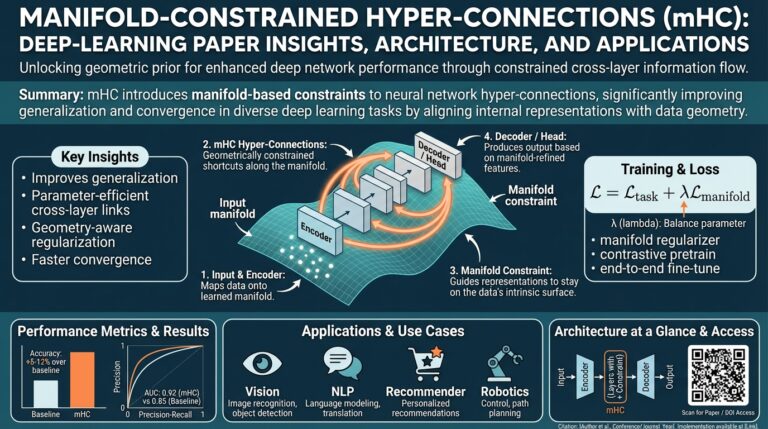

Model governance is the other half of trustworthy attribution. Version every deployed model, store associated prompts/config, and produce a machine-readable model card that records training data slices, performance by intent, and known failure modes. Instrument drift detection on both input distributions and key metrics (containment, fallback, escalation) and link alerts to a playbook that includes a sampling pipeline for labeled ground truth. Require human-in-the-loop signoff for experiments that touch high-risk intents (billing, legal, or escalations) so governance and ops remain aligned with product and legal teams.
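For drift detection on input distributions, one widely used statistic is the population stability index (PSI), applied here to the intent mix. This is one possible choice rather than something this guide prescribes; the intent names and counts are hypothetical, and any alert threshold should be tuned on your own traffic.

```python
import math
from typing import Dict


def population_stability_index(baseline_counts: Dict[str, int],
                               current_counts: Dict[str, int],
                               epsilon: float = 1e-6) -> float:
    """PSI = sum over categories of (actual - expected) * ln(actual / expected).

    Shares are floored at epsilon so categories missing from one window
    do not cause a division by zero or log of zero.
    """
    base_total = sum(baseline_counts.values())
    cur_total = sum(current_counts.values())
    if base_total <= 0 or cur_total <= 0:
        raise ValueError("both windows need at least one observation")
    psi = 0.0
    for category in set(baseline_counts) | set(current_counts):
        expected = max(baseline_counts.get(category, 0) / base_total, epsilon)
        actual = max(current_counts.get(category, 0) / cur_total, epsilon)
        psi += (actual - expected) * math.log(actual / expected)
    return psi


baseline = {"billing": 500, "shipping": 300, "other": 200}
current = {"billing": 300, "shipping": 300, "other": 400}
print(f"PSI = {population_stability_index(baseline, current):.3f}")
```

A rising PSI on the intent mix would be a reason to pull a labeled sample and check whether containment or fallback has shifted for the growing intents.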

Make financial reconciliation procedural and repeatable: keep canonical SQL or analytic views in a single analytics repo, pre-register primary metrics and sample-size rules, and automate weekly variance reports that reconcile predicted incremental savings to realized cash flows. Stress-test attribution windows and conversion lookbacks, since short windows may undercount downstream conversions and long windows may over-assign credit; run sensitivity checks and show conservative and aggressive cases to stakeholders. As in the cost model, allocate shared costs (platform, SRE, data pipelines) transparently by traffic or weighted intent severity so each use case carries its fair share of the burden.

Finally, operationalize governance with a cadence: daily health signals for containment and escalation, weekly reconciliation between analytics and finance, and quarterly audits of schema, retention, and access. Tie alerts to forensic artifacts—representative transcripts, model version, recent schema changes—so triage surfaces root cause quickly. Taking this approach ensures attribution is defensible, data governance is operational, and the ROI figures you present are auditable and actionable as you map measurement to specific sales, support, and IT use cases next.