Introduction to Pagination in MySQL

When managing large datasets in MySQL, it’s often necessary to divide results into smaller, more manageable chunks of data. This process is known as pagination. Pagination is essential for performance optimization as it helps in reducing memory usage and load times by not loading the entire dataset at once.

MySQL provides tools to help you paginate through results, the most common being the LIMIT and OFFSET clauses. To illustrate, consider an e-commerce application where fetching thousands of rows of product data at once would be cumbersome for both the server and the client. Pagination allows users to fetch, say, only 10 rows at a time.

An example query using LIMIT and OFFSET would look like this:

SELECT * FROM products ORDER BY product_id ASC LIMIT 10 OFFSET 20;

In this query, LIMIT 10 indicates that only 10 rows will be returned starting from the 21st row, defined by the OFFSET 20. This approach allows for iteration through data in chunks, making it easier to handle.
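As a rough illustration of how an application might derive these two values, the sketch below converts a 1-based page number into LIMIT and OFFSET. The helper name `limit_offset_for_page` and the default page size of 10 are assumptions made for this example, not part of any MySQL API.

```python
from typing import Tuple


def limit_offset_for_page(page: int, page_size: int = 10) -> Tuple[int, int]:
    """Return (limit, offset) for a 1-based page number (hypothetical helper)."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    return page_size, (page - 1) * page_size


# Page 3 with 10 rows per page skips the first 20 rows,
# which corresponds to LIMIT 10 OFFSET 20 in the query above.
limit, offset = limit_offset_for_page(3)

# Both values are integers computed by the code, never raw user text.
query = (
    "SELECT * FROM products ORDER BY product_id ASC "
    f"LIMIT {limit} OFFSET {offset};"
)
```

In a real application the two values would more likely be passed as bound parameters through the database driver than formatted into the string.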

However, the LIMIT and OFFSET technique has pitfalls. The main one is performance degradation on large tables: MySQL cannot jump straight to the offset, so it reads the rows that precede it and discards them. The cost of a page therefore grows with the size of the offset.

Moreover, LIMIT and OFFSET can produce inconsistent pages if the underlying data changes between requests. For example, if rows are inserted into or deleted from the products table between two pagination queries, a row may be skipped or shown twice as the user moves between pages.

To improve efficiency beyond LIMIT and OFFSET, it is beneficial to leverage indexed columns for pagination. Suppose you have a products table with a unique product_id. You could adjust the pagination query using the primary key for consistency and performance:

SELECT * FROM products WHERE product_id > 100 ORDER BY product_id ASC LIMIT 10;

In this query, pagination is achieved by filtering on the primary key. Each subsequent query uses the last retrieved product_id from the previous query as a starting point. This approach minimizes the performance overhead associated with large offsets by making use of the index.

This foundational understanding of pagination provides the basis upon which one can explore more advanced techniques and strategies to improve efficiency and scaling in complex MySQL queries. By starting with these basics, developers can build towards more sophisticated solutions that can accommodate increased data demands and reduce response times significantly.

Limitations of LIMIT and OFFSET for Pagination

Using LIMIT and OFFSET for pagination in MySQL is a widely practiced technique due to its simplicity and ease of implementation. Despite their popularity, LIMIT and OFFSET have several inherent limitations that can significantly affect performance and scalability, especially when working with extensive datasets.

One of the most striking issues with LIMIT and OFFSET is their tendency to perform poorly with large offsets. When you execute a query with OFFSET, MySQL must scan all preceding rows to reach the offset’s starting point. For example, a query like:

SELECT * FROM products ORDER BY product_id ASC LIMIT 10 OFFSET 10000;

will make MySQL read and discard the first 10,000 rows before returning the 10 requested ones. If product_id is the primary key, the rows can be read in index order without a separate sort; ordering by a non-indexed column would add a sort on top of the scan. The cost grows roughly linearly with the offset, so deep pages on large tables take noticeably longer and use more resources. The effect compounds when a user walks through many pages in succession, because each request repeats the work done for all earlier pages.

Another critical problem with LIMIT and OFFSET is the potential for data inconsistency. In dynamic environments where inserts and deletes are frequent, simple offsets can skip or duplicate records during navigation. Consider a user browsing through a set of results: if new entries are added to the table or existing ones are deleted between requests, the rows shift relative to the offsets, so the user may miss some rows or see others more than once.

To exemplify, imagine an online store where new products are often added. A user exploring products with a pagination request could see a product on page three that suddenly appears again on page four due to new entries pushing items across offsets. This inconsistency can seriously impact user experience, leading to confusion or dissatisfaction.
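The effect can be reproduced in a few lines. The sketch below is a minimal illustration, not a MySQL session: it uses Python's built-in sqlite3 module with an in-memory database as a stand-in, and the table contents, the newest-first ordering, and the helper `fetch_page` are assumptions made for the example.

```python
import sqlite3

# In-memory SQLite stands in for MySQL; LIMIT/OFFSET behaves the same way here.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (product_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
)
conn.executemany(
    "INSERT INTO products (product_id, name) VALUES (?, ?)",
    [(i, f"product {i}") for i in range(1, 31)],
)


def fetch_page(page, page_size=10):
    """Newest-first listing via LIMIT/OFFSET (1-based page number)."""
    rows = conn.execute(
        "SELECT product_id FROM products ORDER BY product_id DESC LIMIT ? OFFSET ?",
        (page_size, (page - 1) * page_size),
    ).fetchall()
    return [row[0] for row in rows]


page1 = fetch_page(1)  # ids 30 down to 21

# A new product arrives while the user is still looking at page 1.
conn.execute("INSERT INTO products (product_id, name) VALUES (31, 'product 31')")

page2 = fetch_page(2)  # ids 21 down to 12, so id 21 is shown a second time

repeated = sorted(set(page1) & set(page2))
```

Here `repeated` contains the one product that the insert pushed from the end of page 1 onto the start of page 2.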

There is also the added risk of increased load on the database server under high concurrency. Multiple users issuing paginated queries simultaneously can exacerbate the resource consumption due to the constantly repeated scans of the same datasets. This can lead to a considerable drain on system resources, increasing the potential for bottlenecks.

To circumvent these limitations, alternative strategies such as keyset pagination (also known as the “seek method”) are recommended. This approach leverages an indexed column to track the position in the dataset without using offsets. By anchoring each subsequent query to a primary key or another indexed field, you achieve both improved performance and consistency. For instance:

SELECT * FROM products WHERE product_id > 100 ORDER BY product_id ASC LIMIT 10;

Because each new query starts from the last product_id of the previous batch, MySQL can seek directly to that position in the index instead of reading and discarding skipped rows. The cost of a page then depends mainly on the page size rather than on how deep into the result set the page is.

In summary, while LIMIT and OFFSET are convenient for basic pagination, they come with significant drawbacks in efficiency and reliability when scaling to larger datasets. Understanding these limitations is crucial for database administrators and developers seeking to design performant, scalable applications.

Implementing Keyset Pagination for Improved Performance

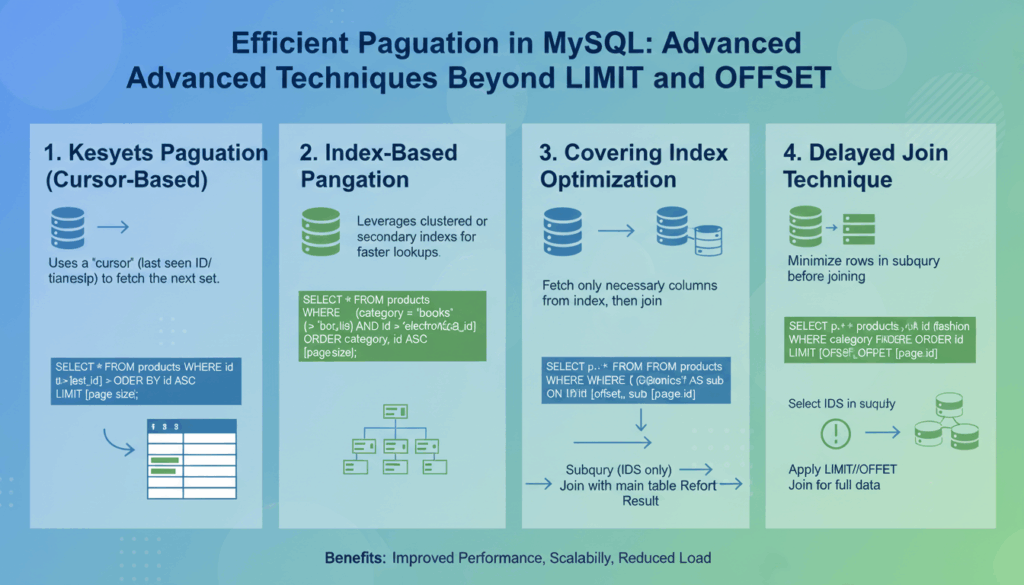

Keyset pagination, sometimes referred to as cursor-based pagination, is a method that enhances query performance and consistency by navigating through data based on the last seen value of indexed columns, rather than using the traditional LIMIT and OFFSET clauses. This approach is particularly effective for managing large datasets where conventional methods suffer from inefficiencies.

In keyset pagination, each subsequent query uses a specific indexed column to determine the starting point for fetching the next set of rows. This method ensures that the database only processes the remaining records after the last record retrieved, thereby eliminating the performance overhead of scanning and discarding rows.

Implementing Keyset Pagination

To exemplify the implementation, consider a products table with the following schema:

CREATE TABLE products (
    product_id INT PRIMARY KEY,
    name VARCHAR(255) NOT NULL,
    price DECIMAL(10, 2) NOT NULL,
    category_id INT,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

Step-by-Step Example

- Initial Query Setup:

Start by fetching the first page using a simple query on an indexed column, such as product_id.

SELECT * FROM products
ORDER BY product_id ASC
LIMIT 10;

This retrieves the first 10 rows ordered by product_id. Note the maximum product_id value from this result set (let’s say it is 10).

- Subsequent Page Retrieval:

Use the last product_id of the previous result to fetch the next set of rows. This means the query for the next page will be:

SELECT * FROM products
WHERE product_id > 10
ORDER BY product_id ASC
LIMIT 10;

By continuing this pattern, each new query uses the last fetched product_id as its starting point. This avoids reprocessing the previously retrieved rows.

- Handling Dynamic Data:

One of the significant advantages of keyset pagination is its robustness when data changes in real time. Since each page is anchored to the last seen key rather than to a row count, rows inserted or deleted earlier in the ordering do not shift the boundaries of later pages.

- Advantages:

  - Performance: By using the index, the database seeks directly to the starting row instead of scanning and discarding the rows before it, resulting in faster query execution, particularly for larger datasets.

  - Consistency: Avoids rows being repeated or skipped when other rows are inserted or deleted between requests.

  - Efficiency: Reduces server load by minimizing unnecessary data processing, particularly beneficial for high-traffic applications.
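The steps above can be sketched as a loop that carries the last seen product_id from one request to the next. This is a minimal illustration under stated assumptions: Python's built-in sqlite3 module stands in for MySQL, the table holds 25 made-up rows, and `fetch_after` is a hypothetical helper name.

```python
import sqlite3

# In-memory SQLite stands in for MySQL; the keyset query itself is unchanged.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (product_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
)
conn.executemany(
    "INSERT INTO products (product_id, name) VALUES (?, ?)",
    [(i, f"product {i}") for i in range(1, 26)],
)


def fetch_after(last_id, page_size=10):
    """Return the next page of (product_id, name) rows after last_id."""
    return conn.execute(
        "SELECT product_id, name FROM products "
        "WHERE product_id > ? ORDER BY product_id ASC LIMIT ?",
        (last_id, page_size),
    ).fetchall()


pages = []
last_id = 0  # assumes ids are positive, so 0 means "start from the beginning"
while True:
    rows = fetch_after(last_id)
    if not rows:
        break  # an empty page means the end of the data was reached
    pages.append([row[0] for row in rows])
    last_id = rows[-1][0]  # position marker for the next request
```

In a web application the value of `last_id` would typically travel to the client and back as a "next page" token rather than live in a loop variable.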

Real-world Application

In a real-world scenario, consider an endless scrolling feature on an e-commerce website. As the user scrolls, new data is fetched seamlessly without apparent load times or glitches, even as other users interact with the same dataset. The experience remains smooth due to efficient data retrieval enabled by keyset pagination.

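When implementing keyset pagination, it’s crucial to choose the right indexed columns. The column, or combination of columns, used for ordering should be unique and define a stable order over the rows. If the sort column can contain duplicates (for example, created_at), add a unique column such as product_id as a tie-breaker so that no rows are skipped or repeated at page boundaries.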
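When the sort column is not unique, one common approach is to order by a pair of columns and compare against both values from the last row seen. The sketch below is an illustration under assumptions: sqlite3 stands in for MySQL, the sample rows are invented, and `fetch_after` and `last_seen` are hypothetical names. The predicate is written in expanded form rather than as a row comparison so that it reads the same in both databases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (product_id INTEGER PRIMARY KEY, created_at TEXT NOT NULL)"
)
# Several rows share a created_at value, so created_at alone is not unique.
conn.executemany(
    "INSERT INTO products (product_id, created_at) VALUES (?, ?)",
    [(1, "2024-01-01"), (2, "2024-01-01"), (3, "2024-01-01"),
     (4, "2024-01-02"), (5, "2024-01-02")],
)


def fetch_after(last_seen, page_size=2):
    """last_seen is None for the first page, else (created_at, product_id)."""
    if last_seen is None:
        sql = ("SELECT created_at, product_id FROM products "
               "ORDER BY created_at, product_id LIMIT ?")
        params = (page_size,)
    else:
        # Later timestamp, or same timestamp with a larger id (the tie-breaker).
        sql = ("SELECT created_at, product_id FROM products "
               "WHERE created_at > ? OR (created_at = ? AND product_id > ?) "
               "ORDER BY created_at, product_id LIMIT ?")
        params = (last_seen[0], last_seen[0], last_seen[1], page_size)
    return conn.execute(sql, params).fetchall()


seen = []
last_seen = None
while True:
    rows = fetch_after(last_seen)
    if not rows:
        break
    seen.extend(row[1] for row in rows)
    last_seen = rows[-1]  # (created_at, product_id) of the last row on the page
```

Filtering on `created_at > ?` alone would skip product 3 here, because it shares its timestamp with the last row of the first page.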

To summarize, keyset pagination provides a robust solution for performance issues associated with traditional pagination methods in MySQL. Developers dealing with substantial datasets would find it a perfect fit for building scalable, responsive applications that provide a seamless user experience.

Optimizing Pagination with Proper Indexing

Efficient database query performance is critical when managing large data sets, especially for operations like pagination. Proper indexing plays a crucial role in optimizing such queries by improving data retrieval speeds and ensuring consistency.

When a query is executed, MySQL uses indexes to quickly locate data without scanning every row in a table, akin to using a book index to find information without flipping through every page. By leveraging indexes effectively, pagination operations become much more efficient, particularly as the data volume increases.

Importance of Indexing

Indexes act as pointers to rows in a database table, significantly reducing the amount of data the database engine needs to sift through to locate results. This reduction is vital in pagination, where the primary goal is to quickly retrieve small subsets of large datasets.

Without a suitable index, the database must read rows sequentially to reach the starting point of each page, which becomes increasingly time-consuming as the table grows. With an index that matches the ORDER BY and filter columns, and a query that seeks on it rather than relying on a large OFFSET, page retrieval can stay fast even as the table grows.

Creating Effective Indexes

- Identify Key Columns: Begin by identifying columns commonly used in sorting and filtering during pagination. In transactional systems, these might include primary keys or timestamp fields.

For example, if your pagination queries often involve ordering by created_at, ensure an index on this column:

CREATE INDEX idx_created_at ON products(created_at);

- Compound Indexes: When queries use multiple conditions, compound indexes (indexes on multiple columns) can help. For instance, if queries often filter by both category_id and price in addition to pagination:

CREATE INDEX idx_category_price ON products(category_id, price);

- Regularly Update Statistics: Database statistics determine how the query optimizer uses indexes. Ensure these are regularly updated to maintain optimal performance.

ANALYZE TABLE products;

Optimizing with Indexes in Pagination Queries

To enhance pagination performance with proper indexing, consider rewriting queries to prioritize indexed columns over non-indexed ones. Here’s an efficient query example that uses indexes to paginate:

SELECT * FROM products
WHERE product_id > 1000
ORDER BY product_id ASC
LIMIT 10;

Because product_id is the primary key in the schema above, it is already indexed, so MySQL can seek directly to the starting point (product_id > 1000) and read the next 10 rows in order instead of scanning the rows before it.

Monitoring and Refining

Keep monitoring the performance of indexes by observing slow query logs or using database profiling tools. Revisit indexing strategies as database workloads evolve, and introduce new indices or modify existing ones to align with changing query patterns.

Key Consideration:

– Maintenance Overheads: While indexes speed up reads, they add overhead to inserts, updates, and deletes, and they consume storage. Periodically review and remove unused indexes to keep that overhead in check.

By adopting proper indexing practices, MySQL databases can handle paginated queries more efficiently, paving the way for faster and more scalable applications. Maintaining a balance between performance optimization and hardware resources involves constant monitoring and adjustments but ultimately results in significant efficiency gains.

Advanced Techniques: Deferred Joins and Summary Tables

To improve MySQL query performance and scalability, especially in complex environments, employing advanced techniques like deferred joins and summary tables can significantly enhance pagination and overall application efficiency.

Deferred joins involve restructuring a query to delay expensive join operations until absolutely necessary. This technique comes in handy when dealing with large datasets where retrieving unnecessary columns via joins can lead to greater processing times and memory usage. Instead, the approach extracts only primary identifiers or necessary attributes first, then joins additional tables as a separate step.

How to Implement Deferred Joins

- Initial Query Filtering:

Start by selecting only the essential identifiers necessary for the initial pagination phase, typically using an indexed field. This limits the data volume being processed.

SELECT product_id
FROM products
WHERE category_id = 5
ORDER BY product_id
LIMIT 10;

This retrieves only the product_id values for products in the specified category, which keeps the first step cheap before any wider rows are fetched.

- Apply the Join Later:

Once the primary key list is obtained, join this result with other relevant tables to gather additional data fields when actually required. Note that the products table must be joined back in under the alias p so that p.name can be selected.

SELECT p.product_id, p.name, s.stock
FROM (
    SELECT product_id
    FROM products
    WHERE category_id = 5
    ORDER BY product_id
    LIMIT 10
) AS temp
JOIN products AS p ON p.product_id = temp.product_id
JOIN stock AS s ON s.product_id = temp.product_id
ORDER BY temp.product_id;

By deferring the joins, only the rows on the requested page are joined, which reduces the resource load and improves performance.

Benefits:

– Performance Boost: Reduces computing demand by limiting join operations upfront.

– Flexibility: Improves query flexibility in handling dynamically changing data.

Caveats:

– This approach might lead to more complex query maintenance.

– Designed primarily for systems where reducing initial data retrieval time significantly impacts performance.
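A deferred join is most useful when a page sits deep in the result set, since the offset is then applied to a narrow list of ids rather than to fully joined rows. The sketch below checks that the direct and deferred forms return the same page. It is an illustration under assumptions: sqlite3 stands in for MySQL, the sample data, the index name `idx_category_product`, and the alias `page` are invented for the example, and timings on a real MySQL server would need to be measured separately.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (
        product_id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        category_id INTEGER
    );
    CREATE TABLE stock (product_id INTEGER PRIMARY KEY, stock INTEGER NOT NULL);
    -- Hypothetical index so the inner query can be answered from the index alone.
    CREATE INDEX idx_category_product ON products (category_id, product_id);
""")
for i in range(1, 101):
    category = 5 if i % 2 == 0 else 7  # even ids belong to category 5
    conn.execute("INSERT INTO products VALUES (?, ?, ?)", (i, f"product {i}", category))
    conn.execute("INSERT INTO stock VALUES (?, ?)", (i, i * 3))

# Direct form: the join is applied to every row the offset walks past.
DIRECT = """
    SELECT p.product_id, p.name, s.stock
    FROM products AS p
    JOIN stock AS s ON s.product_id = p.product_id
    WHERE p.category_id = 5
    ORDER BY p.product_id
    LIMIT 10 OFFSET 20
"""

# Deferred form: page over ids first, then join only the 10 ids that remain.
DEFERRED = """
    SELECT p.product_id, p.name, s.stock
    FROM (
        SELECT product_id
        FROM products
        WHERE category_id = 5
        ORDER BY product_id
        LIMIT 10 OFFSET 20
    ) AS page
    JOIN products AS p ON p.product_id = page.product_id
    JOIN stock AS s ON s.product_id = page.product_id
    ORDER BY page.product_id
"""

direct_rows = conn.execute(DIRECT).fetchall()
deferred_rows = conn.execute(DEFERRED).fetchall()
```

Both queries return the same ten rows; the difference lies only in how much work is done for the rows that are skipped.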

Using Summary Tables

Summary tables are pre-computed tables that store aggregated data, thus minimizing repetitive computation during query execution. These tables are beneficial when dealing with aggregate data analytics in paginated views.

- Creating a Summary Table:

First, create a summary table that consolidates necessary computations. For instance, if you need frequent access to product sales data:

CREATE TABLE sales_summary AS
SELECT product_id, SUM(quantity) AS total_sales
FROM sales
GROUP BY product_id;

This table now holds a summarization of sales data for streamlined access.

- Efficient Query Utilization:

Use this summary table directly in paginated queries by joining it with the base data table to integrate aggregate insights efficiently.

SELECT p.product_id, p.name, ss.total_sales
FROM products p
JOIN sales_summary ss ON p.product_id = ss.product_id
ORDER BY ss.total_sales DESC
LIMIT 10;

Accessing aggregate data becomes faster as the summary computations have already been processed and are readily available.

Advantages:

– Speed: Dramatically reduces query time by avoiding repeated computations.

– Scalability: Decouples complex aggregates from row-level data queries, enhancing scalability across larger datasets.

Considerations:

– Maintenance: Regular updates are required to ensure summary tables reflect the latest data.

– Storage Overhead: Additional storage is required, which is generally a minor trade-off for the speed gain.
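The maintenance point is easy to see in practice: a summary table keeps returning old totals until it is rebuilt. The sketch below shows one simple full-refresh approach, as an illustration under assumptions. It uses sqlite3 as a stand-in for MySQL, the `sale_id` column and the helper names `refresh_sales_summary` and `summary_totals` are hypothetical, and a production system might instead refresh on a schedule or update totals incrementally.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (
        sale_id INTEGER PRIMARY KEY,   -- hypothetical surrogate key
        product_id INTEGER NOT NULL,
        quantity INTEGER NOT NULL
    );
    CREATE TABLE sales_summary (
        product_id INTEGER PRIMARY KEY,
        total_sales INTEGER NOT NULL
    );
""")


def refresh_sales_summary():
    """Rebuild sales_summary from sales inside one transaction."""
    with conn:  # commits on success, rolls back if an error is raised
        conn.execute("DELETE FROM sales_summary")
        conn.execute(
            "INSERT INTO sales_summary (product_id, total_sales) "
            "SELECT product_id, SUM(quantity) FROM sales GROUP BY product_id"
        )


def summary_totals():
    """Return the summary table as a {product_id: total_sales} dict."""
    return dict(conn.execute("SELECT product_id, total_sales FROM sales_summary"))


conn.executemany(
    "INSERT INTO sales (product_id, quantity) VALUES (?, ?)",
    [(1, 2), (1, 3), (2, 4)],
)
refresh_sales_summary()
before = summary_totals()

# A new sale is recorded; the summary is stale until the next refresh.
conn.execute("INSERT INTO sales (product_id, quantity) VALUES (2, 6)")
stale = summary_totals()

refresh_sales_summary()
after = summary_totals()
```

How often to refresh is a trade-off between how fresh the paginated totals need to be and how expensive the aggregate is to recompute.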

Using deferred joins and summary tables in MySQL not only optimizes the performance for large datasets but also enhances the robustness of pagination strategies beyond basic implementations.