Introduction to AI Memory Management

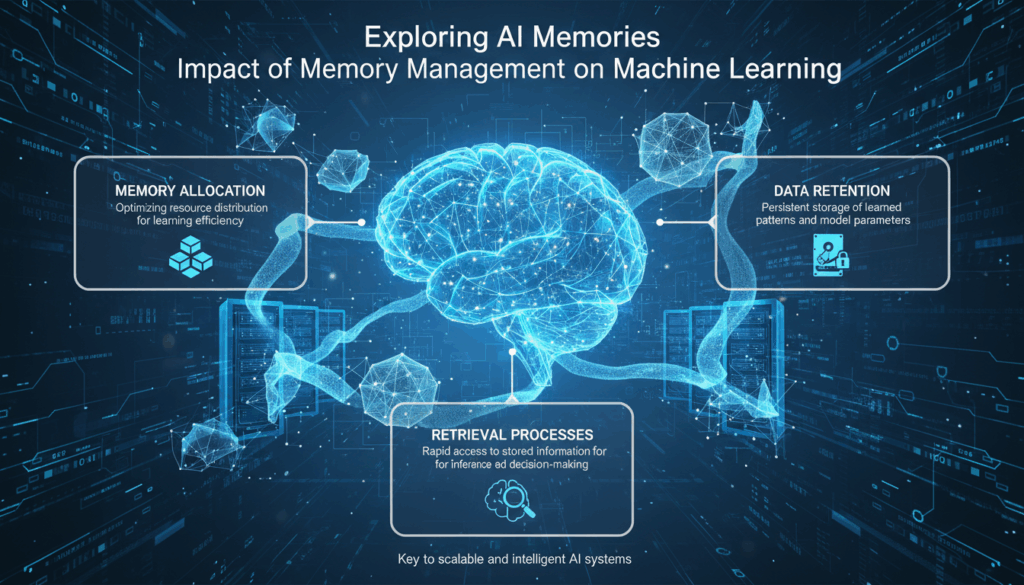

Artificial intelligence (AI) memory management is a critical component in the development and performance of machine learning systems. As models become more complex, managing memory efficiently can significantly impact system performance and scalability. AI memory management involves various strategies and techniques to optimize how data is stored, retrieved, and processed during the execution of machine learning algorithms.

In traditional computing systems, memory management focuses on allocating, tracking, and freeing memory in a way that maximizes performance and minimizes resource wastage. However, when it comes to AI, especially deep learning, the requirements and challenges become more intricate.

Machine learning models, particularly deep neural networks, operate with large datasets and require substantial computational resources to perform training tasks. The memory needs of these models can vary dramatically, depending largely on model architecture, dataset size, batching strategies, and optimization techniques. Here are some crucial aspects of AI memory management:

1. Memory Efficient Data Structures:

Developers often use specialized data structures that improve memory efficiency. Sparse representations, for instance, are used where the data contains many zero entries, as is common in natural language processing (NLP) tasks. By storing only the non-zero components and their positions, sparse matrices reduce memory consumption significantly.

2. Batch Processing Strategies:

Batch processing is a common technique to manage memory overhead during training phases. By processing data in smaller, manageable chunks (batches), it becomes feasible to train large models on limited hardware resources. The batch size directly influences memory usage; smaller batches require less memory but may prolong training time, while larger batches can accelerate training but demand more memory.

3. Gradient Checkpointing:

This technique saves memory during backpropagation by storing only a subset of intermediate activations and recomputing the rest. Instead of keeping every layer's activations in memory, gradient checkpointing stores selected ones and recalculates the others as needed during the backward pass. This approach can drastically reduce memory requirements, at the cost of extra computation for the recomputed forward segments.

4. Pruning and Quantization:

Model pruning removes weights and neurons that contribute little to the output, usually after training, which reduces complexity and memory usage, ideally with only a small effect on accuracy. Similarly, quantization reduces the precision of model weights, typically from 32-bit floating point to 8-bit integers, for models where some loss of precision is acceptable. Both techniques deliver lighter models that fit better in memory-constrained environments.

5. Memory Pinning and Optimized Data Transfers:

In environments that pair a host CPU with one or more accelerators (such as GPU clusters), the speed of data transfer between host and device memory can be a bottleneck. Memory pinning page-locks selected host buffers so that the operating system cannot swap them out, which lets the device copy from them directly and typically improves transfer speeds. Optimizing the data paths that move information between devices is also crucial to minimize latency.

6. Use of Specialized Hardware:

The advent of AI-optimized hardware such as Tensor Processing Units (TPUs) and Graphics Processing Units (GPUs) has introduced new memory management approaches. These specialized units have architectures that support efficient parallelism and high memory throughput, which helps complex models run efficiently. Memory technologies such as HBM (High Bandwidth Memory) provide the rapid access to large memory blocks that AI workloads need.

7. Dynamic Memory Allocation:

Deep learning frameworks such as TensorFlow and PyTorch can allocate device memory dynamically, letting usage scale with the current load. PyTorch requests GPU memory on demand and caches freed blocks for reuse, while TensorFlow reserves most GPU memory up front by default and can be configured to grow its allocation as needed. In both cases, memory usage can adapt to the computing requirements as they change during the model's lifecycle.

The importance of efficient memory management in AI extends beyond resource conservation to encompass performance optimization, energy efficiency, and scalability. By understanding and carefully implementing these strategies, developers enhance the capability and accessibility of complex AI models, paving the way for advances in diverse fields such as genomics, language processing, and autonomous systems.

Common Memory Challenges in Machine Learning

Machine learning, especially deep learning, presents several memory challenges that can impede performance and scalability if not properly addressed. As models grow in complexity and datasets expand, managing memory effectively becomes increasingly difficult.

One of the primary challenges is handling large-scale datasets. Machine learning algorithms, particularly those involved in training deep neural networks, require extensive computational resources to process vast amounts of data. Given the finite memory available on devices like GPUs, efficient handling of these datasets is crucial. Techniques such as data preprocessing and dimensionality reduction can help minimize the memory footprint by filtering out irrelevant or redundant data before it reaches the model.

Another significant challenge arises from model complexity. As models become more sophisticated, with deeper layers and more parameters, their memory requirements grow with the number of parameters and, during training, with the activations kept for each layer. Memory usage during the training phase is especially intensive because intermediate computations and gradients must be stored for backpropagation. Techniques like model parallelism, where different parts of the model are distributed across multiple devices, can relieve memory pressure by balancing the load and making effective use of distributed resources.
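
A back-of-the-envelope estimate makes the parameter-related part of this cost concrete. The sketch below is illustrative only: the function name and the 1-billion-parameter model are hypothetical, it assumes 32-bit values and an Adam-style optimizer that keeps two extra buffers per parameter, and it deliberately ignores activations, which depend on batch size and architecture.

```python
def training_memory_bytes(num_params, bytes_per_value=4, optimizer_states=2):
    """Rough memory for weights, gradients, and optimizer state.

    Hypothetical helper for illustration. Activations are excluded.
    optimizer_states=2 assumes an Adam-style optimizer (two moment buffers).
    """
    weights = num_params * bytes_per_value
    gradients = num_params * bytes_per_value
    optimizer = num_params * bytes_per_value * optimizer_states
    return weights + gradients + optimizer


# Hypothetical 1-billion-parameter model stored in 32-bit floats.
total = training_memory_bytes(1_000_000_000)
print(f"{total / 1e9:.0f} GB before activations")
```

Under these assumptions each parameter costs 16 bytes during training, so the total already approaches or exceeds the memory of many single GPUs before any activation is stored.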

Overhead of intermediate results is also a common issue, particularly in neural networks. Intermediate activations and gradients can take up substantial memory and aren’t needed beyond a certain scope. Using gradient checkpointing, developers can choose to store only critical activations and recompute others on demand, helping to lower memory usage without greatly impacting computational time.

Memory inefficiency due to fixed allocation is another hurdle. Many machine learning frameworks reserve a fixed amount of memory for computation, which can lead to inefficiencies, especially if the actual usage is much lower than anticipated. Dynamic memory allocation, as implemented in frameworks like PyTorch, offers a solution by allocating only as much memory as necessary at any given time.

Furthermore, models often run up against hardware limits. Where hardware is constrained, the choice of device strongly affects how much memory is available and how it is used. More capable hardware, such as GPUs with larger memory capacities or TPUs designed specifically for deep learning tasks, can offer much-needed relief. Additionally, training techniques such as mixed precision, where many calculations use half-precision (16-bit) floating-point numbers, further reduce memory usage by shrinking the data, usually without degrading model performance significantly.
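
The effect of half precision on storage, and one of its pitfalls, can be seen with plain NumPy arrays. This is only a sketch of the number formats, not of a framework's mixed-precision training loop; the array shape is an arbitrary assumption.

```python
import numpy as np

# Hypothetical activation tensor: 256 samples x 1024 features.
activations_fp32 = np.ones((256, 1024), dtype=np.float32)
activations_fp16 = activations_fp32.astype(np.float16)

print(activations_fp32.nbytes)  # bytes used in 32-bit floats
print(activations_fp16.nbytes)  # half as many bytes in 16-bit floats

# Pitfall: float16 has so few mantissa bits that a small update to a value
# near 1.0 is rounded away entirely, while float32 still registers it.
lost_update = np.float16(1.0) + np.float16(1e-4)
kept_update = np.float32(1.0) + np.float32(1e-4)
```

This rounding behavior is one reason mixed-precision schemes commonly keep a 32-bit copy of the weights for the update step while running most other calculations in 16 bits.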

Memory challenges also include scalability issues, where models that work well at a smaller scale may fail to operate effectively as they expand. This is often due to the massive increase in memory requirements, which can far exceed the original capacity planned for. Employing cloud-based resources or using distributed computing can help overcome these barriers by providing scalable solutions to accommodate growing data and model sizes.

Finally, energy consumption linked to memory usage presents another layer of challenge. Efficient memory management is concerned not only with performance but also with reducing the energy footprint, which is crucial for sustainable AI development. Methods that optimize memory utilization, like pruning and quantization, also lower energy demands as a side effect by simplifying computations and reducing resource strain.

Addressing these memory challenges requires a comprehensive strategy that combines hardware upgrades, algorithmic improvements, and efficient resource management to unlock the full potential of machine learning applications.

Techniques for Efficient Memory Utilization

Efficient memory utilization in machine learning is pivotal for enhancing the performance and scalability of AI systems. Techniques for optimizing memory usage not only conserve resources but also enable more sophisticated models to run on standard hardware. Here’s an in-depth exploration of several key techniques.

One fundamental approach is leveraging memory-efficient data structures. Many machine learning tasks involve large datasets with repeated or sparsely populated values. Data structures like sparse matrices are particularly useful in such contexts. They store only the non-zero entries and their positions, thereby reducing the overall memory footprint. For instance, in natural language processing, using sparse encodings for high-dimensional word representations can result in significant memory savings.
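
As a rough illustration, the sketch below stores a mostly-zero matrix in coordinate (COO) form using plain NumPy arrays. The matrix size and the 1% density are assumptions chosen for the example; a production system would more likely use a dedicated sparse library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 1000 x 1000 matrix with exactly 1% non-zero entries.
dense = np.zeros((1000, 1000), dtype=np.float32)
idx = rng.choice(dense.size, size=10_000, replace=False)
dense.flat[idx] = 1.0 + rng.random(idx.size).astype(np.float32)

# COO form: keep only the coordinates and values of non-zero entries.
rows, cols = np.nonzero(dense)
rows = rows.astype(np.int32)
cols = cols.astype(np.int32)
values = dense[rows, cols]

sparse_bytes = rows.nbytes + cols.nbytes + values.nbytes
print(dense.nbytes, sparse_bytes)

# The dense matrix can be rebuilt exactly from the three small arrays.
rebuilt = np.zeros_like(dense)
rebuilt[rows, cols] = values
```

Each stored entry costs three values instead of one, so the format only pays off when the data is sparse enough; at 1% density the saving here is large.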

Another crucial technique is model compression, which includes pruning and quantization. Pruning involves removing less significant weights and neurons from a neural network, effectively simplifying the model structure without major performance compromise. After the primary training phase, model weights can be pruned based on their contribution to prediction accuracy, allowing smaller and faster models without substantial accuracy loss. Quantization goes hand-in-hand with pruning by reducing the number of bits used to represent each weight. Switching from 32-bit floating-point numbers to 8-bit integers drastically decreases the memory requirements, especially when deploying models to environments with limited resources.
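
The two steps can be sketched on a single weight matrix with NumPy. This is a minimal illustration under stated assumptions, not a framework's pruning or quantization API: it uses magnitude pruning at a fixed 50% sparsity and symmetric per-tensor 8-bit quantization, and the random matrix stands in for trained weights.

```python
import numpy as np

rng = np.random.default_rng(0)
weights = rng.standard_normal((256, 256)).astype(np.float32)  # stand-in for trained weights

# Magnitude pruning: zero the half of the weights with the smallest absolute value.
threshold = np.quantile(np.abs(weights), 0.5)
pruned = np.where(np.abs(weights) >= threshold, weights, 0.0).astype(np.float32)
sparsity = float(np.mean(pruned == 0.0))

# Symmetric int8 quantization: map [-max, +max] onto the integers [-127, 127].
scale = float(np.abs(pruned).max()) / 127.0
quantized = np.clip(np.round(pruned / scale), -127, 127).astype(np.int8)

# Dequantize to measure how much precision was lost.
dequantized = quantized.astype(np.float32) * scale
max_error = float(np.abs(dequantized - pruned).max())

print(weights.nbytes, quantized.nbytes, sparsity, max_error)
```

Note that the zeros produced by pruning only save memory once the matrix is stored in a sparse or otherwise compressed format; the int8 array, by contrast, is a quarter of the original size immediately.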

Moreover, gradient checkpointing stands out as an effective method for minimizing memory use during model training. Instead of storing all intermediate activations from the forward pass, which is the default, it stores only selected ones as checkpoints. When the backward pass needs an activation that was not stored, it is recomputed from the nearest earlier checkpoint. This retains the crucial state at a reduced memory cost, in exchange for some extra computation, which is particularly useful for deep networks where memory is a bottleneck.
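
The idea can be shown on a toy chain of scalar layers in plain Python. Everything here is a simplified assumption made for illustration: real frameworks checkpoint tensors rather than floats, and the function names are hypothetical. The sketch compares a baseline that keeps every layer input with a version that keeps every fourth input and recomputes the rest segment by segment.

```python
import math


def layer(x, w):
    return math.tanh(w * x)


def layer_grad(x, w):
    """Derivative of the layer output with respect to its input x."""
    return w * (1.0 - math.tanh(w * x) ** 2)


def backward_full(x0, weights):
    """Baseline: keep every layer input, then apply the chain rule."""
    inputs = [x0]
    for w in weights[:-1]:
        inputs.append(layer(inputs[-1], w))
    grad = 1.0
    for x, w in zip(reversed(inputs), reversed(weights)):
        grad *= layer_grad(x, w)
    return grad, len(inputs)


def backward_checkpointed(x0, weights, every):
    """Keep only every `every`-th layer input and recompute the others."""
    checkpoints = {}
    x = x0
    for i, w in enumerate(weights):
        if i % every == 0:
            checkpoints[i] = x
        x = layer(x, w)

    grad = 1.0
    peak_stored = len(checkpoints)
    for start in sorted(checkpoints, reverse=True):
        end = min(start + every, len(weights))
        # Recompute this segment's inputs from its checkpoint.
        segment = [checkpoints[start]]
        for w in weights[start:end - 1]:
            segment.append(layer(segment[-1], w))
        peak_stored = max(peak_stored, len(checkpoints) + len(segment) - 1)
        for x, w in zip(reversed(segment), reversed(weights[start:end])):
            grad *= layer_grad(x, w)
    return grad, peak_stored


weights = [0.9 + 0.01 * i for i in range(16)]
grad_full, stored_full = backward_full(0.5, weights)
grad_ckpt, stored_ckpt = backward_checkpointed(0.5, weights, every=4)
print(stored_full, stored_ckpt)
```

Both versions produce the same gradient; the checkpointed one holds fewer values at its peak but runs part of the forward computation a second time.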

Batch processing strategies also play a vital role in optimizing memory utilization. Rather than feeding entire datasets into the model, data is broken down into smaller, manageable batches. This approach not only aids memory management but also contributes to stabilizing training by introducing stochastic variations. Adjusting the batch size dynamically, depending on available memory, ensures that models are trained within resource limits while maximizing computational efficiency.
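
One simple way to fit the batch size to a memory budget is to halve it until an estimate fits. The sketch below assumes a fixed, known memory cost per sample, which is a simplification, and the helper names and the 50 MB and 8 GB figures are hypothetical.

```python
def iter_batches(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def largest_fitting_batch(bytes_per_sample, budget_bytes, max_batch=1024):
    """Halve the batch size until the estimated memory fits the budget."""
    batch = max_batch
    while batch > 1 and batch * bytes_per_sample > budget_bytes:
        batch //= 2
    return batch


# Hypothetical figures: 50 MB of activations per sample, 8 GB available.
batch_size = largest_fitting_batch(50 * 10**6, 8 * 10**9)
batch_lengths = [len(b) for b in iter_batches(list(range(300)), batch_size)]
print(batch_size, batch_lengths)
```

In practice the per-sample cost is usually measured rather than assumed, for example by running one small batch and reading the framework's memory statistics.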

Use of optimized libraries and hardware acceleration can significantly bolster memory management. Libraries like NumPy and TensorFlow provide memory-conscious mechanisms; NumPy, for example, can return views of an array instead of copies. More importantly, hardware such as GPUs or TPUs capitalizes on efficient parallel processing and memory throughput. These devices are designed to accommodate the intensive memory demands of training large-scale AI models, especially when paired with optimized software.

Finally, dynamic memory allocation strategies provided by modern deep learning frameworks like PyTorch are integral. These frameworks allocate memory on demand, according to the immediate workload, rather than reserving a large static block up front. Dynamic allocation avoids the inefficiency of fixed reservations and aligns resource usage more closely with actual needs, improving overall memory utilization.

Through these strategies, developers can effectively mitigate memory constraints, enabling the execution of complex models on conventional computational platforms. Such techniques not only improve model performance and training efficiency but also expand the accessibility and applicability of machine learning solutions across diverse technological landscapes.

Impact of Memory Management on Model Performance

Effective memory management can profoundly influence machine learning model performance, particularly as it affects the ability to both train and deploy complex models efficiently. Memory-related issues can lead to slower processing, limited scalability, and even model inaccuracies, but by addressing these through focused strategies, it’s possible to enhance model throughput and predictive power.

During the training phase, memory management has a direct impact on data handling and computational workload. Large datasets are commonly processed through batch operations, which need to be optimized for memory efficiency. The selection of batch sizes is crucial, as smaller batches conserve memory but can extend training times, whereas larger batches increase training speed but demand more memory. Striking this balance determines how fully the hardware is used. For instance, dynamic batching adjusts the batch size to the available GPU memory, which helps avoid both out-of-memory failures and underused hardware.

Additionally, gradient checkpointing has a significant effect on memory use during training. By storing fewer intermediate states and recomputing them as needed, this technique can substantially reduce the memory footprint. The gradients are unchanged; they are simply computed with less memory and somewhat more computation, which lets models train on less powerful hardware without compromising learning capacity or precision.

Moreover, memory management techniques such as pruning and quantization can enhance model performance by simplifying model architectures post-training. Pruning reduces unnecessary nodes, which decreases the memory load without critically affecting model accuracy. Quantization further optimizes this by reducing the number of bits required to store individual weights, effectively decreasing memory usage. This is particularly beneficial when deploying models in resource-constrained environments, as it allows for leaner runtimes and faster inference stages.

Memory management also plays a pivotal role in the deployment phase. Models with optimized memory configurations, such as those using sparse data structures, can be more efficient and faster during inference. Sparse matrices, for example, reduce the space and time required for computation when the data is sparse enough, enabling models to operate quickly and effectively on devices with limited computational resources.

Furthermore, hardware accelerators like TPUs and GPUs, which combine high-bandwidth memory with parallel processing capabilities, offer substantial enhancements. These devices are purpose-built to handle the memory-intensive requirements of AI training, which reduces the time computations spend waiting on memory.

Finally, dynamic memory allocation, which frameworks like PyTorch and TensorFlow provide or can be configured to use, tracks the actual load of ongoing operations, reducing waste and allowing models to scale flexibly based on need. This adaptability helps models remain responsive and efficient, even as they grow in complexity or process fluctuating data volumes.

By implementing robust memory management techniques across both training and deployment phases, developers can not only enhance the efficiency and performance of AI models but also extend their reach and applicability across diverse technological environments, ultimately leading to greater innovation and capability in artificial intelligence applications.

Advanced Strategies for Memory Optimization

Memory optimization in AI models involves leveraging techniques that strategically reduce memory usage while maintaining performance. These advanced strategies include memory mapping, use of low-rank approximations, intelligent caching, asynchronous data loading, and exploration of novel deep learning frameworks optimized for memory efficiency.

Firstly, memory mapping allows large datasets or models to be accessed directly from disk rather than loading them entirely into memory. This can be particularly effective when working with datasets that far exceed the available RAM. By mapping file segments to the memory space only as needed, systems can process very large datasets efficiently. In Python, for example, libraries such as NumPy support memory mapping via numpy.memmap, which provides an interface to read large arrays from disk without full loading.
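
A minimal sketch of this pattern with numpy.memmap follows. The file name, array shape, and contents are assumptions for the example, and the file is written to a temporary directory so the snippet is self-contained.

```python
import os
import tempfile

import numpy as np

# Hypothetical on-disk dataset: 10,000 rows of 128 float32 features.
path = os.path.join(tempfile.mkdtemp(), "features.dat")
shape = (10_000, 128)

# Write the data once. Each row is filled with its own row index.
writer = np.memmap(path, dtype=np.float32, mode="w+", shape=shape)
writer[:] = np.arange(shape[0], dtype=np.float32)[:, None]
writer.flush()
del writer

# Later: map the file read-only and copy only the rows that are needed.
data = np.memmap(path, dtype=np.float32, mode="r", shape=shape)
batch = np.array(data[2_000:2_032])  # copies just these 32 rows into RAM
batch_mean = float(batch.mean())
print(batch.shape, batch_mean)
```

The mapped array behaves like an ordinary NumPy array for indexing, while the operating system decides which parts of the file are actually resident in memory.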

Low-rank approximations are another powerful method for reducing the footprint of neural networks. This involves approximating a weight matrix with the product of two smaller, lower-rank matrices, significantly reducing the number of parameters and, correspondingly, the memory requirements. In practical terms, this is often implemented by applying singular value decomposition (SVD) to the weight matrices of a network's layers, commonly followed by fine-tuning to recover accuracy. Research in this area continues to evolve, with recent improvements focusing on minimizing the loss of accuracy during decomposition.
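
The factorization can be sketched with NumPy's SVD. The weight matrix here is synthetic and is constructed to be close to rank 8, which is an assumption that makes the approximation work well; real layers may need a higher rank for acceptable accuracy.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 512 x 256 weight matrix: rank-8 structure plus a little noise.
W = rng.standard_normal((512, 8)) @ rng.standard_normal((8, 256))
W += 0.01 * rng.standard_normal((512, 256))

rank = 8
U, S, Vt = np.linalg.svd(W, full_matrices=False)

# Keep only the top `rank` singular directions: W is approximated by A @ B.
A = U[:, :rank] * S[:rank]  # shape (512, 8)
B = Vt[:rank, :]            # shape (8, 256)

original_params = W.size
compressed_params = A.size + B.size
relative_error = float(np.linalg.norm(W - A @ B) / np.linalg.norm(W))
print(original_params, compressed_params, relative_error)
```

Replacing one layer with two thin layers built from A and B keeps the same input and output sizes while storing far fewer values.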

Intelligent caching mechanisms can improve efficiency by storing the results of frequent computations, thereby avoiding redundant calculations. Because a cache trades some memory for saved computation, its size should be bounded so that it does not itself become a memory problem. Caching is particularly useful in hyperparameter tuning, where the same computations might be needed across multiple runs. Frameworks like Ray Tune use caching to optimize hyperparameter trials, reducing the need for repeated operations.
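
A bounded cache is available in the Python standard library as functools.lru_cache. The sketch below uses it for a stand-in preprocessing step shared by several trials; the function, its arguments, and the learning rates are hypothetical, and this is not how any particular tuning framework implements caching.

```python
from functools import lru_cache

calls = 0


@lru_cache(maxsize=128)  # bounded: old entries are evicted when full
def preprocess(dataset_name, n_features):
    """Stand-in for an expensive preprocessing step (hypothetical)."""
    global calls
    calls += 1
    return tuple(i * i for i in range(n_features))


# Three trials with different learning rates reuse the same features.
for learning_rate in (0.1, 0.01, 0.001):
    features = preprocess("train", 1000)

info = preprocess.cache_info()
print(calls, info.hits, info.misses)
```

The maxsize argument is the memory control: it caps how many results are kept alive at once.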

Asynchronous data loading keeps the computational pipeline fed by preparing upcoming batches in the background, so that only a bounded number of batches needs to be in memory at once and the model never waits on input. This is particularly beneficial when training on large datasets or when pre-processing operations are computationally expensive. Data loaders with asynchronous capabilities, such as PyTorch's DataLoader with multiple worker processes, ensure that data retrieval and processing do not become bottlenecks.
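
The underlying pattern can be sketched with a background thread and a bounded queue from the standard library. This is a stand-in to show the mechanism, not PyTorch's DataLoader; the function names and the toy batches are assumptions.

```python
import queue
import threading


def prefetching_loader(load_batch, num_batches, prefetch=2):
    """Yield batches while a background thread loads the next ones."""
    buffer = queue.Queue(maxsize=prefetch)  # caps how many batches sit in memory
    sentinel = object()

    def worker():
        for i in range(num_batches):
            buffer.put(load_batch(i))  # blocks while the buffer is full
        buffer.put(sentinel)

    threading.Thread(target=worker, daemon=True).start()
    while True:
        item = buffer.get()
        if item is sentinel:
            return
        yield item


def load_batch(i):
    """Hypothetical loader: a real one would read and preprocess data."""
    return [i] * 4


batch_sums = [sum(batch) for batch in prefetching_loader(load_batch, 5)]
print(batch_sums)
```

The queue size plays the same role as a prefetch setting in a framework loader: a larger value hides more loading latency but holds more batches in memory.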

Exploring newer deep learning tools that prioritize memory efficiency can lead to significant optimizations. TVM and Timeloop illustrate this direction: TVM is a compiler stack that optimizes neural network code for a range of hardware targets, including edge devices, and Timeloop is a tool for modeling and exploring how deep learning workloads map onto accelerator hardware. Such tools apply or evaluate techniques like loop transformations and multiple buffering strategies that streamline memory access patterns.

Furthermore, custom memory allocators tailored to the specific requirements of neural network operations can offer substantial improvements. These allocators pre-emptively manage and allocate memory based on probable usage patterns seen in model training iterations, thereby reducing overheads associated with typical memory allocation and freeing operations.
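
A very small version of this idea is a pool that hands back previously released buffers of the same size instead of allocating new ones. The class below is a hypothetical illustration in plain Python using bytearrays; framework allocators for device memory are far more elaborate, but they rely on a similar reuse principle.

```python
class BufferPool:
    """Reuse released buffers by size instead of allocating new ones."""

    def __init__(self):
        self._free = {}       # size -> list of released buffers
        self.allocations = 0  # number of fresh allocations performed

    def acquire(self, size):
        bucket = self._free.get(size)
        if bucket:
            return bucket.pop()
        self.allocations += 1
        return bytearray(size)

    def release(self, buf):
        self._free.setdefault(len(buf), []).append(buf)


pool = BufferPool()
for step in range(100):
    # Each hypothetical training step needs two 1 MiB scratch buffers.
    activations = pool.acquire(1 << 20)
    gradients = pool.acquire(1 << 20)
    pool.release(activations)
    pool.release(gradients)

print(pool.allocations)
```

Because training iterations tend to request the same buffer sizes over and over, almost every request after the first iteration is served from the pool.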

Parallelization and sharding, which distribute workloads across multiple processors or devices, can also be employed to optimize memory use. This involves breaking down the data and model into smaller, manageable parts that can be processed independently. Sharding the data spreads the per-batch activation memory across devices, while sharding the model spreads the parameters themselves. Toolkits like Horovod support distributed data-parallel training for both TensorFlow and PyTorch.
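
The memory effect of sharding parameters can be estimated with simple arithmetic. The helper below is a hypothetical sketch that splits a parameter count as evenly as possible across devices; it assumes 32-bit parameters and ignores the communication buffers that real sharded training also needs.

```python
def shard_sizes(num_params, num_devices):
    """Split num_params across devices so that sizes differ by at most one."""
    base, extra = divmod(num_params, num_devices)
    return [base + (1 if rank < extra else 0) for rank in range(num_devices)]


# Hypothetical model size that does not divide evenly across 8 devices.
sizes = shard_sizes(1_000_000_003, 8)
bytes_per_device = max(sizes) * 4  # 32-bit parameters
print(sizes[0], sizes[-1], bytes_per_device)
```

With eight devices, each one holds roughly an eighth of the parameters, which is what allows a model that does not fit on one device to be trained at all.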

Finally, integrating robust profiling and monitoring tools to analyze model memory usage during both the training and inference stages helps in identifying bottlenecks and opportunities for optimization. Tools such as the TensorBoard profiler or PyTorch's memory profiling tools provide insights into memory consumption patterns, allowing developers to make informed adjustments to improve efficiency.
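
The basic measure-change-measure workflow can be tried with the standard library's tracemalloc module. This is a stand-in for the framework profilers mentioned above: it only sees memory allocated by the Python process on the host, not device memory, and the buffers are placeholders for real tensors.

```python
import tracemalloc

tracemalloc.start()
baseline, _ = tracemalloc.get_traced_memory()

# Placeholder workload: five 1 MB buffers standing in for tensors.
buffers = [bytearray(1_000_000) for _ in range(5)]
current, peak = tracemalloc.get_traced_memory()

# Releasing the buffers should show up as a drop in traced memory.
del buffers
after_free, _ = tracemalloc.get_traced_memory()
tracemalloc.stop()

print(current - baseline, peak - baseline, after_free - baseline)
```

Comparing the current and peak figures before and after a change is often enough to confirm whether an optimization actually reduced memory use.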

These advanced strategies, when integrated thoughtfully, can significantly improve the memory efficiency of AI models, paving the way for faster, more resource-conscious deployments without sacrificing performance quality.