Introduction to Large Language Models (LLMs)

Large Language Models (LLMs) have become a cornerstone of artificial intelligence, particularly natural language processing. Models such as OpenAI’s GPT, Google’s BERT, and Facebook’s RoBERTa achieve strong results on tasks like translation, summarization, and question answering, thanks largely to the Transformer architecture and large-scale pre-training.

What are Large Language Models?

At their core, LLMs are neural networks designed to understand and generate human language. They typically have hundreds of millions to hundreds of billions of parameters and are pretrained on vast corpora of text. Their size and the diversity of the text they learn from give them a broad grasp of the nuances of human language.

Key Characteristics of LLMs

- Scale: Larger models tend to perform better due to their capacity to learn complex patterns in data. The sheer size allows them to process and generate more coherent text compared to smaller models.

- Transfer Learning: They are first trained on a large, general-purpose dataset and then fine-tuned for specific tasks, which makes them adaptable and improves their task performance.

- Versatility: LLMs can perform a wide array of tasks such as translation, summarization, question-answering, and even creative writing.

How Do LLMs Work?

- Pretraining:

– LLMs are first trained through a process known as unsupervised (more precisely, self-supervised) pretraining: the training signal comes from the text itself rather than from human labels. GPT-style models learn to predict the next word in a sequence, a task known as language modeling, while BERT-style models learn to fill in masked words. This step is crucial because it gives the model a foundational grasp of syntax, semantics, and context.

– Example: For a sentence like “The cat sat on the…”, the model learns probabilities for possible continuations like “mat” or “sofa.”

- Fine-Tuning:

– After pretraining, the models undergo fine-tuning on labeled datasets relevant to specific tasks. This step involves supervised learning where the model’s predictions are guided towards correct answers or outcomes.

– Example: Fine-tuning on a sentiment analysis dataset helps the model discern different emotional tones in text.
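The next-word objective described above can be sketched as a softmax over the vocabulary followed by a cross-entropy loss. This is a minimal sketch: the four-word vocabulary and the logits are made-up numbers standing in for a real model's output, and only the arithmetic reflects how the language-modeling loss is computed.

```python
import numpy as np

# Hypothetical toy vocabulary and made-up logits for the prefix "The cat sat on the"
vocab = ["mat", "sofa", "moon", "idea"]
logits = np.array([3.0, 2.0, 0.5, -1.0])

def softmax(x):
    # Subtract the max before exponentiating for numerical stability
    e = np.exp(x - np.max(x))
    return e / e.sum()

def next_word_loss(logits, target_index):
    # Cross-entropy: negative log-probability of the word that actually came next
    return -np.log(softmax(logits)[target_index])

probs = softmax(logits)
for word, p in zip(vocab, probs):
    print(f"{word}: {p:.3f}")

# A plausible continuation ("mat") gives a low loss; an implausible one ("idea") a high loss
print(next_word_loss(logits, vocab.index("mat")))
print(next_word_loss(logits, vocab.index("idea")))
```

During pretraining, the model's parameters are adjusted so that this loss, averaged over a very large number of positions in the corpus, goes down.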

Applications of LLMs

- Text Generation: LLMs are proficient at generating human-like text, which has led to applications in automated content creation and conversational agents.

- Translation Services: Services such as Google Translate rely on large neural translation models to provide real-time, high-quality language translation.

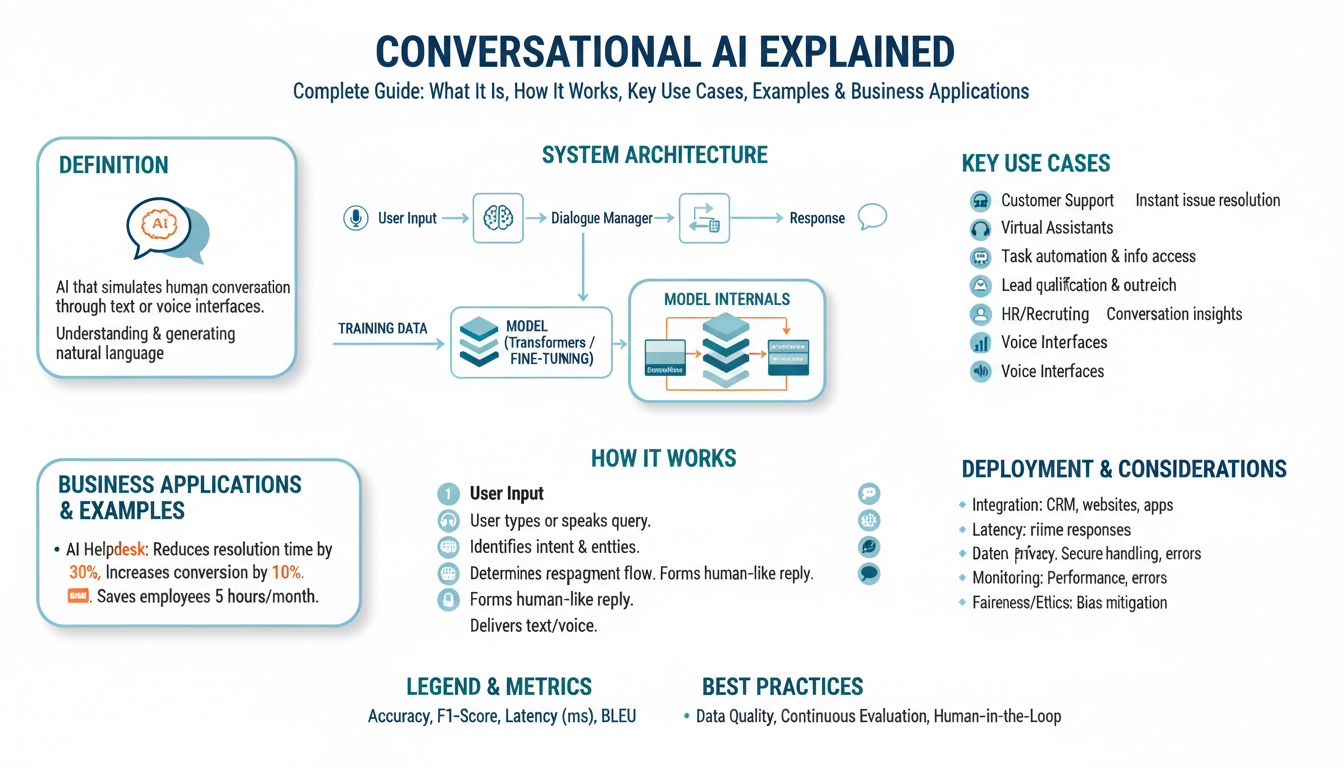

- Customer Support: Chatbots powered by LLMs can handle customer inquiries and provide support across various platforms.

Challenges and Considerations

- Bias and Fairness: LLMs inherit biases present in the data they are trained on, which can lead to unfair or inappropriate outcomes.

- Resource Intensive: Training and deploying these models require significant computational resources, which can limit their accessibility.

- Interpretability: Explaining why an LLM produces a particular output is difficult because its behavior emerges from millions or billions of learned parameters, which is why these models are often described as “black boxes.”

Despite these challenges, LLMs are continuously evolving, promising further breakthroughs in language understanding and generation. As researchers find more efficient ways to train and use these models, their impact on technology and society is likely to keep growing. Advances in this field continue to narrow the gap between machine and human language comprehension.

The Transformer Architecture and Self-Attention Mechanism

Transformer Architecture Overview

The Transformer architecture has revolutionized natural language processing (NLP): it trains far more efficiently than earlier recurrent neural networks (RNNs) and long short-term memory networks (LSTMs), and it outperforms them on most language tasks. Introduced by Vaswani et al. in the 2017 paper “Attention Is All You Need”, the Transformer is built from stacked self-attention and feedforward neural network layers.

Key Components

- Multi-Head Attention Layer

– This layer allows the model to focus on different parts of the input sentence for each word. It accomplishes this by using several attention mechanisms, or “heads,” which independently process the sequence to learn diverse representations.

– Step-by-Step Process:

- Input Embedding and Positional Encoding: The input words are first mapped to their corresponding vectors. Positional encodings are then added to these embeddings to represent word order, because attention by itself does not distinguish positions.

- Scaled Dot-Product Attention: Each attention head projects the input into query (Q), key (K), and value (V) vectors. It scores every query against every key with a dot product, scales the scores by \(\sqrt{d_k}\) (where \(d_k\) is the key dimension), applies a softmax to turn them into weights, and uses those weights to average the value vectors:

\[ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V \]

- Concatenation and Linear Transformation: The outputs from each attention head are concatenated and linearly transformed back to the original model dimension.

- Feedforward Neural Network

– After attention, the output is passed through a position-wise feedforward network. Typically, this consists of two linear transformations separated by a ReLU activation:

\[ \text{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2 \]

– This step enhances the representation by enabling the model to capture non-linear combinations of features.

- Layer Normalization and Residual Connections

– To make training stable and faster, each sub-layer (attention and feedforward) is wrapped in a residual connection, which adds the sub-layer's input to its output, and is followed by layer normalization: \( \text{LayerNorm}(x + \text{Sublayer}(x)) \). The residual path also lets gradients flow directly back to earlier layers.
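The components listed above can be wired together into a single encoder layer. The NumPy sketch below is a minimal, forward-pass-only illustration under stated assumptions: random weights, sinusoidal positional encodings as in the original paper, a post-norm arrangement, and no learnable LayerNorm parameters, dropout, or masking. All function names are hypothetical helpers, not a library API.

```python
import numpy as np

def positional_encoding(seq_len, d_model):
    # Sinusoidal encoding (assumes an even d_model):
    # PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same angle)
    pos = np.arange(seq_len)[:, None]
    two_i = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # subtract max for stability
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    # softmax(Q K^T / sqrt(d_k)) V, computed independently for each head
    d_k = Q.shape[-1]
    scores = Q @ K.swapaxes(-1, -2) / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    seq_len, d_model = X.shape
    d_k = d_model // num_heads
    def split_heads(M):
        # (seq_len, d_model) -> (num_heads, seq_len, d_k)
        return M.reshape(seq_len, num_heads, d_k).transpose(1, 0, 2)
    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    heads, _ = scaled_dot_product_attention(Q, K, V)
    # Concatenate the heads back to (seq_len, d_model), then apply the output projection
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o

def feed_forward(x, W1, b1, W2, b2):
    # FFN(x) = max(0, x W1 + b1) W2 + b2, applied to each position separately
    return np.maximum(0, x @ W1 + b1) @ W2 + b2

def layer_norm(x, eps=1e-5):
    # Normalizes each position's vector (learnable gain and bias omitted for brevity)
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def encoder_layer(X, p, num_heads):
    # Post-norm arrangement: LayerNorm(x + Sublayer(x)) after each sub-layer
    X = layer_norm(X + multi_head_attention(X, p["W_q"], p["W_k"], p["W_v"], p["W_o"], num_heads))
    return layer_norm(X + feed_forward(X, p["W1"], p["b1"], p["W2"], p["b2"]))

rng = np.random.default_rng(0)
seq_len, d_model, d_ff, num_heads = 5, 16, 64, 4
shapes = {"W_q": (d_model, d_model), "W_k": (d_model, d_model), "W_v": (d_model, d_model),
          "W_o": (d_model, d_model), "W1": (d_model, d_ff), "W2": (d_ff, d_model)}
params = {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}
params["b1"], params["b2"] = np.zeros(d_ff), np.zeros(d_model)

embeddings = rng.normal(size=(seq_len, d_model))  # placeholder token embeddings
X = embeddings + positional_encoding(seq_len, d_model)
out = encoder_layer(X, params, num_heads)
print(out.shape)  # (5, 16)
```

The output keeps the input's shape, which is what allows such layers to be stacked many times in models like BERT and GPT.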

Self-Attention Mechanism

The self-attention mechanism is at the heart of the Transformer’s ability to relate every word with every other word in a sentence, irrespective of their positional distance.

- Mechanism:

- Each word generates three vectors through learned linear transformations: Query (Q), Key (K), and Value (V). These vectors are projected into a dimension specified by the model’s settings (commonly denoted \(d_k\)).

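- The self-attention score is computed by taking the dot product of the Query vector of a particular word with the Key vector of each word in the sequence, which determines how much “attention” that word pays to every other word when it is encoded. The scores are scaled by \(\sqrt{d_k}\), normalized with a softmax, and used to form a weighted sum of the Value vectors, which becomes the word's new representation.

- Benefits: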

- Parallelization: Unlike RNNs or LSTMs, which are inherently sequential, Transformers allow full sequence parallelization during training, leading to efficient hardware utilization.

- Long-Distance Dependency Handling: Self-attention enables comprehension of dependencies between words even if they are far apart in a sentence, improving performance on tasks requiring contextual understanding.
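To make the per-word description concrete, and to show why attention parallelizes, the sketch below computes self-attention twice: once word by word (each word's query against every key) and once as a single matrix product over the whole sequence. The random embeddings and projection matrices are placeholders, not trained weights; the point is that both forms give the same result, and the second is what runs in parallel on GPUs.

```python
import numpy as np

rng = np.random.default_rng(42)
seq_len, d_model, d_k = 6, 8, 4
X = rng.normal(size=(seq_len, d_model))  # placeholder word embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

Q, K, V = X @ W_q, X @ W_k, X @ W_v  # one Query, Key, and Value vector per word

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

# Word by word: compare word i's query with every key
loop_out = np.zeros((seq_len, d_k))
for i in range(seq_len):
    scores = np.array([Q[i] @ K[j] for j in range(seq_len)]) / np.sqrt(d_k)
    weights = softmax(scores)  # how much attention word i pays to each word
    loop_out[i] = weights @ V

# All words at once: one matrix multiplication, no dependence between positions
weights_all = softmax(Q @ K.T / np.sqrt(d_k))
vec_out = weights_all @ V

print(np.allclose(loop_out, vec_out))  # True
```

Unlike the steps of an RNN, the loop iterations above do not depend on one another, which is exactly what allows the vectorized form.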

Practical Implications

- Dynamic Contextual Representations: By focusing on relationships between all words, the self-attention mechanism generates context-dependent representations of words, leading to nuanced and sophisticated language models.

- Transfer to Various Domains: Due to the modularity and effectiveness of the Transformer architecture, it has been adapted beyond NLP to areas such as image processing, drug discovery, and more.

Transformers continue to be the backbone of cutting-edge NLP systems, inspiring architectures such as BERT, GPT, and T5, which utilize enhanced versions of these components.

Training Processes: Pre-training and Fine-tuning

Understanding Pre-Training

Pre-training is a foundational phase in the development of large language models (LLMs). This process equips the model with a broad understanding of language by training it on an extensive array of text data. Here’s how pre-training unfolds:

- Objective:

– The primary goal during pre-training is to learn a general-purpose language representation. This is usually achieved with unsupervised (self-supervised) learning, where the training targets come from the text itself; models such as GPT (Generative Pre-trained Transformer), for example, learn to predict the next word in a sentence.

– Example: Given the sentence prefix "The cat sat on the", the model learns to continue with plausible words like "mat" or "sofa".

- Dataset:

– The model is exposed to large, diverse datasets consisting of billions of words. Text is sourced from books, articles, websites, and other written media to cover diverse linguistic contexts.

- Training Mechanism:

– The Transformer architecture, using self-attention mechanisms, processes this input to develop contextual relationships between words.

- Learning Features:

– During this phase, the model learns about syntax, semantics, and common structures in language, although it does not specialize in any specific task.
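No human labels are needed in this phase because the text supplies its own targets: every position's next word is the label. The sketch below turns raw text into (context, next word) training examples. It assumes whitespace tokenization and a fixed context length, both simplifications (real LLMs use subword tokenizers and much longer contexts), and `make_training_pairs` is a hypothetical helper.

```python
def make_training_pairs(tokens, context_size):
    """Turn a token sequence into (context, next_token) examples for next-word prediction."""
    pairs = []
    for i in range(1, len(tokens)):
        # Use up to `context_size` preceding tokens as the input
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

# Whitespace splitting stands in for a real subword tokenizer
tokens = "the cat sat on the mat".split()
pairs = make_training_pairs(tokens, context_size=3)
for context, target in pairs:
    print(context, "->", target)
```

For this six-word sentence the helper yields five examples, ending with `['sat', 'on', 'the'] -> mat`.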

Fine-Tuning: Tailoring for Specific Tasks

Following pre-training, models undergo fine-tuning to adapt their general knowledge to specific applications.

- Goal:

– Fine-tuning involves supervised learning, where the model’s behavior is adjusted based on labeled data to perform well in particular tasks such as sentiment analysis or entity recognition.

– Example: For sentiment analysis, sentences are labeled "positive", "negative", or "neutral", and the model is trained to map each input text to the correct label.

- Dataset:

– Smaller, task-specific datasets are used. They are far less extensive than pre-training corpora but rich in task-relevant information.

- Adjustment Process:

– The model’s parameters are updated by continuing gradient-based training on the labeled data, usually with a small learning rate. Hyperparameters must be set carefully to avoid overfitting the small dataset.

- Examples of Tasks:

– Sentiment Analysis: Understanding and classifying text based on emotional content.

– Named Entity Recognition (NER): Identifying entities such as names, organizations, and locations within text.

– Machine Translation: Translating text between languages using learned patterns and structures.

- Transfer Learning Benefits:

– Fine-tuning is a form of transfer learning: starting from pre-trained weights lets the model reach strong performance with far fewer task-specific examples than training from scratch would require.
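The fine-tuning loop can be sketched with a tiny sentiment dataset. This is a deliberately simplified, hypothetical setup: a frozen random embedding table stands in for the pre-trained model, and only a new classification head (a linear layer plus softmax) is trained with gradient descent on cross-entropy. Full fine-tuning of an LLM would usually update the pre-trained weights as well; training only the head is shown here for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained model: a frozen table of word vectors.
# In practice these representations would come from the pre-trained LLM.
words = "the movie was great fun love awful boring hate".split()
vocab = {w: i for i, w in enumerate(words)}
pretrained_embeddings = rng.normal(size=(len(vocab), 16))

def encode(sentence):
    # Mean-pool the frozen word vectors into a single sentence feature vector
    return pretrained_embeddings[[vocab[w] for w in sentence.split()]].mean(axis=0)

# Tiny labeled dataset for sentiment analysis: 1 = positive, 0 = negative
train = [("the movie was great", 1), ("love the movie", 1), ("great fun", 1),
         ("the movie was awful", 0), ("hate the movie", 0), ("the movie was boring", 0)]
X = np.stack([encode(s) for s, _ in train])
y = np.array([label for _, label in train])

# Task-specific head: linear layer + softmax over the two labels
W = np.zeros((X.shape[1], 2))
b = np.zeros(2)

def loss_and_grads(W, b):
    logits = X @ W + b
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    loss = -np.log(probs[np.arange(len(y)), y]).mean()     # cross-entropy
    d_logits = probs.copy()
    d_logits[np.arange(len(y)), y] -= 1                    # gradient of softmax + CE
    d_logits /= len(y)
    return loss, X.T @ d_logits, d_logits.sum(axis=0)

learning_rate = 0.1  # illustrative value
initial_loss, _, _ = loss_and_grads(W, b)
for step in range(300):
    _, dW, db = loss_and_grads(W, b)
    W -= learning_rate * dW
    b -= learning_rate * db
final_loss, _, _ = loss_and_grads(W, b)
print(f"training loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

The head starts at a loss of about 0.693 (a coin flip between two labels) and improves from there, using only six labeled examples because the input representations are reused rather than learned from scratch.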

Execution and Optimization

- Computational Resources:

– Both pre-training and fine-tuning are resource-intensive, requiring powerful hardware to process large volumes of data efficiently, although fine-tuning is far cheaper than pre-training. GPUs or TPUs typically provide this computation.

- Hyperparameter Tuning:

– Fine-tuning involves carefully adjusting learning rates, batch sizes, and other hyperparameters to optimize performance without overfitting.
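The learning rate is usually the most sensitive of these hyperparameters. One widely used choice for Transformer training is a linear warmup followed by a linear decay; the sketch below shows that schedule. The function name and the numbers (base rate 2e-5, 10 warmup steps, 100 total steps) are illustrative assumptions, not values from this article.

```python
def linear_warmup_decay(step, base_lr, warmup_steps, total_steps):
    """Learning rate that ramps up linearly to base_lr, then decays linearly to zero."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)

for step in [0, 5, 10, 55, 100]:
    print(step, linear_warmup_decay(step, base_lr=2e-5, warmup_steps=10, total_steps=100))
```

Warmup avoids large, destabilizing updates to the pre-trained weights at the start, and the decay lets training settle as it nears the end.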

Real-World Implications

- Accessibility: Pre-trained LLMs make sophisticated AI applications accessible to smaller organizations, because those organizations no longer need to train a model from scratch or have deep expertise in large-scale training.

- Task Efficiency: Fine-tuning lets applications reach high performance with relatively little task-specific data.

This two-stage training process makes LLMs both versatile and specializable, turning them into powerful tools for applications ranging from content generation to customer support and beyond.

Understanding Context and Semantic Relationships

Understanding how large language models (LLMs) interpret context and semantic relationships means examining how they analyze, process, and generate coherent, contextually appropriate language.

Capturing Context in Language

Effective understanding of context is crucial for any language model. Context in human language often involves understanding the relationships between words in a sentence, sentences in a paragraph, or even the cultural and situational backdrop against which words are used.

- Contextual Embeddings:

– Word Embeddings: Static embeddings such as Word2Vec or GloVe capture the syntactic and semantic meaning of words as dense vectors, placing semantically similar words close together in a multi-dimensional space. LLMs learn their own embedding layer during training, but it rests on the same idea.

```python
# Sample code: training word embeddings with gensim's Word2Vec
from gensim.models import Word2Vec

# Each training sentence is a list of tokens
sentences = [['context', 'matters', 'in', 'language'], ['language', 'models', 'are', 'advanced']]

# vector_size: embedding dimension; window: context size; min_count=1 keeps every word
model = Word2Vec(sentences, vector_size=100, window=5, min_count=1, workers=4)

vector = model.wv['context']  # Access the 100-dimensional vector for 'context'
```

– Contextualized Word Vectors: Unlike static embeddings, models like BERT generate contextualized vectors, meaning the representation of a word varies depending on the sentence. This allows for nuanced interpretation based on surrounding words.

- Attention Mechanisms:

- The self-attention mechanism in Transformers plays a key role in establishing context by relating each word to every other word in the input sequence.

- For instance, the word “bank” in:

- “He sat by the river bank.”

- “She went to deposit money in the bank.”

- Self-attention helps the model distinguish between meanings by adjusting the attention weights based on context.
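The difference between static and contextual representations of “bank” can be illustrated with a toy example. In the sketch below, the static vectors are random placeholders (one fixed vector per word, as with Word2Vec or GloVe), and `contextualize` is a hypothetical, heavily simplified self-attention step with identity Query/Key/Value projections. Real models such as BERT use learned projections, many heads, and many layers, so this shows only the principle.

```python
import numpy as np

rng = np.random.default_rng(7)
sentence_1 = "he sat by the river bank".split()
sentence_2 = "she went to deposit money in the bank".split()

# Hypothetical static embeddings: one fixed random vector per word type
static = {w: rng.normal(size=8) for w in dict.fromkeys(sentence_1 + sentence_2)}

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def contextualize(sentence, target):
    """Simplified self-attention with identity Q/K/V projections: the target word's
    new vector is an attention-weighted average of every word vector in the sentence."""
    vectors = np.stack([static[w] for w in sentence])
    query = static[target]
    weights = softmax(vectors @ query / np.sqrt(len(query)))
    return weights @ vectors

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

bank_1 = contextualize(sentence_1, "bank")
bank_2 = contextualize(sentence_2, "bank")
print("static:", cosine(static["bank"], static["bank"]))  # the same vector in both sentences
print("contextual:", cosine(bank_1, bank_2))               # differs because the contexts differ
```

The static vector for “bank” is identical in both sentences, while the attention-mixed vectors pull in “river” in one case and “deposit” and “money” in the other.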

Semantic Relationships

Semantic understanding requires the model to go beyond word-to-word interactions and grasp deeper relationships.

- Semantic Role Labeling:

- Involves identifying roles played by entities in a sentence, like who did what to whom, when, and where.

- Helps in tasks like information extraction, allowing the model to pull out entities and their interactions based on semantic meaning.

\[ \text{Agent (John)} \rightarrow \text{Verb (bought)} \rightarrow \text{Object (car)} \]

- Relation Extraction:

- Models are trained to identify and categorize relationships between entities in a text. For example, detecting the relationship “Author” between “J.K. Rowling” and “Harry Potter.”

- Utilizes patterns learned during pre-training and fine-tuning stages to predict relationships based on input data.
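The outputs of both tasks are structured data rather than free text. The sketch below shows one plausible way to represent them; the class names, the `author_of` relation label, and the hand-written instances are hypothetical and were not produced by a model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SemanticFrame:
    """One predicate and its labeled arguments: who did what to whom."""
    predicate: str
    agent: str
    theme: str  # the thing acted upon (the "Object" role above)

@dataclass(frozen=True)
class RelationTriple:
    """A typed relationship between two entities: (head, relation, tail)."""
    head: str
    relation: str
    tail: str

# Hand-written outputs for the examples above (not produced by a model)
frame = SemanticFrame(predicate="bought", agent="John", theme="car")
facts = {RelationTriple("J.K. Rowling", "author_of", "Harry Potter")}

# Structured output can be queried directly or loaded into a knowledge graph
authors = [t.head for t in facts if t.relation == "author_of" and t.tail == "Harry Potter"]
print(frame.agent, frame.predicate, frame.theme)  # John bought car
print(authors)                                    # ['J.K. Rowling']
```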

Challenges and Solutions

- Ambiguity:

- Natural language is inherently ambiguous. Words may have different meanings depending on context, and resolving them requires sophisticated disambiguation techniques.

- Cross-Sentence Context:

- Understanding context across multiple sentences remains difficult. Models are increasingly equipped with memory mechanisms or longer context windows to improve comprehension of long texts.

- Incorporating World Knowledge:

- External knowledge bases (like Knowledge Graphs) are integrated to provide contextual data that is not explicit in the text but understood culturally or situationally.
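A related practical issue is that any model's context window is finite. One common workaround when a text is longer than the window is to process it in overlapping chunks so that each chunk carries some of the preceding context. The sketch below is a generic version of that idea, not a memory architecture; `sliding_windows` is a hypothetical helper, and the integers stand in for token IDs.

```python
def sliding_windows(tokens, window_size, stride):
    """Split a long token sequence into overlapping windows that each fit a fixed context."""
    if stride <= 0 or stride > window_size:
        raise ValueError("stride must be between 1 and window_size so no tokens are skipped")
    windows = []
    start = 0
    while True:
        windows.append(tokens[start:start + window_size])
        if start + window_size >= len(tokens):
            break  # the last window reaches the end of the text
        start += stride
    return windows

tokens = list(range(10))  # stand-in for 10 token IDs
for window in sliding_windows(tokens, window_size=4, stride=3):
    print(window)
```

With a window of 4 and a stride of 3, consecutive chunks share one token; a smaller stride carries more context across chunk boundaries at the cost of more computation.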

By comprehensively understanding context and semantic relationships, large language models achieve a more holistic grasp of language, approximating human-like processing and comprehension. This enables them to perform complex tasks such as dialogue generation, text summarization, and high-level reasoning.

Challenges in Language Interpretation and Ambiguities

Interpreting Ambiguities in Language

In natural language processing (NLP), ambiguities present a significant challenge for models attempting to accurately understand and interpret human language. These ambiguities arise due to the inherent complexity and variability in how language is used. Understanding these facets is crucial for improving the performance of large language models (LLMs).

Types of Linguistic Ambiguities

- Lexical Ambiguity:

– Occurs when a single word has multiple meanings.

– Example: The word “bark” can refer to the sound a dog makes or the outer covering of a tree. Disambiguation requires context.

```plaintext
“The dog barked loudly all night.”
“The bark of the tree was rough and thick.”
```

- Syntactic Ambiguity:

– Arises when a sentence can be parsed in multiple ways due to its structure.

– Example: “Visiting relatives can be boring.” This could mean that you find it boring to visit relatives, or that relatives who come to visit you can be boring.

```plaintext
- Parse 1: [Visiting relatives] is a gerund phrase (the act of visiting relatives) [can be boring].
- Parse 2: [Visiting relatives] is a noun phrase with "visiting" as an adjective (relatives who visit) [can be boring].
```

- Semantic Ambiguity:

– Occurs when the meaning of a sentence can be interpreted in more than one way.

– Example: “I saw the man with the telescope.” This could mean that you used a telescope to see the man, or that the man has a telescope.

```plaintext
- Interpretation 1: I saw [the man] [with the telescope].
- Interpretation 2: I saw [the man with the telescope].
```

- Pragmatic Ambiguity:

– Involves understanding the intended meaning or context beyond the literal interpretation of words.

– Example: “Can you pass the salt?” This is pragmatically understood as a request rather than a question about ability.
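The telescope sentence is, at bottom, a prepositional-phrase attachment ambiguity: “with the telescope” can attach to the verb phrase or to the noun phrase. The sketch below counts the parse trees a CKY chart parser finds for it. The grammar is a hypothetical toy in Chomsky normal form, just large enough for this sentence; real parsers use far larger, usually probabilistic, grammars and pick the most likely tree.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (hypothetical, just large enough for this sentence)
binary_rules = [
    ("S", "NP", "VP"),
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),  # "the man [with the telescope]": the man has the telescope
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),  # "saw the man [with the telescope]": the telescope was used to see
    ("PP", "P", "NP"),
]
lexicon = {"I": ["NP"], "saw": ["V"], "the": ["Det"],
           "man": ["N"], "telescope": ["N"], "with": ["P"]}

def count_parses(words, start_symbol="S"):
    """CKY chart parsing that counts, rather than builds, the parse trees."""
    n = len(words)
    # chart[i][j][A] = number of ways nonterminal A can derive words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for tag in lexicon[word]:
            chart[i][i + 1][tag] += 1
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):  # every split point of the span
                for parent, left, right in binary_rules:
                    chart[i][j][parent] += chart[i][k][left] * chart[k][j][right]
    return chart[0][n][start_symbol]

print(count_parses("I saw the man with the telescope".split()))  # 2
print(count_parses("I saw the man".split()))                     # 1
```

The two counted trees correspond to the two interpretations listed above; choosing between them is the disambiguation step that requires context.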

Challenges in Resolving Ambiguities

- Context Sensitivity:

- Proper disambiguation often requires an understanding of broader context, including prior conversation, author intention, or situational context.

- Example: In a dialogue, “bank” needs context to denote either a financial institution or the bank of a river.

- Commonsense Knowledge:

- Solving ambiguities often relies on commonsense reasoning, an area where many LLMs are still developing.

- Example: “The glass is light” could refer to weight or color, needing common sense to infer meaning from situational context.

- Multiple Interpretational Layers:

- Ambiguities often layer, requiring models to handle nested or sequential ambiguities.

- Example: “Leave the door open when you leave” involves sequential processing of actions and intents.

Approaches to Mitigating Ambiguities

- Enhanced Contextual Embeddings:

- Use embeddings that account for surrounding text to infer meaning accurately. BERT and similar models create contextual embeddings by considering the entire sentence or paragraph.

- Incorporating External World Knowledge:

- Use external databases and knowledge graphs to augment linguistic inputs with factual and situational information.

- Technique Example: Tools such as Google’s Knowledge Graph can relate “Apple” to either the tech company or the fruit.

- Advanced Parsing Techniques:

- Use syntactic parsers that enumerate the possible interpretations of a sentence.

- Dependency parsing can help resolve grammatical ambiguities.

- Self-supervised Learning on Diverse Corpora:

- Training LLMs on diverse datasets makes them more resilient to ambiguities, enabling them to handle varied linguistic inputs effectively.
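A classic way to use external knowledge for disambiguation is the simplified Lesk algorithm: choose the word sense whose dictionary definition (gloss) shares the most words with the surrounding sentence. It is a knowledge-based technique that predates LLMs and is not how BERT-style models resolve ambiguity, but it shows how an external resource can settle the “bank” example. The two-entry sense inventory and the stopword list below are hypothetical stand-ins for a real lexical database.

```python
# Hypothetical mini sense inventory standing in for an external lexical resource
SENSES = {
    "bank": {
        "financial_institution": "an institution where people deposit money and take out loans",
        "river_bank": "the sloping land beside a river or lake where water meets the shore",
    }
}

# Small illustrative stopword list; function words should not count as overlap
STOPWORDS = {"a", "an", "the", "in", "to", "of", "and", "or", "by",
             "he", "she", "where", "people", "out"}

def content_words(text):
    return {w.strip(".,").lower() for w in text.split()} - STOPWORDS

def simplified_lesk(word, sentence):
    """Pick the sense whose gloss shares the most content words with the sentence."""
    context = content_words(sentence)
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense

print(simplified_lesk("bank", "He sat by the river bank."))
print(simplified_lesk("bank", "She went to deposit money in the bank."))
```

The first sentence overlaps the river gloss on “river”; the second overlaps the financial gloss on “deposit” and “money”.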

Conclusion

Understanding and mitigating these ambiguities is central to enhancing the efficacy of large language models in nuanced and sophisticated communication tasks. Researchers continually develop intricate models and strategies to bridge these interpretational gaps, pushing the boundaries of what LLMs can achieve in real-world applications. By improving on these fronts, language models will better approximate the human ability to navigate the complex landscape of natural language.

Evaluating LLMs’ Comprehension and Performance

Evaluating Comprehension and Performance in LLMs

Evaluating the comprehension and performance of Large Language Models (LLMs) is a multifaceted task that involves analyzing how well these models understand and generate human language. Here’s how this evaluation can be structured:

Metrics for Evaluation

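- Perplexity:

– Definition: A measurement of how well a probability model predicts a sample.

– Usage: Lower perplexity indicates better predictive performance: the model assigns higher probability to the text it is evaluated on.

– Calculation: Perplexity is the exponential of the average negative log-probability the model assigns to each observed token.

```python
import numpy as np

def calculate_perplexity(probabilities):
    # probabilities: the model's probability for each actual next token in the text
    probabilities = np.asarray(probabilities, dtype=float)
    cross_entropy = -np.mean(np.log(probabilities))  # average negative log-likelihood (nats)
    return np.exp(cross_entropy)

# A model spreading probability evenly over 4 choices has a perplexity of about 4
print(calculate_perplexity([0.25, 0.25, 0.25, 0.25]))
```

- BLEU Score (Bilingual Evaluation Understudy):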

– Purpose: Often used to evaluate the quality of text that has been machine-translated from one language to another.

– Method: Compares n-grams of the generated text with those in reference texts to find the level of overlap.

– Interpretation: Higher BLEU scores represent greater similarity with the reference texts.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation):

– Purpose: Measures n-gram overlap between generated and reference text. As the name says, it is recall-oriented (how much of the reference the output covers), though precision and F1 variants are also reported.

– Evaluation: Preferred for tasks such as summarization, where the model output needs to capture key phrases or segments of the reference text.

- Accuracy and F1-Score:

– Application: Used extensively in classification tasks such as sentiment analysis or named entity recognition.

– F1-Score Calculation:

\[ \text{F1} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \]
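The overlap metrics above share a common core: counting n-grams that appear in both the model output and a reference. The sketch below computes BLEU-style clipped n-gram precision, ROUGE-N recall, and the F1 combination for a single reference. It shows only the building blocks; full BLEU also combines precisions for n = 1 to 4 with a geometric mean and a brevity penalty, and established implementations should be preferred for reported scores. The function names are illustrative.

```python
from collections import Counter

def ngrams(tokens, n):
    # Multiset of all contiguous n-token sequences
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def clipped_overlap(candidate, reference, n):
    # Each candidate n-gram is credited at most as often as it occurs in the reference
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    return sum(min(count, ref[g]) for g, count in cand.items()), cand, ref

def ngram_precision(candidate, reference, n):
    """BLEU-style modified (clipped) n-gram precision against one reference."""
    overlap, cand, _ = clipped_overlap(candidate, reference, n)
    return overlap / max(1, sum(cand.values()))

def rouge_n_recall(candidate, reference, n):
    """ROUGE-N recall: fraction of the reference's n-grams found in the candidate."""
    overlap, _, ref = clipped_overlap(candidate, reference, n)
    return overlap / max(1, sum(ref.values()))

def f1(precision, recall):
    return 0.0 if precision + recall == 0 else 2 * precision * recall / (precision + recall)

candidate = "the cat sat on the mat".split()
reference = "the cat is on the mat".split()
p2, r2 = ngram_precision(candidate, reference, 2), rouge_n_recall(candidate, reference, 2)
print(ngram_precision(candidate, reference, 1))  # 5 of 6 unigrams match
print(p2, r2, f1(p2, r2))                        # 3 of 5 bigrams match in each direction
```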

Benchmarks and Datasets

- GLUE (General Language Understanding Evaluation):

- Role: Provides a suite of tasks for evaluating natural language understanding systems.

- Components: Includes tasks like textual entailment, sentence similarity, and sentiment analysis.

- SuperGLUE:

- A successor to GLUE, offering more difficult tasks to assess deeper language understanding.

- LMSYS Chatbot Arena:

- Evaluates conversational ability by showing users responses from two anonymous models side by side and asking which one is better.

- Aggregates these pairwise human votes into Elo-style ratings that rank models by overall response quality.
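Pairwise votes of this kind can be turned into ratings with the classic Elo update, sketched below. The K-factor of 32, the starting rating of 1000, and the model names are illustrative assumptions; the leaderboard's own computation may differ in its details.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))

def elo_update(rating_a, rating_b, score_a, k=32):
    """Update both ratings after one vote; score_a is 1 (A wins), 0.5 (tie), or 0 (B wins)."""
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1 - score_a) - (1 - exp_a))
    return new_a, new_b

ratings = {"model_x": 1000.0, "model_y": 1000.0}  # hypothetical models
votes = [("model_x", "model_y", 1), ("model_x", "model_y", 1), ("model_x", "model_y", 0)]
for a, b, score in votes:
    ratings[a], ratings[b] = elo_update(ratings[a], ratings[b], score)
print(ratings)
```

An upset (a lower-rated model winning) moves the ratings more than an expected result, so the ranking stabilizes as votes accumulate.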

Human Evaluation

- Crowdsourced Evaluation:

– Using platforms like Amazon Mechanical Turk to have humans assess output quality, accuracy, and naturalness.

- Expert Review:

– Involves professionals in linguistics or the relevant domain analyzing model outputs for nuanced comprehension and task performance.

Real-world Use Cases

- Customer Service Automation:

- Evaluated on the model’s ability to resolve issues accurately and maintain customer satisfaction.

- Content Creation:

- Performance assessed by creativity, coherence, and alignment with input prompts in content generation tasks.

Challenges in Evaluation

- Subjectivity in Human Judgments: People’s opinions on what constitutes “good” language can vary widely.

- Ambiguous Outputs: Determining the correctness when multiple plausible outputs exist.

- Bias in Datasets: Intrinsic biases in training datasets can skew results, necessitating careful consideration of the sources and representations.
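Subjectivity in human judgments is commonly quantified with inter-annotator agreement. The sketch below computes Cohen's kappa for two annotators, which corrects raw agreement for the agreement expected by chance given each annotator's label frequencies. The ratings are made-up examples.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Agreement between two annotators, corrected for agreement expected by chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)

# Made-up quality judgments of four model outputs by two annotators
a = ["good", "good", "bad", "bad"]
b = ["good", "bad", "bad", "bad"]
print(cohens_kappa(a, b))  # 0.5
```

Here the annotators agree on 75% of items, but half of that agreement would be expected by chance, so kappa is 0.5; low kappa values signal that evaluation guidelines need tightening.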

Conclusion

Evaluating LLMs involves combining quantitative metrics with qualitative human assessments to gain a well-rounded view of their capabilities and limitations. These evaluations guide the refinement of models toward better comprehension, for example resolving ambiguities and strengthening contextual understanding.