What Is Generative AI?

Generative AI refers to a class of artificial intelligence systems designed to create new content, whether it’s text, images, music, or even code, by learning from vast amounts of data. Unlike traditional AI systems, which focus on analyzing data and making predictions, generative AI systems learn the underlying patterns of their input data and leverage those insights to generate entirely new outputs that didn’t exist before.

At its core, generative AI uses advanced machine learning techniques, notably deep learning models such as Generative Adversarial Networks (GANs) and transformer architectures. These technologies enable software like ChatGPT and DALL·E to produce convincingly human-like text or original works of digital art from short prompts.

Here’s how generative AI commonly works:

- Training on massive datasets: To generate convincing outputs, these AI models are “trained” on expansive datasets containing examples of text, images, sounds, or other media. For instance, a language model like ChatGPT learns by analyzing billions of sentences and passages from books, articles, websites, and more.

- Learning complex patterns: Through training, the AI model identifies statistical patterns and relationships—such as grammar rules, semantics, tone, and visual composition—enabling it to reconstruct and recombine elements in new ways.

- Generating new content: When prompted (say, with a phrase, question, or idea), the AI draws on what it learned to assemble original responses or creations. For example, given a prompt to “write a poem about sunsets,” ChatGPT composes a unique poem, while DALL·E might produce a fresh digital painting based on a textual description.
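The three stages above can be sketched at toy scale. The example below is a minimal illustration, not how ChatGPT or DALL·E is built: it assumes a tiny made-up corpus and a simple bigram (word-pair) model, and the function names `train_bigram_model` and `generate` are hypothetical. It "trains" by counting which word follows which, then generates text by sampling from those learned counts.

```python
import random
from collections import defaultdict

def train_bigram_model(corpus):
    """Training: count how often each word is followed by each other word."""
    counts = defaultdict(lambda: defaultdict(int))
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def generate(counts, start, max_words=8, seed=0):
    """Generation: repeatedly sample a next word in proportion to learned counts."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(max_words - 1):
        followers = counts.get(words[-1])
        if not followers:
            break  # the model never saw a continuation for this word
        choices = list(followers.keys())
        weights = list(followers.values())
        words.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(words)

# Made-up training data (illustrative only).
corpus = [
    "the sun sets over the sea",
    "the sun rises over the hills",
    "the sea glows at sunset",
]
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

Real generative models replace the count table with a neural network that has billions of parameters, but the loop of learning patterns and then sampling from them follows the same outline.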

The applications of generative AI are wide-ranging and rapidly expanding, from automating creative tasks in industries like marketing and entertainment to assisting scientists in drug discovery by generating novel molecular structures that could be used as new medicines. For a deeper dive into generative AI’s technical foundations, Microsoft’s guide on Generative AI Concepts is a reputable resource.

Through their ability to both analyze and create, generative AI systems open up a world of innovation, yet they also prompt important discussions about authorship, ethics, and copyright in the digital age. As the technology progresses, staying informed about the ethical considerations and practical uses of generative AI becomes increasingly crucial for developers and users alike.

How Generative AI Differs From Traditional AI

Unlike traditional AI systems, which are typically designed to classify, sort, or make predictions based on input data, generative AI goes a step further by producing brand-new content that has never existed before. This fascinating leap in AI capability has unlocked creative potential across industries, from natural language processing to digital art. But what sets generative AI apart from its traditional counterparts?

Traditional AI is primarily characterized by its ability to analyze existing data and return a result from a limited set of predefined outputs. For example, a typical image recognition AI might be trained to recognize cats or dogs in pictures, but it cannot create a new image of a cat or imagine a dog wearing sunglasses. Its intelligence is based on finding patterns and making predictions or classifications based on what it has been shown. You can read more about traditional AI approaches in this detailed Brookings overview.

In contrast, generative AI uses complex models, most notably large language models (LLMs) and generative adversarial networks (GANs), to craft original material, ranging from text and images to music and synthetic data. These models are not limited to analyzing data; they are trained to capture the structure and style of enormous datasets, which enables them to generate new content in response to user prompts. For instance, GPT-3 and its successors form responses based on patterns learned from hundreds of billions of words of text, much of it from the internet, while DALL·E can create novel images from textual descriptions.

Here’s an example that illustrates the distinction:

- Traditional AI: Given a photo, the system labels it as either a cat or a dog using learned features from a set of labeled animal images.

- Generative AI: Given the prompt, “A surreal painting of a dog wearing sunglasses at a jazz club,” a model like DALL·E creates an original work of art that matches this description, synthesizing new elements based on learned patterns in art, colors, and textures.

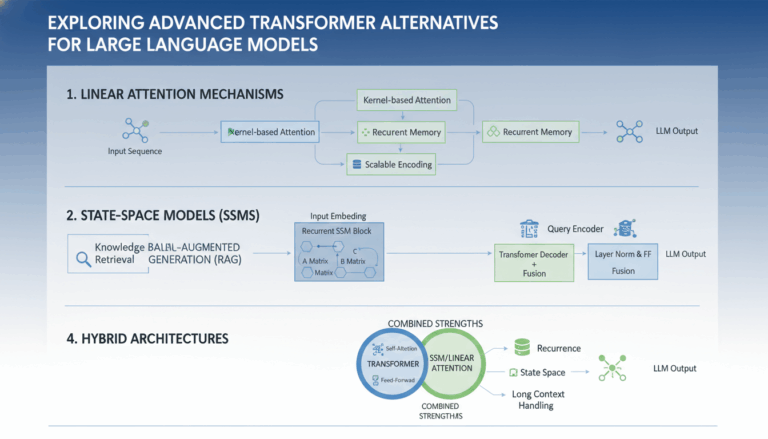

Key enablers of this shift are the architectures powering generative AI:

- Transformers: The underlying foundation for modern LLMs, transformers can process massive volumes of sequential data efficiently and with context. Read about transformers in this landmark Google research blog.

- GANs (Generative Adversarial Networks): These involve two neural networks—the generator and the discriminator—that “compete” to produce increasingly realistic outputs. An in-depth DeepMind explanation covers the basics and breakthroughs of GAN technology.
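The “competition” between the two GAN networks can be made concrete through their loss functions. The sketch below assumes the commonly used binary cross-entropy formulation with a non-saturating generator loss; the function names are illustrative, and the inputs stand for the discriminator's probability that a sample is real, not for outputs of any actual trained network.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when the discriminator scores real samples near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Low when the discriminator is fooled into scoring fakes near 1."""
    return -math.log(d_fake)

# Early in training (made-up numbers): fakes are easy to spot.
print(discriminator_loss(d_real=0.9, d_fake=0.1), generator_loss(d_fake=0.1))

# Later (made-up numbers): the discriminator can no longer tell them apart.
print(discriminator_loss(d_real=0.5, d_fake=0.5), generator_loss(d_fake=0.5))
```

As the generator improves, its loss falls while the discriminator's rises, which is the tug-of-war that pushes outputs toward realism.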

This paradigm shift enables creativity, nuanced language generation, and the ability to produce multimedia content at scale, fundamentally transforming what we expect from AI systems. As generative models continue to evolve, they raise exciting possibilities—and complex questions—about creativity, authenticity, and the boundaries between human and machine intelligence.

The Core Technologies: Neural Networks, Transformers, and Large Language Models

At the heart of generative AI lie three transformative advancements: neural networks, transformers, and large language models (LLMs). These core technologies have redefined what’s possible in machine learning, powering breakthroughs like ChatGPT, DALL·E, and many others. Let’s explore each in depth:

Neural Networks: The Building Blocks

Inspired by the structure of the human brain, neural networks are systems of interconnected nodes (“neurons”) that process information through weighted connections. At the most basic level, these networks learn patterns from data through repeated exposure and iterative adjustments. Each layer in a neural network extracts increasingly abstract representations of the input data:

- Input Layer: Receives raw data (like images or text).

- Hidden Layers: Transform inputs through mathematical operations, detecting complex patterns.

- Output Layer: Produces the final prediction or result.
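To make the three layers concrete, here is a minimal forward pass written in plain Python. It is a sketch under stated assumptions: the weights are made-up numbers rather than learned values, the network has a single hidden layer with two neurons, and the helper names (`dense`, `relu`, `forward`) are chosen for illustration.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs plus a bias per neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Non-linearity that keeps positive signals and zeroes out negative ones."""
    return [max(0.0, v) for v in values]

def sigmoid(value):
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-value))

def forward(inputs, params):
    hidden = relu(dense(inputs, params["w_hidden"], params["b_hidden"]))  # hidden layer
    output = dense(hidden, params["w_out"], params["b_out"])              # output layer
    return sigmoid(output[0])

# Made-up parameters; training would adjust these iteratively.
params = {
    "w_hidden": [[0.5, -0.2], [0.3, 0.8]],
    "b_hidden": [0.0, 0.1],
    "w_out": [[1.0, -1.0]],
    "b_out": [0.0],
}
print(forward([1.0, 2.0], params))  # input layer receives two raw features
```

Training consists of nudging the numbers in `params` so that the output moves closer to the desired answer across many examples.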

Early neural networks led to advancements in image recognition and speech recognition. However, their capability was limited by architectural constraints and available computational power.

Transformers: A Revolutionary Architecture

Introduced by researchers at Google in 2017, the transformer architecture radically changed the landscape of AI. Unlike earlier models that processed information in sequence, transformers can analyze input data all at once—enabling them to understand context and relationships between words or pixels, no matter their distance from one another.

The core innovation is the self-attention mechanism, which allows the model to focus on different parts of the input with varying intensity. This is akin to reading an entire paragraph and immediately grasping how each sentence connects to the others. For example, in natural language processing, this enables models to resolve ambiguous pronouns and track long-range dependencies—a crucial ability for tasks like translation, summarization, and question-answering.

- Step 1: Assigns attention scores to every part of the input.

- Step 2: Weighs the importance of each word or token accordingly.

- Step 3: Aggregates this information to produce a context-aware representation.
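The three steps above map directly onto scaled dot-product attention. The following sketch makes a simplifying assumption: each token vector serves as its own query, key, and value, whereas real transformers first pass tokens through learned projection matrices. The token vectors are made-up values for illustration.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors."""
    dim = len(vectors[0])
    contexts, all_weights = [], []
    for query in vectors:
        # Step 1: score the query against every token in the input.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        # Step 2: normalize the scores into importance weights.
        weights = softmax(scores)
        # Step 3: blend all token vectors using those weights.
        context = [sum(w * vec[j] for w, vec in zip(weights, vectors))
                   for j in range(dim)]
        contexts.append(context)
        all_weights.append(weights)
    return contexts, all_weights

# Three made-up 2-dimensional token vectors (illustrative values only).
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
contexts, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the input; similar vectors receive higher weights, regardless of how far apart they sit in the sequence.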

This architecture forms the backbone of models like BERT, GPT, and DALL·E.

Large Language Models (LLMs): Scaling Up Intelligence

With the development of transformers, the next leap came through scale. Large language models leverage billions—or even trillions—of parameters, trained on vast swaths of textual and visual data from the internet. By processing such immense datasets, these models learn nuanced associations, writing styles, facts, and even coding skills.

Example: Given the prompt, “Write a poem about the moon,” an LLM like ChatGPT will generate a creative, original piece drawing on everything it knows from centuries of poetry, science, and more.

LLMs and closely related transformer-based models excel at a range of generative tasks, such as:

- Conversational agents (e.g., ChatGPT)

- Text-to-image creation (e.g., DALL·E)

- Programming assistance (e.g., GitHub Copilot)

These models still make occasional errors, often called “hallucinations,” and extensive research from places like Stanford AI Lab explores how to make them more reliable and transparent.

These core technologies are not just powering today’s breakthroughs—they’re shaping the future of human-computer interaction, creativity, and problem-solving at an unprecedented pace.

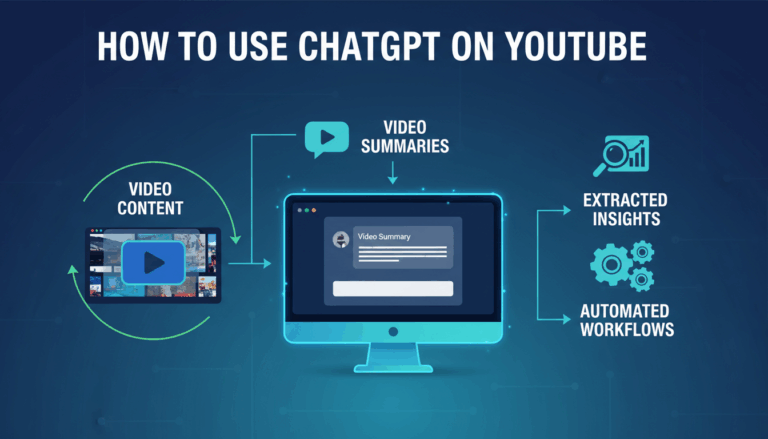

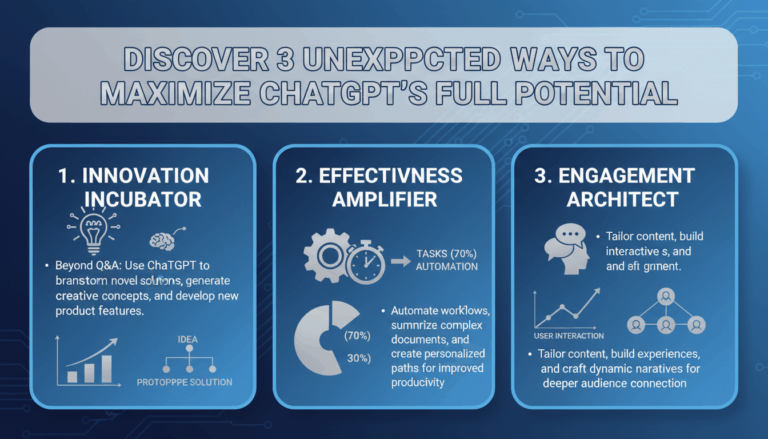

ChatGPT: Conversational AI in Action

One of the most prominent examples of generative AI in action is ChatGPT, developed by OpenAI. This conversational AI system is designed to interact with users in a natural, human-like manner, answering questions, providing recommendations, and even holding in-depth discussions on a wide range of topics.

At its core, ChatGPT relies on a large language model known as GPT (Generative Pre-trained Transformer). The AI is trained using vast amounts of text from books, articles, and websites, allowing it to understand context, grammar, and even subtle nuances of language. Instead of simply retrieving information from a database, ChatGPT generates responses on the fly, synthesizing information it has learned during training. This gives the impression of a truly dynamic conversation, making the technology particularly useful for customer support, virtual assistants, and creative writing applications.

To understand how ChatGPT powers conversations, consider the step-by-step process it follows:

- Input Processing: When a user types a prompt, ChatGPT analyzes the text to understand the intent and context of the question. This context awareness is what enables more coherent multi-turn conversations, which you can read more about through Scientific American’s exploration of AI in science.

- Contextual Generation: The model references its internal knowledge gained during training, using deep neural networks to generate a fluent, contextually relevant response. This process involves repeatedly selecting the next word (more precisely, the next token, which may be a word or a word fragment) based on probability, as explained in detail by the Harvard Data Science Review.

- Response Output: The generated response is returned to the user. If feedback or clarification is provided, ChatGPT takes this new input into account, maintaining context across multiple exchanges.
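The probability-based selection in the generation step can be sketched as follows. This is an illustration under assumptions, not ChatGPT's actual code: the scores in `logits` are made-up numbers standing in for what a model might assign to candidate next tokens, and `temperature` is a commonly used sampling control where lower values make the choice more predictable.

```python
import math
import random

def next_token_probabilities(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample_next_token(logits, temperature=1.0, rng=random):
    """Pick one token at random, weighted by its probability."""
    probs = next_token_probabilities(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical scores for the token following the prompt "The sky is".
logits = {"blue": 3.0, "clear": 2.0, "falling": 0.5}

print(next_token_probabilities(logits, temperature=1.0))
print(next_token_probabilities(logits, temperature=0.5))
print(sample_next_token(logits, temperature=1.0, rng=random.Random(0)))
```

A full response is produced by appending the sampled token to the text so far and repeating the step; in a multi-turn chat, earlier messages are part of that text, which is how context carries across exchanges.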

This generative capability makes ChatGPT incredibly versatile. For example, businesses are integrating it into their websites to handle customer inquiries 24/7, while educators use it to simulate tutoring sessions or language learning conversations. The potential for personalized experiences is enormous—as seen in platforms such as Khan Academy’s pilot of AI-powered learning tutors.

However, it’s important to note some challenges. Since ChatGPT generates responses based on patterns in data, it can occasionally produce incorrect or biased outputs. OpenAI and the broader AI community are continually researching ways to mitigate these issues through improved training techniques and model safety protocols, as discussed in depth by Google’s conversational AI research.

As generative AI like ChatGPT continues to evolve, it brings us closer to seamless, human-like interaction between people and machines—paving the way for unprecedented innovation across industries and redefining what’s possible in digital communication.

DALL·E and the Magic of AI-Generated Images

Imagine describing a scene, such as a cat surfing on a pizza in space, and, seconds later, seeing it come to life as a digital painting. That’s the magic behind tools like DALL·E, a generative AI developed by OpenAI, which transforms text prompts into vivid, detailed images. This technology is built on deep neural networks trained to connect language with visual concepts.

At the core of DALL·E is a model known as a transformer, which enables the AI to interpret the complex relationships between words and visuals. It’s trained on a massive dataset of text-image pairs (hundreds of millions, according to OpenAI’s published descriptions of the early versions), learning to recognize patterns, attributes, and artistic nuances. When you describe a scene or scenario, DALL·E interprets the text and generates one or more original images that match the description, even if the combination of elements seems fantastical or has never been seen before.

How does DALL·E create these images?

- Understanding the Prompt: The AI first breaks down your input—processing the context, objects, actions, and styles you mention. For example, “a Renaissance-style portrait of a robot.” The transformer model identifies the unique elements and artistic cues in the request.

- Generating Visual Concepts: Guided by its training, the model draws on its learned associations, envisioning what a Renaissance painting or a robot typically looks like. By blending styles and features, it forms an internal blueprint.

- Creating Pixel-by-Pixel Imagery: The rendering method depends on the version. The original DALL·E generated images autoregressively, predicting image tokens one at a time, while later versions use diffusion, which starts from random noise and refines it step by step into a clear, coherent picture. Each iteration fine-tunes colors, shapes, and textures to achieve a strikingly realistic or artistic result.

- Offering Variations: The model often presents multiple interpretations, letting users select the most appealing or accurate depiction for their needs.
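The idea of sculpting an image out of noise can be illustrated with the arithmetic used in standard denoising diffusion models. This is a toy sketch, not DALL·E's implementation, which the text above does not detail: `alpha_bar` is an assumed noise-schedule value, the four-pixel “image” is made up, and the true noise is passed in where a trained network's prediction would normally go.

```python
import math
import random

def add_noise(pixels, noise, alpha_bar):
    """Forward process: blend the clean signal with Gaussian noise.
    alpha_bar near 1 keeps most of the image; near 0 leaves mostly noise."""
    keep = math.sqrt(alpha_bar)
    spoil = math.sqrt(1.0 - alpha_bar)
    return [keep * p + spoil * n for p, n in zip(pixels, noise)]

def estimate_clean(noisy, predicted_noise, alpha_bar):
    """Reverse direction: subtract the predicted noise and rescale."""
    keep = math.sqrt(alpha_bar)
    spoil = math.sqrt(1.0 - alpha_bar)
    return [(x - spoil * n) / keep for x, n in zip(noisy, predicted_noise)]

rng = random.Random(0)
image = [0.2, 0.8, 0.5, 0.1]                  # made-up pixel intensities
noise = [rng.gauss(0.0, 1.0) for _ in image]
noisy = add_noise(image, noise, alpha_bar=0.3)

# A trained network would predict the noise; here the true noise is used
# to show that a perfect prediction recovers the original image.
recovered = estimate_clean(noisy, noise, alpha_bar=0.3)
print([round(v, 6) for v in recovered])
```

In practice the noise prediction is imperfect, so real systems remove a little noise at a time over many steps, with the text prompt guiding each prediction.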

This technology has made headlines for its ability to democratize art and creativity. Graphic design, advertising, film, and even education have benefited from instant concept art and imaginative illustrations. For instance, teachers can request custom imagery for lesson plans, while marketers spin up visuals for campaigns in moments.

However, DALL·E also raises important ethical questions. The ability to produce hyper-realistic images blurs the line between fact and fiction, prompting debates about misinformation, copyright, and digital manipulation. OpenAI, along with many in the AI community, continues to work on guidelines and safeguards to encourage responsible use and limit the generation of harmful or misleading imagery. For more on these considerations, see this article from Nature on AI-generated images.

While DALL·E’s capabilities may seem like science fiction, this technology is only the beginning. As AI models grow more sophisticated, collaborative tools for artists, educators, and professionals across industries are becoming more accessible. To learn more about how models like DALL·E are built, check out this detailed primer from MIT on machine learning and creative applications.

Other Exciting Applications of Generative AI

Beyond their headline-grabbing roles as chatbots and image generators, generative AI models are quietly transforming numerous other fields—often in ways that are both unexpected and profound. Let’s take a closer look at several exciting applications where generative AI is making a real-world impact.

Revolutionizing Drug Discovery

Traditionally, discovering new drugs is a laborious and expensive process that can take years, if not decades. However, generative AI models are now able to propose candidate molecular structures and predict how they will interact with targets in the human body. For instance, startups and pharma giants are using AI to generate and evaluate potential drug candidates much faster than before. A closely related approach was illustrated by researchers at MIT, who used deep learning to find promising new antibiotics by exploring chemical spaces that humans might never consider. They trained an AI to predict antibiotic activity, which allowed them to discover a compound effective against several antibiotic-resistant bacteria. The process typically includes:

- Training a model on a large dataset of known molecules.

- Using the model to generate novel compounds with desired properties.

- Testing top candidates in the lab to identify promising drugs.

Designing Fashion and Art

The fusion of technology and creativity has never been more apparent than in the world of fashion and art. Generative models can now create new designs, enabling artists and fashion designers to rapidly prototype and experiment. Tate Modern explains how generative art leverages algorithms to produce never-before-seen visual forms, which can serve as inspiration or even final products in digital art galleries. In fashion, brands like Tommy Hilfiger have used AI to generate new styles, taking cues from past collections and customer demand. Designers can:

- Input parameters such as colors, fabrics, and styles.

- Use generative AI to produce a range of unique fashion prototypes.

- Iterate based on customer feedback and manufacturing feasibility.

Personalizing Education

Imagine a classroom where every student receives individualized learning resources tailored to their needs, learning pace, and interests. Generative AI is making this possible by creating custom teaching materials, quizzes, and even interactive simulations. According to Stanford University, these tools can significantly improve student engagement and learning outcomes. For example:

- An AI tutor generates step-by-step guides for students struggling with math concepts.

- Custom reading passages are created based on a student’s reading level and interests.

- Teachers use AI to quickly prepare lesson plans and resources adapted for different abilities.

Automating Code Generation

Writing code can be a time-intensive process, but generative AI models are helping software developers write, debug, and optimize code. Tools like GitHub Copilot can now suggest entire functions or even create small applications based on natural language descriptions. This doesn’t just accelerate development—it also helps those learning to code by providing context-relevant guidance. The typical workflow includes:

- Describing a desired function or feature in plain language.

- Letting the AI generate code suggestions or full code snippets.

- Reviewing and integrating the generated code into a project.

Enhancing Music Composition

Composers and musicians have begun exploring generative AI as a source of inspiration and collaboration. AI models can generate original melodies, harmonies, and entire compositions in a chosen style. As highlighted in this New York Times article, artists are using tools like OpenAI’s MuseNet and Google’s Magenta to co-create music, speeding up production and sparking novel creative directions. For example:

- Musicians input a genre, mood, or instrument lineup.

- The AI model composes an initial track or accompaniment.

- Artists refine the piece, blending human intuition with computational creativity.

As these applications demonstrate, the reach of generative AI stretches far beyond chatbots and image generation. It is transforming industries, amplifying human creativity, and opening up new possibilities in fields once thought to be immune to automation.