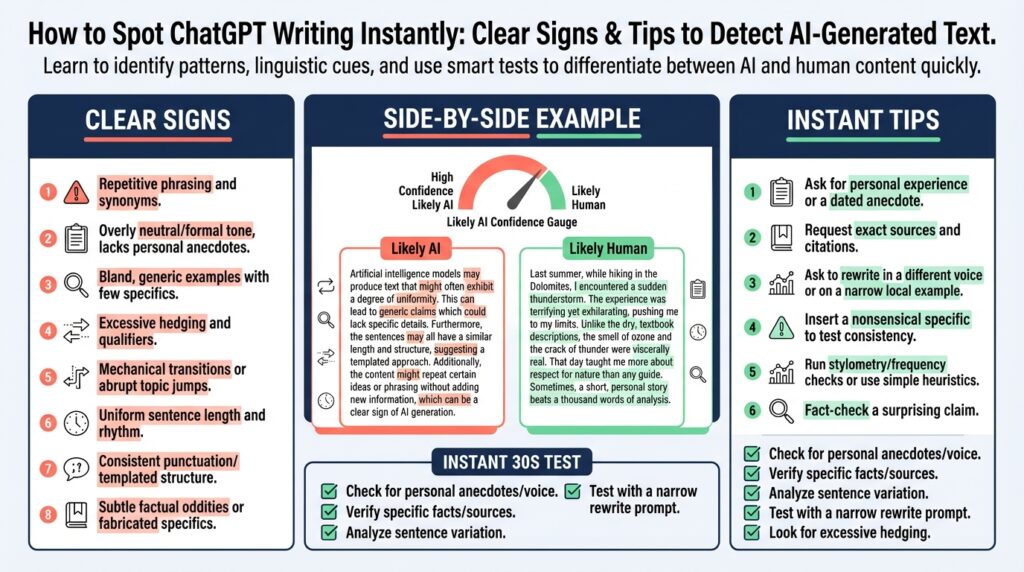

Quick checklist to spot AI writing

Start with a compact checklist you can run in under a minute when you suspect AI-generated text. First glance: do the paragraphs feel uniformly paced, with balanced sentence length and near-perfect grammar throughout? When ChatGPT or other models generate content, they often produce evenly structured, neutral-toned prose that reads like an edited technical spec rather than an engineer’s first-hand account. How do you verify a suspicious paragraph in minutes? Use the quick tests below to differentiate polished machine output from human-authored nuance.

Scan for generic, surface-level examples and placeholders. A clear sign of automated writing is example code or scenarios that are syntactically correct but lack real context: for instance, `for (i = 0; i < n; i++)` presented without a real input domain, error scenario, or expected output. Humans tend to include specific constraints such as expected input ranges, failure modes, or a performance number; AI-generated text often stops at a correct-but-empty example. If concrete values, filenames, or function names are missing, flag the passage for closer review.

Look for brittle-perfect grammar paired with bland judgment. Machines often maintain flawless punctuation and parallel phrasing while avoiding strong opinions or trade-offs. A human reviewer will usually call out cost, time-to-implement, or trade-offs (e.g., “this adds ~200ms on cold starts and complicates observability”). If every option is described as concise, clear, and low-risk without trade-offs, that uniform positivity is a heuristic for AI writing.

Probe for verifiable specifics and first-hand signals. Ask the author to describe a past debugging session, including a precise date, a commit hash, or a file path and line number that you could check. Models have no real-world personal experience to draw on, so when pressed for specifics they tend to refuse, generalize, or hallucinate plausible-sounding but unverifiable facts. Requesting verifiable details quickly exposes fabricated narratives.

Audit numerical claims and chronology for internal consistency. AI-generated text sometimes fabricates sources, rounds numbers inconsistently, or contradicts a metric later in the same article. Check whether throughput numbers, latency percentiles, or cost estimates remain consistent across the piece. If a paragraph claims “99.99% availability” and later states “monthly downtime averaged 2 hours,” those two statements conflict: 99.99% over a 30-day month allows only about 4.3 minutes of downtime. Humans usually reconcile such numbers in a single pass, whereas AI output can leave the contradiction standing.
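The availability conflict above is plain arithmetic, and a small helper makes the check repeatable. This is a minimal sketch; the function names and the 30-day month are assumptions chosen for illustration.

```python
def allowed_downtime_minutes(availability_pct: float, period_days: float = 30.0) -> float:
    """Downtime budget, in minutes, implied by an availability percentage."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (1 - availability_pct / 100)


def implied_availability_pct(downtime_minutes: float, period_days: float = 30.0) -> float:
    """Availability percentage implied by an observed amount of downtime."""
    period_minutes = period_days * 24 * 60
    return 100 * (1 - downtime_minutes / period_minutes)


# The two claims from the example: 99.99% availability vs. 2 hours of monthly downtime.
budget = allowed_downtime_minutes(99.99)   # roughly 4.32 minutes per 30-day month
observed = implied_availability_pct(120)   # 2 hours of downtime is roughly 99.72%
print(f"budget: {budget:.2f} min, availability implied by 2 h downtime: {observed:.2f}%")
```

Two hours of downtime is well over twenty times the 99.99% budget, so the two statements cannot both describe the same month.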

Watch for stylistic fingerprints: repetitive transition phrases and tidy summaries. Phrases like “However,” “Moreover,” “In summary,” and closing-sentence recaps appear at predictable intervals in model outputs. These act like scaffolding: the structure is easy to follow, but the style barely varies. If every paragraph starts with a stock connective and ends with a one-line recap, you’re more likely reading generated text than an engineer’s irregular, context-driven prose.
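One way to put a number on the "scaffolded connective" signal is to count how many paragraphs open with a stock transition. The sketch below is illustrative only: the connective list and the function name are assumptions, and it assumes paragraphs are separated by blank lines.

```python
import re

# Illustrative list only; extend it with the connectives common in your own corpus.
CONNECTIVES = ("however", "moreover", "furthermore", "in summary", "additionally")


def scaffolded_opening_rate(text: str) -> float:
    """Fraction of paragraphs whose first words are a stock connective."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    if not paragraphs:
        return 0.0
    # str.startswith accepts a tuple, so this tests every connective at once.
    hits = sum(1 for p in paragraphs if p.lower().startswith(CONNECTIVES))
    return hits / len(paragraphs)
```

A high rate is only a prompt for a closer read; plenty of human writers lean on the same transitions.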

Use short, active tests you can run live. Ask two rapid follow-ups: one requesting a specific, dated personal anecdote and another asking for a line-by-line rationale for a code choice (not chain-of-thought, but explicit decision points and trade-offs). Then run an exact-phrase search for a suspicious sentence; duplicate sentences or near-identical paragraphs across different sites often indicate recycled AI outputs. These practical checks help you label content confidently and prioritize which pieces need a deeper forensic review.

Taking these quick heuristics further, treat this checklist as a triage tool: if multiple signals line up — bland examples, perfect grammar, unverifiable specifics, inconsistent numbers, and patterned transitions — escalate the piece for human review and source verification. We’ll use these flags in the next section to craft targeted tests and tooling that automate parts of this detection workflow.

Linguistic cues in grammar and phrasing

Building on this foundation, one of the fastest ways to detect AI-generated text is to inspect subtle linguistic cues in grammar and phrasing. When you want to spot ChatGPT or other systems producing AI-generated text, start by reading for uniform sentence cadence, neutral hedging, and consistently perfect punctuation within the first few lines. These surface signals are cheap to check and often show up before you dive into factual verification, which makes them valuable when you need to triage content quickly. How do you separate polished machine prose from human writing without a deep forensic pass? Focus on the grammar and phrasing patterns below to get high-confidence signals fast.

You’ll notice models favor balanced sentence length and tidy parallelism, which creates a characteristic rhythm. Sentences are often coordinated with simple connectors (“and,” “but,” “however”) rather than nested subordinate clauses that humans use when recalling a messy troubleshooting story. This produces paragraphs that feel evenly paced: similar sentence length, repeated transition phrases, and syntactic symmetry. Pay attention to nominalization (turning verbs into nouns like “optimization” instead of “optimize”) and a high incidence of passive voice; these are common in machine-written neutral summaries where direct action and lived process are underrepresented.

Pronoun patterns and voice choices give away a lot about authorship because they reveal stance and source. Machine outputs tend to avoid first-person experiential language—you’ll see “we recommend” or “the system should” rather than “I changed the config and saw X at 10:32 AM.” Humans include concrete anchors: precise times, file paths, error messages, and contractions (“can’t,” “didn’t”) in informal write-ups. Try reading a suspicious paragraph aloud: if it never contracts or never shifts perspective between “we” and “I” when a real anecdote would, treat that as a red flag for AI-generated text.

Punctuation choices and small grammatical quirks are practical micro-tests you can run in minutes. Machines often use the Oxford comma consistently, default to tidy em-dash spacing, and avoid the typo-like punctuation patterns humans produce under time pressure (missing commas in long lists, parenthetical tangents that break sentence flow). Measurable signals like low variance in sentence length (many sentences clustering around 15–22 words) also suggest automated generation, because natural prose exhibits wider variance. If you have a corpus of documents, a quick check of sentence-length standard deviation will surface unusually uniform pieces that merit deeper review.
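The sentence-length check can be sketched with the standard library alone. The splitter below is deliberately naive (it breaks on terminal punctuation followed by whitespace, so abbreviations such as "e.g." will confuse it), and the function names are assumptions for illustration.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, using a naive punctuation-based splitter."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def sentence_length_stdev(text: str) -> float:
    """Population standard deviation of sentence lengths, in words."""
    lengths = sentence_lengths(text)
    return statistics.pstdev(lengths) if len(lengths) > 1 else 0.0
```

Run it over each document in a corpus and sort ascending: the pieces at the top of the list are the unusually uniform ones worth a second look.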

Beyond structural checks, inspect phrasing for the missing constraints and trade-offs discussed earlier, but focus here on grammar-level giveaways: overuse of modal verbs (“could,” “might,” “should”) in hedged clauses, and repetitive scaffolding like “In summary” or “Moreover” at paragraph starts. These act like templates; humans more often break the template with a blunt qualifier, a specific caveat, or an emotionally colored aside. To elicit a revealing follow-up, ask for a dated, line-specific example: request a commit hash, the exact error log line, or the file path and line number that caused the issue. A human author can usually supply those; a model will generalize, refuse, or invent plausible but unverifiable specifics.

Taking these grammar and phrasing cues together gives you a compact, reliable toolkit to detect AI-generated text and decide what to escalate. We can automate parts of this—sentence-length variance, contraction density, and modal-verb frequency are all easy to compute—while leaving the richer judgment calls (trade-offs, lived anecdotes, verifiable artifacts) for human reviewers. In the next section, we’ll convert these heuristics into concrete tests and lightweight tooling you can run against suspected content to prioritize follow-up and verification.
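Contraction density and modal-verb frequency, two of the metrics named above, reduce to token counting. This is a rough sketch: the modal list is the one quoted earlier, the regular expressions are assumptions, and the contraction pattern will also count possessives such as "model's".

```python
import re

MODALS = {"could", "might", "should"}  # the modal verbs named in the text

# Words, optionally with one internal apostrophe (straight or curly).
WORD = re.compile(r"[A-Za-z]+(?:['’][A-Za-z]+)?")
# Tokens such as can't, didn't, it's, we'll. Caveat: possessives like "model's" match too.
CONTRACTION = re.compile(r"\w+['’](?:t|s|re|ve|ll|d|m)", re.IGNORECASE)


def phrasing_metrics(text: str) -> dict[str, float]:
    """Share of words that are contractions and share that are modal verbs."""
    words = WORD.findall(text)
    total = len(words) or 1  # avoid division by zero on empty input
    contractions = sum(1 for w in words if CONTRACTION.fullmatch(w))
    modals = sum(1 for w in words if w.lower() in MODALS)
    return {
        "contraction_density": contractions / total,
        "modal_frequency": modals / total,
    }
```

Neither number means much in isolation; compare them against writing you already know to be human-authored in the same genre.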

Stylistic patterns: repetition and verbosity

Building on this foundation and the quick checklist you just read, one of the most reliable stylistic fingerprints to watch for is a pattern of repeated phrasing and unnecessary wordiness that adds no new signal. You’ll notice this quickly when paragraphs feel like they’re circling the same point with near-identical sentences rather than progressing an argument. For technical readers, that redundancy often masquerades as thoroughness: it looks like careful documentation but lacks concrete constraints, numbers, or trade-offs. Spotting repetition and verbosity early saves you time when deciding whether to run deeper verification or ask for human clarification.

First, define the terms so we’re precise: repetition here means reuse of the same lexical units or sentence frames across a paragraph or document; verbosity means extra words or clauses that don’t change the informational content. Text-generating models often fall back on scaffolded templates, so you’ll see the same connective phrases, mirrored sentence structure, or repeated clause boundaries appearing across different paragraphs. That produces a mechanical rhythm that differs from human variance. Engineers writing from experience tend to introduce irregularities: a parenthetical aside, an exact metric, or a short frustrated sentence that breaks the pattern. Understanding these definitions helps you separate stylistic noise from intentional emphasis.

A quick manual check you can run in a minute: read a suspicious paragraph and highlight repeated phrases or near-duplicate sentences. How do you tell when repetition is purposeful versus a model artifact? If repetition conveys a different constraint or a new example each time, it’s likely intentional; if sentences repeat semantically—“The API should be resilient. The API should be reliable. The API should be available.”—with no differing data, treat that as an automated signature. Also watch for verbose scaffolding that pads content with hedges (“it is important to note that,” “in many cases,” “this should be considered”) without specifying which cases or how often.
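The manual highlight pass can be approximated by comparing every pair of sentences for character-level similarity. The sketch below uses `difflib.SequenceMatcher` from the standard library; the 0.75 threshold and the function name are assumptions to tune, and the comparison is quadratic in the number of sentences, so it suits paragraphs rather than whole corpora.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations


def near_duplicate_sentences(text: str, threshold: float = 0.75) -> list[tuple[str, str, float]]:
    """Return sentence pairs whose similarity ratio meets the threshold."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    pairs = []
    for a, b in combinations(sentences, 2):
        ratio = SequenceMatcher(None, a.lower(), b.lower()).ratio()
        if ratio >= threshold:
            pairs.append((a, b, round(ratio, 2)))
    return pairs


sample = "The API should be resilient. The API should be reliable. The API should be available."
for a, b, ratio in near_duplicate_sentences(sample):
    print(f"{ratio}: {a!r} ~ {b!r}")
```

The function only surfaces candidates. Whether a flagged pair adds a new constraint or merely restates the last sentence is still a judgment for the reviewer.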

You can automate part of this with lightweight metrics that are easy to compute on any corpus. Measure sentence-level n-gram repetition (percentage of bigrams or trigrams that reappear), lexical diversity (unique tokens divided by total tokens), and compression ratio (compressed size divided by original size under a lossless compressor such as gzip; repetitive text compresses to a smaller fraction). Low lexical diversity, high n-gram overlap, and high compressibility together point toward generated text. Lexical diversity falls as passages get longer, so compare texts of similar length. We don’t need perfect thresholds: pragmatic cutoffs like >20% trigram reuse or lexical diversity under 0.4 are useful starting points, but calibrate them to your team’s documentation style to avoid false positives.
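The three metrics can be sketched in a few lines of standard-library Python. The tokenizer, the function names, and the use of `zlib` as the lossless compressor are assumptions made for illustration; any consistent choice works as long as you calibrate against your own documents.

```python
import re
import zlib
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercased word tokens; a deliberately simple tokenizer."""
    return re.findall(r"[a-z0-9']+", text.lower())


def trigram_reuse(text: str) -> float:
    """Share of trigram occurrences that belong to a trigram seen more than once."""
    toks = tokenize(text)
    trigrams = [tuple(toks[i:i + 3]) for i in range(len(toks) - 2)]
    if not trigrams:
        return 0.0
    counts = Counter(trigrams)
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(trigrams)


def lexical_diversity(text: str) -> float:
    """Unique tokens divided by total tokens (type-token ratio)."""
    toks = tokenize(text)
    return len(set(toks)) / len(toks) if toks else 0.0


def compression_ratio(text: str) -> float:
    """Compressed size over original size; lower means more repetitive text."""
    raw = text.encode("utf-8")
    return len(zlib.compress(raw, 9)) / len(raw) if raw else 1.0
```

Very short passages carry fixed compressor overhead, so the compression ratio is only informative on paragraphs of a few hundred characters or more.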

When you’re reviewing content, give directed editing prompts instead of broad critiques. Ask the author to remove redundant clauses, replace one repeated sentence with a concrete example, or add a single metric that would change your interpretation (latency p95, failure count, or a file path). Show them a targeted rewrite: instead of “The job should retry on failure. The job should retry on failure to avoid data loss,” propose “Retry up to 3 times with exponential backoff; include a dead-letter queue for persistent failures.” That kind of before/after forces specificity and breaks the scaffolding that models tend to produce.

Be mindful of false positives: some technical formats legitimately repeat wording for clarity, particularly in APIs, SLAs, or safety-critical docs where redundancy is intentional. Context matters—repetition in a README that introduces separate examples may be fine, whereas the same pattern appearing across multiple unrelated articles is suspect. Use human review to resolve borderline cases and combine stylistic signals with the factual checks we covered earlier (commit hashes, timestamps, and verifiable artifacts) before escalating.

Taking this concept further, incorporate these heuristics into the lightweight tooling we’ll discuss next: automated n-gram checks, compression-based flags, and targeted edit prompts that guide authors toward concrete, verifiable content. That approach turns repetition and verbosity from vague red flags into measurable signals you can act on, letting you prioritize deeper verification when it actually matters.

Content clues: vagueness and generic phrasing

When you skim a draft and a nagging vagueness sticks out, that’s often the first practical clue you’re reading AI-generated text from models like ChatGPT. Start by asking a simple question: what would you check first if this paragraph were part of a post you must trust? That immediate skepticism frames the review—vagueness and generic phrasing reduce informational density and mask missing constraints, so we prioritize specificity when triaging content.

Vague language shows up as abstract assertions without measurable anchors. You’ll see sentences that praise an approach (“robust,” “scalable,” “easy to maintain”) but never say how robust, at what scale, or what maintenance costs to expect. In technical writing, that lack of quantification is a red flag: humans documenting lived experience usually attach numbers, error examples, or environment details because those details matter for reproducibility and decision-making.

A concrete contrast exposes the problem quickly. Compare a generic loop example like `for (i = 0; i < n; i++)` presented with no context to a grounded one that reads: “Iterate i from 0 to n-1 where n is the number of active connections (max 1024), break on socket timeout after 200ms, and log the connection ID to /var/log/conn.log on error.” The second version supplies input domain, failure mode, observability artifact, and a file path: items humans add when they’ve run the code and debugged it under load. When you see the first form repeatedly, treat it as a candidate for AI-written material.
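For comparison, here is roughly what the grounded description looks like once it is written down as code. This is a hypothetical sketch, not code from a real system: `poll_connections`, the `(conn_id, sock)` pair shape, and the 4096-byte read are assumptions; only the 1024 limit, the 200 ms timeout, and the log path come from the prose above.

```python
import logging
import socket

MAX_CONNECTIONS = 1024   # upper bound on n, from the prose example
SOCKET_TIMEOUT_S = 0.2   # 200 ms


def make_conn_logger(path: str = "/var/log/conn.log") -> logging.Logger:
    """Logger that appends to the connection log named in the example."""
    logger = logging.getLogger("conn")
    if not logger.handlers:
        logger.addHandler(logging.FileHandler(path))
    return logger


def poll_connections(connections, logger: logging.Logger) -> int:
    """Read once from each (conn_id, sock) pair; stop at the first timeout.

    Returns the number of connections read successfully before stopping.
    """
    n = len(connections)
    if n > MAX_CONNECTIONS:
        raise ValueError(f"expected at most {MAX_CONNECTIONS} connections, got {n}")
    polled = 0
    for i in range(n):
        conn_id, sock = connections[i]
        sock.settimeout(SOCKET_TIMEOUT_S)
        try:
            sock.recv(4096)
        except socket.timeout:
            # Log the connection ID on error, then break as the description says.
            logger.error("timeout on connection %s", conn_id)
            break
        polled += 1
    return polled
```

Every constant here is something a reviewer can question or verify, which is exactly what the bare loop lacks.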

Probe authors with targeted follow-ups that force verifiability. Ask for a timestamped anecdote, a commit hash format, an exact error message, or the file and line number that caused a regression: “Which commit introduced the 15% latency regression, and what test reproduced it?” A human author can answer with specifics; a model will generalize, hedge, or fabricate plausible-sounding but unverifiable details. Those short follow-ups are practical tests you can run in under a minute during editorial review.

You can also lean on linguistic signals that correlate with generic phrasing. Watch for heavy modal-verb hedging (“could,” “might,” “should”) paired with parallel scaffolding (“Furthermore,” “In summary”), and low lexical diversity where the same phrases reappear across paragraphs. These are measurable: compute trigram repetition, contraction density, or sentence-length variance to surface unusually uniform prose at scale. Automating those checks helps you prioritize content for human verification rather than replacing expert judgment.

When editing or requesting rewrites, demand actionable replacements rather than open-ended improvement notes. Replace “improve observability” with “add p95 latency metrics for the payment API, emit traces with a 128-bit trace ID, and capture the failing request body for non-PII debugging.” That kind of directive converts vague, generic phrasing into verifiable tasks and forces the author to supply artifacts you can audit. Doing so improves documentation quality and reduces the chance you’ll ship unchecked AI-generated content.

Treat vagueness as a signal, not a verdict: context matters because template-like repetition is legitimate in APIs or SLAs where wording is intentionally redundant. However, when generic phrasing coincides with missing metrics, absent examples, and hedged language, escalate the piece for deeper verification. In the next section we’ll turn these heuristics into concrete tests and small tooling you can run against suspected content to automate the first-pass triage and surface the highest-risk items for human review.

Tool checks: detectors and watermarking limits

Building on this foundation, it helps to treat detectors and watermarking as complementary signals rather than authoritative verdicts when you triage suspected AI-generated text. How do you weigh an automated detector score against a watermark flag, a stylistic heuristic, and a provenance check? Start by recognizing what each tool is optimized to do: detectors evaluate token-level or model-probability patterns, while watermarking injects a detectable signal at generation time—both provide useful but brittle signals for spotting AI-generated text and ChatGPT outputs. (aclanthology.org)

Detectors come in a few flavors you’ll run into operationally: statistical-visual tools that surface improbable token sequences, zero-shot probes that analyze probability curvature, and supervised classifiers trained to separate human and machine distributions. GLTR and similar statistical visualizers expose sampling artifacts by comparing observed tokens to a model’s top predictions, while DetectGPT leverages geometric properties of a model’s log-probability landscape to flag generated passages—both approaches are informative but sensitive to sampling scheme and model family. When you rely on detectors, calibrate them to your content domain and sampling settings because their false-positive and false-negative rates move dramatically with domain shift. (aclanthology.org)

Watermarking is a different approach: it purposefully nudges sampling to leave an algorithmic signature that is invisible to readers but statistically detectable with short samples. Implementations that promote a randomized subset of “green” tokens during sampling can produce high detection rates without materially degrading text quality, and the original watermarking framework shows how to compute interpretable p-values for detection. However, watermarking assumes control over model generation and a threat model where adversaries cannot fully rewrite or semantically rephrase the output; when those assumptions break, the signal weakens. (proceedings.mlr.press)
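The detection side of a green-list watermark is a one-proportion z-test: count how many tokens fall in the green list and compare that with the fraction γ expected by chance, using z = (green − γT) / √(Tγ(1 − γ)). The sketch below is a toy under loud assumptions: tokens are plain strings, and green-list membership is decided by hashing the previous token together with the current one, which stands in for a real scheme that seeds a random partition of the model vocabulary. It cannot detect any production watermark.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of the vocabulary placed on the green list


def is_green(prev_token: str, token: str, gamma: float = GAMMA) -> bool:
    """Toy green-list test: hash (previous token, token) to a number in [0, 1)."""
    digest = hashlib.sha256(f"{prev_token}\x00{token}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma


def watermark_z_score(tokens: list[str], gamma: float = GAMMA) -> tuple[float, float]:
    """Return (z, one-sided p-value) for the observed count of green tokens."""
    scored = len(tokens) - 1  # the first token has no predecessor to seed from
    if scored < 1:
        raise ValueError("need at least two tokens")
    green = sum(is_green(p, t, gamma) for p, t in zip(tokens, tokens[1:]))
    z = (green - gamma * scored) / math.sqrt(scored * gamma * (1 - gamma))
    p_value = 0.5 * math.erfc(z / math.sqrt(2))  # upper tail of the standard normal
    return z, p_value
```

Unwatermarked text should land near z = 0. Paraphrasing lowers z for watermarked text by replacing green tokens with arbitrary ones, which is the weakness described above.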

The practical limits matter because attackers and natural workflows exploit them. Robust evaluations show that paraphrasing, recursive rewriting, or adversarial post-processing can substantially reduce both detector and watermark signal strength; hybrid human+AI text (where humans edit model output) further blurs the boundary and increases false negatives. Detectors also suffer from domain-shift and demographic bias—non-native prose, niche technical jargon, or intentionally terse writing can raise false-positive rates—so you can’t treat a high detection score as incontrovertible proof. These empirical results argue for conservative interpretation of automated signals and for combining multiple orthogonal checks. (emergentmind.com)

Given those constraints, design your pipeline around layered evidence and human validation. Use detectors and watermark checks as automated triage: flag content for review when multiple signals align (watermark present, detector score high, and stylistic heuristics match), but always follow with provenance checks—API logs, generation timestamps, and retrieval-based matching against a corpus of known model outputs can convert weak signals into verifiable artifacts. Recent work shows that augmenting detection with a retrieval step that searches previously generated sequences can recover many paraphrased outputs; therefore, keep generation logs and sampled outputs whenever policy and privacy allow. (hongsong-wang.github.io)

Operationally, set conservative thresholds and a human-in-the-loop escalation path to avoid false accusations: tune detectors on in-domain examples, log and retain short candidate spans for statistical retesting, and treat watermarking as a high-precision but not foolproof indicator. For everyday editorial triage, combine the quick linguistic heuristics we discussed earlier with these tool checks: when a detector or watermark flags content, ask for verifiable specifics (timestamped edits, commit hashes, or original prompts) before acting. That combined approach turns brittle signals into actionable leads and prepares you for the tooling tests and lightweight automation we’ll build next.

Practical verification steps and best practices

When you need to decide fast whether a draft was produced by ChatGPT or another model, start with layered, reproducible steps you can run under editorial time pressure. Building on the earlier heuristics, we front-load the high-value checks: automated detectors, watermarking signals, and simple forensic probes like exact-phrase matching and provenance requests. These quick signals help triage suspected AI-generated text before you invest time in deep forensics, and they surface the most actionable leads for human review.

Begin with a short, prioritized verification checklist that you can execute in under five minutes. How do you quickly confirm whether a passage is AI-generated? Run a detector to get a probability-style score, check for a watermark if available, perform an exact-phrase search across your corpus and the wider web, and then ask the author for one verifiable artifact (timestamp, commit hash, or file/line reference). If two or more of these signals align (detector score high, watermark positive, no verifiable artifact), escalate the item for a fuller audit.
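The "two or more signals" rule is easy to encode so that every reviewer applies it the same way. This is a minimal sketch: the `Signals` fields, the 0.9 detector cutoff, and the decision to count exact-phrase hits as a signal are all assumptions to adapt, not a recommended policy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Signals:
    detector_score: float               # probability-style score in [0, 1]
    watermark_positive: Optional[bool]  # None when no watermark check is available
    exact_phrase_hits: int              # duplicates found by exact-phrase search
    has_verifiable_artifact: bool       # timestamp, commit hash, or file/line reference


def aligned_signals(s: Signals, detector_cutoff: float = 0.9) -> list[str]:
    """Names of the signals that currently point toward generated text."""
    flags = []
    if s.detector_score >= detector_cutoff:
        flags.append("detector_high")
    if s.watermark_positive:
        flags.append("watermark_positive")
    if s.exact_phrase_hits > 0:
        flags.append("duplicate_text_found")
    if not s.has_verifiable_artifact:
        flags.append("no_verifiable_artifact")
    return flags


def should_escalate(s: Signals, min_signals: int = 2) -> bool:
    """Escalate for a fuller audit when enough independent signals align."""
    return len(aligned_signals(s)) >= min_signals
```

Returning the list of flags, rather than only a boolean, leaves an audit trail showing why an item was escalated.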

Use concrete, reproducible commands to collect evidence fast. For text matching, an exact-string search will catch recycled or copied model outputs that have been published elsewhere; for example, run a local search with ripgrep, `rg -nF "suspicious sentence or phrase" ./corpus/`, to find duplicates. For web-level checks, wrap the exact phrase in quotes in a search engine or use site-limited searches on internal knowledge bases. Save the matched snippets and timestamps as evidence so you can compare them to claimed provenance.
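When ripgrep is not available, or you want the matches as structured evidence, the same fixed-string search can be sketched in Python. The function name and return shape are assumptions; unlike `rg`, this version does not honor ignore files and simply skips anything that is not valid UTF-8.

```python
from pathlib import Path


def find_exact_phrase(phrase: str, corpus_dir: str) -> list[tuple[str, int, str]]:
    """Return (path, line number, line) for every line containing the phrase verbatim."""
    hits = []
    for path in sorted(Path(corpus_dir).rglob("*")):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for lineno, line in enumerate(text.splitlines(), start=1):
            if phrase in line:
                hits.append((str(path), lineno, line.strip()))
    return hits
```

Because the search is line-based, a sentence that wraps across lines in the corpus will be missed; normalize whitespace first if your files are hard-wrapped.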

Probe authors with targeted requests that force verifiability rather than opinions. Ask them to provide a timestamped edit, the commit hash that introduced the paragraph, or the exact error log line mentioned in the text; avoid prompts that ask for chain-of-thought. We prefer concrete anchors—file paths, test names, or a terminal output line—because models cannot claim genuine personal debugging artifacts without inventing them. If the author cannot supply verifiable specifics or replies with hedged, general answers, treat that as a substantive signal.

Operationalize detectors and watermarking as automated triage, not final verdicts. Use detectors to flag probability patterns and watermarking to provide a high-precision signal when you control generation; combine both with linguistic heuristics we covered earlier (uniform cadence, modal hedging, lack of concrete examples). Be mindful of false positives from domain shift or terse non-native prose: keep conservative thresholds, log flagged spans, and route any high-risk findings to a human reviewer rather than acting unilaterally.

Add lightweight quantitative metrics to make triage repeatable and auditable. Compute sentence-length variance, lexical diversity (unique tokens / total tokens), and trigram reuse rate across the paragraph; pragmatic cutoffs to start tuning from are >20% trigram reuse or lexical diversity under 0.4, though you must calibrate these to your documents to avoid false flags. Also measure compressibility (how much a lossless compressor such as gzip shrinks the paragraph) and contraction density; these metrics convert stylistic intuition into repeatable signals you can threshold and monitor over time.
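One possible way to calibrate a cutoff is to derive it from documents you already trust, falling back to the starting value when the baseline is too small. The sketch below is an assumption-heavy illustration: the function names, the 5th-percentile choice, and the 20-document minimum are placeholders, and the percentile is a simple index into the sorted scores rather than an interpolated estimate.

```python
def calibrated_cutoff(baseline_scores: list[float], percentile: float = 0.05) -> float:
    """Score at the given percentile of a trusted, human-written baseline."""
    if not baseline_scores:
        raise ValueError("baseline_scores must not be empty")
    ordered = sorted(baseline_scores)
    index = int(percentile * (len(ordered) - 1))
    return ordered[index]


def flag_low_diversity(score: float, baseline_scores: list[float],
                       default_cutoff: float = 0.4, min_baseline: int = 20) -> bool:
    """Flag a lexical-diversity score that falls below the calibrated cutoff."""
    if len(baseline_scores) >= min_baseline:
        cutoff = calibrated_cutoff(baseline_scores)
    else:
        cutoff = default_cutoff  # the pragmatic starting point from the text
    return score < cutoff
```

The same pattern applies to trigram reuse with the comparison reversed, since there a high value is the suspicious one.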

Treat verification as evidence aggregation with a human-in-the-loop escalation path. When automated checks and forensic probes converge, request provenance artifacts and perform a contextual review; when signals diverge, prioritize targeted edits that force specificity (replace “improve observability” with “emit p95 latency and a 128-bit trace ID to /traces”). By combining detectors, watermarking, exact-phrase searches, and author-side verifiable specifics we get a practical, defensible workflow that scales editorially and reduces reliance on any single brittle indicator. In the next section we’ll convert these steps into lightweight tooling you can run as part of your CI/editorial pipeline.