Introduction to LLM-Based AI Chatbots

Large Language Models (LLMs) have revolutionized the landscape of artificial intelligence, giving birth to highly capable AI chatbots that can engage in natural, contextual conversation that often closely resembles human communication. Unlike previous generations of rule-based or pattern-matching bots, modern LLM-based chatbots use deep learning to read, interpret, and generate human-like text. Pioneering architectures such as the Transformer, introduced by Google researchers in 2017, have made it possible to process and generate language at an unprecedented scale.

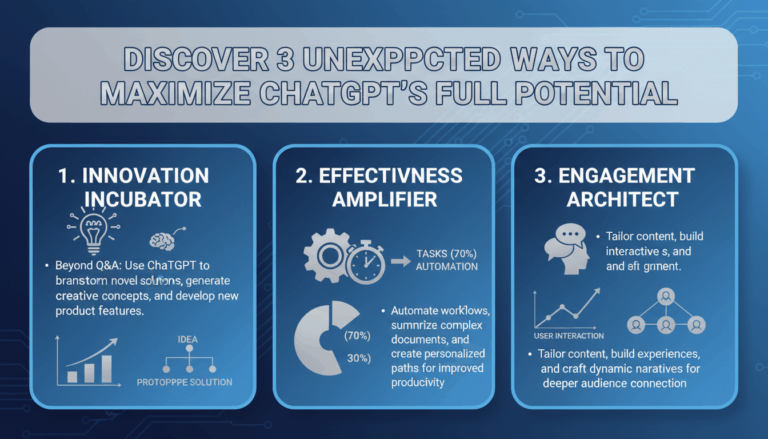

These sophisticated systems are trained on massive datasets encompassing a wide range of topics and writing styles, enabling them to pick up on context, tone, and nuance. The foundation of their success lies in pre-training and fine-tuning: pre-training on diverse internet-scale corpora, followed by fine-tuning on curated instruction, dialogue, or domain-specific data, shapes an LLM’s ability to serve as a versatile conversational agent. As a result, chatbots like OpenAI’s ChatGPT and Google’s Bard (since renamed Gemini) are now adept at answering questions, brainstorming creative ideas, summarizing complex documents, and even tutoring users in various subjects.

To understand how these AI chatbots operate, it is important to recognize the interplay between three core components:

- Natural Language Understanding (NLU): LLM chatbots analyze user inputs to capture intent, sentiment, and contextual clues. For example, when a user asks about the “effects of climate change,” the model identifies the intent to retrieve scientific explanations.

- Dialogue Management: This involves planning and controlling conversation flow. LLM-based bots typically keep track of context by feeding earlier turns back into the model’s input, where the attention mechanism can draw on them, so multi-turn interactions feel coherent and personalized. As highlighted in the Nature review on conversational AI, such bots can sustain complex, multi-step dialogues, although very long conversations remain a challenge.

- Natural Language Generation (NLG): Finally, the chatbot generates responses by repeatedly predicting the next token (a word or word fragment) from a probability distribution conditioned on the context, prior conversation history, and knowledge learned during training. This capability allows them to craft explanations, advice, or stories that are fluent and engaging, though not always accurate.
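The generation loop described in the NLG bullet can be illustrated with a toy model. This is a minimal sketch, assuming a hypothetical, hand-written table of next-token probabilities that conditions only on the previous token; a real LLM computes a distribution over its entire vocabulary from the full context with a neural network. The loop itself (choose a next token, append it, repeat until an end marker) is the part that carries over.

```python
# Hypothetical next-token probabilities, conditioned only on the previous token.
# A real LLM computes a distribution over its whole vocabulary from the full context.
NEXT_TOKEN_PROBS = {
    "<start>": {"climate": 0.6, "weather": 0.4},
    "climate": {"change": 0.9, "<end>": 0.1},
    "weather": {"<end>": 1.0},
    "change": {"warms": 0.7, "<end>": 0.3},
    "warms": {"oceans": 0.8, "<end>": 0.2},
    "oceans": {"<end>": 1.0},
}


def generate(max_tokens=10):
    """Autoregressive loop: repeatedly append the most likely next token (greedy decoding)."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return " ".join(tokens[1:])


print(generate())  # climate change warms oceans
```

Always taking the single most likely token is only one way to decode; sampling-based strategies trade some predictability for variety.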

The real-world value of LLM-based chatbots is underscored by their wide adoption in sectors like customer support, healthcare, and education. For instance, universities and companies are leveraging these systems to power virtual assistants capable of handling thousands of queries daily in a natural, conversational style. As AI research continues to push boundaries, the sophistication of these tools is expected to grow, opening new possibilities for seamless, intelligent human-machine collaboration. For more details on the emergence and impact of LLMs, consider exploring scholarly resources such as ScienceDirect’s overview of language models.

Evolution of Large Language Model Architectures

The architecture of large language models (LLMs) has rapidly evolved, driving forward the capabilities of AI chatbots in both sophistication and utility. This journey from early neural network structures to today’s transformative models is marked by innovation in scalability, training methods, and efficiency. Let’s delve into the major architectural milestones that have shaped the landscape of LLM-based chatbots.

The Transformer Revolution

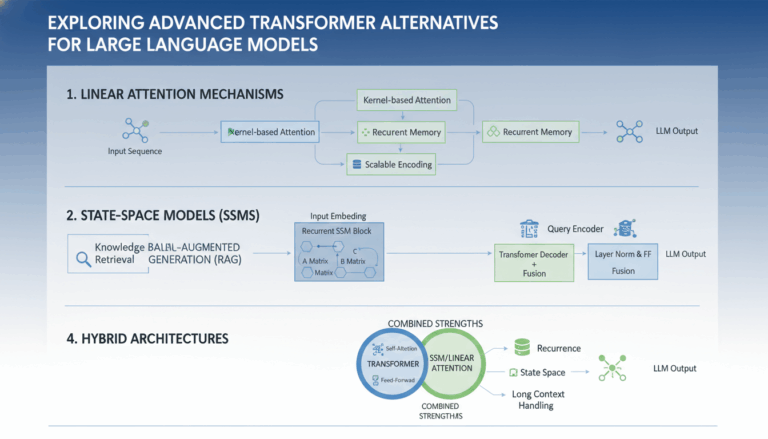

The introduction of the transformer architecture in the seminal paper “Attention Is All You Need” by Vaswani et al. in 2017 marked a decisive turning point. Unlike previous sequence models such as RNNs and LSTMs, transformers leveraged a multi-head self-attention mechanism. This allowed the model to consider relationships between all tokens in a sequence simultaneously, greatly enhancing both parallelization and context understanding.
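The core computation behind self-attention, softmax(Q K^T / sqrt(d)) V, fits in a few lines. The sketch below is a single-head, pure-Python illustration; it assumes the query, key, and value vectors are already given, whereas a real transformer derives them through learned projections and runs several heads in parallel (the "multi-head" part).

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Two identical keys receive equal weight, so the output is the mean of the values.
print(self_attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [4.0, 2.0]]))
# [[3.0, 1.0]]
```

Because every query is scored against every key, the cost grows quadratically with sequence length, which is what sparse attention variants try to reduce.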

With transformers, chatbots became far better at capturing complex sentence meaning, handling long-range dependencies in conversation, and generating coherent, contextually relevant responses. This architecture underpins pretrained models such as Google’s BERT (hundreds of millions of parameters) and paved the way for much larger models with billions of parameters, such as OpenAI’s GPT-3 (175 billion).

The Rise of Foundation Models

Foundation models extend the transformer approach by scaling up in three key dimensions: data, model size, and compute resources. Modern LLMs are trained on vast, diverse datasets using unsupervised and self-supervised learning, giving them broad knowledge across many domains. Notably, models like GPT-3 and PaLM by Google feature tens or even hundreds of billions of parameters.

Such scale brings unique challenges and advantages:

- Pretraining and Fine-tuning: Foundation models are first pretrained on expansive text corpora and then fine-tuned for specific chatbot tasks. This approach allows rapid adaptation to new domains with minimal labeled data.

- Zero-shot and Few-shot Learning: LLMs can perform novel tasks given only an instruction (zero-shot) or a handful of examples in the prompt (few-shot), without additional training, making them highly versatile for real-world applications (Harvard Data Science Review).
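Because few-shot learning happens entirely in the prompt, the technique largely comes down to formatting. A minimal sketch follows; the sentiment task, labels, and layout are illustrative assumptions, and the call to an actual model is omitted. Passing an empty example list turns it into a zero-shot prompt.

```python
def build_few_shot_prompt(examples, query):
    """Format labeled examples followed by the new input; the model is expected to continue the pattern."""
    lines = ["Classify the sentiment of each review as positive or negative.", ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The unanswered final item is what the model completes.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


examples = [("The battery lasts all day.", "positive"), ("It broke after a week.", "negative")]
print(build_few_shot_prompt(examples, "Setup took two minutes."))
```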

Architectural Optimizations and Efficiency Gains

The increasing cost and complexity of training gigantic models have driven research into architectural efficiency. Key advancements include:

- Parameter Sharing: By reusing weights across layers, as seen in models like ALBERT from Google, the number of learnable parameters can be reduced substantially with only a modest loss in performance.

- Sparse Attention Mechanisms: Efficient transformers like Big Bird use sparse attention to handle longer sequences and reduce computation, while Perceiver cuts cost differently, by cross-attending from a small latent array to the input.

- Distillation and Quantization: These techniques shrink models while maintaining performance, making LLMs accessible for smaller-scale use cases and on-device inference (Google AI Blog).
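To make the quantization idea concrete, here is a sketch of symmetric per-tensor int8 quantization, one common scheme among several (per-channel scaling and 4-bit formats are also widely used). Each float is mapped to an integer in [-127, 127] using a single scale factor, so storage drops from 4 bytes (float32) to 1 byte per weight at the cost of a small rounding error.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats; each error is at most scale / 2."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, restored)
```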

Multimodal and Instruction-Tuned Architectures

The next leap in LLM architecture entails integrating multiple data modalities (text, images, audio, and even video), resulting in more versatile and context-aware chatbots. Prominent examples include GPT-4V and DeepMind’s Gato. These models reason across formats, a capability that is valuable for applications like virtual assistants and educational tools.

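Additionally, instruction-tuned models like Stanford’s Alpaca follow user directions more reliably than their base models, thanks to fine-tuning on datasets of example instructions and responses (about 52,000 demonstrations in Alpaca’s case), although instruction tuning alone does not guarantee safety.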

This architectural evolution is at the core of today’s most advanced, reliable, and useful AI chatbots, setting the foundation for future innovations in language understanding and human-AI interaction. For those interested in the technical depth, the LLM architectures guide by Lilian Weng offers further reading.

Key Components and Workflows in LLM-Based Chatbots

Modern LLM-based AI chatbots are built upon layers of sophisticated architecture and well-orchestrated workflows. To demystify how these intelligent systems function, it’s essential to break down their key components and sequential processes, showcasing both the technical underpinnings and practical flows that bring text-based AI to life.

1. Data Ingestion and Preprocessing

The foundation of any large language model (LLM) chatbot is data—vast quantities of text sourced from books, websites, articles, and dialogues. Before the chatbot can learn, its creators must meticulously gather, clean, and structure this data:

- Collection: Teams aggregate millions or billions of words from diverse text corpora, ensuring a wide range of topics and language constructs. For example, the Common Crawl dataset is popular for LLM training.

- Normalization: Text is converted into a consistent format by fixing encoding errors, normalizing Unicode, and stripping markup and unwanted symbols. Capitalization is usually preserved, since modern LLMs are trained on cased text.

- Tokenization: The data is broken into tokens (whole words, subwords, or individual bytes), the units that models read and generate. More on tokenization and its importance can be found in the Google BERT Tokenizer Guide.
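Many LLM tokenizers are built with subword algorithms such as byte-pair encoding (BPE); BERT itself uses the related WordPiece scheme. The sketch below is a toy version of BPE training, assuming whitespace-separated words and no special tokens: it repeatedly merges the most frequent adjacent pair of symbols. Production tokenizers add byte-level fallback, pre-tokenization rules, and much larger merge tables.

```python
from collections import Counter


def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.get) if pairs else None


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(corpus, num_merges):
    # Start from single characters; each word maps to its corpus frequency.
    words = dict(Counter(tuple(w) for w in corpus.split()))
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words


merges, words = learn_bpe("low low low lower lowest", 2)
print(merges)  # [('l', 'o'), ('lo', 'w')]
```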

2. Neural Network Architecture and Training

The transformer, introduced in the seminal paper “Attention Is All You Need”, is the backbone of most LLM architectures. Here’s how this step unfolds:

- Layer Construction: Transformers stack attention and feedforward layers, enabling the model to focus on different parts of a sentence for context-aware understanding.

- Fine-tuning: After pretraining on broad data, the model is often fine-tuned on task-specific datasets, such as customer support dialogs or medical Q&A, to impart niche expertise (Hugging Face Training Guide).

- Supervised & RLHF: Supervised learning uses labeled responses, while Reinforcement Learning from Human Feedback (RLHF) further polishes conversation quality and safety, as discussed in a DeepMind study.
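In RLHF pipelines such as the one OpenAI described for InstructGPT, human labelers compare pairs of responses and a reward model is trained so that the preferred response scores higher. The sketch below shows only that pairwise loss, -log(sigmoid(r_chosen - r_rejected)), with reward scores passed in as plain numbers; the reward network itself and the subsequent policy-optimization step (for example PPO) are not shown.

```python
import math


def pairwise_reward_loss(reward_chosen, reward_rejected):
    """Bradley-Terry style loss: small when the preferred response gets the higher reward."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); split by sign so exp() never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(pairwise_reward_loss(2.0, 0.0))  # correct ranking, low loss
print(pairwise_reward_loss(0.0, 2.0))  # wrong ranking, higher loss
```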

3. Prompt Engineering and Context Management

A chatbot’s ability to generate relevant responses hinges on prompt engineering—the art of framing user input, model instructions, and past conversation in a way the model best understands. Here’s how this works in practice:

- Input Construction: Developer-defined templates or real-time logic decide how prompts are composed, incorporating user queries and relevant past exchanges.

- Context Windows: Every model has a fixed context window (for example, 4,096 tokens in some earlier chat models, while many newer models accept 100,000 or more), so careful design ensures important information persists for coherent conversations. Techniques for managing long context can be found at Microsoft Research.

- Role Instructions: Instructions like “Answer as a helpful medical assistant” are often prefixed to guide the model’s tone and expertise.
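Templated input, a bounded context window, and a role instruction can be combined in a small prompt builder. This is a sketch under stated assumptions: the role/content message format mirrors common chat APIs, and `count_tokens` is a hypothetical stand-in that counts whitespace-separated words rather than real model tokens. Older turns are dropped first when the budget runs out.

```python
def count_tokens(text):
    # Hypothetical stand-in: real systems count with the model's own tokenizer.
    return len(text.split())


def build_messages(system_prompt, history, user_message, max_tokens):
    """Keep the role instruction and newest message, then add as many recent turns as fit."""
    messages = [{"role": "user", "content": user_message}]
    budget = max_tokens - count_tokens(system_prompt) - count_tokens(user_message)
    # Walk history from newest to oldest so the most recent context survives truncation.
    for turn in reversed(history):
        cost = count_tokens(turn["content"])
        if cost > budget:
            break
        messages.insert(0, turn)
        budget -= cost
    return [{"role": "system", "content": system_prompt}] + messages


history = [
    {"role": "user", "content": "I take ibuprofen daily"},
    {"role": "assistant", "content": "Noted, ibuprofen is an NSAID"},
    {"role": "user", "content": "Is that safe long term"},
]
msgs = build_messages("Answer as a helpful medical assistant.", history, "What about side effects?", 16)
```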

4. Response Generation and Output Filtering

With context established, the model generates responses. Modern chatbots rarely return the raw model output as is; they add steps to ensure quality and safety:

- Decoding Strategies: Techniques like beam search, top-k, and nucleus sampling determine which words or phrases the model selects next, balancing variety and coherence (Hugging Face Blog).

- Safety Policies: Output is filtered via explicit rules and additional models trained to detect hate speech, misinformation, or PII, as explained in a Meta AI report.

- Feedback Loops: User reactions and moderator interventions inform continuous improvement, enabling iterative model refinements over time.
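Top-k and nucleus (top-p) sampling can be sketched over a small, hypothetical next-token distribution. The function below keeps the k most likely tokens and/or the smallest set of top tokens whose cumulative probability reaches p, then samples from what remains. Setting `top_k=1` reduces it to greedy decoding; beam search, which tracks several candidate sequences at once, is not shown.

```python
import random


def sample_next_token(probs, top_k=None, top_p=None, rng=random):
    """Pick a token from {token: prob} after optional top-k and nucleus (top-p) filtering."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    if top_p is not None:
        kept, cumulative = [], 0.0
        for token, p in ranked:
            kept.append((token, p))
            cumulative += p
            if cumulative >= top_p:
                break
        ranked = kept
    tokens = [t for t, _ in ranked]
    weights = [p for _, p in ranked]
    # random.choices treats weights as relative, so the kept probabilities need not sum to 1.
    return rng.choices(tokens, weights=weights, k=1)[0]


probs = {"the": 0.5, "a": 0.3, "an": 0.15, "zebra": 0.05}
rng = random.Random(0)
print([sample_next_token(probs, top_p=0.75, rng=rng) for _ in range(5)])
```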

5. Integration with Downstream Systems

For real-world impact, LLM chatbots are tightly integrated into apps, customer support portals, or enterprise platforms:

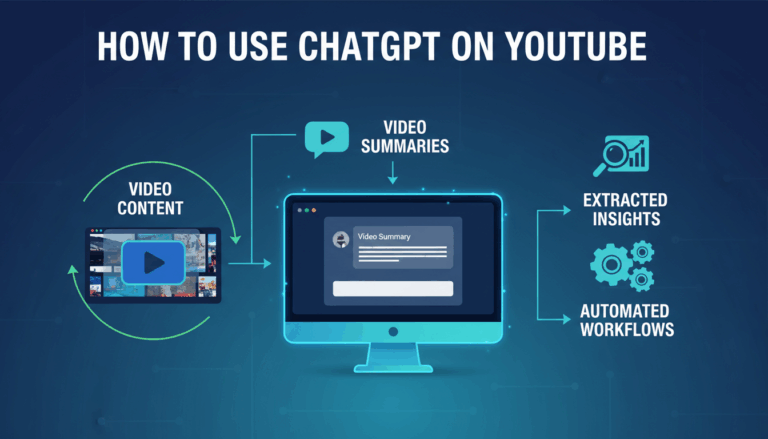

- APIs and Middleware: Models are accessed through APIs, enabling seamless embedding within digital workflows (OpenAI API documentation).

- Plugin Ecosystems: Many systems now support plugins—third-party modules that extend chatbot functionality, such as fetching live data or making bookings.

- Multimodal Capabilities: Advanced workflows allow combining text, image, and even speech interaction, expanding the reach of LLM-powered assistants (Google Research announcement).
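As a rough illustration of API access, the snippet below builds (but does not send) a request for OpenAI’s Chat Completions endpoint using only the Python standard library. The model name is a placeholder and the system prompt is an assumption; field names and available models change over time, so the OpenAI API documentation is the authority.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, user_message, model="MODEL_NAME"):
    """Return an unsent POST request in the Chat Completions message format."""
    payload = {
        "model": model,  # placeholder: substitute a model your account can use
        "messages": [
            # Role instruction assumed for illustration.
            {"role": "system", "content": "You are a concise customer-support assistant."},
            {"role": "user", "content": user_message},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


req = build_chat_request("YOUR_API_KEY", "Where is my order?")
```

Sending it would be a matter of `urllib.request.urlopen(req)`; in the JSON reply, the generated text sits under `choices[0]["message"]["content"]`.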

Each of these components and workflows is a testament to the rapid evolution of AI chatbots. By understanding what goes on under the hood, businesses and technologists can make smarter choices about deploying, customizing, and scaling conversational AI for real-world value.

Core Applications Across Industries

LLM-based AI chatbots have quickly been adopted across a wide variety of industries, meeting the complex needs of organizations through automation, personalization, and enhanced customer engagement. Their widespread adoption is driven by powerful natural language understanding and generation capabilities, making them versatile tools in domains ranging from healthcare to finance. Here’s a detailed look at their core applications across key sectors:

Healthcare

AI chatbots powered by large language models are revolutionizing patient engagement and administrative workflows in healthcare settings. These applications include:

- Patient Triage and Appointment Scheduling: Chatbots can assess patient symptoms through conversational triage, suggest possible conditions, and book appointments, reducing wait times and healthcare professional workloads. For example, conversational agents deployed by Mayo Clinic streamline patient intake processes.

- Medical Information Dispensation: Drawing on extensive medical literature, LLM chatbots can answer patients’ questions and make health information more accessible, although their answers still need clinical oversight. This is particularly relevant in mental health, where chatbots like Woebot provide supportive cognitive behavioral coaching.

- Administrative Support: Chatbots automate paperwork, insurance verification, and follow-up reminders, making hospital operations more efficient.

Banking and Financial Services

Financial institutions use LLM-based AI chatbots to enhance customer service, automate back-office processes, and improve fraud detection:

- Customer Assistance: Interactive bots can manage basic inquiries about account balances, loan applications, and credit card management, all in natural language. For instance, Bank of America’s virtual assistant “Erica” helps millions of users daily.

- Personal Finance Advice: Chatbots can offer tailored financial planning tips, investment advice, or reminders to reduce late fees, drawing on customer-specific data.

- Compliance and Risk Monitoring: AI models detect unusual transactions, automate compliance checks, and generate regulatory reports to support anti-fraud initiatives, as outlined by Deloitte.

E-commerce and Retail

The retail space benefits significantly from AI chatbots that offer scalable, always-on support and personalized shopping experiences:

- Shopping Assistance and Recommendations: Chatbots can analyze browsing and purchase data to recommend products tailored to individual tastes, increasing conversion rates. See how Shopify merchants use AI-powered chat to boost sales.

- Order Management and Tracking: Shoppers interact naturally to check order statuses, initiate refunds, and resolve issues without human intervention.

- Customer Support Automation: LLM-based bots handle FAQs, product info, and troubleshooting, ensuring prompt replies and customer satisfaction even during peak periods.

Education

The educational landscape is being reshaped by LLM-powered chatbots that deliver on-demand learning and personalized tutoring:

- Intelligent Tutoring and Study Support: Bots can explain concepts, quiz students, and offer feedback on essays, catering to individual learning gaps. Research from Stanford Graduate School of Education highlights the role of AI in tailored education.

- Administrative Assistance: Institutional bots can handle student queries regarding registration, course selection, and campus resources.

Enterprise Productivity

Organizations are integrating AI chatbots into their workflows to streamline operations and facilitate knowledge management:

- Internal Knowledge Bases: Employees consult bots to find policy documents, troubleshoot IT issues, or get HR information, reducing support tickets and onboarding times. Harvard Business Review explores how generative AI is improving workplace creativity and efficiency.

- Meeting Scheduling and Project Management: Chatbots can coordinate meetings across teams, send reminders, and assist with project tracking via integration with tools like Slack and Microsoft Teams.

As LLM-based chatbots continue to evolve, industries are discovering more ways to leverage their adaptive intelligence, driving productivity and redefining the standard for digital interaction. Their expanding role underscores the importance of continuous innovation and responsible deployment to unlock their full potential.

Challenges and Limitations Facing LLM Chatbots

Large Language Model (LLM) chatbots have taken center stage in modern AI-driven conversation systems, yet they are not without significant challenges and limitations. Understanding these obstacles is crucial for both developers and organizations adopting this technology. Below, we explore the key issues that practitioners encounter, along with examples and recommended readings for deeper insight.

1. Data Privacy and Security Concerns

LLM chatbots rely heavily on vast datasets, often containing sensitive user information. This creates risks related to data privacy and security:

- Data Leakage: If training data is not carefully filtered, models may inadvertently disclose confidential information. For instance, research has demonstrated that LLMs can sometimes memorize and regurgitate snippets from their training data, posing a risk of personal data exposure.

- Lack of Encryption: Secure encryption during chatbot interaction is essential, but not always enforced, leaving conversations susceptible to eavesdropping. Learn more about secure communications from the UK National Cyber Security Centre.

To address these issues, organizations should implement robust privacy-preserving machine learning techniques and ensure transparent data handling processes.
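Alongside those techniques, a simple first line of defense is redacting obvious identifiers before chat logs are stored or reused. The sketch below uses two illustrative regular expressions (assumptions, not a vetted PII detector); it will miss many forms of personal data and is no substitute for formal privacy-preserving methods.

```python
import re

# Illustrative patterns only; production systems use dedicated PII detection tools.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text):
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com or +1 555 123 4567."))
# Contact [EMAIL] or [PHONE].
```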

2. Hallucination and Misinformation

One particularly problematic limitation is the tendency of LLM chatbots to “hallucinate”—confidently generating plausible but incorrect or fabricated information:

- Example: When asked about recent events or niche topics not in the training data, LLMs may present inaccurate answers as facts. This has far-reaching implications in areas like healthcare, where accuracy is critical. Nature provides a detailed discussion on why AI hallucinations happen and their potential dangers.

- Mitigation Steps: Combining LLM chatbots with retrieval-augmented generation (RAG) or fact-checking systems can help, but there’s still no guaranteed solution.
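The retrieval step of RAG can be sketched without any model at all. In this minimal version, assumed for illustration, documents are ranked by bag-of-words cosine similarity to the question and the best match is pasted into the prompt with an instruction to stay within it; real systems typically use dense embeddings and a vector index, and the final LLM call is omitted.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def build_rag_prompt(query, documents, k=1):
    # Grounding instruction: answer from the retrieved context or admit ignorance.
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return ("Answer using only the context below. If the answer is not there, say you don't know.\n"
            f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")


docs = [
    "Our return window is 30 days from delivery.",
    "Shipping to Canada takes 5 to 7 business days.",
    "Gift cards cannot be refunded.",
]
print(build_rag_prompt("How many days is the return window?", docs))
```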

3. Ethical Bias and Fairness

Bias in training data leads to biased chatbot responses, which can reinforce negative stereotypes or unfairly disadvantage certain groups:

- Example: Studies like those from the Stanford AI Lab indicate that even highly curated datasets can carry subtle prejudices, resulting in biased conversational outputs.

- Addressing Bias: Ongoing efforts include dataset diversification, bias detection algorithms, and human-in-the-loop evaluation. Yet, fully eliminating bias remains a formidable challenge.

4. Context Retention and Understanding

Maintaining coherent long-term conversations is another primary hurdle for LLM chatbots:

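- Short-Term Memory: Every LLM has a fixed context window. It was only a few thousand tokens in many earlier commercial models, and although recent models accept 100,000 tokens or more, anything outside the window is not seen at all. This limits their ability to recall details from earlier in a long conversation thread.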

- Example: In multi-turn dialogues, a chatbot might “forget” previously provided information, disrupting customer experience. Techniques like memory-augmented networks offer some promise—see Google AI Blog’s discussion on memory in chatbots for advancements.

5. Scalability and Resource Demands

Deploying LLM chatbots at scale comes with significant resource requirements:

- Compute Costs: Extensive GPU/TPU requirements and power consumption can make large-scale deployments cost-prohibitive for many firms.

- Solution Examples: Strategic use of smaller, distilled models and methods like on-device inference can offset some costs, but may affect accuracy and capability. Meta AI’s research highlights recent progress in optimizing LLM efficiency.
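Distillation trains a small "student" model to match the softened output distribution of a larger "teacher". The sketch below computes the classic soft-target term from Hinton et al.’s knowledge distillation: the KL divergence between temperature-scaled teacher and student distributions, multiplied by T squared. The logits are plain lists standing in for model outputs, and in practice this term is usually combined with an ordinary loss on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


print(distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # identical outputs, zero loss
print(distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0]))  # mismatched outputs, positive loss
```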

6. Adaptation to Domain-Specific Tasks

General-purpose LLM chatbots often struggle to deliver high performance on specialized or niche domains:

- Customization Challenges: Fine-tuning LLMs on proprietary data can improve relevance but often requires extensive labeled data and ongoing maintenance.

- Example: Industry use cases like legal, healthcare, or engineering frequently require domain adaptation frameworks and expert oversight to minimize risks. The MIT Press’s foundational texts provide guidance on adapting machine learning models for specialized tasks.

In summary, while LLM chatbots represent a powerful evolution in conversational AI, their deployment demands careful attention to privacy, reliability, fairness, context handling, efficiency, and domain adaptation. Addressing these challenges is essential for realizing their full potential and ensuring user trust in AI-powered interactions.

Emerging Trends and Future Directions in LLM AI

As large language model (LLM) based AI chatbots rapidly evolve, several emerging trends and future directions are shaping their development. Staying informed about these innovations is crucial for organizations and developers seeking to harness the full potential of LLMs. Below, we explore some of the most prominent trends and next steps for LLM-powered conversational AI.

1. Multimodal Models and Integration

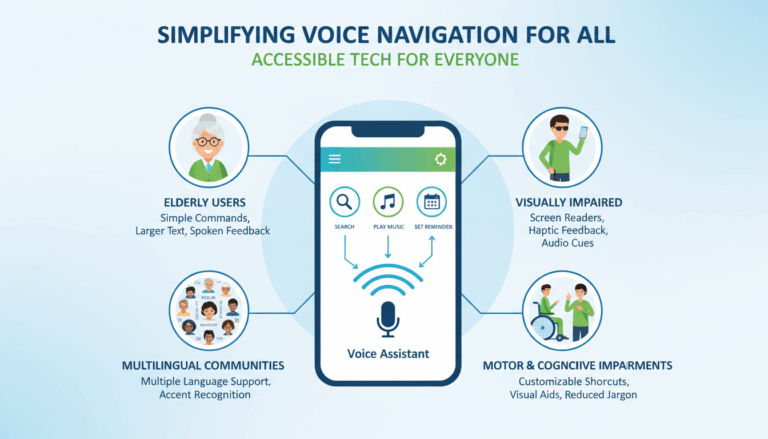

Next-generation LLMs are expanding beyond text-only interactions. Multimodal models such as Google’s Gemini accept text, image, audio, and video input, and Meta’s Llama family added image understanding with its Llama 3.2 vision models. This advancement allows chatbots to interpret images, process spoken language and, in some systems, generate visual content, greatly enhancing user engagement and accessibility.

- Step 1: Integrate APIs to handle various media types, such as image recognition libraries or speech-to-text systems.

- Step 2: Design chat interfaces that allow users to upload or interact with non-textual data.

- Example: A customer support chatbot that can analyze photos of receipts or troubleshoot machinery visually.

2. Personalization and Context Awareness

Future LLMs will increasingly personalize conversations by leveraging user data, context, and conversation history. These improvements help chatbots understand subtle user preferences and deliver tailored responses, leading to higher engagement and satisfaction. Techniques such as reinforcement learning from human feedback (RLHF), which aligns responses with human preferences in general, and memory mechanisms that carry user-specific information across turns or sessions contribute to this evolution.

- Step 1: Implement secure storage for user profiles and interaction history.

- Step 2: Use context windows and memory attention mechanisms to track ongoing conversations.

- Example: Healthcare chatbots offering personalized advice based on a patient’s medical history, as highlighted by Mayo Clinic research.

3. More Transparent and Explainable AI

As LLMs are increasingly deployed in high-stakes environments, the demand for explainable AI (XAI) grows. The goal is to ensure users and stakeholders can understand how chatbot decisions are made, increasing trust and facilitating regulatory compliance. Research published in the Harvard Data Science Review underscores the importance of transparency in AI.

- Step 1: Incorporate attention visualization tools to show which input features influenced a response.

- Step 2: Offer users on-demand explanations or reasoning chains for each answer.

- Example: In legal or financial chatbots, allowing users to request a summary of how specific sources or data led to a decision.

4. Responsible and Ethical AI Deployment

Bias, hallucinations, and malicious use remain significant challenges in LLM-based chatbots. Developers and enterprises are prioritizing ethical AI guidelines, extensive model audits, and robust content moderation protocols. Organizations like the World Economic Forum and academic bodies offer comprehensive frameworks for responsible deployment.

- Step 1: Regularly test responses for bias and retrain models on diverse, representative datasets.

- Step 2: Employ monitoring tools to detect and flag inappropriate content in real time.

- Example: Banking chatbots that screen for discriminatory language or misinformation before responses reach users.

5. Integration with Knowledge Bases and Tools

Emerging LLMs can connect dynamically with external databases and software systems, enabling chatbots to retrieve up-to-date information and perform actions on users’ behalf. Tool use, explored for example in Meta’s Toolformer research, allows LLMs to execute code, access company knowledge bases, and trigger workflows via APIs.

- Step 1: Connect LLMs with real-time search, custom plugins, or function-calling interfaces, such as those OpenAI has offered (its original ChatGPT plugin platform has since been retired).

- Step 2: Leverage retrieval-augmented generation (RAG) architectures to bring in external knowledge for more accurate and current chatbot answers.

- Example: Enterprise AI assistants that schedule meetings, pull CRM reports, or fetch policy documents on demand.
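Tool integration usually works by having the model emit a structured request that surrounding code executes. The sketch below assumes a hypothetical JSON format (`{"name": ..., "arguments": {...}}`) and two stub tools; real function-calling APIs each define their own schema, and production code would validate arguments and require confirmation before taking actions.

```python
import json


# Hypothetical stub tools; a real assistant would call calendar or document-store APIs here.
def schedule_meeting(title, day):
    return f"Scheduled '{title}' on {day}"


def fetch_policy(name):
    return f"Contents of the {name} policy"


TOOLS = {"schedule_meeting": schedule_meeting, "fetch_policy": fetch_policy}


def dispatch(tool_call_json):
    """Run a tool call that the model emitted as JSON, refusing unknown tool names."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call["name"])
    if tool is None:
        return f"Unknown tool: {call['name']}"
    return tool(**call.get("arguments", {}))


print(dispatch('{"name": "schedule_meeting", "arguments": {"title": "Sprint review", "day": "Friday"}}'))
# Scheduled 'Sprint review' on Friday
```

The tool's return value would then be passed back to the model as a new message so it can phrase the final answer for the user.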

Looking Forward

The landscape of LLM-based AI chatbots is rich with innovation. Advancements in multimodal interaction, personalization, explainability, ethical responsibility, and tool connectivity will redefine both user experience and practical capabilities. Those leveraging the latest research and frameworks will remain on the leading edge as conversational AI continues to transform digital interaction.