Introduction to LLM-Based AI Chatbots

In recent years, Large Language Models (LLMs) have revolutionized the field of artificial intelligence, particularly in the development of conversational agents or AI chatbots. LLM-based chatbots leverage vast neural networks, trained on massive datasets, to process, understand, and generate human-like language. These advances have enabled AI chatbots not only to interpret user intent more accurately but also to engage in nuanced dialogue, tailoring responses with unmatched contextual awareness.

At the core of these chatbots is the use of transformer architectures, which excel at modeling relationships in sequences of data. This innovation was first introduced in the landmark paper “Attention Is All You Need” published by researchers from Google (read the paper). LLMs such as OpenAI’s GPT series, Google’s PaLM, and Meta’s LLaMA are built upon these transformer principles, allowing them to understand subtleties in language, manage multi-turn conversations, and continually improve through feedback loops.

Unlike earlier rule-based chatbots, which relied on scripted responses and limited keyword recognition, LLM-powered models use deep learning to grasp semantic meaning, context, and even sentiment. They have shown remarkable ability in tasks such as summarization, translation, creative writing, and code generation. Research led by the Stanford Artificial Intelligence Laboratory confirms that these models frequently outperform traditional approaches across a wide array of benchmarks.

Applications of LLM-based chatbots extend from customer service and healthcare to education and entertainment. Companies such as IBM Watson and Google Dialogflow have integrated LLM technology into their platforms, enabling enterprises to automate interactions, personalize user experience, and analyze conversational data at scale. For example, in healthcare, LLM-based bots can assist patients by providing symptom checks, appointment reminders, and mental health support, with sensitivity and privacy compliance.

However, these capabilities also raise new challenges around bias, misinformation, and ethical usage. Mitigating these risks is an ongoing area of research, as highlighted by organizations such as the Oxford Internet Institute. Understanding how LLMs work, their strengths, limitations, and expanding roles in society, is therefore vital for developers, businesses, and end users alike.

In summary, LLM-based AI chatbots have moved far beyond the constraints of their rule-based predecessors, promising unprecedented conversational intelligence and adaptability. Their growing influence marks an exciting frontier in artificial intelligence, one that continues to evolve as the underlying science and ethical considerations advance.

Core Architecture and Components of LLM Chatbots

The rapid rise of Large Language Model (LLM) chatbots, such as OpenAI’s GPT series and Google’s Gemini, has redefined the way humans and machines interact. To understand what makes these chatbots powerful, it’s crucial to explore their core architecture and the essential components that facilitate their function.

1. The Foundation: Large Language Models

At the heart of AI chatbots are large transformer-based architectures. These models, like GPT-4 and BERT, are pre-trained on a massive corpus of text to learn language patterns, syntax, and semantics. Transformers use self-attention mechanisms, allowing them to weigh the relevance of different input words for better context understanding (Read the foundational paper here). Pre-training equips these models with a deep, contextual understanding of language, which is then fine-tuned for specific chatbot tasks.

2. Model Training and Fine-tuning

While pre-training builds a knowledge foundation, fine-tuning focuses on aligning the model with intended chatbot applications. Training usually happens in two distinct stages:

- Supervised Fine-Tuning (SFT): Here, the model is further trained on a smaller, labeled dataset that’s relevant to chatbot conversations.

- Reinforcement Learning from Human Feedback (RLHF): This technique uses human feedback to iteratively improve responses by rewarding desirable outcomes, as outlined by Hugging Face.

3. Input Processing and Tokenization

Before a chatbot can understand and respond, it must process the user’s input via tokenization. The input message is converted into tokens—smaller, meaning-carrying units. This process ensures the text can be ingested by the LLM efficiently, preserving both meaning and context (see a technical guide here).

4. Context Management and Memory

Modern LLM chatbots maintain context over a conversation, allowing for fluid, multi-turn dialogues. This is achieved by embedding a conversation history or a “window” of recent exchanges in the input, so each subsequent response remains relevant. Advanced models, like those powering enterprise chatbots and assistants, leverage external memory modules to enhance long-term context handling.

5. Response Generation: Decoding Strategies

Generating outputs isn’t just about predicting the next word. Chatbots deploy sophisticated decoding algorithms, such as beam search, sampling, and nucleus sampling, to create coherent, engaging replies. These strategies influence the creativity, diversity, and informativeness of chatbot responses, striking a balance between relevance and naturalness.

6. Safety, Moderation, and Feedback Loops

As conversational AI matures, addressing safety concerns—like harmful or biased outputs—is vital. Industry leaders incorporate moderation frameworks, bias detection systems, and real-time user feedback to continually refine performance and uphold ethical standards (Google AI’s approach to responsible AI). These processes are iterative, leveraging both automated classifiers and human moderators for optimal safety.

7. Integration and Deployment Layers

For practical application, LLM chatbots must interface with various platforms—web, mobile, or enterprise systems. APIs and orchestration pipelines allow seamless deployment and ongoing interaction, with middleware handling session state, authentication, and analytics. This integration ensures the AI chatbot can support real-world needs, from customer support to creative writing, as explained by Microsoft Azure OpenAI Service.

These core components—ranging from the foundational model architecture to context handling and ethical safeguards—collectively shape the state-of-the-art in LLM-based chatbots. As research advances, each element continues to evolve, promising even more dynamic, safe, and effective conversational agents.

Popular Applications Across Industries

AI chatbots powered by large language models (LLMs) are revolutionizing various sectors by providing intelligent automation, seamless customer interaction, and powerful analytics. These conversational agents are becoming ubiquitous, thanks to advancements in natural language processing and deep learning. Here’s an in-depth look at how they’re transforming key industries with real-world applications, use cases, and examples.

Customer Service and Support

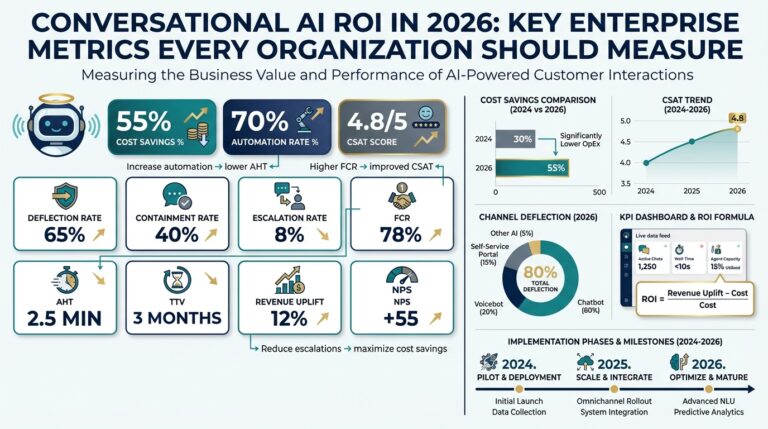

One of the earliest and still most prevalent applications of LLM-based chatbots is in customer service. Businesses across industries leverage chatbots to provide immediate, 24/7 assistance to users. These AI-driven systems can accurately understand and respond to natural language queries, handle multiple requests simultaneously, and escalate complex issues to human agents when needed.

- Banking & Finance: Leading financial institutions use chatbots for tasks such as balance inquiries, transaction histories, and fraud detection. For example, JPMorgan Chase has implemented AI chatbots to streamline operations and reduce costs.

- Retail: E-commerce giants like Amazon use LLM chatbots to assist customers with product recommendations, order tracking, and returns, significantly increasing customer satisfaction and operational efficiency.

Healthcare and Telemedicine

LLM-powered chatbots are increasingly utilized in healthcare for their ability to provide instant support, automate patient engagement, and aid in clinical workflows. These chatbots can perform a variety of functions, such as symptom checking, appointment scheduling, and post-visit follow-ups.

- Symptom Triage: Platforms like Mayo Clinic’s AI health chat offer symptom triage powered by medical knowledge bases, guiding patients toward proper care.

- Mental Health: Apps such as Woebot use LLMs to deliver cognitive-behavioral therapy and ongoing mental health support through conversational engagement.

Education and E-Learning

In the field of education, LLM chatbots are personalizing learning experiences, supporting students worldwide, and optimizing administrative work for educators. Their ability to understand context and adapt responses makes them ideal virtual tutors and teaching assistants.

- Personalized Tutoring: Platforms powered by LLMs like OpenAI can evaluate students’ queries and provide tailored explanations in multiple disciplines, helping to close learning gaps.

- Onboarding and FAQ Automation: Universities such as Oxford leverage chatbots to automate responses to common administrative and academic questions, enhancing the student experience.

Human Resources and Employee Experience

LLM-based chatbots streamline HR operations from recruitment to onboarding and employee engagement. They provide instant answers to HR policies, payroll questions, and benefits administration, reducing the administrative burden on HR staff.

- Recruitment: Automated chatbots can screen resumes, schedule interviews, and answer candidate FAQs, as implemented by companies like IBM.

- Employee Support: Chatbots embedded within intranet portals deliver real-time support for leave requests, benefits selection, and compliance training.

Marketing and Sales Automation

In marketing, LLM-powered chatbots excel at engaging potential customers, qualifying leads, and nurturing prospects through personalized, real-time interactions. They analyze user intent, provide tailored product information, and guide users through the decision journey.

- Lead Qualification: LLM chatbots integrated into digital platforms can identify high-quality leads and segment them for further outreach, as discussed in Harvard Business Review.

- Conversational Commerce: Brands utilize chatbots in platforms like WhatsApp and Facebook Messenger to facilitate guided shopping experiences and instant purchasing.

As industries embrace LLM-based chatbots, the ecosystem expands rapidly—delivering not just efficiency, but fundamentally reshaping how businesses and customers interact. For a deeper understanding of technical mechanisms and real-world case studies, resources such as the McKinsey AI in Business Report and the Comprehensive Survey on Chatbots (arXiv) provide valuable insights.

Techniques for Training and Fine-Tuning LLMs

Training and fine-tuning Large Language Models (LLMs) is a multifaceted process, blending computational rigor with cutting-edge machine learning techniques. The journey from raw data to an operational AI chatbot involves several complex stages, each requiring careful design choices. Below, we explore the primary methodologies, the essential steps, and illustrative examples that underpin this transformative process.

Pretraining: Building the Foundation

LLMs initially undergo a phase known as pretraining, where they learn generalized language patterns from vast text corpora. The core technique here is unsupervised learning, letting the model predict the next word in a sentence based on context. Reputable datasets, such as the Common Crawl and Wikipedia, provide the textual fuel for training, offering comprehensive linguistic exposure (Google AI Blog).

- Transformer Architecture: The transformer framework, introduced by researchers at Google (original paper), underpins nearly all modern LLMs. This architecture enables efficient capturing of long-range dependencies in text through self-attention mechanisms.

- Scalable Parallelism: Training megascale models, such as OpenAI’s GPTs, often employs distributed computing strategies across thousands of GPUs. This parallelism speeds up training but also necessitates rigorous synchronization techniques (MosaicML Guide).

- Masked Language Modeling: For models like BERT, random masking of input tokens teaches the network to infer missing information, boosting its contextual understanding.

Fine-Tuning: Specializing the Model

After pretraining, LLMs can be adapted for specific domains or tasks through a process called fine-tuning. This step involves supervised learning, where the model learns from labeled examples related to its intended application.

- Domain Adaptation: To tailor an LLM for healthcare, for example, developers might fine-tune it on medical literature and patient records, ensuring specialized vocabulary and patterns are learned (Nature Digital Medicine).

- Instruction Tuning: By exposing the model to carefully curated instruction-based datasets, its ability to follow user commands improves significantly (Stanford Alpaca paper).

- Prompt Engineering: While not strictly parameter updating, designing effective prompts dramatically impacts outcomes, steering the model’s behavior without additional training.

Reinforcement Learning from Human Feedback (RLHF)

A breakthrough approach to aligning LLM behavior with human preferences is Reinforcement Learning from Human Feedback. Here, AI responses are evaluated by humans, and their preferences shape the final model via reward optimization.

- Collect Demonstrations: Gather examples of good and bad chatbot responses, judged by expert annotators.

- Train a Reward Model: Model learns to predict which responses are preferred, using labeled examples (OpenAI Research).

- Policy Optimization: Through algorithms like Proximal Policy Optimization (PPO), the model iteratively improves at producing desirable output, directly optimizing for human-like quality.

Transfer Learning and Continual Learning

Modern LLMs continually evolve. Transfer learning allows for repurposing a pretrained base for different downstream applications. Meanwhile, continual learning strategies enable the model to incorporate new knowledge over time without forgetting prior learning (IBM: Transfer Learning).

- Adapters: Lightweight modules called adapters can be inserted into neural architectures, allowing efficient training for various tasks without full retraining (Adapter paper).

- Example: A customer service LLM may be continually updated with the latest product FAQs, ensuring it remains accurate and sensitive to change.

Best Practices and Challenges

Fine-tuning and training LLMs require balancing precision with concerns about bias, data privacy, and resource consumption. Open research and transparent reporting — as seen in Meta’s LLaMA — are vital for pushing the field forward, ensuring new models are ethical, robust, and democratized for positive societal impact.

Performance Evaluation and Benchmarking Methods

Evaluating the performance of Large Language Model (LLM) based AI chatbots is a nuanced process that requires a blend of quantitative benchmarks, real-world simulations, and user-centric assessments. Accurate performance evaluation and benchmarking form the backbone of comparing various architectures, identifying suitable deployment scenarios, and understanding the evolving capabilities of AI-driven conversational agents. Below, we explore the prominent methods, detailing their implementation and value.

Quantitative Metrics and Benchmarks

Quantitative evaluation relies on standardized datasets and well-defined metrics to gauge chatbot proficiency across tasks. Common benchmarks for LLM-based chatbots include:

- Language Understanding: Datasets like GLUE and SuperGLUE measure a model’s ability to comprehend context, reason linguistically, and adhere to prompt instructions.

- Dialogue Quality: Tasks such as next utterance prediction or conversation continuation are assessed using benchmarks like ConvAI and DSTC (Dialog State Tracking Challenges). These measure fluency, relevance, and goal completion rates.

- Automated Metrics: BLEU, ROUGE, and METEOR provide numeric scores for response quality, though their correlation with human judgment remains an area of active research (source).

Researchers should be mindful that benchmarks must be regularly updated to reflect evolving user expectations and the rapid pace of AI development.

Human Evaluation and User Studies

While automated metrics provide a baseline, human-in-the-loop evaluations are indispensable for assessing real-world usability, satisfaction, and trustworthiness. Typical steps in conducting user studies include:

- Designing tasks that mirror authentic conversational goals—such as customer support or information retrieval.

- Recruiting a diverse pool of evaluators to mitigate bias and capture a broad range of perspectives.

- Rating chatbot responses on dimensions like coherence, friendliness, informativeness, and safety. Techniques such as A/B testing or blind comparison are commonly used (see Google AI Blog).

Human feedback is pivotal for fine-tuning and iterative improvement, often revealing critical flaws missed by purely automated tools.

Robustness, Safety, and Ethical Considerations

Performance evaluation also encompasses robustness to adversarial prompts, resistance to generating toxic or biased content, and adherence to ethical guidelines. Researchers employ stress-testing frameworks that:

- Introduce malformed or malicious queries to assess chatbot resilience (source).

- Leverage bias and toxicity detection benchmarks, such as Perspective API or the ToxiGen dataset.

Comprehensive safety audits are now a prerequisite for LLM chatbot deployment, particularly in critical sectors like healthcare and education.

Real-Time and Longitudinal Deployment Testing

Evaluating chatbot performance in live operational environments is crucial. Approaches typically include:

- Real-time analytics: Tracking metrics such as latency, error rate, and user retention in production.

- Longitudinal studies: Monitoring chatbot performance over weeks or months to detect concept drift and ensure sustained user satisfaction (read more).

These insights guide ongoing fine-tuning and model updates, aligning chatbot behavior more closely with evolving user needs.

Emerging Trends in Benchmarking

With LLMs progressively powering multimodal and multi-domain chatbots, the benchmarking landscape continues to evolve. Researchers are actively exploring:

- New metrics for contextual understanding, emotional intelligence, and cross-lingual capabilities.

- Community-driven benchmarking platforms like Dynabench, which gather dynamic and adversarial user inputs to expose model weaknesses in real-time.

Integrating these innovative evaluation strategies ensures that LLM-based chatbots not only achieve technical excellence but also deliver meaningful, responsible, and user-aligned outcomes in real-world applications.

Current Challenges and Limitations

Large Language Model (LLM)-based AI chatbots, while groundbreaking in their ability to understand and generate human-like text, face several challenges and limitations that impact their effectiveness, scalability, and trustworthiness. Understanding these hurdles is essential for both practitioners and businesses aiming to integrate chatbots into their operations. Below, we explore the most significant obstacles and illustrate why they matter in real-world applications.

1. Data Privacy and Security

LLMs require vast amounts of data to be trained, often utilizing text scraped from the internet or obtained from user interactions. This raises concerns about privacy and the risk of exposing sensitive information. Incidents like inadvertent data leaks or unauthorized access have spotlighted the potential dangers of poorly managed data. For example, researchers from the Harvard highlight how critical data governance is in machine learning systems. Additionally, security vulnerabilities can allow attackers to manipulate outputs or gain access to private conversations, leading to potential legal and reputational repercussions for deploying organizations.

2. Bias and Fairness

Despite advancements, LLMs often inherit biases present in their training datasets, which can result in outputs that are prejudiced or discriminatory. This issue is particularly pressing in fields like recruitment or healthcare, where fairness is paramount. For example, a 2023 study by the Stanford Institute for Human-Centered Artificial Intelligence found that LLMs may amplify social stereotypes, potentially affecting decision-making processes. Addressing bias demands improved dataset curation, constant monitoring, and the development of bias-mitigation algorithms, all of which are resource-intensive endeavors.

3. Hallucination and Factual Inaccuracy

One major challenge facing LLM-based chatbots is their propensity to “hallucinate”—confidently generating plausible-sounding but false or misleading information. This problem is not limited to trivial details; even critical facts can be misreported. For instance, a chatbot might generate incorrect medical advice that could endanger users. A comprehensive analysis by Nature details several cases where LLMs hallucinated facts or fabricated references. Such behavior undermines trust in chatbot interactions and limits their use in sensitive domains.

4. Context Limitation and Memory Constraints

LLM chatbots struggle with maintaining context during long, multi-turn conversations. Although models have drastically improved, they can still lose track of previous exchanges, repeating questions or contradicting themselves. For example, while some models leverage extended context windows, much work remains in developing strategies for persistent long-term memory, as researched by DeepMind. This limitation becomes apparent in applications like customer support, where continuity and personalized interaction are critical.

5. Computational Cost and Environmental Impact

Training and running LLM chatbots require significant computational resources, often necessitating specialized hardware and considerable energy consumption. The environmental footprint of large-scale AI systems is increasingly under scrutiny. According to MIT, the carbon emission from training a single large model can rival that of several cars over their lifetimes. This makes it essential for organizations to weigh the trade-offs between model complexity, deployment size, and sustainability.

6. Lack of Explainability and Transparency

Often referred to as “black boxes,” LLMs provide little insight into their decision-making processes, making it difficult to explain or justify outputs. This limitation poses issues in regulated industries where explainability is required by law, such as finance or medicine. Initiatives from the National Institute of Standards and Technology (NIST) emphasize the imperative for transparent and explainable AI solutions. Until explainability improves, it remains challenging to fully trust or audit chatbot recommendations, especially when human oversight is crucial.

Overcoming these significant challenges demands multidisciplinary collaboration, continuous research, robust engineering, and responsible governance. By acknowledging and addressing these issues, the potential of LLM-based AI chatbots can be unlocked in a manner that is safe, ethical, and effective for real-world applications.

Emerging Trends and Future Directions

As the development of Large Language Model (LLM) based AI chatbots accelerates, new trends are emerging that will profoundly impact their architecture, capabilities, and applications. These promising advancements open up transformative opportunities, but also introduce complex challenges. Below, we explore these trends and offer insight into where the future of LLM-based chatbots is heading.

Multimodal Capabilities: Bridging Text, Image, and Beyond

Modern chatbots are rapidly evolving from processing only text to integrating multiple modalities like images, audio, and even video. This enables more interactive and context-aware experiences. For example, OpenAI’s GPT-4 has demonstrated the ability to interpret both text and images, enhancing its usefulness in fields such as education, customer service, and healthcare. In the future, expect seamless integration with sensors, augmented and virtual reality platforms, and IoT devices, making conversational AI significantly more embedded in everyday life.

Personalization at Scale

Personalization is becoming the cornerstone of next-generation chatbots. With advancements in fine-tuning and prompt engineering, AI chatbots can now tailor responses based on user behavior, preferences, and even emotions. This is particularly valuable in sectors such as healthcare and finance, where individualized recommendations or support can improve outcomes and satisfaction. Techniques such as active learning and user embedding are playing a pivotal role in this high-level customization (Nature.com).

Federated and Privacy-Preserving Learning

As concerns about data privacy and security intensify, emerging trends are focusing on decentralized training methods, such as federated learning. This approach enables AI models to learn from data without it leaving the user’s device, significantly reducing privacy risks. Expect more chatbots to offer strong guarantees regarding user data security, adhering to regulations like GDPR, and employing advanced cryptographic techniques for secure on-device inference.

Interpretable and Explainable AI

Transparency in AI systems is critical, especially as chatbots are deployed in sensitive areas such as healthcare, legal, and financial sectors. Explainability helps users and regulators understand why a chatbot made certain recommendations. Techniques such as attention visualization, saliency maps, and post-hoc interpretability methods are being actively researched (NIST AI Risk Management Framework). Future chatbots are likely to come equipped with built-in tools that enable users to trace back the logic and data behind AI decisions.

Towards Autonomous Agents and Self-Improving Systems

Beyond following predefined scripts, the frontier is moving towards autonomous agents capable of complex tasks, self-refinement, and even collaborative problem-solving with humans. Systems such as DeepMind’s generalist agents represent an early step in this direction. Such agents will have the capacity to independently gather information, verify its validity, and perform continuous self-improvement over time, drastically reducing the need for human intervention in mundane query handling.

Sustainability and Responsible AI

The environmental impact of training and running LLMs cannot be overlooked. Cutting-edge work is now focusing on reducing the carbon footprint of LLM development through methods like model compression, efficient inference techniques, and utilizing renewable energy sources (MIT Technology Review). Ethical considerations, such as avoiding biases and ensuring inclusivity, are becoming foundational in model training and deployment strategies.

Integration with Knowledge Graphs and External Databases

To surpass limitations of static memory and reinforce factual accuracy, chatbots are increasingly being paired with external knowledge graphs and real-time databases. By leveraging structured data, these systems can deliver up-to-date and contextually accurate responses, even in rapidly changing domains such as news or scientific research (Google Knowledge Graph). The combination of LLMs with symbolic reasoning is also an exciting area of active research.

In summary, LLM-based chatbots are on the cusp of a new era characterized by richer interactivity, robust privacy, enhanced interpretability, and sustainable operation. Organizations embracing these trends will be better positioned to deliver transformative, trustworthy AI solutions to users worldwide.