Understanding the Rise of Prompt-Based Interfaces

The proliferation of large language models (LLMs) has radically transformed how we interact with artificial intelligence. At the forefront of this revolution lies the rise of prompt-based interfaces, which have quickly shifted from an insider tool for developers to a mainstream feature adopted by everyday users. Understanding this evolution offers invaluable insights into the future of digital product design, as it signals an important shift in user experience (UX) and digital literacy.

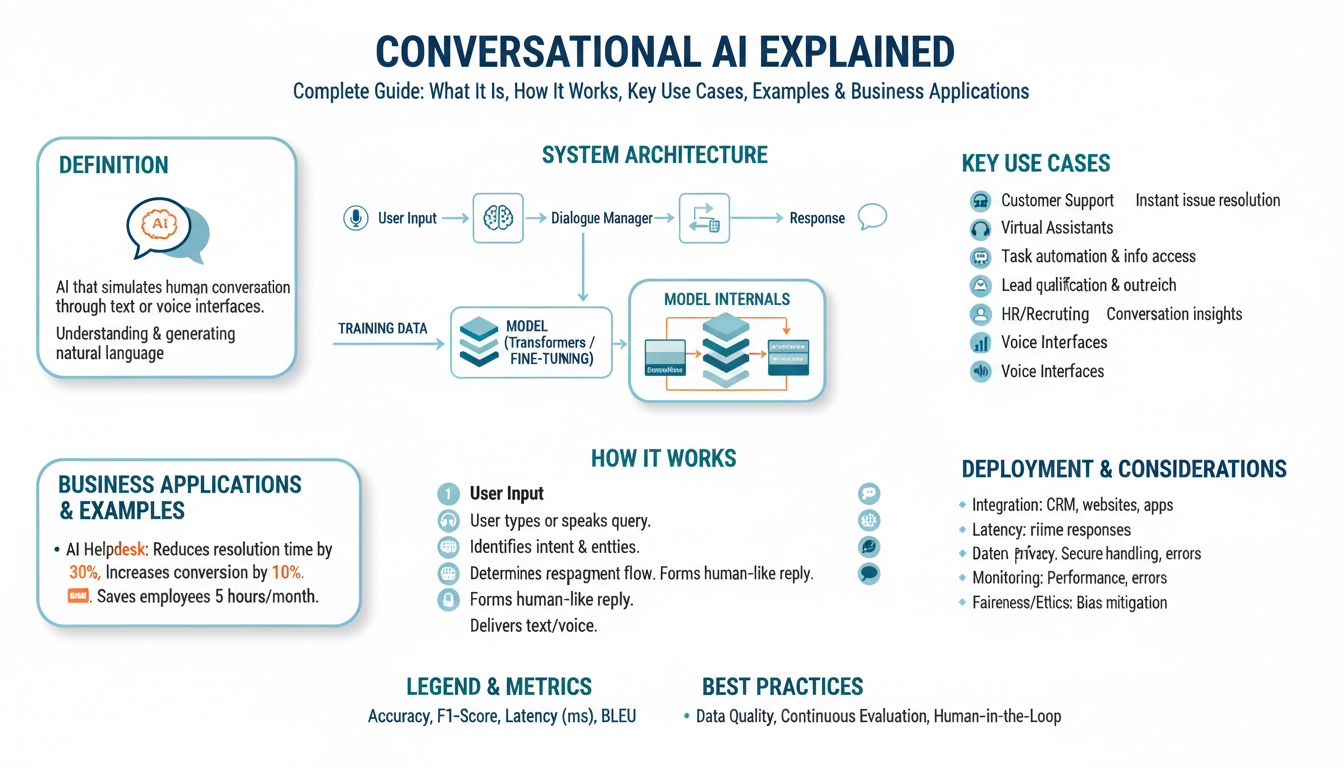

Prompt-based interfaces are essentially systems where users type or say natural language commands and queries, and the AI responds with contextually relevant answers or actions. These interfaces have gained enormous popularity due to their simplicity and flexibility. Platforms like ChatGPT and Google Gemini (formerly Bard) allow users to generate text, write code, brainstorm ideas, and more, all through natural conversation rather than rigid menu structures or command-line syntax.

Several factors contribute to the rise of these interfaces:

- Accessibility: Unlike traditional interfaces that require learning specific commands or navigating complicated menus, prompt-based systems leverage common language, making them more approachable. Users can simply say what they want, lowering the barrier to entry. For example, anyone can ask ChatGPT to draft an email, regardless of their technical skills.

- Versatility: Prompting is not confined to a single function or domain. Whether composing music, designing workflows, or summarizing articles, the open-ended nature of prompts means that the same interface can serve many different needs. This broad applicability is explored in research such as “Prompt Programming for Large Language Models: Beyond the Few-Shot Paradigm” by Laria Reynolds and Kyle McDonell.

- Personalization: By framing inputs in natural language, users can inject personal context, preferences, or even humor directly into prompts. LLMs can pick up on these cues and tailor their responses, which can boost user satisfaction and engagement.

Yet prompt-based UX also raises critical questions. While it democratizes access, it assumes users can articulate their intents effectively, which can create invisible barriers for some. Furthermore, not all tasks are equally suited to freeform text entry, especially those requiring precision or structured outcomes. Writing in MIT’s Journal of Design and Science, researchers have discussed both the opportunities and challenges of designing interfaces for AI systems, highlighting the need for smart scaffolding and guidance within prompt-driven workflows.

Prompt-based interfaces are only the beginning. Their rapid adoption signals a broader movement toward more intuitive, conversational, and user-driven technologies. As more apps integrate LLMs and conversational AI, understanding the fundamentals of prompt-based UX will be critical for designers, developers, and users alike. With careful thought, these systems can become not just more powerful, but also more inclusive and effective tools in our daily digital lives.

What Does ‘AI-Native UX’ Truly Mean?

When discussing AI-native user experiences, it’s important to move beyond the buzzwords and clarify what this concept truly encompasses. At its core, an AI-native UX is more than just layering prompts over a language model; it represents an intentional, thoughtful design approach that puts the user’s needs, habits, and context at the center of every interaction with artificial intelligence.

To truly understand AI-native UX, consider how technology evolves alongside user expectations. Early graphical user interfaces (GUIs) transformed computing by making digital environments intuitive and visual. In a similar way, AI-native UX aims to harness the unique capabilities of modern AI to craft interfaces that feel effortless, anticipatory, and tailored to individual users. This means treating AI as a foundational component of the design rather than a feature added on top.

Broadly, an AI-native UX must excel in the following areas:

- Contextual Awareness: Unlike static interfaces, AI-native experiences leverage user data, preferences, and situational cues to deliver hyper-personalized responses. For example, Google Assistant can interpret commands differently based on location, recent activity, and ongoing tasks—making experiences more relevant and efficient.

- Multimodal Interactions: AI-native products often combine text, voice, image, and even gesture-based inputs to meet users where they are. Take ChatGPT’s voice capabilities as an example: users can speak to the assistant and hear spoken replies, which keeps the conversation going in hands-free scenarios. This flexibility increases accessibility and engagement.

- Transparency and Trust: As AI systems become more integral to our lives, users demand transparency: clear explanations of how decisions are made, and why. This is underscored by ethical frameworks such as Google’s Responsible AI Practices, which guide builders toward systems that are not just powerful, but fair and trustworthy.

- Proactive Assistance: One hallmark of a genuine AI-native UX is its ability to proactively surface information, reminders, or suggestions at the right moment—sometimes before a user even realizes they need it. For instance, Microsoft’s Copilot anticipates workflow needs, offering autocomplete options and suggestions directly within productivity apps.

This approach to UX is not merely about making AI seem humanlike; it’s about empowering users to do more, faster, and with less effort. Designers must consider the entire user journey, integrating context, intent, and feedback loops to ensure the experience feels seamless and supportive. As industry leaders like Nielsen Norman Group point out, successful AI-native products meld sophisticated machine intelligence with intuitive, user-centered design—pushing the boundaries of what digital experiences can achieve.

Ultimately, an AI-native UX is defined by its ability to interpret, adapt, and respond—not just to explicit queries, but to the evolving context of the user. This represents a massive shift from prompt-driven interfaces to those built around the full spectrum of human-computer interaction. Getting there requires a deep understanding of both human needs and machine capabilities, setting a new gold standard for digital products powered by AI.

The Limitations of LLM-First Product Designs

Despite the remarkable abilities of Large Language Models (LLMs), relying solely on LLM-driven prompting as the foundation for digital products comes with a host of limitations. While LLMs like GPT-4 can understand natural language, generate coherent text, and carry out a variety of tasks, making prompts the centerpiece of product design often leads to lackluster results—both for users and organizations striving for true AI-native UX.

One of the primary drawbacks is that LLMs, by design, are language processing engines—not end-to-end user experience architects. When LLMs are used as the main interface—where users must articulate their needs as prompts—several challenges quickly emerge:

- Cognitive Overload for Users: Natural language interfaces may seem intuitively easier, but they often require users to be precise, explicit, and structured in their queries. This can confuse users unfamiliar with prompt engineering or with the boundaries of what the AI can and cannot do. By contrast, graphical interfaces that guide users with contextual suggestions, step-by-step workflows, or visual cues can dramatically reduce friction, as explored by experts at Nielsen Norman Group.

- Lack of Structured Interactions: LLMs excel at unstructured tasks—like answering open-ended questions—but struggle with multi-step, stateful workflows. For example, in a finance application, guiding users through a sequence of data entry, validation, and confirmation is best handled by a purpose-built UX, not just chat prompts. This issue is discussed in research by MIT’s Computer Science and Artificial Intelligence Laboratory.

- Poor Error Handling and Feedback: With LLM-first designs, errors and misunderstandings are harder to diagnose. Was it a misphrased prompt, a misunderstood intent, or an AI limitation? Traditional UX patterns offer redundancy and guidance—like tooltips, inline validation, and undo actions—that conversational AI lacks without additional layers.

- Limited Discoverability: Well-designed products surface features through thoughtful navigation and affordances. If the only avenue is a prompt box, users may never discover the full range of capabilities unless they happen to ask. Companies like Intercom have noted the importance of blending LLMs with explicit menu-driven designs to maximize user empowerment.

Consider the process of reserving a table at a restaurant. A dedicated booking app can visualize availability on a calendar, automatically suggest optimal times, and walk the user through preferences (e.g., outdoor seating, dietary requests). An LLM-powered prompt interface would require the user to articulate all variables—date, time, size of party, special requests—correctly in one go, often resulting in back-and-forth clarification.
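To make the contrast concrete, here is a minimal Python sketch of how a product might track the details a booking form would collect and ask only for what is still missing, one question at a time. The `BookingRequest` fields, the `REQUIRED` tuple, and the question wording are hypothetical; in a real product the fields might be filled by form controls, by an LLM-based parser, or by both.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical booking request: the fields a structured booking form would collect.
@dataclass
class BookingRequest:
    date: Optional[str] = None              # e.g. "2024-06-14"
    time: Optional[str] = None              # e.g. "19:30"
    party_size: Optional[int] = None
    special_requests: Optional[str] = None  # optional; never blocks the booking

REQUIRED = ("date", "time", "party_size")

def missing_fields(req: BookingRequest) -> list[str]:
    """Return the required fields the user has not supplied yet."""
    return [name for name in REQUIRED if getattr(req, name) is None]

def next_prompt(req: BookingRequest) -> str:
    """Ask for one missing detail at a time instead of demanding everything up front."""
    questions = {
        "date": "Which day would you like to book?",
        "time": "What time works for you?",
        "party_size": "How many people will be dining?",
    }
    missing = missing_fields(req)
    if not missing:
        # Everything is known: confirm instead of asking again.
        return f"Booking a table for {req.party_size} on {req.date} at {req.time}. Confirm?"
    return questions[missing[0]]
```

For example, if the user has only said which day they want, `next_prompt(BookingRequest(date="2024-06-14"))` asks for the time next. The product, not the user, carries the burden of remembering which variables are still open.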

True AI-native products use LLMs not as the surface layer, but as enablers behind the scenes. The best interfaces blend generative AI with familiar, structured elements (drag-and-drop, autofill, visual feedback) to keep users in control while benefiting from AI’s flexibility. As Fast Company observes, the future of AI-powered experiences hinges on striking a thoughtful balance between machine intelligence and human intuition.

Prompting vs. Product: Identifying the Gaps

As AI technologies like large language models (LLMs) become more sophisticated, many organizations are eager to leverage text-based prompting as the engine of new digital experiences. However, the assumption that a powerful LLM alone can deliver a satisfying product reveals significant gaps in understanding what users actually need. The complexity lies in the distinction between simply providing access to AI and designing a coherent, purposeful product experience built around it.

Prompting, at its core, offers a way to interact with LLMs: users type instructions and receive often impressive results. Yet, prompting alone makes heavy cognitive demands on users—it assumes they know what to ask, how to phrase queries, and how to interpret the often-verbose, unpredictable responses. This limits accessibility and often fails to address usability and discoverability challenges inherent in user-centric product design. Research from the Stanford HCI Group highlights how prompt-based interactions can lead to user frustration due to ambiguity, lack of guidance, and inconsistency in outputs.

In contrast, mature products are characterized by designed pathways, reusable workflows, and intuitive interfaces that anticipate user needs. They reduce friction by surfacing context, offering suggestions, and allowing users to seamlessly complete tasks without deep technical knowledge. For instance, consider how GPT-4 is integrated into platforms like Microsoft Copilot. The product abstracts away direct prompt engineering by providing contextual widgets, templates, and guardrails, enabling users to accomplish goals such as drafting emails or analyzing data with minimal effort or expertise.

Identifying the gaps between prompting and true product design involves several dimensions:

- Contextualization: Unlike a typical LLM prompt, a product often maintains user context—recent actions, preferences, and ongoing tasks. This means results are tailored, reducing repetitive input and making experiences feel cohesive (Nielsen Norman Group: Contextual Design).

- Guidance and Scaffolding: Effective products lead users through tasks with clear UI patterns, tooltips, and guardrails to help avoid errors. For example, a design tool with AI-powered suggestions shows possible next steps or auto-fixes, rather than expecting users to know what’s possible via prompting.

- Actionability: Products transform raw AI output into actionable features—buttons to trigger the next step, visualizations for interpretation, or API connections for automation. LLMs, on their own, output static text; products turn insight into workflows and results fully integrated with the user’s environment (MIT Innovation: AI UX Principles).

- Reliability and Consistency: Products must deliver predictable outcomes. Because LLMs are probabilistic, AI responses can be erratic without product overlays such as fallback routines, validation, or user feedback loops, which undermines user trust and utility (Nature: Why Language Models Are Unreliable).
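The reliability overlay described above can be sketched in a few lines of Python. Here a stand-in `generate` callable represents any LLM call (no specific provider API is assumed); the product layer parses the output as JSON, checks it against an expected shape, retries a bounded number of times, and otherwise returns a deterministic fallback the UI can always render. The `summary`/`next_steps` contract is a hypothetical example.

```python
import json
from typing import Callable

# Hypothetical output contract: the model must return JSON with these keys and types.
REQUIRED_KEYS = {"summary": str, "next_steps": list}

def is_valid(payload: object) -> bool:
    """Check that the parsed output matches the expected shape."""
    return isinstance(payload, dict) and all(
        isinstance(payload.get(key), expected) for key, expected in REQUIRED_KEYS.items()
    )

def reliable_call(generate: Callable[[], str], max_attempts: int = 3) -> dict:
    """Call a model, validate its output, retry, then fall back to a safe default."""
    for _ in range(max_attempts):
        raw = generate()
        try:
            payload = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed JSON: try again
        if is_valid(payload):
            return payload
    # Deterministic fallback: always renderable, and honest about the failure.
    return {
        "summary": "Sorry, I couldn't produce a reliable answer.",
        "next_steps": ["Rephrase the request"],
    }
```

The design choice is that the UI only ever receives one of two well-formed shapes, so buttons and visualizations built on `next_steps` never have to handle free-form surprises.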

In essence, AI-native product design must go beyond exposing an LLM behind a text box. It requires thoughtful orchestration of context, guidance, action, and reliability, ensuring that AI’s power is filtered, focused, and embedded within experiences users actually want to repeat. By minding these gaps, designers and builders can transform impressive underlying technology into truly valuable, robust, and engaging products.

Why Contextual Awareness Matters for AI Applications

One of the core elements missing in many AI-enhanced applications today is robust contextual awareness. Context extends far beyond just understanding the last few lines of a conversation. It’s about capturing the user’s intent, preferences, ongoing workflow, and even the digital or physical environment in which tasks are performed. LLMs, though powerful in language understanding, often falter when nuanced, real-world context is a prerequisite for intelligent behavior.

Many AI-powered apps default to static prompting strategies: they pass the most recent text or data to a language model and await a clever-sounding answer. However, this approach misses the subtlety required in dynamic, real-world usage. For instance, if you’re using an AI writing assistant embedded in a project management tool, the ideal system should understand not just what you typed, but also which project you’re in, what deadlines loom, and what decisions were made previously. As researchers at DeepMind note, context crucially shapes both model behavior and the relevance of its output.

Why is contextual awareness so important? Imagine an AI that schedules your meetings but ignores the travel time between locations or your lunch breaks. Such a tool would quickly become an annoyance rather than an assistant. Contrast that with an AI that integrates your calendar, email context, and even local traffic data, making recommendations that fit into your full day. As Harvard Business Review points out, the best AI solutions excel because of their deep integration with contextual signals, not just their raw language capabilities.
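The scheduling example can be made concrete with a small Python sketch: a function that finds the earliest meeting slot while padding existing events with travel time and keeping lunch free. The single fixed `travel` buffer and the `lunch` window are simplifying assumptions; a context-aware assistant would estimate travel per location, for example from traffic data.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

Interval = Tuple[datetime, datetime]

def find_slot(
    busy: List[Interval],
    day_start: datetime,
    day_end: datetime,
    duration: timedelta,
    travel: timedelta,
    lunch: Interval,
) -> Optional[datetime]:
    """Return the earliest meeting start that respects travel time and lunch.

    Existing events are padded by `travel` on both sides; `lunch` is blocked as-is.
    Returns None if no gap of `duration` fits before `day_end`.
    """
    blocked = sorted([(s - travel, e + travel) for s, e in busy] + [lunch])
    candidate = day_start
    for start, end in blocked:
        if candidate + duration <= start:
            break  # the meeting fits in the gap before this blocked interval
        candidate = max(candidate, end)  # otherwise jump past the blocked time
    return candidate if candidate + duration <= day_end else None
```

With a 9:00 to 10:00 event, a 30-minute travel buffer, and lunch from 12:00 to 13:00, a one-hour meeting lands at 10:30, while a two-hour meeting is pushed past lunch to 13:00. A context-blind scheduler would happily have offered 10:00.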

- Step 1: Capture multi-dimensional context. This might include workspace metadata, user roles, external data feeds, prior user actions, and more. For example, apps like Notion and Slack enrich their AI features by pulling in context from multiple sources, far beyond what LLMs alone can infer.

- Step 2: Maintain persistent context across sessions. Instead of forgetting everything between interactions, context-aware AI should seamlessly pick up where you left off. This is especially crucial for long-term projects or research workflows.

- Step 3: Anticipate needs proactively. By understanding ongoing context, AI can suggest actions before users even realize they need them, akin to intelligent notifications or next-step recommendations. Google’s contextual AI research demonstrates how anticipating user needs is becoming a new frontier in user experience.
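The three steps above can be sketched together in Python. This is a minimal illustration under stated assumptions: `UserContext`, `ContextStore`, and the `NEXT_STEP` rule table are hypothetical names, a plain dict stands in for whatever database or key-value store a product would actually use, and a real system would draw context from many more sources.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List, MutableMapping, Optional

@dataclass
class UserContext:
    """Hypothetical context record combining several signals (Step 1)."""
    user_id: str
    role: str = "member"
    current_project: Optional[str] = None
    recent_actions: List[str] = field(default_factory=list)

class ContextStore:
    """Persist context between sessions (Step 2).

    `backend` is any str-to-str mapping; a plain dict stands in here for a
    database or key-value store.
    """
    def __init__(self, backend: MutableMapping[str, str]):
        self.backend = backend

    def load(self, user_id: str) -> UserContext:
        raw = self.backend.get(user_id)
        return UserContext(**json.loads(raw)) if raw else UserContext(user_id=user_id)

    def save(self, ctx: UserContext) -> None:
        self.backend[ctx.user_id] = json.dumps(asdict(ctx))

def record_action(ctx: UserContext, action: str, keep: int = 5) -> None:
    """Append a recent action, keeping only the last `keep` entries."""
    ctx.recent_actions = (ctx.recent_actions + [action])[-keep:]

def build_prompt(ctx: UserContext, request: str) -> str:
    """Prefix the request with stored context so the model sees more than one message."""
    return (
        f"Role: {ctx.role}\n"
        f"Project: {ctx.current_project or 'none'}\n"
        f"Recent actions: {', '.join(ctx.recent_actions) or 'none'}\n"
        f"Request: {request}"
    )

# Hypothetical rule table for Step 3: a recent action hints at a likely next step.
NEXT_STEP: Dict[str, str] = {"drafted report": "Share the report with your team?"}

def suggest_next(ctx: UserContext) -> Optional[str]:
    """Proactively suggest a follow-up based on the most recent action, if any."""
    return NEXT_STEP.get(ctx.recent_actions[-1]) if ctx.recent_actions else None
```

Because the store survives between sessions, a user who drafted a report yesterday can be greeted today with the project already selected and a relevant next step on offer, without restating anything in the prompt.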

Ultimately, context isn’t just extra data—it’s the linchpin in making AI intuitive, trustworthy, and truly helpful. As AI-native products evolve, those that embrace deeper, more adaptive contextual awareness will set themselves apart, transforming static prompts into dynamic, personalized experiences.

Integrating Multi-Modal Interactions for Better User Experience

One of the most significant shifts in AI-native user experience (UX) design is the move from simple text-based Large Language Model (LLM) interfaces toward genuinely multi-modal systems. While conversational AI driven by LLMs has democratized access to information and automation, the reality is that human communication—and effective digital experiences—rarely happen through a single channel. Instead, users often think, learn, and express themselves through a combination of text, voice, images, gestures, and even video.

Relying solely on text prompts, no matter how sophisticated, risks oversimplifying or even alienating users with diverse cognitive preferences or accessibility needs. Recent advances make it possible to design interfaces where users seamlessly shift between typing, speaking, snapping photos, or even sketching. This isn’t just a luxury—it’s increasingly an expectation in both consumer and enterprise settings (Nielsen Norman Group).

Understanding Multi-Modal Interactions

A multi-modal AI system integrates two or more interaction modes—such as language, vision, and audio—to better interpret user intent and deliver richer feedback. This approach mirrors real human understanding, where context from tone of voice, facial expressions, or an accompanying image often clarifies meaning beyond words alone.

For example, a travel app powered by a multi-modal AI could let users:

- Type or dictate a query about flight options

- Upload a photo of a passport for instant document recognition

- Draw a rough map to specify a destination

This flexibility provides a more fluid and humanized digital experience, catering to various situations—like using voice when hands are full, or pointing the phone camera at a product when unsure what it’s called.

Steps to Creating Effective Multi-Modal Experiences

- Identify Common User Scenarios: Map out points where users might naturally switch between modes—typing a question, uploading an image, or switching to voice on mobile. Empathizing with real-world contexts ensures the right modality is available at the right time (Interaction Design Foundation).

- Ensure Back-End AI Can Process Multiple Inputs: Integrating multi-modal LLMs like GPT-4 with image and audio processing capabilities, or combining with separate vision and speech modules, allows the system to interpret, synthesize, and cross-check information from diverse inputs (DeepMind: Gemini Project).

- Design Clear Feedback Loops: Users need confirmation that the system understood their input—whether that’s reading back a dictated message, showing an annotated image interpretation, or proactively offering clarifications.

- Prioritize Accessibility: Multi-modality maximizes not only convenience but also inclusivity. For example, people with visual impairments might prefer audio feedback, while those with speech disabilities benefit from robust text input options. Following the W3C Web Accessibility Initiative (WAI) guidelines helps ensure broad usability.

- Test with Real Users: Validate experiences across scenarios and user groups. Identify pain points, misinterpretations, and places where switching modes enhances (or hinders) flow. Iterate quickly with feedback.
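The “process multiple inputs” and “clear feedback loops” steps can be sketched as a small Python dispatcher: each input is routed to a handler for its modality, and the system reads back what it understood before acting. The handlers here are hypothetical placeholders; in practice each would call a speech, vision, or language model. The voice handler assumes a transcript has already been produced upstream, and `"sketch"` is deliberately left unsupported to show how a mode that has not been wired up yet can fail gracefully.

```python
from dataclasses import dataclass
from typing import Callable, Dict

@dataclass
class UserInput:
    modality: str  # e.g. "text", "voice", "image", "sketch"
    payload: str   # text, a transcript, or a file path, depending on modality

# Hypothetical handlers; real ones would call speech, vision, or language models.
def handle_text(payload: str) -> str:
    return payload

def handle_voice(payload: str) -> str:
    return payload  # assumes a speech-to-text step already produced this transcript

def handle_image(payload: str) -> str:
    return f"[document scanned from {payload}]"

HANDLERS: Dict[str, Callable[[str], str]] = {
    "text": handle_text,
    "voice": handle_voice,
    "image": handle_image,
}

def interpret(inp: UserInput) -> str:
    """Route input to its modality handler and return a confirmation for the user."""
    handler = HANDLERS.get(inp.modality)
    if handler is None:
        # Unsupported mode: say so and offer alternatives instead of guessing.
        supported = ", ".join(sorted(HANDLERS))
        return f"Sorry, I can't read {inp.modality} input yet. Try: {supported}."
    understood = handler(inp.payload)
    # Feedback loop: read back what the system understood before acting on it.
    return f"I understood: {understood}. Is that right?"
```

Keeping the confirmation step outside the individual handlers means every modality gets the same read-back behavior, which is what makes switching between modes feel consistent.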

Examples of Multi-Modal AI in Practice

- Healthcare: AI apps like those explored at Mayo Clinic analyze speech patterns for mental health, interpret medical images, and support clinical documentation through dictation—all enhancing accuracy and speed.

- Retail: Visual search in apps like Google Lens lets users snap a picture of a product to find reviews or purchase options instantly, bypassing lengthy search queries.

- Education: Platforms blend video lectures, spoken Q&A, handwriting recognition, and visual diagramming to accommodate varied learning styles and needs.

Multi-modal interfaces elevate AI from a clever chatbot to a true digital collaborator. By embedding images, audio, gestures, and other inputs into AI UX, designers can create tools that feel intuitive, inclusive, and genuinely helpful—allowing users to interact with technology in the ways that suit them best.

Balancing Transparency and Predictability in AI UX

To create truly effective AI-native user experiences, designers and product teams must navigate the complex interplay between transparency and predictability. Users interacting with large language models (LLMs) often struggle to trust or fully understand the outputs generated, particularly due to the black-box nature of these systems. Yet, for AI-powered products to drive engagement and deliver value, users need to feel both confident in the AI’s capabilities and aware of its limitations.

Transparency: Demystifying AI Decisions

Transparency involves openly communicating how AI-driven features work, what data they use, and where their boundaries lie. This can be achieved through UI elements that explain the reasoning behind an answer or suggestion, such as tooltips, expandable sidebars, or summary panels. For instance, a design might include a “Why this?” button that lets users peek into the AI’s rationale or data sources. Microsoft’s work on building transparent AI systems highlights how clear communication can bolster user understanding and trust.
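One way to support a “Why this?” button is to make every suggestion carry its own explanation, so the UI never has to reconstruct it after the fact. The Python sketch below assumes hypothetical `Suggestion` and `Source` types; the rationale text could come from the model, from product logic, or from both, and the example URL is a placeholder.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Source:
    title: str
    url: str

@dataclass
class Suggestion:
    """An AI suggestion that carries its own explanation for a "Why this?" panel."""
    text: str
    rationale: str
    sources: List[Source] = field(default_factory=list)

    def why_this(self) -> str:
        """Render the explanation shown when the user clicks "Why this?"."""
        lines = [f"Why this: {self.rationale}"]
        if self.sources:
            lines.append("Based on:")
            lines += [f"- {s.title} ({s.url})" for s in self.sources]
        else:
            # Be explicit when there is nothing to cite, rather than showing an empty panel.
            lines.append("No specific sources were used for this suggestion.")
        return "\n".join(lines)
```

For instance, `Suggestion("Read the onboarding guide", "It is cited in your notes", [Source("Onboarding guide", "https://example.com/guide")]).why_this()` produces a short rationale followed by the source link.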

Predictability: Creating Reliable and Consistent Interactions

Predictability aims to ensure users know what to expect when engaging with AI-powered features. While LLMs are impressively flexible, their outputs can sometimes be surprising or inconsistent, undermining user confidence. To counteract this, AI UX should:

- Set clear expectations: Use onboarding checklists or notifications to outline what the AI can and cannot do—before the first interaction.

- Standardize response formats: Offer consistent templates for output (such as actionable lists or bullet points), making the AI’s responses easier to digest and less erratic.

- Feature fallback explanations: When the AI is unsure, it should gracefully admit limitations or request clarification, rather than bluffing or generating filler content.

For example, Google’s experiments with explainable AI in search aim to provide users with sources and justification for each answer, making the process feel more stable and dependable.
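The three predictability practices can be sketched together in Python: a declared capability list doubles as onboarding copy, every answer goes through one response template, and requests outside the declared scope or without a reliable answer get an honest fallback. The intents, descriptions, and message wording are hypothetical, and `intent` is assumed to come from an upstream classifier.

```python
from typing import Dict, List

# Hypothetical capability list, shown at onboarding and reused at runtime.
CAPABILITIES: Dict[str, str] = {
    "summarize": "Summarize a document you upload",
    "draft_email": "Draft an email from bullet points",
}

def format_response(title: str, items: List[str]) -> str:
    """Render every answer in one template: a title line plus bullet points."""
    return "\n".join([title] + [f"- {item}" for item in items])

def onboarding_message() -> str:
    """Shown before the first interaction so users know the AI's scope."""
    return format_response("Here is what I can do:", list(CAPABILITIES.values()))

def respond(intent: str, items: List[str]) -> str:
    """Answer supported intents in the standard format; otherwise admit the limit.

    `items` are the answer points the model produced (empty if it had nothing
    reliable to say).
    """
    if intent not in CAPABILITIES:
        return "I can't help with that yet.\n" + onboarding_message()
    if not items:
        # Ask for clarification rather than generating filler content.
        return format_response("I need a bit more detail:", ["What should the result include?"])
    return format_response(f"Result ({intent}):", items)
```

Reusing the onboarding list in the fallback keeps the expectations users saw on day one consistent with what the product says when it reaches its limits.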

Balancing Act: Practical Tips and Examples

The balance between transparency and predictability is not just theoretical; it plays out in tangible product decisions:

- Explain failures as well as successes: If the AI cannot complete a task, state why and suggest next steps. For instance, GitHub Copilot often provides reference links or asks for clarifications, framing its limits transparently and predictably.

- Allow user corrections and feedback: Integrate feedback tools or correction loops so users feel empowered to improve the system’s accuracy and behavior. Research published in Harvard Business Review stresses the importance of iterative feedback for responsible AI UX.

- Humanize uncertainty: Communicate probabilistic outcomes in human terms, like confidence levels or alternate suggestions, rather than binary right/wrong outputs. This fosters a collaborative partnership rather than a one-way, unpredictable experience.
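“Humanizing uncertainty” can be as simple as mapping a score to a plain-language label and surfacing alternates when the top answer is not strong. The Python sketch below assumes the model or a downstream ranker supplies a score in [0, 1] for each candidate; the 0.85 and 0.6 cut-offs are illustrative only and would need calibration against observed accuracy before being shown to users.

```python
from typing import List, Tuple

def confidence_label(score: float) -> str:
    """Translate a score in [0, 1] into plain language.

    The cut-offs are illustrative assumptions; they only mean something once
    scores are calibrated against observed accuracy.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= 0.85:
        return "high confidence"
    if score >= 0.6:
        return "medium confidence"
    return "low confidence"

def present(candidates: List[Tuple[str, float]], max_alternates: int = 2) -> str:
    """Show the top answer with a confidence label, plus alternates when unsure."""
    ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
    best, score = ranked[0]
    message = f"{best} ({confidence_label(score)})"
    if score < 0.85 and len(ranked) > 1:
        alternates = ", ".join(text for text, _ in ranked[1 : 1 + max_alternates])
        message += f". Other possibilities: {alternates}"
    return message
```

A confident answer is shown on its own, while a shaky one arrives with its label and a couple of alternatives, inviting the user to collaborate rather than accept a single unqualified output.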

Pure prompting is only a piece of the puzzle. By explicitly designing for transparency and predictability, product teams encourage deeper trust, better user engagement, and long-lasting value in AI-enabled experiences. This thoughtful balance not only distinguishes AI-native products but also sets a new standard for intuitive and responsible design.

Designing for Trust: Beyond Black-Box Interactions

Building trust in AI-powered products goes far beyond simply slapping an LLM onto an interface and hoping for the best. Users want more than answers — they crave transparency, predictability, and a genuine sense of partnership when interacting with AI. If a product’s UX feels like a black box, users may hesitate to rely on it, especially when stakes are high or decisions are complex.

Why Transparency Matters for Trust

Human trust is often rooted in understanding. In traditional apps, clear controls and visible logic reinforce predictability. AI systems, particularly those driven by large language models (LLMs), are different: they generate responses from patterns learned on vast and largely opaque training datasets, which can make outputs feel random or even uncanny. If users can’t see how the AI arrived at its answers, doubt seeps in.

- For critical applications such as healthcare or finance, the need for visibility is paramount (Nature: Making AI Transparent).

- Even for everyday use-cases like email assistants or chatbots, users appreciate knowing why a particular suggestion was made, and from where information was sourced.

Strategies to Move Beyond the Black Box

To foster trust and reduce the “black box effect,” designers and product teams should consciously integrate certain strategies:

- Explain Outputs Clearly: Whenever feasible, show users the reasoning or evidence behind an AI’s response. This could be through AI-generated rationale or by displaying relevant source links alongside answers.

Example: A research assistant tool might cite the scholarly articles that influenced its summary.

- Enable Control and Feedback: Trust grows when users feel they can shape outcomes. Allow actions such as editing prompts, rating responses, or toggling AI confidence levels (Nielsen Norman Group: Principles of Human–AI Interaction).

Example: Let users highlight and correct errors, which can both feed back into model improvements and boost user confidence.

- Offer Predictable Interactions: Clear onboarding and helpful microcopy prepare users for how the AI will behave and what its limits are. Offering examples, highlighting edge cases, and communicating uncertainty are key (Google AI: Responsible AI Practices).

- Emphasize Human Oversight: Remind users that they are ultimately in control, especially for consequential decisions. Blend AI suggestions with clear calls-to-action for human validation or override.

Example: In a writing tool, the “Accept/Reject” buttons reinforce that the AI assists but doesn’t replace the author’s voice.
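The Accept/Reject pattern can be modeled so that an AI suggestion changes nothing until the author decides, with each decision doubling as a feedback signal. This is a minimal Python sketch; `PendingEdit`, `decide`, and `acceptance_rate` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class PendingEdit:
    """An AI suggestion that changes nothing until the author decides."""
    original: str
    suggestion: str
    decision: Optional[str] = None  # "accepted" or "rejected" once decided

def decide(edit: PendingEdit, accept: bool) -> str:
    """Record the author's choice and return the text that will actually be kept."""
    edit.decision = "accepted" if accept else "rejected"
    return edit.suggestion if accept else edit.original

def acceptance_rate(edits: List[PendingEdit]) -> float:
    """Share of decided suggestions that were accepted: a simple feedback signal."""
    decided = [e for e in edits if e.decision is not None]
    if not decided:
        return 0.0
    return sum(e.decision == "accepted" for e in decided) / len(decided)
```

Undecided suggestions are excluded from the rate, so the metric reflects only explicit human judgments; a falling acceptance rate is an early hint that the AI’s voice is drifting away from the author’s.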

Design Patterns to Inspire Confidence

Some interface patterns can help demystify AI, bridging the gap between automation and user assurance.

- Explanatory Tooltips: Short, contextual pop-ups that decode AI suggestions in plain language are invaluable.

- Side-by-Side Comparisons: Showing options generated by AI, plus the original user input, makes the transformation process explicit.

- Model Disclosures: Brief notes about which AI model powers the feature, what its training data includes, and known limitations — with links to more information — support informed use.
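Side-by-side comparisons need a way to show exactly what changed between the user’s input and the AI’s version. Python’s standard `difflib` module can do this at word level; the sketch below marks removed words as `[-word-]` and added words as `{+word+}`, a notation chosen here for illustration rather than any standard.

```python
import difflib

def show_changes(original: str, revised: str) -> str:
    """Mark word-level edits so users can see exactly what the AI changed.

    Removed words appear as [-word-], added words as {+word+}.
    """
    a, b = original.split(), revised.split()
    out = []
    for op, i1, i2, j1, j2 in difflib.SequenceMatcher(a=a, b=b).get_opcodes():
        if op == "equal":
            out.extend(a[i1:i2])
        if op in ("delete", "replace"):
            out.extend(f"[-{w}-]" for w in a[i1:i2])
        if op in ("insert", "replace"):
            out.extend(f"{{+{w}+}}" for w in b[j1:j2])
    return " ".join(out)
```

For example, `show_changes("the meeting is on monday", "the meeting is on Tuesday")` returns `the meeting is on [-monday-] {+Tuesday+}`. For a true two-column view in a web UI, `difflib.HtmlDiff().make_table(...)` renders an HTML side-by-side table from two lists of lines.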

Thoughtful UX choices not only encourage adoption but also help users build a mental model of how their AI teammate works. Ultimately, trust in AI-native products grows out of the same roots as any relationship: communication, honesty, and a sense of shared purpose. Investing in transparency and control isn’t just an ethical or regulatory obligation; it’s essential for products that hope to become indispensable, trusted tools.

The Role of Feedback Loops in AI-Native User Journeys

At the heart of every successful AI-native user experience lies a robust feedback loop—a continuous cycle of collecting user input, analyzing that data, and iteratively refining the system. Unlike traditional interfaces, where user feedback might only come in the form of feature requests or support tickets, AI systems thrive on ongoing interactions. Effective feedback loops are essential for shaping not just individual outputs, but the entire user journey, driving improvement and adaptation in real time.

How Feedback Loops Empower Adaptivity

Feedback loops allow AI systems to move beyond static responses, fostering a truly adaptive product that “learns” over time. For example, recommendation engines like those used by LinkedIn and Google continuously refine their suggestions by monitoring user clicks, dwell time, and explicit ratings. This constant adjustment is what helps the AI remain effective and personalized, especially in dynamic environments where user needs can shift quickly.

Implementing Feedback Loops: Steps for AI-Native UX

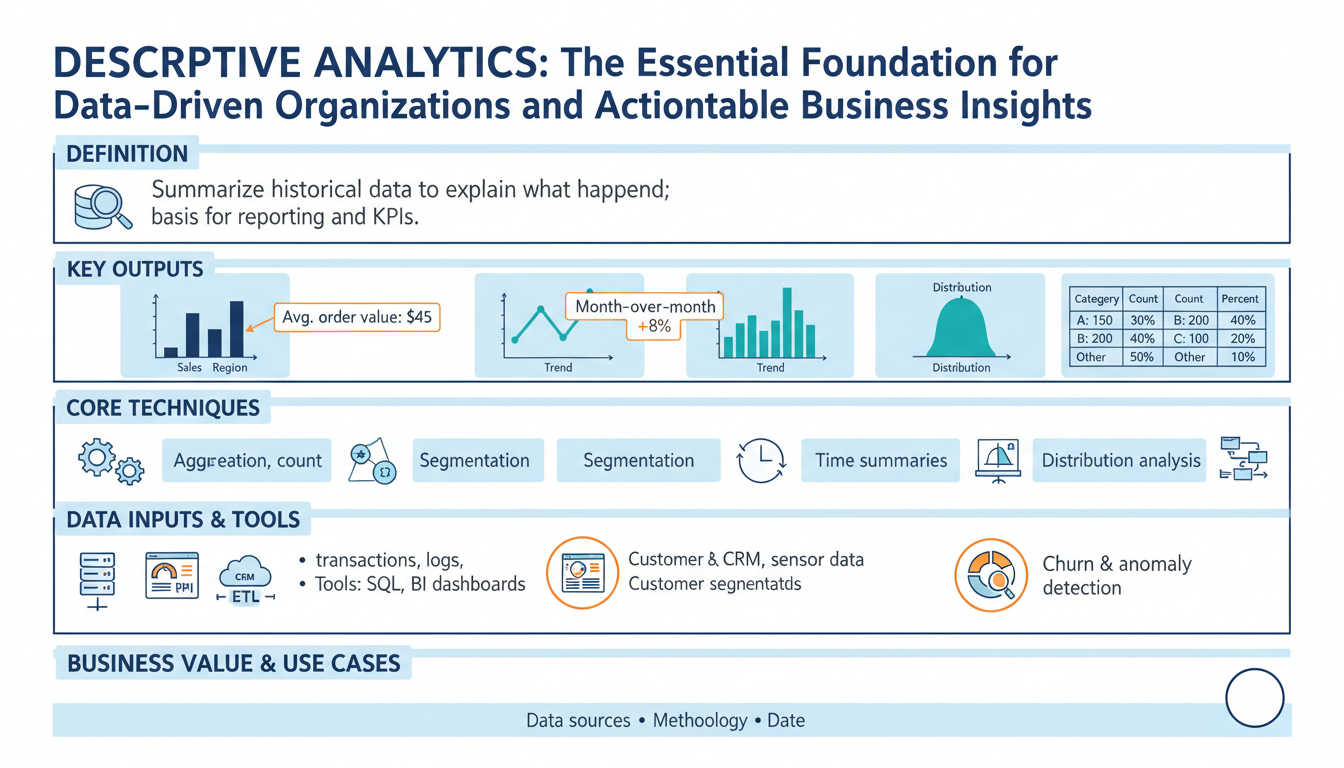

- Gather Dynamic, Contextual Data: Rather than relying solely on text prompts, collect nuanced behavioral signals, such as the speed of user interaction, skipped actions, or re-engagement rates. These expand the feedback surface and yield richer training data.

- Analyze for Patterns and Anomalies: Use methods like cohort analysis or anomaly detection to surface trends and outliers, informing not just what users explicitly request, but how they react to AI outputs. For an in-depth overview, see Harvard Business Review’s analysis of feedback loop efficacy.

- Iterate and Deploy Improvements: Close the loop by deploying changes based on feedback, then measuring outcomes to ensure that refinements are impactful and don’t introduce new issues. This may involve fine-tuning LLM parameters, updating training data, or tweaking UI flows.
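The “Analyze for Patterns and Anomalies” step might start as simply as a rolling z-score over a feedback metric. This Python sketch assumes the metric is the daily share of AI responses rated thumbs-down; the 7-day window and the threshold of 3 are illustrative defaults, and production systems often use more robust methods (for example, median-based statistics) to avoid being skewed by past spikes.

```python
from statistics import mean, stdev
from typing import List

def flag_anomalies(daily_rates: List[float], window: int = 7, threshold: float = 3.0) -> List[int]:
    """Return indices of days whose rate deviates sharply from the preceding window.

    A day is flagged when its z-score against the previous `window` days exceeds
    `threshold`. Requires window >= 2 so the standard deviation is defined.
    """
    flagged = []
    for i in range(window, len(daily_rates)):
        history = daily_rates[i - window : i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if daily_rates[i] != mu:
                flagged.append(i)
            continue
        if abs(daily_rates[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged
```

A flagged day after a deployment is a prompt to inspect the change, which is exactly the “measure outcomes” half of closing the loop.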

Case Study: Real-Time Refinement in Language Learning Apps

Consider language learning platforms like Duolingo, which constantly tailor lesson difficulty, hints, and corrective feedback based on learner performance. Each interaction provides a micro-feedback loop, allowing the system to recalibrate its teaching approach instantly. This dynamic process ensures that learners remain both challenged and engaged, providing a more effective learning journey than static prompting alone.
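To illustrate the idea of a micro-feedback loop, here is a minimal Python sketch using an Elo-style rating scheme, a well-known technique for adaptive practice. It is not a description of how Duolingo works: learner skill and item difficulty share one scale, each answer nudges both by how surprising it was, and the next item is the one with a predicted success rate closest to a target. The constants (400, `k=32`, `target=0.7`) are conventional or illustrative choices, not recommendations.

```python
from typing import Dict, Tuple

def expected_correct(skill: float, difficulty: float) -> float:
    """Elo-style logistic estimate that a learner answers an item correctly."""
    return 1.0 / (1.0 + 10 ** ((difficulty - skill) / 400))

def update(skill: float, difficulty: float, correct: bool, k: float = 32.0) -> Tuple[float, float]:
    """After each answer, move learner skill and item difficulty in opposite
    directions by how surprising the outcome was."""
    surprise = (1.0 if correct else 0.0) - expected_correct(skill, difficulty)
    return skill + k * surprise, difficulty - k * surprise

def pick_item(skill: float, items: Dict[str, float], target: float = 0.7) -> str:
    """Pick the item whose predicted success rate is closest to `target`,
    so lessons stay challenging but achievable."""
    return min(items, key=lambda name: abs(expected_correct(skill, items[name]) - target))
```

Each answer feeds straight back into the next choice: a correct answer raises the learner’s estimated skill, which shifts `pick_item` toward harder material on the very next step.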

Designing for AI-Native Feedback: Best Practices

- Encourage Explicit and Implicit Feedback: Make it easy for users to clarify, correct, or rate AI responses—while also tracking implicit signals such as abandonment or repeat queries.

- Maintain User Trust and Transparency: Let users know how their feedback is used and offer clear controls. Academic research on trust in AI feedback offers useful guidance here.

- Automate Where Possible, but Involve Human-in-the-Loop Review: For higher-stakes applications, human oversight can provide a safety net, ensuring quality and ethical considerations remain central as the AI evolves.
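Explicit and implicit signals, plus human-in-the-loop routing, can be sketched together in Python. The event format, the 60-second repeat window, and the heuristics themselves (a re-sent query suggests the first answer missed; ending a session right after a query suggests abandonment) are illustrative assumptions, not established rules.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Event:
    kind: str        # "query", "rating", or "exit"
    value: str = ""  # query text, or "up"/"down" for ratings
    t: float = 0.0   # seconds since session start

def feedback_signals(events: List[Event], repeat_window: float = 60.0) -> Dict[str, object]:
    """Derive explicit and implicit feedback from one session log."""
    repeats = 0
    last_seen: Dict[str, float] = {}
    for e in events:
        if e.kind == "query":
            key = e.value.strip().lower()
            # Implicit: the same query re-sent shortly after suggests a miss.
            if key in last_seen and e.t - last_seen[key] <= repeat_window:
                repeats += 1
            last_seen[key] = e.t
    # Implicit: the session ended right after a query, with no rating or follow-up.
    abandoned = len(events) >= 2 and events[-1].kind == "exit" and events[-2].kind == "query"
    # Explicit: thumbs-down ratings.
    downvotes = sum(1 for e in events if e.kind == "rating" and e.value == "down")
    return {"repeat_queries": repeats, "abandoned": abandoned, "downvotes": downvotes}

def needs_human_review(signals: Dict[str, object], high_stakes: bool) -> bool:
    """Route high-stakes sessions with any negative signal to a human reviewer."""
    negative = bool(signals["repeat_queries"]) or bool(signals["abandoned"]) or bool(signals["downvotes"])
    return high_stakes and negative
```

Only high-stakes sessions are escalated here, which keeps human reviewers focused where oversight matters most while low-stakes signals flow into automated analysis.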

Ultimately, feedback loops are the scaffolding upon which user-centric, AI-native experiences are built. The more surfaces for user input that we design, the more opportunities for AI to connect, learn, and deliver value—underscoring that prompting alone is never enough. A thoughtful, data-driven feedback ecosystem transforms AI from a simple respondent into a constantly evolving collaborator.