Introduction to the RASA Framework

The RASA Framework is a sophisticated tool designed to build custom chatbots and conversational applications effectively. It is part of a broader trend in conversational AI focused on creating more human-like interactions between machines and users. The RASA Framework emphasizes flexibility, scalability, and ease of customization, making it especially appealing to developers working on a wide array of projects across industries.

One of the standout features of the RASA Framework is its open-source nature, which promotes a collaborative development environment. This openness not only allows for community contributions but also gives developers the freedom to tailor the framework to their project needs. Unlike many proprietary tools that come with rigid structures, RASA lets you build fully adaptive dialogue systems without being locked into a vendor’s predefined constraints.

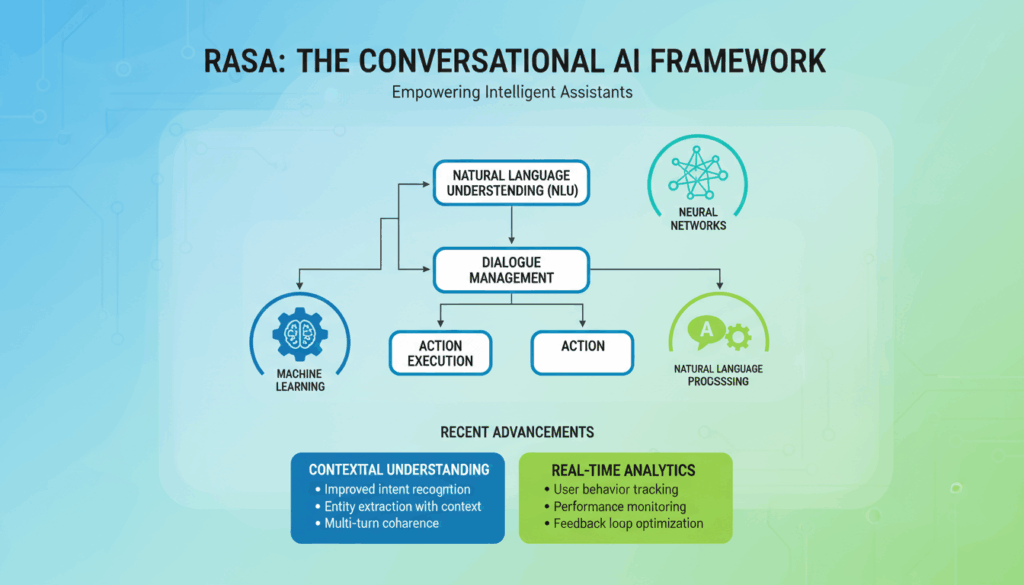

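RASA primarily consists of two components: RASA NLU (Natural Language Understanding) and RASA Core. They started out as separate libraries and have shipped together as a single package since RASA 1.0.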

RASA NLU is responsible for understanding what the user wants by interpreting their input. It maps unstructured sentences to intents (the user’s goal) and entities (specific pieces of information in the message). For instance, in a banking chatbot, RASA NLU would classify “I want to transfer money” as a transfer_money intent; a message such as “Send $50 to Alice from my savings account” would additionally yield entities for the amount (“$50”), the recipient (“Alice”), and the source account (“savings”). This component is trained on example messages, so its accuracy can be improved, and tailored to different industries or applications, by adding and refining training data.
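To make the mapping from sentences to intents and entities concrete, here is a deliberately tiny rule-based parser that returns a dictionary shaped like a RASA parse result: the original text, an intent with a name and confidence, and entities with their character offsets. The keyword lists, intent names, and the amount and recipient patterns are illustrative assumptions. RASA itself learns this mapping from training examples rather than from hand-written rules, and its confidences come from a trained model, not keyword counts.

```python
import re
from typing import Any, Dict

# Illustrative keyword rules per intent (a trained RASA model replaces these)
INTENT_KEYWORDS = {
    "transfer_money": ["transfer", "send", "pay"],
    "check_balance": ["balance", "how much"],
}

# Illustrative entity patterns; capture group 1 holds the entity value
ENTITY_PATTERNS = {
    "amount": re.compile(r"\$(\d+(?:\.\d{2})?)"),
    "recipient": re.compile(r"\bto ([A-Z][a-z]+)"),
}


def parse(text: str) -> Dict[str, Any]:
    """Return a dict shaped like a RASA parse result (toy logic, not RASA's)."""
    lowered = text.lower()
    # Toy "confidence": fraction of an intent's keywords found in the message
    scores = {
        intent: sum(keyword in lowered for keyword in keywords) / len(keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    entities = []
    for name, pattern in ENTITY_PATTERNS.items():
        for match in pattern.finditer(text):
            entities.append({
                "entity": name,
                "value": match.group(1),
                "start": match.start(1),  # character offsets into the text
                "end": match.end(1),
            })
    return {
        "text": text,
        "intent": {"name": best, "confidence": scores[best]},
        "entities": entities,
    }


print(parse("Please send $50 to Alice"))
```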

RASA Core handles dialogue management. It uses the intents and entities extracted by RASA NLU, together with the conversation history, to decide the bot’s next action, such as sending a response, asking for missing information, or running custom code. Its policies, which combine machine learning models with rule-based logic, predict that next action from the dialogue context. This allows the chatbot to maintain state and context over a conversation, making interactions more coherent and meaningful. For example, if a user asking for movie recommendations has already mentioned a preference for comedies, RASA Core can use that stored context (for instance, a slot) to choose a more relevant next action.
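A dialogue policy is essentially a mapping from recent conversation history to the next action. The sketch below imitates the idea behind RASA’s MemoizationPolicy in a few lines: it memorizes, from example stories, which action followed each window of recent events and replays that action when the same window reappears. The event names, the utter_/action_ naming convention used to spot bot actions, and the max_history value are assumptions for illustration; this is a toy, not RASA’s implementation or API. The fallback name mirrors RASA’s built-in action_default_fallback.

```python
from typing import Dict, List, Tuple


class ToyMemoizationPolicy:
    """Remembers which action followed each window of recent events in training stories."""

    def __init__(self, max_history: int = 2, fallback: str = "action_default_fallback") -> None:
        if max_history < 1:
            raise ValueError("max_history must be at least 1")
        self.max_history = max_history
        self.fallback = fallback
        self.lookup: Dict[Tuple[str, ...], str] = {}

    def _key(self, events: List[str]) -> Tuple[str, ...]:
        # Only the last `max_history` events are used as context
        return tuple(events[-self.max_history:])

    @staticmethod
    def _is_action(event: str) -> bool:
        # Assumed naming convention: bot actions start with utter_ or action_
        return event.startswith(("utter_", "action_"))

    def train(self, stories: List[List[str]]) -> None:
        for story in stories:
            for i, event in enumerate(story):
                if self._is_action(event):
                    # Later stories overwrite conflicting earlier ones (a simplification)
                    self.lookup[self._key(story[:i])] = event

    def predict(self, history: List[str]) -> str:
        # Unseen context: return the fallback action instead of guessing
        return self.lookup.get(self._key(history), self.fallback)


stories = [
    ["greet", "utter_greet", "ask_recommendation", "action_recommend_movie"],
    ["ask_recommendation", "action_recommend_movie", "thank", "utter_you_are_welcome"],
]
policy = ToyMemoizationPolicy(max_history=2)
policy.train(stories)
print(policy.predict(["greet", "utter_greet", "ask_recommendation"]))  # action_recommend_movie
```

Real RASA policies go further, for example by generalizing to unseen histories with a trained model, which pure lookup cannot do.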

The RASA Framework supports various integrations, making it versatile for deployment across different platforms like Slack, Facebook Messenger, or even telephony systems. This adaptability ensures that a bot can maintain a consistent user experience across channels, effectively enhancing engagement.

Moreover, RASA can power voice interfaces when connected to speech-to-text and text-to-speech services, expanding the ways users can interact with your application. This means your chatbot is not limited to text-based interactions but can extend to voice-enabled services, meeting the evolving demands of digital customer service.

Developers appreciate RASA for its strong emphasis on testing and evaluation, which are critical for delivering robust conversational experiences. The framework includes command-line tools, such as rasa test, for evaluating NLU and dialogue models and analyzing the chatbot’s performance, helping to pinpoint areas for improvement. Continuous feedback loops mean your chatbot can evolve and adapt, refining its accuracy over time.

For those new to RASA, getting started requires setting up a few basic elements. Typically, you’d begin by defining your NLU training data: examples of the intents and entities your bot should recognize. Next, you draft story files that show how conversations should generally flow, along with a domain file (domain.yml) that declares the intents, entities, slots, responses, and actions your bot knows about. Once these pieces are in place, you can train your models (for example, with rasa train) and start testing iterations.
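Because the NLU data and the stories must agree with each other, it pays to cross-check them before training. RASA ships a rasa data validate command for real projects; the sketch below only illustrates the idea on plain Python dictionaries, assumed to have been loaded from your YAML files. Story steps mirror RASA’s story format ({"intent": ...} or {"action": ...}). The check is simplified: it flags intents that stories use but that have no NLU examples, and intents with examples that no story uses.

```python
from typing import Dict, List, Set


def check_intents(nlu_examples: Dict[str, List[str]],
                  stories: List[List[Dict[str, str]]]) -> Dict[str, Set[str]]:
    """Cross-check intents between NLU training data and stories."""
    story_intents = {step["intent"] for story in stories for step in story if "intent" in step}
    # Only intents that actually have example messages count as covered
    nlu_intents = {name for name, examples in nlu_examples.items() if examples}
    return {
        "missing_examples": story_intents - nlu_intents,  # used in stories, no examples
        "unused_intents": nlu_intents - story_intents,    # examples exist, no story uses them
    }


nlu_examples = {
    "greet": ["hi", "hello there"],
    "transfer_money": ["I want to transfer money", "send $50 to Alice"],
    "goodbye": ["bye"],
}
stories = [
    [{"intent": "greet"}, {"action": "utter_greet"}],
    [{"intent": "transfer_money"}, {"action": "utter_ask_amount"}],
    [{"intent": "check_balance"}, {"action": "action_show_balance"}],
]
print(check_intents(nlu_examples, stories))
# {'missing_examples': {'check_balance'}, 'unused_intents': {'goodbye'}}
```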

The RASA Framework encourages a highly iterative approach—developers can continuously refine and enhance their bots by gathering user input and retraining models, ensuring that the chatbot grows more intelligent and responsive with each update. This aligns with the ever-changing landscape of technology and consumer interaction, as adaptability is key to maintaining relevance and efficiency.

Integrating Large Language Models (LLMs) with RASA

Integrating Large Language Models (LLMs) with the RASA Framework represents a significant advancement in enhancing the capabilities of conversational AI systems. LLMs, such as OpenAI’s GPT series or Google’s T5, offer sophisticated language understanding that can significantly augment and streamline various aspects of chatbot development within RASA.

A natural starting point is the natural language understanding (NLU) process. Traditional RASA NLU components rely on project-specific training data to interpret intents and extract entities. LLMs, by contrast, bring broad language comprehension from extensive pre-training on diverse text corpora. By leveraging an LLM, RASA applications can often handle more complex and varied user inputs with far less custom training data.

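To integrate LLMs with RASA, you can follow several practical steps. One option is to delegate NLU to an external service by defining an nlu endpoint in your RASA endpoint configuration file (endpoints.yml). When this endpoint is set, RASA sends each incoming message to that service for parsing instead of using a locally trained NLU model, so the service (for example, a thin wrapper around a hosted LLM) must accept RASA’s parse requests and return results in RASA’s parse format. Here’s a simplified example: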

```yaml
# endpoints.yml
nlu:
  url: 'http://external-llm-api.com/process'
```

Alternatively, keep NLU inside RASA and call the LLM from your own pipeline component. In config.yml, you might define a custom component that sends a user’s message to the LLM, retrieves the interpreted intent, and converts the response into the format the rest of the RASA NLU pipeline expects.

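```yaml
# config.yml
pipeline:
  - name: WhitespaceTokenizer
  - name: RegexFeaturizer
  # Hypothetical custom intent classifier (your own Python class, referenced
  # by module path) that queries the LLM and sets the predicted intent
  - name: my_components.CustomLLMComponent
  # Built-in: predicts nlu_fallback when intent confidence is below threshold
  - name: FallbackClassifier
    threshold: 0.7
```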

Developing Custom Components

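Creating a custom component that facilitates communication between RASA and an LLM involves programming in Python; in RASA 3.x, a custom component is a class implementing GraphComponent and registered with the DefaultV1Recipe.register decorator. This component handles the complexities of HTTP requests and parsing. Key stages include: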

- HTTP Request: Compose an HTTP request in your Python file to send user queries to the LLM. If the LLM service requires authenticated API access, include the API key, typically in a request header.

- Processing LLM Output: Once the LLM returns its response, parse the JSON to extract relevant information such as the predicted intent and confidence scores, then convert that data into RASA’s NLU structure.

- Integration with RASA: Add this component to the RASA NLU pipeline so it becomes a functional part of intent processing. Configure the pipeline to fall back to a default intent when the LLM does not match confidently.
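The first two stages above, composing the request and converting the reply, can be sketched with the Python standard library alone. Several details are assumptions: the URL is the placeholder from the endpoints.yml example, the bearer-token header depends on your provider, and the LLM is assumed to reply with JSON containing intent, confidence, and ranking fields. The output uses the intent and intent_ranking keys that RASA stores on parsed messages, and low-confidence replies map to nlu_fallback, the intent name RASA’s FallbackClassifier uses; the 0.7 threshold is an arbitrary example value. In a real component these helpers would be called from the component’s processing method; that class boilerplate is omitted because it differs between RASA versions.

```python
import json
import urllib.request
from typing import Any, Dict

# Placeholder URL from the endpoints.yml example; replace with your LLM service
LLM_URL = "http://external-llm-api.com/process"
# Intent name RASA's FallbackClassifier uses for low-confidence messages
FALLBACK_INTENT = "nlu_fallback"


def build_llm_request(text: str, api_key: str, url: str = LLM_URL) -> urllib.request.Request:
    """Compose (but do not send) a POST request carrying the user message."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Assumed bearer-token auth; check what your provider expects
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def to_rasa_intent(llm_reply: Dict[str, Any], threshold: float = 0.7) -> Dict[str, Any]:
    """Convert an assumed reply {"intent", "confidence", "ranking"} into
    the intent / intent_ranking structure of a RASA parse result."""
    name = llm_reply.get("intent")
    confidence = float(llm_reply.get("confidence", 0.0))
    ranking = [
        {"name": item["intent"], "confidence": float(item["confidence"])}
        for item in llm_reply.get("ranking", [])
    ]
    if not name or confidence < threshold:
        # Not confident enough: hand the turn to the fallback intent
        name, confidence = FALLBACK_INTENT, threshold
    return {"intent": {"name": name, "confidence": confidence}, "intent_ranking": ranking}


def query_llm(text: str, api_key: str, timeout: float = 5.0) -> Dict[str, Any]:
    """Send the request and convert the reply (performs real network I/O)."""
    request = build_llm_request(text, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return to_rasa_intent(json.load(response))
```

Keeping request construction and reply conversion as pure functions makes both testable without a live LLM; only query_llm touches the network.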

Enhancing Dialogue Management

In addition to boosting NLU capabilities, LLMs can assist with dialogue management. RASA Core predicts the next action from the tracked conversation state and history; an LLM can add more fluid, context-sensitive response generation, which is most useful in ambiguous or complex interactions.

You might choose to interface the RASA dialogue management module with an LLM to generate dynamic, personalized responses. This can be achieved by using the LLM to propose possible responses which are then filtered through custom logic that considers the conversation flow and user-specific data.
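The propose-then-filter idea can be sketched as follows. The candidate list stands in for responses an LLM proposed; the filtering rules (reject candidates that reference slots the conversation has not filled, reject candidates containing banned phrases, prefer the shortest survivor) are illustrative assumptions. The {slot_name} placeholder syntax mirrors how RASA response templates reference slots, but select_response itself is a hypothetical helper, not a RASA API.

```python
import re
from typing import Dict, List, Optional

# {slot_name} placeholders, the same style RASA response templates use for slots
PLACEHOLDER = re.compile(r"\{(\w+)\}")
# Illustrative business rules: phrases the bot must never say
BANNED_PHRASES = ("guarantee", "legal advice")


def select_response(candidates: List[str], slots: Dict[str, Optional[str]], fallback: str) -> str:
    """Return the shortest acceptable LLM-proposed response, with slots filled in."""
    acceptable = []
    for text in candidates:
        needed = PLACEHOLDER.findall(text)
        # Reject candidates that rely on information the conversation lacks
        if any(slots.get(name) is None for name in needed):
            continue
        # Reject candidates that break business rules
        if any(phrase in text.lower() for phrase in BANNED_PHRASES):
            continue
        acceptable.append(PLACEHOLDER.sub(lambda m: str(slots[m.group(1)]), text))
    # Prefer concise answers; use the fallback if nothing passed the filters
    return min(acceptable, key=len) if acceptable else fallback


slots = {"name": "Dana", "order_id": None}
candidates = [
    "Sorry about the delay, {name}. Your order {order_id} ships today.",
    "I guarantee it arrives tomorrow, {name}!",
    "Sorry for the wait, {name}. Let me check that for you.",
]
print(select_response(candidates, slots, "Let me connect you with an agent."))
```

Filtering deterministically after generation keeps business rules out of the prompt, so they still hold when the LLM ignores instructions.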

Example Use Case

Consider implementing a customer service chatbot capable of understanding nuanced customer inquiries. Integrating an LLM allows the bot to interpret queries with more subtle emotional undertones like frustration or urgency and adjust dialogues accordingly. For instance, instead of simply routing a query about service delays to a default workflow, the bot might leverage LLM capabilities to offer empathetic, contextually tailored assistance.

Testing and Evaluation

Integrating a component as powerful as an LLM requires thorough testing to ensure stability and accuracy. Utilize RASA’s testing tools alongside manual evaluations to compare model performance pre- and post-integration. Continuous A/B testing can inform optimizations and adjustments necessary to ensure that the system adheres to expected performance metrics.
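A pre/post comparison needs two things: a stable way to split traffic and the same metric computed for both variants. The sketch assigns each conversation to a variant by hashing its sender ID, so a returning user always lands in the same arm, and computes intent accuracy on a shared labeled test set. The variant names, the 50/50 default split, and the example labels are assumptions for illustration.

```python
import hashlib
from typing import List


def assign_variant(sender_id: str, treatment_share: float = 0.5) -> str:
    """Deterministically bucket a conversation into 'baseline' or 'llm'."""
    digest = hashlib.sha256(sender_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1)
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "llm" if bucket < treatment_share else "baseline"


def accuracy(gold: List[str], predicted: List[str]) -> float:
    """Share of test messages whose predicted intent matches the label."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("need equally sized, non-empty label lists")
    return sum(g == p for g, p in zip(gold, predicted)) / len(gold)


gold = ["greet", "transfer_money", "check_balance", "goodbye"]
baseline_predictions = ["greet", "transfer_money", "greet", "goodbye"]
llm_predictions = ["greet", "transfer_money", "check_balance", "goodbye"]
print("baseline:", accuracy(gold, baseline_predictions))
print("llm:", accuracy(gold, llm_predictions))
print("user-42 ->", assign_variant("user-42"))
```

Hashing rather than random assignment avoids storing a lookup table and keeps each user’s experience consistent across sessions.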

In conclusion, while integrating LLMs with RASA necessitates additional setup and development efforts, the resulting conversational bots can achieve significantly higher levels of adaptability, understanding, and user satisfaction.

Implementing CALM: Conversational AI with Language Models

CALM (Conversational AI with Language Models) is RASA’s approach, available in RASA Pro, to combining conversational AI principles with large language models to enhance the quality and depth of machine-to-human interactions. It uses the broad language understanding of an LLM to interpret what the user wants, while the business logic the assistant follows remains explicitly defined, resulting in more intelligent and contextually aware chatbot responses.

The language models CALM relies on excel at natural language processing because they capture complex linguistic nuances. They are large neural networks pre-trained on diverse datasets, which lets them parse and generate human-like text. Integrating CALM into a conversational AI application involves several critical stages, each enhancing the conversational capabilities of your system.

Creating a Conversational Foundation

To effectively implement CALM, begin by ensuring your AI platform has a robust conversational base. This involves setting up a comprehensive interaction framework using tools like the RASA Framework, which aids in structuring interaction flows and understanding user intents with its powerful NLU capabilities.

- Define Intents and Entities: Start by delineating the types of conversations your AI should handle. This includes creating comprehensive lists of user intents and associated entities. For example, for a healthcare appointment bot, include intents like “book appointment,” “reschedule,” and “consultation queries.”

- Story Mapping: Develop conversation scripts, or “stories,” that map user journeys within the system. These examples teach the dialogue model which action should follow each step of a conversation. (In CALM itself, this business logic is written as flows rather than stories.)

Integrating Language Models

Once the foundational interaction model is established, focus on integrating a Language Model to harness CALM’s full potential. This integration is crucial for enhancing both intent recognition and dynamic response generation.

- API Integration: Connect your RASA system with an external Language Model through APIs. This requires configuring RASA’s endpoints.yml to interface with a trained Language Model such as GPT-3 or similar. This enables RASA to use the Language Model to process more subtle, varied inputs without needing an exhaustive list of predefined training examples.

```yaml
# Example API Configuration (endpoints.yml)
nlu:
  url: 'http://calm-language-api.com/process'
```

- Custom Components: Develop custom RASA components to facilitate communication between your AI system and the Language Model. This typically involves Python programming to handle API requests and parsing of Language Model outputs into a format digestible by RASA’s pipeline.

Enhancing Contextual Comprehension

With the integration complete, focus on enhancing the AI’s ability to maintain and leverage conversational context. This is one of the primary advantages of using Language Models: they are good at interpreting dialogue history and user intent, provided the relevant history is included in their input, since the model itself does not retain memory between calls.

- Dynamic Context Management: Implement mechanisms to track conversational states and context, for example with RASA’s conversation tracker and slots. Pass the relevant conversation history to the Language Model with each request so it can generate more coherent and contextually aware responses.

- Real-time Adaptation: Utilize CALM for real-time adjustments in dialogue strategies. By analyzing ongoing interactions, the system can adapt its responses, enhancing user engagement and satisfaction.
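Because a language model only “remembers” what is placed in its input, context management in practice means choosing which turns to send. The sketch keeps the most recent turns whose combined length fits a character budget and renders them as a transcript for the prompt. The (speaker, text) turn format, the character budget (a crude stand-in for a token limit), and the prompt wording are assumptions; in RASA the turns would be read from the conversation tracker.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, text), e.g. ("user", "hi")


def build_prompt(history: List[Turn], new_message: str, max_chars: int = 500) -> str:
    """Render the newest turns whose combined length fits max_chars, plus the new message."""
    kept: List[str] = []
    used = 0
    # Walk backwards so the most recent turns survive when the budget runs out
    for speaker, text in reversed(history):
        line = f"{speaker}: {text}"
        if used + len(line) > max_chars:
            break
        kept.append(line)
        used += len(line)
    kept.reverse()  # restore chronological order
    transcript = "\n".join(kept)
    return f"Conversation so far:\n{transcript}\nuser: {new_message}\nbot:"


history = [
    ("user", "I need to move my appointment"),
    ("bot", "Which appointment?"),
    ("user", "The dentist one on Friday"),
]
print(build_prompt(history, "Can we do Monday instead?", max_chars=60))
```

Dropping the oldest turns first is the simplest policy; summarizing older turns or keeping filled slots as a compact preamble are common refinements.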

Testing and Optimization

Thorough testing is critical to ensure the CALM-enabled chatbot meets performance expectations. This involves a suite of automated tests through RASA’s testing framework, coupled with manual user evaluations.

- Performance Metrics: Continuously track intent accuracy, response relevance, and conversation fluency. Use metrics like precision, recall, and user feedback to guide iterative improvements.

- Feedback Loops: Establish feedback mechanisms to capture user sentiment and response effectiveness, and use these insights to refine prompts, training data, the system’s response strategies, and, where your setup supports fine-tuning, the Language Model itself.
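RASA’s rasa test nlu command reports precision, recall, and F1 per intent. To show what those numbers mean, here is a minimal computation over gold and predicted intent labels; the healthcare labels are made-up examples, and this is an illustration of the metrics, not RASA’s evaluation code.

```python
from collections import Counter
from typing import Dict, List


def per_intent_metrics(gold: List[str], predicted: List[str]) -> Dict[str, Dict[str, float]]:
    """Precision, recall, and F1 for every intent seen in gold or predicted labels."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, predicted):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted p, but that was wrong
            fn[g] += 1  # the true intent g was missed
    report = {}
    for intent in sorted(set(gold) | set(predicted)):
        precision = tp[intent] / (tp[intent] + fp[intent]) if tp[intent] + fp[intent] else 0.0
        recall = tp[intent] / (tp[intent] + fn[intent]) if tp[intent] + fn[intent] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[intent] = {"precision": precision, "recall": recall, "f1": f1}
    return report


gold = ["book_appointment", "book_appointment", "reschedule", "consultation_query"]
pred = ["book_appointment", "reschedule", "reschedule", "book_appointment"]
for intent, scores in per_intent_metrics(gold, pred).items():
    print(intent, scores)
```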

Implementing CALM necessitates careful orchestration of both technological and design elements, ultimately driving the creation of conversational agents that are not only responsive and engaging but also deeply intuitive and human-like in dialogue quality.

Enhancing Conversational AI with RASA Pro and RASA Studio

RASA Pro and RASA Studio are pivotal tools for enhancing the capabilities of conversational AI applications, offering features that aid in the development, testing, and maintenance of sophisticated chatbot systems.

RASA Pro extends the open-source RASA framework with additional capabilities that improve scalability, security, and integration options suitable for enterprise use. It includes features like enhanced role-based access controls, detailed usage analytics, and improved deployment workflows. These features are crucial for large teams or corporations that require robust management and monitoring of multiple chatbot environments.

For example, role-based access control in RASA Pro allows enterprises to define specific permissions for team members. This means a development team could assign roles such as “Developer,” “Tester,” and “Admin,” each with unique access to certain components of the chatbot deployment, ensuring that sensitive operations like API key management or deployment configurations are only editable by authorized personnel.

Moreover, RASA Pro offers integration with existing enterprise infrastructure like CI/CD pipelines, which is essential for automating testing and deployment processes. This integration ensures that any changes made to chatbot models are tested and deployed systematically, minimizing the risk of errors and preserving the integrity of the deployed environment.

In parallel, RASA Studio acts as a comprehensive visual interface that simplifies the design and management of conversational agents and their workflows. It offers a drag-and-drop approach to building conversation flows, enabling non-technical team members to contribute to the development process. This democratizes chatbot development within an organization, allowing product managers, UX designers, and customer support professionals to have a hands-on role in shaping the conversational experience.

In RASA Studio, creating a conversation involves mapping user intents to specific responses and defining paths for handling different scenarios. For example, a logistics company could use RASA Studio to visually map customer interactions starting with package tracking inquiries and then branching into detailed workflows that address issues such as delivery delays or address updates.

The real-time collaboration feature in RASA Studio further enhances development efficiency by allowing multiple users to work on the same project concurrently, providing live updates and comments. This ensures the alignment of cross-functional teams working on various aspects of the chatbot and speeds up the development lifecycle.

Moreover, RASA Studio supports straightforward integration with third-party messaging platforms and CRM systems. This allows the seamless embedding of chatbots into existing customer support or sales channels, which is often where these bots generate the most value. Teams can utilize the intuitive event tracking and analytics features within RASA Studio to gather insights into user interactions, which inform iterative improvements and optimizations.

Testing and validation are integral to the deployment of a successful conversational AI system, and both RASA Pro and Studio provide robust features in this area. RASA Pro’s analytics allow for in-depth performance monitoring, while A/B testing tools in RASA Studio enable teams to compare different dialogue strategies and refine them based on user feedback and interaction data.

Together, RASA Pro and Studio provide a powerful ecosystem for building, managing, and optimizing conversational AI. These tools streamline the development process, enhance collaboration across teams, and help keep chatbots aligned with users’ evolving conversational needs.

Ensuring Security and Compliance in RASA Implementations

Ensuring the security and compliance of chatbot implementations built using the RASA Framework involves several measures that developers and organizations must consider to protect user data, comply with regulatory requirements, and maintain trust.

To start with, the RASA framework’s open-source nature offers transparency but also requires vigilant security practices. Developers must adhere to best security practices throughout the development lifecycle. This includes monitoring libraries for vulnerabilities using tools like Dependabot or GitHub Security Alerts, ensuring dependencies are up-to-date, and applying patches regularly to mitigate risks of exploitation.

Data Encryption and Access Control

Implementing data encryption is critical to securing sensitive information processed by chatbots. Data should be encrypted both at rest and in transit, using strong algorithms such as AES (Advanced Encryption Standard) for stored data and protocols such as TLS (Transport Layer Security) for data in transit. This helps prevent unauthorized access and eavesdropping, especially when integrating RASA with third-party services or external databases.

Access control mechanisms are equally important. RASA implementations should use role-based access controls (RBAC) to ensure that only authorized users can interact with sensitive components, such as configuration files containing API keys or user interaction logs. This could involve integrating with directory services like Active Directory or using OAuth tokens for authentication and access management.
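At its core, RBAC maps roles to permissions and checks every sensitive operation against the caller’s roles. The sketch below reuses the Developer, Tester, and Admin roles from the RASA Pro example; the permission strings and the PermissionError-based enforcement are illustrative assumptions. In production, a user’s roles would typically come from your identity provider (for example, claims in an OAuth token) rather than being passed in by hand.

```python
from typing import Dict, FrozenSet, Iterable

# Illustrative role -> permission table
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "developer": frozenset({"model:train", "logs:read"}),
    "tester": frozenset({"model:test", "logs:read"}),
    "admin": frozenset({"model:train", "model:test", "model:deploy", "secrets:edit", "logs:read"}),
}


def is_allowed(roles: Iterable[str], permission: str) -> bool:
    """True if any of the user's roles grants the permission; unknown roles grant nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)


def require(roles: Iterable[str], permission: str) -> None:
    """Guard for sensitive operations such as editing API keys or deploying models."""
    if not is_allowed(roles, permission):
        raise PermissionError(f"missing permission: {permission}")


require(["admin"], "secrets:edit")  # passes silently
print(is_allowed(["developer"], "secrets:edit"))  # False
```

Denying by default (unknown roles map to an empty permission set) keeps a typo in a role name from silently granting access.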

Auditing and Monitoring

Regular auditing and monitoring are essential for identifying and responding to potential security incidents. Implement logging within the RASA environment to track chatbot interactions and system activities. Tools such as the ELK stack (Elasticsearch, Logstash, Kibana) can aggregate logs, visualize data, and help detect anomalies in real time.
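Log pipelines such as Logstash are easiest to feed with one JSON object per line. Here is a minimal sketch using Python’s standard logging module: a formatter that emits structured records with a few chatbot-specific fields. The field names (sender_id, intent, event) and the logger name are assumptions; pick a schema that fits your dashboards, and apply the data minimization measures from the compliance guidance before logging message content.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    # Assumed chatbot-specific fields, passed via logging's `extra` argument
    EXTRA_FIELDS = ("sender_id", "intent", "event")

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        for field in self.EXTRA_FIELDS:
            if hasattr(record, field):
                payload[field] = getattr(record, field)
        return json.dumps(payload)


def make_audit_logger(stream) -> logging.Logger:
    logger = logging.getLogger("chatbot.audit")
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace, so repeated calls don't duplicate output
    logger.propagate = False
    return logger


if __name__ == "__main__":
    buffer = io.StringIO()  # stands in for a file or socket read by a log shipper
    log = make_audit_logger(buffer)
    log.info("intent predicted",
             extra={"sender_id": "3f9a1c", "intent": "transfer_money", "event": "user_message"})
    print(buffer.getvalue(), end="")
```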

Organizations should configure proper alerting systems to notify relevant stakeholders of unusual activities or potential breaches. Integrating monitoring solutions like Prometheus and Grafana can provide insights into application performance and security events, helping teams to respond swiftly and maintain service integrity.

Compliance Considerations

Compliance with regulations such as GDPR (General Data Protection Regulation) or CCPA (California Consumer Privacy Act) is paramount when handling user data. RASA implementations should include privacy by design principles, limiting data collection to only what is necessary for functionality and ensuring compliance with data subject requests, such as data deletion or information access.

Implement data minimization techniques, ensuring that only necessary data is processed or stored, and consider pseudonymization or anonymization for datasets wherever possible. Regularly evaluate and document data processing activities, and give users a clear, transparent privacy notice.
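Two of these techniques can be applied before anything is stored: replacing stable identifiers with keyed hashes (pseudonymization) and masking obvious personal data in free text. The sketch uses HMAC-SHA256 so the same sender always maps to the same pseudonym without the raw ID being kept; the key shown in the usage is a placeholder and would in practice live in a secrets manager, separate from the data. The regular expressions are deliberately simple illustrations that will miss many formats, so treat them as a starting point rather than a complete PII detector. Note also that pseudonymized data generally still counts as personal data under GDPR.

```python
import hashlib
import hmac
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")  # crude: 9+ digits, spaces, or dashes


def pseudonymize(sender_id: str, key: bytes) -> str:
    """Stable keyed hash of an identifier (truncated to 16 hex chars = 64 bits)."""
    return hmac.new(key, sender_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact(text: str) -> str:
    """Mask e-mail addresses and phone-like digit runs before storage."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


key = b"placeholder-key-load-from-secrets-manager"
print(pseudonymize("user-42", key))
print(redact("Mail me at jane.doe@example.com or call +1 555-123-4567"))
# Mail me at [EMAIL] or call [PHONE]
```

A keyed hash is used instead of a plain SHA-256 because unkeyed hashes of guessable IDs can be reversed by brute force.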

Incident Response and Risk Management

Develop a robust incident response plan that outlines steps to take in the event of a data breach or security incident. This plan should define roles, communication plans, and decision-making processes to quickly isolate and mitigate threats.

Conduct regular risk assessments to identify and address potential vulnerabilities specific to your RASA deployment. Threat modeling sessions can help visualize attack vectors, allowing developers to anticipate and prepare for security challenges.

Regular Training and Updates

Continuous training for development teams on security best practices and compliance requirements ensures awareness and adaptation to new threats. Encourage a culture of security within your team by offering resources and incentives for staying informed about the latest vulnerabilities and security trends.

Finally, maintain a schedule for regular updates to your RASA environment, encompassing both the framework and its dependencies. This proactive approach will help fortify against evolving threats and maintain compliance with the latest privacy standards.

By implementing these security and compliance measures, organizations can effectively safeguard their RASA-based conversational AI systems, build user trust, and adhere to legal obligations, ensuring robust and resilient chatbot operations.