Introduction to Real-Time Speech-to-Speech Technology

Real-time speech-to-speech technology combines speech recognition, translation, and synthesis to enable cross-lingual spoken communication. It involves three primary components: speech recognition to convert spoken words into text, translation to render that text in a different language, and speech synthesis to produce spoken words in the target language. By integrating these components, voice assistants can support natural conversations between speakers of different languages in real time.

One of the foundational technologies in this field is Automatic Speech Recognition (ASR). ASR systems use machine learning models to transcribe spoken language into written text. These models are typically trained on massive datasets of paired audio and text to learn patterns in speech. To transcribe accurately, they must handle variation in accent, intonation, and speaking style.

Once speech has been transcribed into text, the next step is language translation, which draws on Natural Language Processing (NLP). Neural translation models have significantly improved at capturing the context and subtleties of language. Unlike traditional phrase-based or rule-based methods, neural machine translation uses large neural networks to model semantic relationships between words across a whole sentence, which helps produce more accurate and fluent translations.

The final component of the technology is Text-to-Speech (TTS), which synthesizes spoken language from text. With the development of neural TTS systems, the quality and naturalness of synthesized speech have dramatically improved. Techniques such as WaveNet and WaveRNN leverage deep learning to model speech waveforms directly, producing human-like intonation and cadence, allowing for lifelike synthetic voices.
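The three stages described so far compose naturally as a chain of functions. The following is a minimal sketch under stated assumptions, not any vendor's API: `SpeechPipeline` and the `fake_*` functions are hypothetical stand-ins that let the example run without a real ASR, translation, or TTS backend.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SpeechPipeline:
    """Chains the three stages: audio -> text -> translated text -> audio."""
    recognize: Callable[[bytes], str]    # ASR: audio bytes to source-language text
    translate: Callable[[str], str]      # translation: source text to target text
    synthesize: Callable[[str], bytes]   # TTS: target text to audio bytes

    def run(self, audio: bytes) -> bytes:
        text = self.recognize(audio)
        translated = self.translate(text)
        return self.synthesize(translated)


# Hypothetical stand-ins so the sketch runs without any cloud service.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")         # pretend the "audio" is already text


def fake_translate(text: str) -> str:
    return {"hello": "hola"}.get(text, text)


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")          # pretend the text is "audio"


pipeline = SpeechPipeline(fake_asr, fake_translate, fake_tts)
result = pipeline.run(b"hello")
```

Keeping each stage behind a plain callable makes it possible to swap one provider for another, or to mix providers across stages, without touching the rest of the application.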

Combining these technologies in real time presents significant challenges. Computational efficiency is paramount: each stage must be fast without sacrificing accuracy. Latency must also be kept low enough that the exchange feels like a natural conversation rather than a series of pauses. Meeting these goals requires high-performance processing, and many systems rely on cloud-based platforms that provide scalable infrastructure for the demanding tasks of speech processing.

Real-time speech-to-speech technology is not only transformative for personal digital assistants but is also being integrated into customer service applications, healthcare, and education. For example, a health app could use this technology to converse with patients in their native language, enhancing accessibility and convenience. In customer service, it can facilitate instant translations, making global business interactions smoother and more personable.

These advancements in speech technology are also fostering the creation of inclusive digital solutions. By breaking down language barriers and promoting accessibility, real-time speech-to-speech technology holds promise for not just technological innovation, but social transformation as well. As AI and machine learning continue to advance, the range of applications and the accuracy of this technology are likely to grow, offering more refined and user-friendly communication tools.

Key Features to Consider in Speech-to-Speech APIs and Libraries

When evaluating speech-to-speech APIs and libraries, there are several key features and capabilities to consider to ensure optimal performance and suitability for your application. These criteria matter whether you’re developing a multilingual voice assistant, a real-time translation service, or an interactive educational tool.

1. Language Support and Translation Accuracy

One of the foremost considerations is the breadth of language support and the accuracy of translations offered by the API or library. Comprehensive language support enables broader application across different nationalities and regions, which is particularly valuable for businesses aiming to reach global audiences. Look for APIs that not only support major languages like English, Spanish, and Mandarin but also cater to less common languages and dialects. Additionally, the translation accuracy needs to be evaluated based on semantic understanding and contextual appropriateness. APIs leveraging neural networks for translation, such as those using Transformer models, tend to offer more nuanced and contextually aware translations, which are often required for professional applications.

2. Integration Ease and Compatibility

Ease of integration is another critical feature. APIs that are well-documented and supported with robust SDKs (Software Development Kits) for multiple programming languages will significantly streamline the development process. Consider whether the library or API is compatible with the platforms you are targeting, such as web applications, iOS, Android, or desktop software. For instance, Google Cloud’s speech services (Speech-to-Text, Translation, and Text-to-Speech) offer comprehensive documentation, sample code, and community support that facilitate smooth integration into various applications.

3. Real-Time Processing Capabilities

Given the increasing demand for real-time voice interactions, the ability to process speech quickly and efficiently is crucial. Evaluate the latency levels associated with the API or library: low latency is essential for applications requiring real-time interaction, such as live conversational agents. The trade-off between speed and quality must be carefully managed. Ensure that the API can handle simultaneous requests efficiently, which is often facilitated by cloud-based infrastructures.
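Handling simultaneous requests usually means capping how many are in flight at once. The sketch below is one way to do that with `asyncio`; `handle_request` is a hypothetical placeholder whose `sleep` stands in for a network call, and the limit of 4 is an arbitrary assumption rather than any provider's quota.

```python
import asyncio


async def handle_request(request_id: int, limiter: asyncio.Semaphore) -> str:
    """Simulates one speech request; the sleep stands in for a network call."""
    async with limiter:
        await asyncio.sleep(0.01)
        return f"done-{request_id}"


async def handle_many(count: int, max_in_flight: int) -> list[str]:
    # Cap the number of requests in flight so a burst does not exceed
    # whatever concurrency limit the backend can sustain.
    limiter = asyncio.Semaphore(max_in_flight)
    tasks = [handle_request(i, limiter) for i in range(count)]
    return await asyncio.gather(*tasks)   # results keep the submission order


results = asyncio.run(handle_many(count=10, max_in_flight=4))
```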

4. Speech-to-Text and Text-to-Speech Quality

The quality of speech recognition (speech-to-text) and speech synthesis (text-to-speech) is vital for user experience. High ASR accuracy and TTS quality can significantly impact how users perceive the responsiveness and intelligence of your application. Look for technologies using cutting-edge techniques like Deep Neural Networks (DNNs) to improve accuracy and naturalness. For instance, Amazon Polly uses advanced deep learning technologies to synthesize natural-sounding speech.
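ASR accuracy is commonly quantified as word error rate (WER): the word-level edit distance between a reference transcript and the recognizer's output, divided by the number of reference words. The text above does not name a metric, so treat this as one reasonable choice; the function below is a minimal sketch that lowercases and splits on whitespace, whereas production evaluations usually apply more careful text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between ref[:i-1] and hyp[:j].
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# One deleted word ("the") and one substitution ("lights" -> "light")
# against five reference words.
wer = word_error_rate("turn on the kitchen lights", "turn on kitchen light")
```

Running the same set of recordings through each candidate API and comparing WER gives a like-for-like view of recognition quality on your own audio.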

5. Customization and Adaptability

Consider the customization capabilities offered by the API or library. Customizable models can adapt more precisely to your specific domain, whether it’s medical terminology, technical jargon, or casual conversational style. Some APIs allow custom vocabularies or pronunciation adjustments that can greatly enhance user experience in niche applications. The ability to train the model with domain-specific datasets can further improve accuracy and relevance.
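Where a provider's own custom-vocabulary feature is unavailable or insufficient, a rough approximation is to correct known misrecognitions after transcription. The sketch below illustrates that idea only; it does not reproduce how any provider biases its recognizer, and the `DOMAIN_CORRECTIONS` entries are invented examples.

```python
import re

# Hypothetical corrections for a medical assistant: common misrecognitions
# on the left, the intended domain term on the right.
DOMAIN_CORRECTIONS = {
    "met formin": "metformin",
    "a fib": "AFib",
}


def apply_domain_vocabulary(transcript: str, corrections: dict[str, str]) -> str:
    """Replaces whole-phrase matches, ignoring case, longest phrase first."""
    for wrong in sorted(corrections, key=len, reverse=True):
        right = corrections[wrong]
        pattern = r"\b" + re.escape(wrong) + r"\b"
        # A function replacement avoids treating backslashes in `right` specially.
        transcript = re.sub(pattern, lambda _m: right, transcript, flags=re.IGNORECASE)
    return transcript


fixed = apply_domain_vocabulary("Patient takes Met Formin for a fib", DOMAIN_CORRECTIONS)
```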

6. Cost and Licensing Considerations

Finally, the cost structure and licensing agreements are essential considerations. Determine whether the pricing model aligns with your budget, especially if you anticipate scaling your application. Some APIs offer pay-as-you-go models, which can be beneficial for startups, while others might offer enterprise-level licensing for broader usage. Examine the terms of service for any usage restrictions or data handling policies, particularly concerning privacy and security of user data.
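A quick way to compare pay-as-you-go pricing is to estimate monthly cost from expected usage. In the sketch below every rate is an invented placeholder, not any provider's price list, and the billing units (per audio minute for recognition, per million characters for translation and synthesis) are assumptions that should be checked against the actual pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """All rates are invented placeholders, not any provider's price list."""
    asr_per_minute: float                  # speech recognition, per audio minute
    translation_per_million_chars: float
    tts_per_million_chars: float


def monthly_cost(pricing: Pricing, audio_minutes: float, chars_per_minute: float) -> float:
    """Sums the three stage costs for a month of usage."""
    chars = audio_minutes * chars_per_minute
    asr = audio_minutes * pricing.asr_per_minute
    translation = chars / 1_000_000 * pricing.translation_per_million_chars
    tts = chars / 1_000_000 * pricing.tts_per_million_chars
    return round(asr + translation + tts, 2)


example = Pricing(asr_per_minute=0.02, translation_per_million_chars=20.0,
                  tts_per_million_chars=16.0)
estimate = monthly_cost(example, audio_minutes=10_000, chars_per_minute=800)
```

With these placeholder rates, 10,000 audio minutes at 800 characters per minute comes to 200 for recognition, 160 for translation, and 128 for synthesis, or 488 in total.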

By carefully evaluating these key features, developers and businesses can select the most appropriate speech-to-speech API or library that meets their objectives, enhances functionality, and provides a seamless user experience.

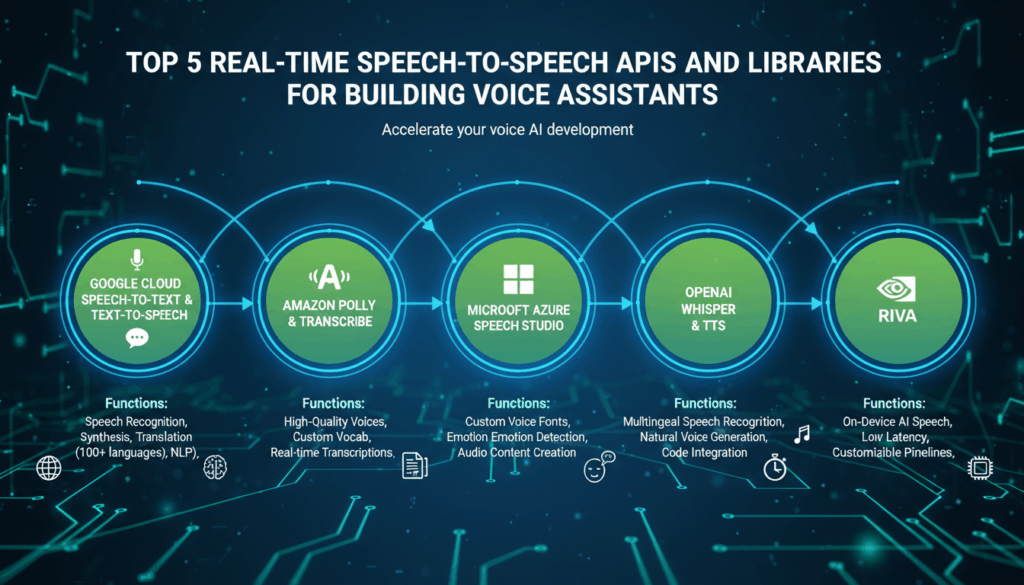

Top 5 Real-Time Speech-to-Speech APIs and Libraries for Voice Assistants

When developing voice assistants, selecting a robust speech-to-speech API is essential to ensure seamless, real-time interactions. Among the offerings available, five stand out due to their comprehensive features, ease of integration, and real-time capabilities.

Google Cloud Speech-to-Text, Translation, and Text-to-Speech

Google Cloud provides speech-to-speech capability through a combination of its Speech-to-Text, Translation, and Text-to-Speech APIs, which together set a high standard for real-time processing and integration. The services offer extensive language support and use advanced machine learning models, including deep neural networks, to deliver high-quality transcription and synthesis.

Features:

– Wide Language Compatibility: Supports a vast array of languages and dialects, even accommodating regional variations, which is crucial for global applications.

– Easy Integration: Provides comprehensive SDKs and documentation that facilitate seamless integration with multiple platforms such as Android and iOS.

– Real-Time Processing: Offers efficient real-time speech recognition with minimal latency, ensuring responsive interactions.

For example, getting started involves creating a project within Google Cloud, enabling the Speech-to-Text API, and using the client libraries available for different programming languages to implement speech recognition features.
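As a rough illustration of the client-library step, the sketch below transcribes a short clip with the `google-cloud-speech` Python package. It assumes the package is installed, that Application Default Credentials are configured for a project with the API enabled, and that the audio is 16 kHz, 16-bit mono PCM; the function name `transcribe_pcm` is hypothetical. The synchronous `recognize` call suits short clips, while live conversation would more likely use the library's streaming interface.

```python
def transcribe_pcm(audio_bytes: bytes, language_code: str = "en-US") -> str:
    """Transcribes a short 16 kHz, 16-bit mono PCM clip with Cloud Speech-to-Text."""
    # Imported lazily so this module loads even where the package is absent.
    from google.cloud import speech

    client = speech.SpeechClient()  # uses Application Default Credentials
    config = speech.RecognitionConfig(
        encoding=speech.RecognitionConfig.AudioEncoding.LINEAR16,
        sample_rate_hertz=16000,
        language_code=language_code,
    )
    audio = speech.RecognitionAudio(content=audio_bytes)
    response = client.recognize(config=config, audio=audio)
    # Each result covers a consecutive portion of the audio; keep the top alternative.
    return " ".join(r.alternatives[0].transcript for r in response.results)
```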

Amazon Transcribe and Polly

Amazon’s combination of Transcribe for speech recognition and Polly for speech synthesis creates a powerful speech-to-speech capability suitable for dynamic voice assistant applications.

Features:

– Customization: Provides options for custom pronunciations and vocabulary, allowing for adaptation to specific industry needs.

– Integration and Scalability: Easily integrates with other AWS services, offering scalability and reliability critical for enterprise-level applications.

– Advanced Neural Network Models: Leverages advanced models to improve the naturalness of generated speech, resulting in lifelike voice outputs.

Integrating these services typically involves utilizing AWS SDKs to connect and manage transcription and voice synthesis tasks through code.

IBM Watson Speech-to-Text and Text-to-Speech

IBM Watson delivers state-of-the-art speech-to-speech capabilities through its cloud-based AI services. Watson’s API provides extensive customization and fine-grained control over voice synthesis parameters.

Features:

– Extensive Customization: Enables users to tailor the voice characteristics, such as pitch and speed, and supports custom language models to enhance accuracy in niche fields.

– Robust Language Models: Utilizes Watson’s powerful AI to support various languages and handle domain-specific lexicons expertly.

– Real-Time Transcription and Synthesis: Designed for low-latency applications, making it suitable for high-demand environments.

Developers can start by creating an IBM Cloud account, setting up the relevant services, and using Watson’s SDKs to streamline development.
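Voice characteristics such as pitch and speed are commonly expressed in SSML, the W3C markup for speech synthesis, through the `<prosody>` element. The helper below is a minimal sketch of building such markup safely; `build_ssml` is a hypothetical name, and which attribute values a given service honors is an assumption to verify against that service's documentation.

```python
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text: str, rate: str = "medium", pitch: str = "medium") -> str:
    """Wraps text in an SSML <prosody> element controlling speed and pitch."""
    return (
        "<speak>"
        f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{escape(text)}"          # escape &, < and > so user text cannot break the markup
        "</prosody>"
        "</speak>"
    )


ssml = build_ssml("Tom & Jerry start at 5 < 6", rate="slow", pitch="high")
```

Escaping matters in practice because translated text frequently contains ampersands or angle brackets that would otherwise produce invalid markup.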

Microsoft Azure Speech Service

Microsoft’s Azure Speech offers comprehensive services that combine speech recognition, translation, and synthesis for real-time applications. Known for its accuracy and seamless integration, Azure is a strong contender in the speech domain.

Features:

– Multilingual Support with High Accuracy: Provides broad language support and precise translation capabilities powered by sophisticated AI models.

– Integration with Azure Ecosystem: Easily integrates with other Azure services, aiding in efficient scaling and management of applications.

– Real-Time Processing: Delivers low-latency responses, crucial for real-time voice assistant applications.

Azure provides a well-documented API interface and an array of SDKs that facilitate integration and configuration, making it accessible for both small and large enterprises.

iFLYTEK AI Voice

iFLYTEK, a leading AI company, offers a speech-to-speech solution specifically renowned for its Mandarin language processing capabilities, making it especially valuable for applications in Chinese-speaking markets.

Features:

– Specialized Language Support: Focuses on Chinese speech recognition and synthesis with exceptional accuracy, advantageous for localized applications.

– Customization Options: Allows tweaking voice synthesis and integrating tailored vocabularies to enhance contextual understanding.

– Integration and Performance: Delivers efficient real-time capabilities and supports various platforms for seamless deployment.

Using iFLYTEK involves accessing its API through a developer account, where documentation and community resources assist in implementation.

In conclusion, these APIs not only underscore the diversity available in speech-to-speech technology but also emphasize the importance of choosing an API that aligns with specific project requirements, whether that involves language support, customization, or integration with existing systems. Each service brings its distinct advantages, enabling developers to enhance their voice assistant applications effectively.

Integrating Speech-to-Speech APIs into Voice Assistant Applications

Integrating Speech-to-Speech APIs into voice assistant applications involves several intricate steps that leverage advanced technologies to achieve natural and seamless interactions. This integration process combines various components such as Automatic Speech Recognition (ASR), Natural Language Processing (NLP), Language Translation, and Text-to-Speech (TTS) systems.

When beginning the integration, a critical first step is selecting the appropriate API that aligns with the specific needs of your application. For instance, if the target audience primarily speaks a specific language, you might choose an API that excels in that language. Consider factors like language support, ease of integration, and customization options.

API Setup and Configuration

- API Selection and Credential Acquisition: Start by choosing a preferred API provider based on the languages needed and integration capabilities. Obtain API keys and configure authentication details within your application to ensure secure communication with the API server.

- Installation of SDKs: Most API providers offer Software Development Kits (SDKs) for various programming languages, which simplify communication with the API. These SDKs typically include built-in functions for speech recognition, translation, and speech synthesis.

- Function Implementation: Implement the necessary functions in your application. For instance, for Google Cloud’s API, you would utilize their client libraries to call speech recognition functions, process the text, and send it to the translation module before finally using TTS to synthesize the translated speech.
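For the credential step, one common pattern is to read keys from environment variables instead of hard-coding them. The sketch below assumes that pattern; the variable names (`SPEECH_API_KEY` and the others), the defaults, and the `SpeechApiConfig` class are all hypothetical and do not correspond to any particular provider.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class SpeechApiConfig:
    api_key: str
    region: str
    source_language: str
    target_language: str


def load_config(env: Optional[Mapping[str, str]] = None) -> SpeechApiConfig:
    """Builds the config from environment variables, failing fast if the key is missing."""
    env = os.environ if env is None else env
    api_key = env.get("SPEECH_API_KEY")            # hypothetical variable name
    if not api_key:
        raise RuntimeError("SPEECH_API_KEY is not set")
    return SpeechApiConfig(
        api_key=api_key,
        region=env.get("SPEECH_API_REGION", "us-east-1"),
        source_language=env.get("SOURCE_LANGUAGE", "en-US"),
        target_language=env.get("TARGET_LANGUAGE", "es-ES"),
    )


# A plain dict stands in for the real environment in this example.
config = load_config({"SPEECH_API_KEY": "demo-key", "TARGET_LANGUAGE": "fr-FR"})
```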

Designing the Workflow

- Speech Input Processing: Capture audio input using the device’s microphone and send it to the ASR system. Buffer audio that has not yet been processed so that capture can continue while earlier chunks are in flight, which helps keep latency low.

- Text Processing and Translation: Once the audio is converted to text, apply NLP techniques to interpret context and semantics. Utilize an integrated translation module to convert the output into the target language. This step may involve preprocessing tasks like text normalization or handling domain-specific language nuances.

- Speech Synthesis: Convert the translated text back into speech using a TTS system. Modern TTS solutions like Amazon Polly or Google’s WaveNet offer human-like speech rendering, which enhances the quality of the interaction.
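The buffering in the speech input step often amounts to slicing captured audio into short fixed-duration frames that can be sent as they arrive. The sketch below assumes 16 kHz, 16-bit mono PCM and 100 ms frames; those values and the `frames` helper are illustrative choices, not requirements of any API.

```python
from typing import Iterator

SAMPLE_RATE_HZ = 16_000     # assumed capture rate
BYTES_PER_SAMPLE = 2        # 16-bit mono PCM


def frames(pcm: bytes, frame_ms: int = 100) -> Iterator[bytes]:
    """Yields fixed-duration frames; the last frame may be shorter."""
    frame_bytes = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * frame_ms // 1000
    for start in range(0, len(pcm), frame_bytes):
        yield pcm[start:start + frame_bytes]


one_second = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)   # one second of silence
recording = one_second + b"\x00" * 10                   # plus a short trailing remainder
chunks = list(frames(recording))
```

Shorter frames reduce the delay before the recognizer sees new audio but increase the number of messages sent, so the frame length is a tuning parameter.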

Optimization and Testing

- Latency Minimization: Reduce end-to-end latency by optimizing network requests and using computationally efficient algorithms. Techniques such as asynchronous processing and pre-fetching likely responses can further improve responsiveness.

- Quality Assurance: Conduct rigorous testing to ensure accuracy and naturalness of speech and translation quality. Iteratively refine models based on user interactions, feedback, and performance metrics.

- Error Handling and Fail-Safes: Implement robust error handling and fail-safes for scenarios such as poor network connectivity, ambiguous translations, or API downtime. Providing users with a fallback or retry mechanism can enhance reliability.
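A retry-then-fallback mechanism can be sketched as follows. This is a minimal illustration assuming that transient failures surface as `ConnectionError`; real SDKs raise their own exception types, and `flaky_translate` is a hypothetical backend that fails twice before succeeding.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(operation: Callable[[], T], fallback: Callable[[], T],
                    attempts: int = 3, base_delay: float = 0.05) -> T:
    """Retries on ConnectionError with exponential backoff, then falls back."""
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt < attempts - 1:
                time.sleep(base_delay * 2 ** attempt)   # delay doubles after each failure
    return fallback()


calls = {"count": 0}


def flaky_translate() -> str:
    # Hypothetical backend that fails twice before succeeding.
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated network failure")
    return "hola"


answer = call_with_retry(flaky_translate, fallback=lambda: "[translation unavailable]")
```

In a voice assistant the fallback might be a spoken apology in the user's language or a cached response, so the conversation degrades gracefully instead of going silent.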

Final Integration

Embed these functionalities into a cohesive user interface. Consider intuitive design principles and voice command interfaces that facilitate effortless user interaction. Voice assistants should be tested extensively in real-world scenarios to ensure they meet user expectations for consistency and accuracy.

Through careful consideration of these elements, developers can effectively integrate speech-to-speech APIs into voice assistant applications, resulting in a comprehensive solution that enhances usability and provides a compelling user experience. Each API’s unique features, such as model customization and extensive language support, can be harnessed to tailor the solution to specific industry needs, whether in customer support, healthcare, or education.

Best Practices for Optimizing Performance and Accuracy

To achieve optimal performance and maximize accuracy in developing real-time speech-to-speech applications, several best practices are essential. These practices not only improve computational efficiency but also ensure high-quality user experiences.

One of the critical factors in optimizing performance is careful model selection and deployment. Choose models designed for real-time use, prioritizing low latency and high throughput. Deep neural networks and transformer-based architectures deliver high accuracy, but they vary widely in computational cost, so favor variants built for streaming or compact inference where the accuracy trade-off is acceptable.

Network optimization is another key consideration. Minimize data transfer times by compressing audio before sending it and by preferring protocols that keep a connection open for streaming over ones that open a new connection for every request. Ensuring a high-bandwidth connection and placing servers geographically closer to users can also reduce lag and improve response times.

The use of caching is a potent method for enhancing performance, especially when dealing with frequently requested translations or text-to-speech outputs. Implement intelligent caching strategies to store and quickly retrieve commonly used phrases and language models. This technique ensures faster access times and lowers system load.
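For repeated translations, an in-memory cache can be sketched with the standard library's `functools.lru_cache`. Here `slow_translate` is a hypothetical stand-in for a network call, and the cache size of 1024 is an arbitrary assumption.

```python
from functools import lru_cache

backend_calls = 0


def slow_translate(text: str, target_language: str) -> str:
    """Hypothetical stand-in for a network call to a translation service."""
    global backend_calls
    backend_calls += 1
    return f"[{target_language}] {text}"


@lru_cache(maxsize=1024)
def cached_translate(text: str, target_language: str) -> str:
    # Both arguments are hashable, so identical requests hit the in-memory cache.
    return slow_translate(text, target_language)


first = cached_translate("Good morning", "es")
second = cached_translate("Good morning", "es")   # served from the cache
third = cached_translate("Good morning", "fr")    # different key, new backend call
```

A per-process cache like this is lost on restart and is not shared between servers; a shared store would be needed for that, at the cost of an extra network hop.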

Load balancing is another strategy that can sustain performance during high-traffic periods. Distributing inbound requests evenly across multiple servers ensures that your system can handle large volumes of data efficiently. This is particularly important for scalable applications where demand can fluctuate dramatically.
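The simplest form of even distribution is round-robin rotation. The sketch below shows the idea on the client side with hypothetical worker names; in practice this job is usually handled by a managed load balancer rather than application code.

```python
import itertools
from collections import Counter

# Hypothetical worker names, used only for illustration.
WORKERS = ["tts-worker-1", "tts-worker-2", "tts-worker-3"]
_rotation = itertools.cycle(WORKERS)


def pick_worker() -> str:
    """Returns the next worker in round-robin order."""
    return next(_rotation)


# Nine requests spread over three workers.
assignments = Counter(pick_worker() for _ in range(9))
```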

Enhancing accuracy involves leveraging specialized algorithms and training data. Employ domain-specific language models to improve ASR and translation accuracy. Custom vocabularies and phrase lists tailored to your application’s context help the system understand and process user inputs more accurately. Periodic retraining of these models with updated datasets can help them adapt to new languages or dialects, maintaining their relevance over time.

Moreover, implementing contextual awareness can significantly enhance both performance and accuracy. Use Natural Language Processing (NLP) techniques to ascertain the context and semantics of the speech. Contextual models enable more precise translation and natural voice outputs. Techniques such as sentiment analysis and intent recognition help the system pick up subtleties in speech and respond appropriately.

Conducting extensive testing is vital for identifying performance bottlenecks and accuracy issues. Use real-world scenarios to test how efficiently the system processes various inputs. Monitor key performance indicators (KPIs) such as latency, throughput, and error rates, tweaking system parameters to resolve detected issues promptly.
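Latency is usually more informative as a distribution than as an average, since a few slow requests dominate how an assistant feels. The sketch below summarizes median latency, 95th-percentile latency, and error rate; the sample measurements are made up for illustration, and `summarize` is a hypothetical helper.

```python
import statistics


def summarize(latencies_ms: list[float], errors: int) -> dict[str, float]:
    """Computes median and 95th-percentile latency plus error rate."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    # The inclusive method interpolates within the observed range.
    p95 = statistics.quantiles(latencies_ms, n=20, method="inclusive")[-1]
    total = len(latencies_ms) + errors      # failed requests have no latency sample
    return {
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": p95,
        "error_rate": errors / total,
    }


# Made-up measurements for illustration only; note the single slow outlier.
sample = [120, 135, 128, 410, 131, 125, 140, 133, 129, 138]
report = summarize(sample, errors=2)
```

In this sample the median stays close to the typical request while the 95th percentile is pulled upward by the one outlier, which is the kind of tail behavior that an average would hide.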

Finally, prioritize user feedback and iterative updates. User interaction data provides invaluable insights into system efficacy. Regularly update the application based on user feedback, ensuring new features and improvements are aligned with user expectations and contribute to the high quality of interactions.

By incorporating these best practices, developers can ensure their speech-to-speech applications perform optimally and deliver precise, responsive, and reliable user experiences. The ongoing improvement of AI models and infrastructure will only continue to refine these systems further, enhancing their capabilities and reach across diverse applications.