What is LLM hallucination?

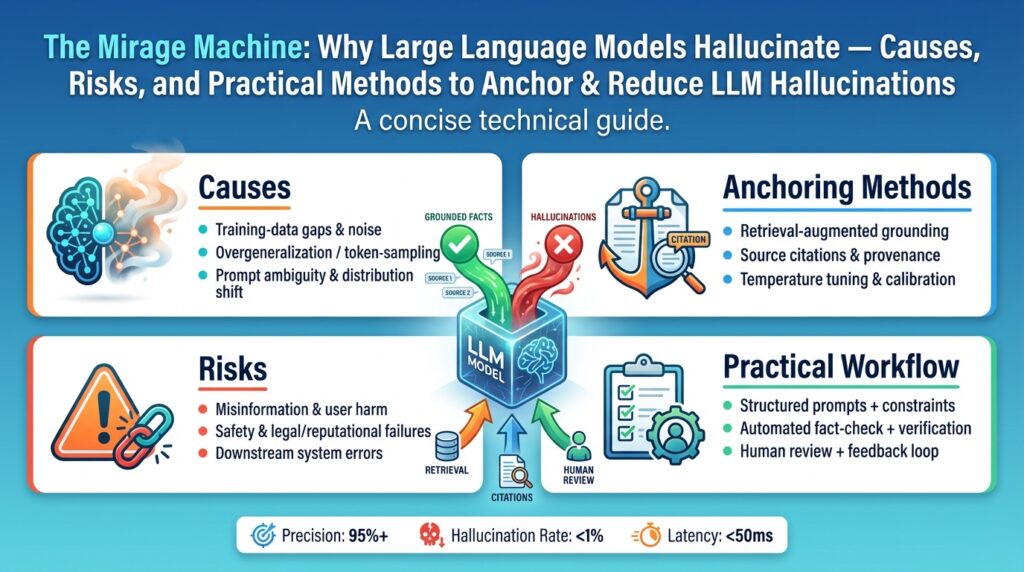

When you rely on a large language model for answers, the most insidious failure mode isn’t a crash or an error code—it’s fluent, confident misinformation. We call that LLM hallucination: the model producing statements that are plausible-sounding but factually incorrect, fabricated, or unsupported by available evidence. Why does this happen, and how does it look in practice? Those are the practical questions you’ll want answered before you deploy models into production systems that make decisions or inform users.

At a technical level, hallucination arises from how models are trained and how they generate text. The core training objective for most modern models is next-token prediction: learn statistical patterns in sequences of words from massive corpora and pick the most likely continuation. This optimizes fluency and pattern completion, not truth verification. During decoding, sampling choices (temperature, top-k/top-p) trade off determinism and creativity, which can increase the chance the model invents details. In other words, the model is a sophisticated pattern matcher, not a grounded oracle; it synthesizes plausible-sounding assertions without an inherent fact-checker.

Not all hallucinations are the same; practical taxonomy helps you reason about mitigation. Extrinsic hallucinations are claims about external facts or documents the model cannot verify—fabricated citations, nonexistent legislation, or an invented API method. Intrinsic hallucinations are internal inconsistencies the model makes within a generated answer—contradictory dates, impossible parameter values, or flawed arithmetic steps. For example, a code assistant that returns a library function signature that doesn’t exist demonstrates an extrinsic hallucination, whereas a multi-step reasoning answer that contradicts itself mid-stream exhibits intrinsic hallucination.

The risk profile and detectability of hallucination vary with task and context. High-stakes domains—medical, legal, financial—amplify harm because fluent falsehoods are persuasive; in developer tools, hallucinated code can silently break pipelines or introduce security bugs. Spotting hallucinations requires skepticism: check provenance, request explicit citations, and validate outputs against authoritative sources or test suites. You can reduce surface risk by tuning decoding parameters, constraining generation with templates, or using retrieval-augmented generation (RAG) so the model conditions on verifiable documents, but none of these eliminate hallucination entirely.

Building on this foundation, we now have a clear, operational definition: LLM hallucination is the model-generated production of plausible but unverified or incorrect content caused by predictive training and unconstrained decoding. We’ll next unpack the root causes in depth, quantify the practical harms you should prioritize in your projects, and then walk through concrete engineering patterns to anchor outputs—retrieval, grounding, calibration, and monitoring—so you can deploy with measurable confidence rather than hope.

Why models hallucinate

Building on this foundation, the root of fluent falsehoods is a mismatch between the model’s optimization goal and the demands of truth-seeking. Large language models are trained to predict the next token given context, which rewards plausibility and syntactic coherence over factual accuracy; as a result, the model learns statistical associations rather than explicit facts verified against an external reality. Because we ask models to behave like experts, they often amplify confident-sounding patterns learned from training text even when those patterns are unsupported, producing what we call LLM hallucination early and often in open-ended tasks.

A second major cause is noisy, inconsistent, or incomplete training data. Training corpora aggregate web pages, books, code, and user-generated text with varying quality, contradictions, and stale information; when the model encounters gaps or conflicting signals, it interpolates from nearby patterns and fills blanks with plausible—but incorrect—tokens. This is especially visible in long-tail domains where authoritative examples are rare; the model will synthesize an answer that looks like the scarce sources, creating fabrications rather than admitting uncertainty. Because the model compresses knowledge into parameters, it cannot mark which facts are well-supported versus which are inferred, and that compression drives many extrinsic hallucinations.

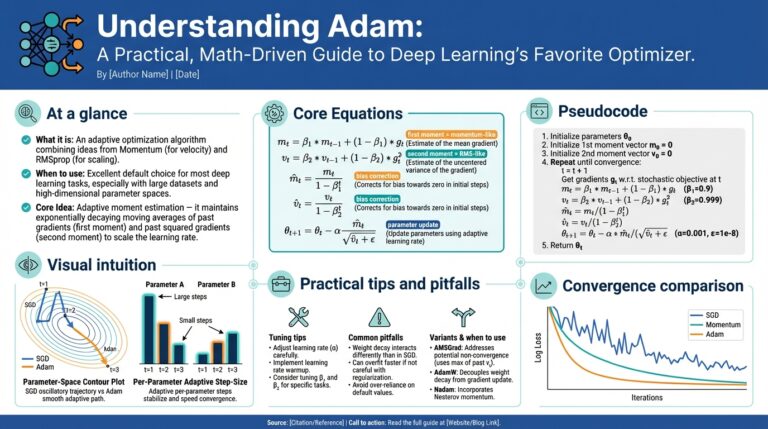

Decoding dynamics and sampling choices amplify hallucination risk in production. When you increase temperature or use wide top-p sampling to get creative outputs, you trade determinism for diversity—and you also increase the probability of inventing unsupported details. Conversely, greedy decoding (for example, temperature=0) suppresses creativity but can still yield confidently wrong answers if the learned distribution favors a false completion. How do you end up with a model asserting nonexistent API endpoints or invented citations? Often it’s a combination of partial prompt context, permissive sampling, and the model’s learned prior that “looks like” an API signature.

Distribution shift between training and runtime prompts is another practical driver. The model sees a different distribution of inputs during deployment—shorter contexts, different task framing, or domain-specific jargon—and that mismatch encourages the model to rely on generic priors rather than grounded evidence. Instruction-following tweaks such as system prompts and chain-of-thought supervision change how the model prioritizes fluency versus cautiousness, so an otherwise accurate assistant can begin fabricating when given unusually framed queries or when asked to extrapolate beyond its knowledge cutoff. Prompt engineering reduces but does not eliminate this class of hallucination.

Architectural gaps matter: these models lack an internal, authoritative verifier. There is no built-in process that checks a generated statement against a canonical database, runs unit tests, or queries an external API unless we wire one in. In practice, the model’s “knowledge” is an implicit, distributed memory—an associative network that reconstructs likely continuations from similar contexts—so when a ground-truth document isn’t available in the context window, the model will synthesize. Retrieval-augmented generation (RAG) helps by providing evidence, but it transfers the problem: if the retriever misses documents or returns low-quality sources, the generator will still hallucinate, now anchored to weak references.

Intrinsic modelling behaviors like overconfidence and shortcut learning also contribute. Models are typically poorly calibrated: they output high-probability tokens with unjustified certainty. They also learn shortcuts in training—surface cues that correlate with certain answers without causal grounding—so small prompt changes can trigger a confident but wrong response. From an engineering perspective, this means hallucination is not only a data problem or a decoding problem; it’s a systemic interaction across objective, data, architecture, and operational setup.

Taken together, these mechanisms explain why hallucination persists even after practical mitigations like temperature tuning or RAG are applied. Understanding the interplay—objective mismatch, training data noise, sampling dynamics, distribution shift, lack of verification, and model overconfidence—lets us focus on targeted controls: better retrieval, calibration, provenance, and end-to-end testing. In the next section we’ll quantify the harms you should prioritize and start mapping those controls to concrete engineering patterns you can implement.

Risks and real-world impact

A single confident-sounding lie from an LLM can cascade into real-world cost, downtime, or harm. LLM hallucination isn’t a theoretical nuisance; it’s an operational risk that shows up as bad decisions, broken automation, and legal exposure. Building on the technical causes we covered earlier, here we look at what actually breaks when a model invents facts, and why you should prioritize mitigation as part of engineering risk management. How do hallucinations translate into operational harm?

Fluent falsehoods create immediate, measurable failures in high-stakes domains. In healthcare, a generated recommendation that misstates a dosage or omits contraindications can cause clinical errors when clinicians accept model output without verification. In legal or compliance workflows, an invented precedent or statute citation can steer counsel toward incorrect conclusions and expose firms to malpractice. In developer tooling, hallucinated API signatures or package names slip into code, compile locally, and surface only later in production—sometimes as security vulnerabilities when the wrong library is introduced. These are not edge cases; they map directly to patient safety incidents, regulatory penalties, and exploitable bug windows.

Less obvious but equally dangerous are systemic and compounding effects. When you integrate models into pipelines—auto-generated docs, CI helpers, or ticket triage—hallucinations can pollute downstream data that future models learn from, creating a feedback loop of amplified falsehoods. We’ve seen scenarios where a code assistant writes a unit test that asserts a fabricated behavior; continuous integration marks that test green, and the false assumption becomes part of the codebase’s perceived truth. Over time, these silent failures erode trust in automation: engineers stop relying on model suggestions, increasing cognitive load and negating the productivity gains you hoped to achieve.

Regulatory, auditability, and liability risks change how you architect systems. Models are typically poorly calibrated and provide no provenance unless you build it in; that complicates post-hoc investigations and compliance reporting. If a model-driven decision affects a customer—loan denial, medical triage, or a safety alert—auditors will ask for evidence: what sources were used, what confidence thresholds applied, and who approved the output? Without retrieval-augmented generation and strict provenance logging, you lack defensible records. That gap increases legal exposure and forces conservative product decisions that reduce value to end users.

Operational costs add up quickly in headcount and incident response. Time spent debugging hallucination-origin incidents—tracing back to prompts, retriever misses, or decoding settings—diverts engineers from feature work and raises MTTR (mean time to repair). To reduce that cost curve, prioritize deterministic pipelines for critical paths: lock down model versions, enforce RAG with vetted corpora, validate outputs against canonical services, and apply model calibration or uncertainty estimates so the system can fall back to human review when confidence is low. These patterns convert amorphous risk into measurable controls.

Practically, you should treat hallucination mitigation as part of your SRE and security playbooks. Instrument model outputs with provenance metadata, add lightweight unit and integration tests that assert factual constraints, and run synthetic adversarial prompts in staging to detect brittle behavior before deployment. For external-facing automation, require explicit human-in-the-loop gates for decisions with regulatory or safety impact. Investing in retriever quality, canonical data sources, and calibration reduces false positives and makes incidents actionable rather than mysterious.

Taking this view forward, we shift from accepting hallucination as an inevitable quirk to treating it as an engineering fault mode we can measure and control. Next we’ll map those controls—retrieval design, grounding strategies, calibration techniques, and monitoring—to concrete implementation patterns you can apply in production to lower both frequency and impact.

Detecting and measuring hallucinations

Building on this foundation, the first practical step is instrumenting reliable detection and measurement so you know when hallucination is happening and how badly it matters. Hallucination and LLM hallucination should be treated as measurable fault modes: ask yourself both “how often does the model invent facts?” and “what is the impact when it does?” This section focuses on detection techniques you can automate, measurement metrics you can track, and testing patterns that surface both frequency and severity of hallucination in real workflows. How do you separate harmless creative text from a dangerous factual error in production?

Detecting incorrect outputs starts with layered checks that catch different failure modes. At the surface level, compare generated claims against retriever-backed documents or canonical APIs and flag mismatches; at the deeper level, run consistency and entailment checks across model responses and prior context. Use a lightweight verifier model (an NLI-style entailment classifier) to ask whether a generated statement is supported by one or more evidence passages, and combine that with simple heuristics like missing citations, improbable dates, or non-existent entity lookups. For intrinsic hallucinations—contradictions inside an answer—implement internal consistency tests: regenerate answers under different random seeds and check for stable assertions.

Measurement requires both frequency metrics and impact-weighted scores so you can prioritize fixes. Track a primary hallucination rate (fraction of checked outputs judged false or unsupported) and complement it with a Severity-Weighted Hallucination score that multiplies each incident by a domain-specific impact weight (for example, 10 for medical dosage errors, 1 for stylistic fabrications). Measure calibration with expected calibration error (ECE) over model confidence or verifier scores, and monitor precision/recall of the verifier against a gold set of labelled failures. Finally, capture mean time to detection (MTTD) in your pipeline and the false positive rate of your detectors so you understand operational cost vs. coverage.

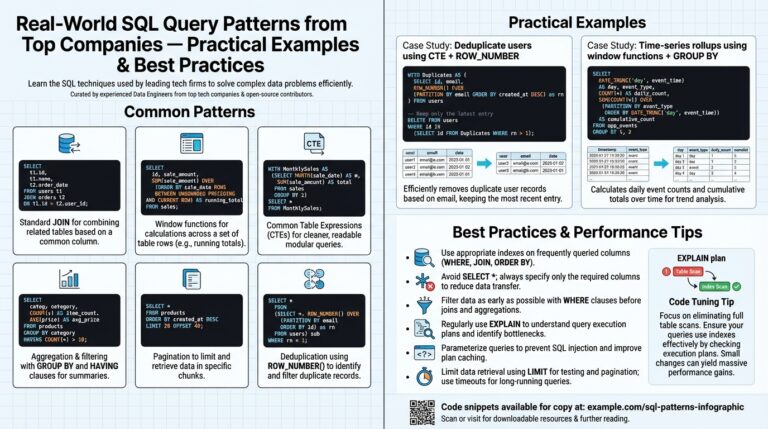

Operationalize detection with concrete tests you can run in CI and staging. Write unit tests that assert facts produced by the model against authoritative services: for a code assistant, validate all returned function signatures against the real package registry or run static type checking on generated code; for a knowledge assistant, require that each factual claim includes a source and that the source text contains the claim. Example assertion patterns look like assert evidence_contains(generated_claim, retrieved_docs) or assert type_check(generated_code) == OK. Add adversarial test cases and red-team prompts that reproduce common hallucination triggers (e.g., long-tail APIs, speculative “what-if” scenarios).

Make detection continuous with telemetry and sampling strategies that prioritize high-risk paths. Log provenance metadata (retriever scores, evidence doc IDs, verifier probabilities) for every response and surface daily cohorts of low-confidence or unsupported outputs to a human review queue. Use stratified sampling in production: sample more heavily from outputs that touch regulated domains or have low retriever overlap, and create dashboards that show hallucination rate by endpoint, model version, prompt template, and user intent. Establish alert thresholds tied to your Severity-Weighted Hallucination score so you can auto-gate rollouts or trigger human-in-loop review when the metric crosses a risk budget.

Finally, treat measurement as a feedback loop: use labeled incidents to improve retrievers, calibrate model confidences, and expand your golden dataset for continuous evaluation. Run A/B experiments to quantify how architectural changes—lower temperature, stronger retrieval, verifier ensembles—reduce your measured hallucination rate and ECE. By instrumenting detection, defining impact-aware measurement, and baking automated tests into pipelines, we convert hallucination from a mysterious failure into an engineering metric we can lower and monitor. In the next section we’ll map these controls to concrete anchoring strategies—retrieval design, grounding, and calibration patterns—that reduce both frequency and operational impact.

Grounding with retrieval (RAG)

Building on this foundation, one of the most effective levers we have for reducing fluent falsehoods is conditioning generation on retrieved, verifiable documents—what practitioners call retrieval-augmented generation. How do you design a retrieval-backed pipeline that meaningfully reduces hallucination while remaining performant? The short answer is: treat retrieval as the system of record for facts, and design generation to cite and reason over that evidence rather than inventing unsupported details.

Start by separating concerns: a retriever locates candidate passages, a ranker filters and scores them, the generator composes the answer conditioned on those passages, and a verifier checks support and consistency. This decomposition gives you clear engineering touchpoints for improving accuracy: enhance the retriever to increase recall, tighten the ranker to improve precision, constrain the generator with evidence snippets, and run an entailment or NLI-style verifier over the final claim set. By instrumenting each stage you convert a monolithic hallucination problem into measurable failure modes: missed documents, poor ranking, unsupported generation, and verification failures.

In practice, retriever choice matters. Sparse methods (BM25) are reliable for exact-match queries and structured corpora, while dense vector retrieval with embeddings excels at semantic matches and paraphrased content; use both when your corpus mixes formal docs and conversational text. Chunking strategy is equally important: chunk too coarsely and you dilute signal; chunk too finely and you lose context that supports a claim. We typically index canonical sources (API docs, spec PDFs, product manuals) with metadata that includes source ID, timestamp, and section anchors so you can trace why a passage was returned and enforce provenance policies during generation.

A concrete developer-tool example makes trade-offs obvious. Suppose your assistant is asked to return a function signature for a public SDK. First, retrieve matching sections from the official package docs and the package registry. Then constrain the generator with an explicit prompt fragment like: “Given these evidence passages, return the exact function signature and the doc ID that contains it.” Finally, run a quick verification step: check the generated signature against the registry API or run static type checking. If the verifier fails, escalate to a human or return a conservative, provenance-backed response instead of fabricating an answer.

Provenance is non-negotiable in production: store document IDs, retrieval scores, and passage offsets with every response so auditors and downstream systems can reconstruct the chain of evidence. Evidence scoring should be normalized across retriever and ranker outputs so the generator can weight passages (for example, prefer a high-score official spec over a low-score community forum). We recommend exposing a confidence band derived from combined retriever and verifier scores and using thresholding to gate automatic actions; for high-risk operations, require manual approval when confidence is below your safety budget.

Verification amplifies the benefits of retrieval but introduces new engineering choices. Lightweight entailment models can quickly flag unsupported claims, while more expensive verification (querying authoritative APIs, running unit tests, or executing type checks) provides stronger guarantees. Tune these checks to your latency and cost constraints: run cheap verifiers inline and schedule expensive validators asynchronously with clear user-facing messaging. When the verifier and generator disagree, fall back to the evidence passages verbatim and surface the conflict for human review rather than attempting risky reconciliation.

Be explicit about failure modes and measurement. Common problems include retriever recall gaps, stale corpora, and generator overfitting to weak evidence (producing hallucinated bridges between unrelated passages). Track a hallucination rate that compares generated claims to retrieved support, and use a Severity-Weighted Hallucination metric for prioritization in regulated flows. Continuous evaluation—A/B testing retrieval models, refreshing indexes, and expanding your golden dataset with labeled verifier failures—turns retrieval-augmented systems from brittle prototypes into auditable services.

Taking retrieval seriously means treating it as the primary anchoring mechanism for factual claims, not an optional enhancement. When you design pipelines with robust retrieval, traceable provenance, and layered verification, you reduce the frequency and impact of hallucinations and create a clear escalation path for residual uncertainty. In the next section we’ll build on these controls with calibration and monitoring patterns that close the loop between model behavior and operational risk.

Prompting and decoding strategies

Building on this foundation, the place where you gain the most control over hallucination is in how you prompt the model and how you decode its outputs. Prompting strategies and decoding strategies are complementary levers: prompts shape the model’s prior over plausible continuations, while decoding settings determine how aggressively the model explores that prior. If you treat the model as a powerful but fallible pattern generator, you design prompts and sampling to constrain conjecture, force provenance, and expose uncertainty rather than amplify confident-sounding fabrications.

Start each interaction with a precise contract. State role, scope, output format, and a strict refusal policy up front: we ask the model to produce one JSON object with keys “answer”, “sources” and “confidence”, and to respond with “I don’t know” when evidence is missing. Few-shot examples are powerful here—show exact, small examples that demonstrate the desired format and the correct handling of unknowns. When you need step-by-step reasoning, choose whether to enable chain-of-thought: it improves traceability for audits but can increase intrinsic hallucinations if unchecked, so include a verifier step when you allow reasoning traces. These prompting strategies reduce ambiguous interpretation and make downstream checks deterministic.

Constrain generation with templates and explicit tokens so you avoid open-ended free text when facts matter. Ask the model to always cite a document ID and an excerpt for every factual claim, and include a structured delimiter (for example, triple dashes) to separate the evidentiary block from commentary. For API or code outputs, require exact signatures and add a “validation” field that enumerates which external check passed—typecheck, registry-lookup, or unit-test. When you design prompts this way, you shift the system from inventing details toward surfacing verifiable artifacts that your pipeline can programmatically validate.

On the decoding side, tune sampling to match risk. Lower temperature (0–0.4) and tighter nucleus sampling (top-p 0.7–0.95) reduce creative departures from the most likely completion, while beam search or deterministic decoding yield repeatability that simplifies verification. However, deterministic outputs can still be confidently wrong if the model’s learned prior is incorrect, so treat low-entropy decoding as risk reduction rather than an accuracy guarantee. Contrastive decoding or logit bias can actively demote hallucinated tokens—ban known bad tokens (for example, fabricated citation formats) and boost tokens that map to canonical identifiers. These decoding strategies let you trade creativity for factual stability in a controlled way.

When one run isn’t enough, use ensemble and reranking techniques to detect and suppress hallucination. Sample multiple outputs with different seeds or temperatures and run a lightweight entailment verifier or a domain-specific validator (static type checker, registry API, or database query) to rank candidates. Apply self-consistency voting for reasoning tasks—collect many chain-of-thought outputs and select the majority-supported conclusion—or use a verifier model that checks each claim against retrieved evidence. For code and API answers, run the generated signature against the real package registry or compile the snippet; only promote the response if the external check passes. These patterns convert uncertain generations into artifacts you can automatically accept, reject, or route to human review.

Operationalize the combo of prompting strategies and decoding strategies with measurable gates. Log retriever overlap, verifier scores, and decoder entropy with every response so you can set thresholded policies: auto-accept high-confidence, well-supported answers; require human approval for medium-confidence outputs; and refuse or return a conservative response when checks fail. Run A/B tests that vary prompt templates and sampling configs while tracking the Severity-Weighted Hallucination metric you already instrumented. Taking this concept further, tie these gates into your monitoring and calibration pipeline so you continuously close the loop between model behavior, detection telemetry, and the verification patterns we cover next.